This server integrates OpenAI SDK-compatible chat completion APIs into Model Context Protocol (MCP) clients like Claude Desktop and LibreChat. With it, you can:

Connect to multiple AI providers simultaneously (OpenAI, Perplexity, Groq, xAI, PyroPrompts)

Relay questions to configured providers using the `chat` tool

Customize configurations via environment variables (API keys, models, base URLs)

Enable cross-platform compatibility (macOS and Windows)

Debug communications with tools like MCP Inspector

Scale your setup with multiple providers using different configurations

OpenAI: sends chat messages to OpenAI's API and returns responses from models like gpt-4o

Perplexity: sends chat messages to Perplexity's API and returns responses from models like llama-3.1-sonar-small-128k-online

Click on "Install Server".

Wait a few minutes for the server to deploy. Once ready, it will show a "Started" state.

In the chat, type @ followed by the MCP server name and your instructions, e.g., "@any-chat-completions-mcp explain quantum computing in simple terms"

That's it! The server will respond to your query, and you can continue using it as needed.


any-chat-completions-mcp MCP Server

Integrate Claude with Any OpenAI SDK Compatible Chat Completion API - OpenAI, Perplexity, Groq, xAI, PyroPrompts and more.

This implements a Model Context Protocol (MCP) server. Learn more: https://modelcontextprotocol.io

This is a TypeScript-based MCP server that integrates with any OpenAI SDK-compatible Chat Completions API.

It has one tool, `chat`, which relays a question to a configured AI chat provider.
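The relay comes down to a single call to the provider's Chat Completions endpoint. The TypeScript below is a sketch and not this server's source. It only illustrates the request shape under stated assumptions. The environment variable names AI_CHAT_NAME, AI_CHAT_KEY, AI_CHAT_MODEL and AI_CHAT_BASE_URL are assumptions, and so are the helper names loadConfig, buildChatRequest and relayChat. The request itself follows the OpenAI Chat Completions API that compatible providers mirror: a POST to {base URL}/chat/completions with a bearer token, a model and a messages array.

```typescript
// Hypothetical sketch, not this server's source. The AI_CHAT_* variable names are assumptions.
interface ChatProviderConfig {
  name: string; // display name, e.g. "OpenAI"
  apiKey: string;
  model: string; // e.g. "gpt-4o"
  baseURL: string; // e.g. "https://api.openai.com/v1"
}

interface ChatRequestInit {
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

// Minimal fetch-like signature so the relay can be exercised without network access.
type FetchLike = (
  url: string,
  init: ChatRequestInit,
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

// Read provider settings from an environment map, failing fast on missing or empty values.
function loadConfig(env: Record<string, string | undefined>): ChatProviderConfig {
  const read = (key: string): string => {
    const value = env[key];
    if (!value) throw new Error(`Missing required environment variable ${key}`);
    return value;
  };
  return {
    name: read("AI_CHAT_NAME"),
    apiKey: read("AI_CHAT_KEY"),
    model: read("AI_CHAT_MODEL"),
    baseURL: read("AI_CHAT_BASE_URL"),
  };
}

// Build the request an OpenAI-compatible Chat Completions endpoint expects.
function buildChatRequest(config: ChatProviderConfig, question: string): { url: string; init: ChatRequestInit } {
  const base = config.baseURL.replace(/\/+$/, ""); // tolerate trailing slashes
  return {
    url: `${base}/chat/completions`,
    init: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        Authorization: `Bearer ${config.apiKey}`,
      },
      body: JSON.stringify({
        model: config.model,
        messages: [{ role: "user", content: question }],
      }),
    },
  };
}

// Send one question and return the text of the first choice.
async function relayChat(config: ChatProviderConfig, question: string, fetchFn: FetchLike): Promise<string> {
  const { url, init } = buildChatRequest(config, question);
  const response = await fetchFn(url, init);
  if (!response.ok) throw new Error(`${config.name} returned HTTP ${response.status}`);
  const data = (await response.json()) as { choices: { message: { content: string } }[] };
  return data.choices[0].message.content;
}
```

The sketch injects fetchFn so it can be tested offline. On Node 18 or later, the built-in fetch can be passed in directly.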

Development

Install dependencies:

Build the server:

For development with auto-rebuild:


Installation

To add OpenAI to Claude Desktop, add the server config:

On macOS: ~/Library/Application Support/Claude/claude_desktop_config.json

On Windows: %APPDATA%/Claude/claude_desktop_config.json
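If you script your setup, the two documented locations can be resolved programmatically. Below is a minimal sketch that assumes Node.js with @types/node. claudeDesktopConfigPath is a hypothetical helper. It throws on every other platform, because only the macOS and Windows paths are documented here.

```typescript
import * as os from "os";
import * as path from "path";

// Hypothetical helper: resolve claude_desktop_config.json for the documented platforms.
function claudeDesktopConfigPath(
  platform: string = process.platform,
  env: Record<string, string | undefined> = process.env,
  home: string = os.homedir(),
): string {
  if (platform === "darwin") {
    // macOS: ~/Library/Application Support/Claude/claude_desktop_config.json
    return path.posix.join(home, "Library", "Application Support", "Claude", "claude_desktop_config.json");
  }
  if (platform === "win32") {
    // Windows: %APPDATA%/Claude/claude_desktop_config.json
    const appData = env.APPDATA;
    if (!appData) throw new Error("APPDATA is not set");
    return path.win32.join(appData, "Claude", "claude_desktop_config.json");
  }
  throw new Error(`No documented Claude Desktop config location for platform "${platform}"`);
}

console.log(claudeDesktopConfigPath());
```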

You can use it via npx in your Claude Desktop configuration like this:

Or, if you clone the repo, you can build it and point your Claude Desktop configuration at the local build like this:
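Both variants register an entry under mcpServers and differ only in how the server process is launched. The sketch below builds either kind of entry and prints the resulting config as JSON. Several names in it are assumptions: the package name @pyroprompts/any-chat-completions-mcp, the placeholder build path and the AI_CHAT_* variable names. Check them against the project's own example before use.

```typescript
// Hypothetical sketch: one Claude Desktop "mcpServers" entry, launched via npx or from a local clone.
// Package name, build path and env variable names are assumptions.
interface McpServerEntry {
  command: string;
  args: string[];
  env: Record<string, string>;
}

type Launch =
  | { kind: "npx"; packageName: string }
  | { kind: "local"; entryFile: string }; // e.g. "/path/to/any-chat-completions-mcp/build/index.js" (placeholder)

function serverEntry(launch: Launch, env: Record<string, string>): McpServerEntry {
  return launch.kind === "npx"
    ? { command: "npx", args: [launch.packageName], env }
    : { command: "node", args: [launch.entryFile], env };
}

const env = {
  AI_CHAT_KEY: "OPENAI_KEY", // placeholder, not a real key
  AI_CHAT_NAME: "OpenAI",
  AI_CHAT_MODEL: "gpt-4o",
  AI_CHAT_BASE_URL: "https://api.openai.com/v1",
};

const config = {
  mcpServers: {
    "chat-openai": serverEntry({ kind: "npx", packageName: "@pyroprompts/any-chat-completions-mcp" }, env),
  },
};

console.log(JSON.stringify(config, null, 2));
```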

You can add multiple providers by referencing the same MCP server multiple times, but with different env arguments:
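Each provider is just another entry pointing at the same server, so a multi-provider config can be generated from a list. This is a hypothetical sketch. The entry ids, the AI_CHAT_* variable names and the package name are assumptions, and the API keys are placeholders. The models are the ones named earlier in this README. The base URLs are the providers' public OpenAI-compatible endpoints.

```typescript
// Hypothetical sketch: one "mcpServers" entry per provider, all launching the same server.
interface Provider {
  id: string; // key under "mcpServers"; must be unique
  name: string; // display name
  model: string;
  baseURL: string;
  apiKey: string;
}

interface ServerEntry {
  command: string;
  args: string[];
  env: Record<string, string>;
}

function buildMultiProviderConfig(providers: Provider[], command: string, args: string[]) {
  const mcpServers: Record<string, ServerEntry> = {};
  for (const p of providers) {
    // A repeated key would silently overwrite the earlier entry, so reject it.
    if (p.id in mcpServers) throw new Error(`Duplicate server id "${p.id}"`);
    mcpServers[p.id] = {
      command,
      args: [...args], // copy so entries do not share one array
      env: {
        AI_CHAT_KEY: p.apiKey,
        AI_CHAT_NAME: p.name,
        AI_CHAT_MODEL: p.model,
        AI_CHAT_BASE_URL: p.baseURL,
      },
    };
  }
  return { mcpServers };
}

const providers: Provider[] = [
  { id: "chat-openai", name: "OpenAI", model: "gpt-4o", baseURL: "https://api.openai.com/v1", apiKey: "YOUR_OPENAI_KEY" },
  { id: "chat-perplexity", name: "Perplexity", model: "llama-3.1-sonar-small-128k-online", baseURL: "https://api.perplexity.ai", apiKey: "YOUR_PERPLEXITY_KEY" },
];

console.log(JSON.stringify(buildMultiProviderConfig(providers, "npx", ["@pyroprompts/any-chat-completions-mcp"]), null, 2));
```

Adding a third provider, such as Groq or xAI, is one more element in the list. Its model id and base URL come from that provider's documentation.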

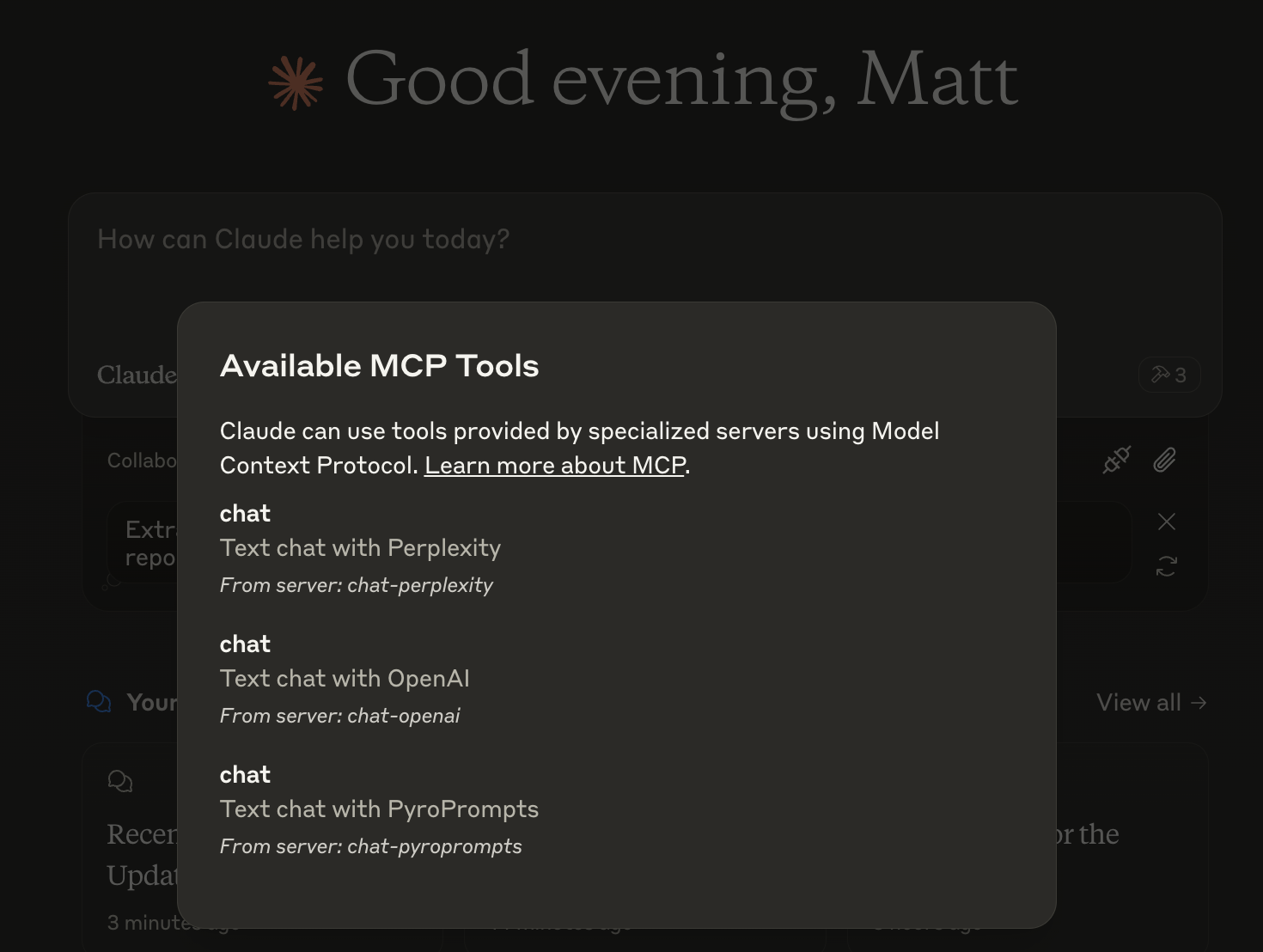

With three providers configured this way, you'll see a tool for each on the Claude Desktop home screen:

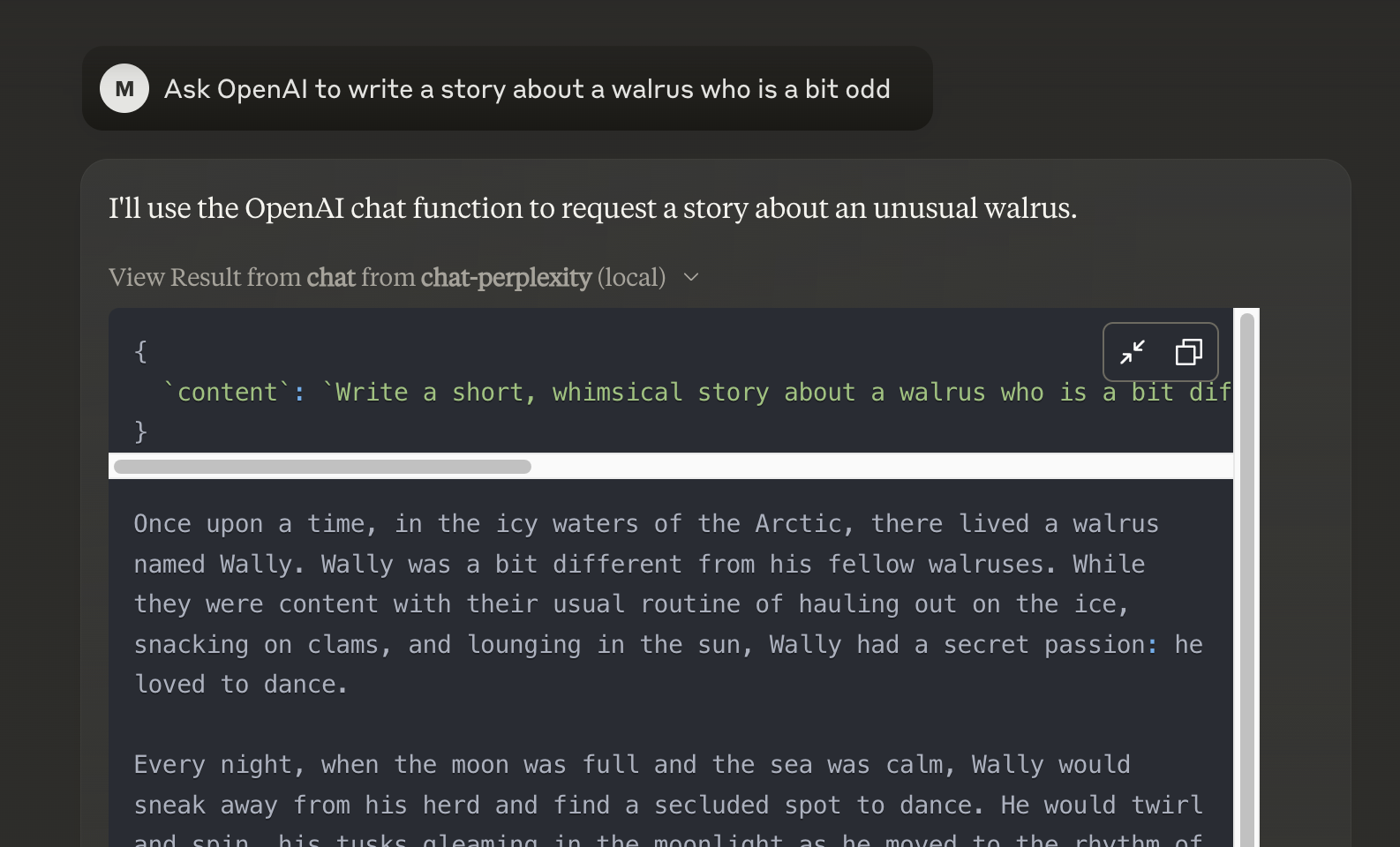

You can then chat with other LLMs, and the conversation appears in the chat like this:

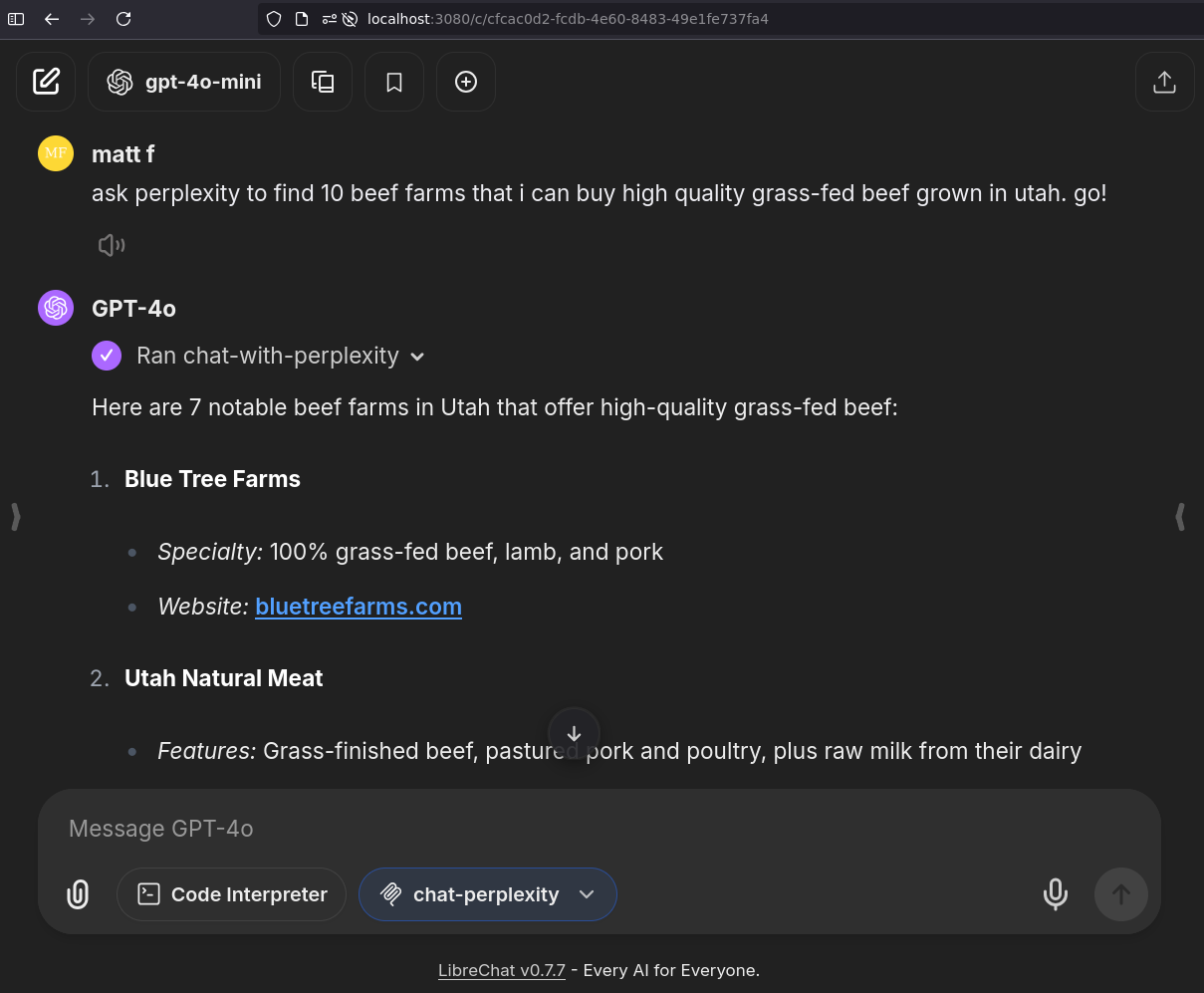

Alternatively, configure it in LibreChat like this:

And it shows in LibreChat:

Installing via Smithery

To install Any OpenAI Compatible API Integrations for Claude Desktop automatically via Smithery:

Debugging

Since MCP servers communicate over stdio, debugging can be challenging. We recommend using the MCP Inspector, which is available as a package script:

The Inspector will provide a URL to access debugging tools in your browser.
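Here is why stdio makes debugging awkward. Under the MCP stdio transport, each JSON-RPC message is written as a single newline-delimited line. The server must not write anything to stdout that is not a valid MCP message, so a stray log line corrupts the stream. The sketch below frames a tools/call request for the `chat` tool and splits a stdout chunk back into messages and non-JSON "junk". The argument name content is an assumption, and you can check the tool's real input schema in the Inspector. The helpers frame and parseFrames are hypothetical.

```typescript
// Hypothetical sketch of the newline-delimited JSON-RPC framing used over stdio.
interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: Record<string, unknown>;
}

// Serialize one message as a single line. JSON.stringify without indentation
// escapes newlines inside strings, so the output contains no raw newline.
function frame(message: JsonRpcRequest): string {
  return JSON.stringify(message) + "\n";
}

// Split a stdout chunk into parsed messages plus lines that are not valid JSON,
// such as log text a server accidentally printed to stdout.
function parseFrames(chunk: string): { messages: unknown[]; junk: string[] } {
  const messages: unknown[] = [];
  const junk: string[] = [];
  for (const line of chunk.split("\n")) {
    if (line.trim() === "") continue;
    try {
      messages.push(JSON.parse(line));
    } catch {
      junk.push(line);
    }
  }
  return { messages, junk };
}

// A client calling the chat tool; "content" is an assumed argument name.
const call: JsonRpcRequest = {
  jsonrpc: "2.0",
  id: 1,
  method: "tools/call",
  params: { name: "chat", arguments: { content: "Explain quantum computing\nin simple terms" } },
};

process.stdout.write(frame(call));
```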

Acknowledgements

Obviously, the modelcontextprotocol and Anthropic teams for the MCP specification and its integration into Claude Desktop: https://modelcontextprotocol.io/introduction

PyroPrompts for sponsoring this project. Use code CLAUDEANYCHAT for 20 free automation credits on PyroPrompts.