Hosts tutorial content for txtai with guides on implementation and usage

Built with FastAPI to provide web API endpoints for all txtai functionality

Enables workflows to analyze GitHub data, demonstrated in examples for exploring and analyzing repository content

Mentioned as a platform where txtai tutorial series is available

Integrates with Hugging Face's Transformers and Hub for accessing ML models, enabling semantic search, embedding generation, and language model operations

Provides tutorials and documentation articles that are hosted on Medium

Supports integration with OpenAI models via LiteLLM for large language model operations and generation tasks

Offers Slack integration with a community workspace for collaboration and support

Supports processing YouTube content with capabilities for transcription and analysis

Click on "Install Server".

Wait a few minutes for the server to deploy. Once ready, it will show a "Started" state.

In the chat, type `@` followed by the MCP server name and your instructions, e.g., "@TxtAI MCP Server search for documents about machine learning workflows".

That's it! The server will respond to your query, and you can continue using it as needed.

Here is a step-by-step guide with screenshots.

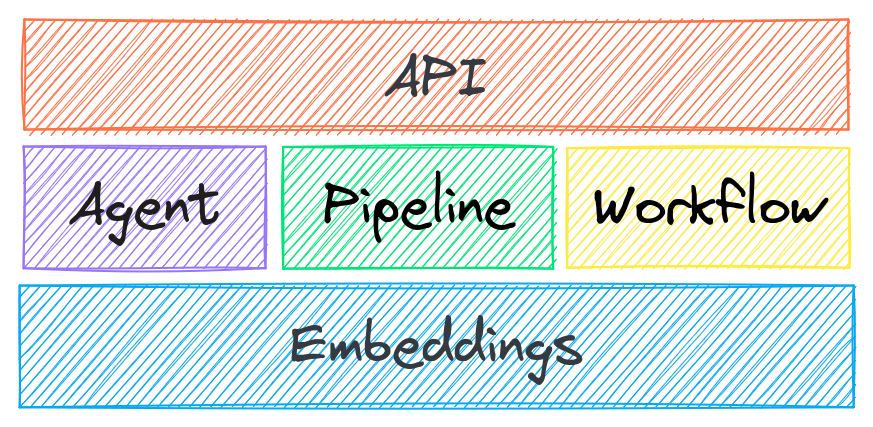

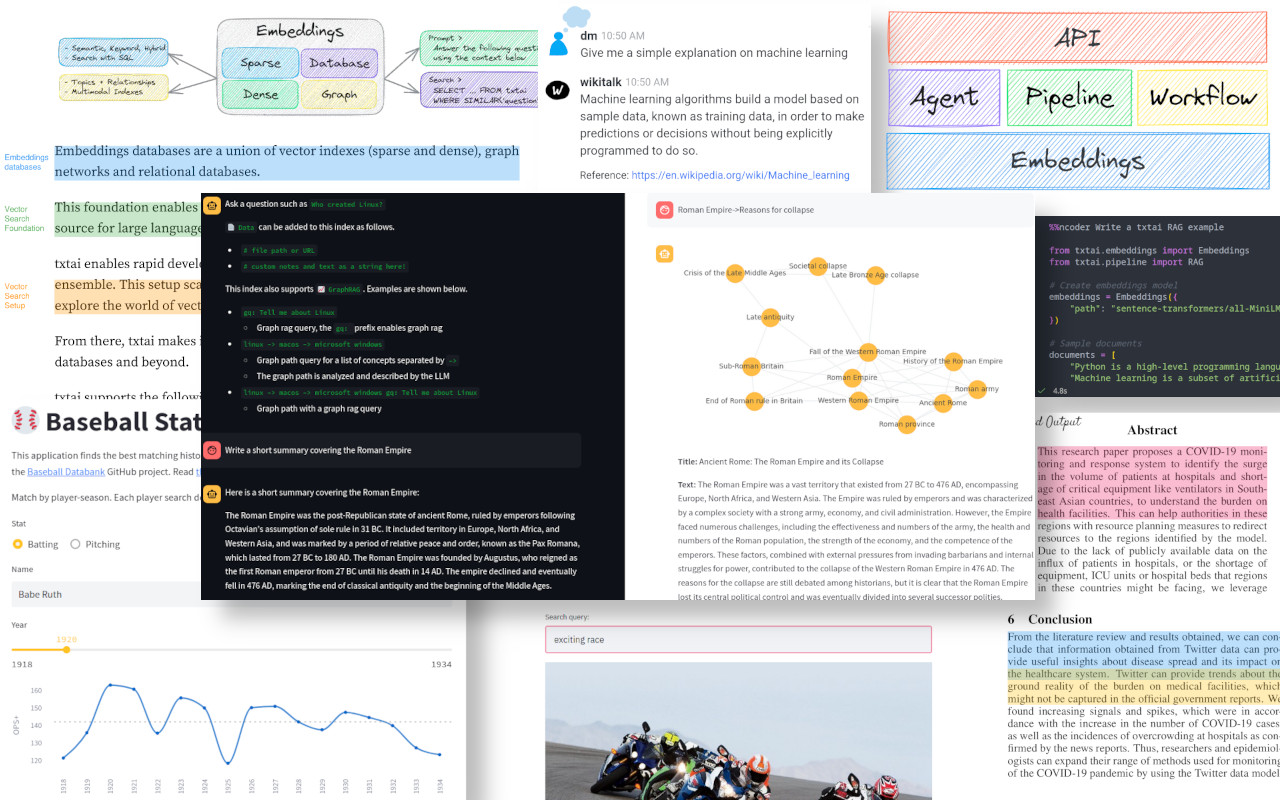

txtai is an all-in-one AI framework for semantic search, LLM orchestration and language model workflows.

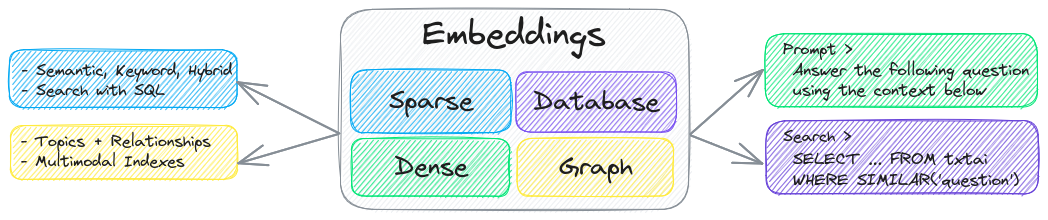

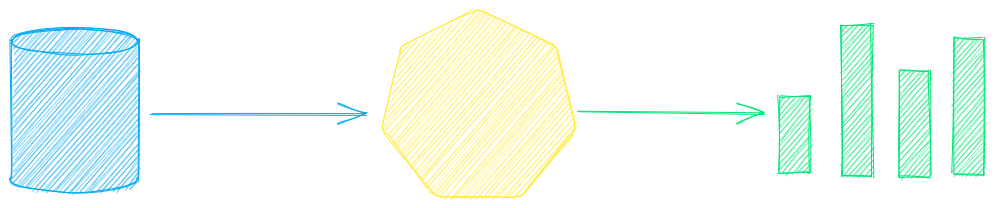

The key component of txtai is an embeddings database, which is a union of vector indexes (sparse and dense), graph networks and relational databases.

This foundation enables vector search and/or serves as a powerful knowledge source for large language model (LLM) applications.

Build autonomous agents, retrieval augmented generation (RAG) processes, multi-model workflows and more.

Summary of txtai features:

🔎 Vector search with SQL, object storage, topic modeling, graph analysis and multimodal indexing

📄 Create embeddings for text, documents, audio, images and video

💡 Pipelines powered by language models that run LLM prompts, question-answering, labeling, transcription, translation, summarization and more

↪️️ Workflows to join pipelines together and aggregate business logic. txtai processes can be simple microservices or multi-model workflows.

🤖 Agents that intelligently connect embeddings, pipelines, workflows and other agents together to autonomously solve complex problems

⚙️ Web and Model Context Protocol (MCP) APIs. Bindings available for JavaScript, Java, Rust and Go.

🔋 Batteries included with defaults to get up and running fast

☁️ Run local or scale out with container orchestration

txtai is built with Python 3.10+, Hugging Face Transformers, Sentence Transformers and FastAPI. txtai is open-source under an Apache 2.0 license.

NeuML is the company behind txtai and we provide AI consulting services around our stack. Schedule a meeting or send a message to learn more.

We're also building an easy and secure way to run hosted txtai applications with txtai.cloud.

Why txtai?

New vector databases, LLM frameworks and everything in between are sprouting up daily. Why build with txtai?

Built-in API makes it easy to develop applications using your programming language of choice

Run local - no need to ship data off to disparate remote services

Work with micromodels all the way up to large language models (LLMs)

Low footprint - install additional dependencies and scale up when needed

Learn by example - notebooks cover all available functionality


Use Cases

The following sections introduce common txtai use cases. A comprehensive set of over 70 example notebooks and applications is also available.

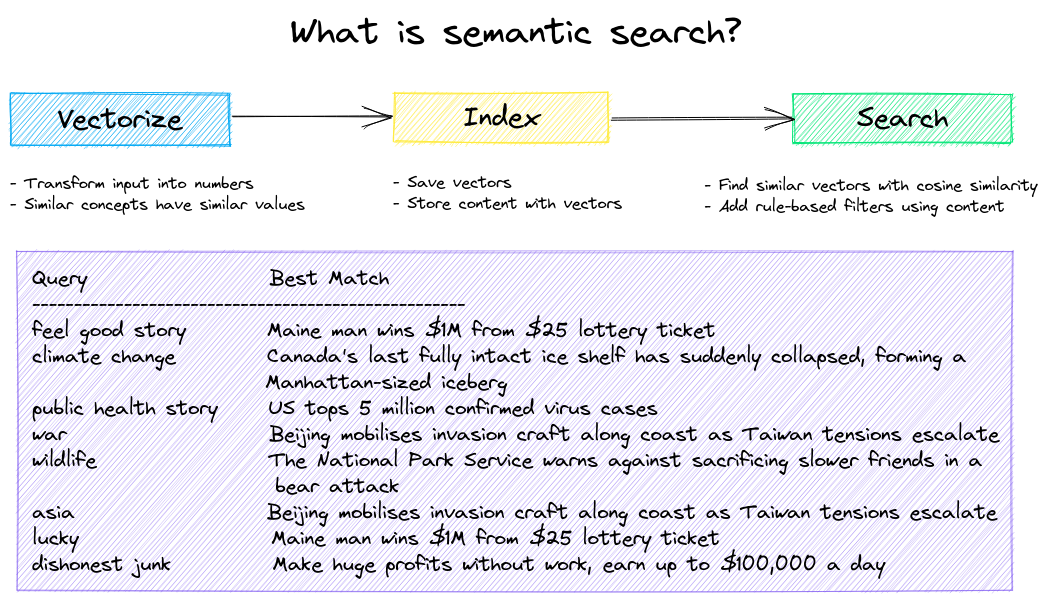

Semantic Search

Build semantic/similarity/vector/neural search applications.

Traditional search systems use keywords to find data. Semantic search has an understanding of natural language and identifies results that have the same meaning, not necessarily the same keywords.
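The ranking step behind this kind of search can be sketched in a few lines. The example below is a minimal illustration using only the standard library, not the txtai API: the three-dimensional vectors are made-up stand-ins for what an embedding model would produce, and `cosine` and `search` are hypothetical helper names.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def search(query_vector, index, limit=1):
    """Return the top `limit` (text, score) pairs, highest similarity first."""
    scores = [(text, cosine(query_vector, vector)) for text, vector in index.items()]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)[:limit]

# Assumption: hand-written vectors; a real embedding model outputs
# hundreds of dimensions learned from data
index = {
    "Local bakery gives away free bread to neighbors": [0.9, 0.1, 0.0],
    "Storm closes coastal highway": [0.0, 0.2, 0.9],
    "Quarterly earnings report released": [0.1, 0.9, 0.1],
}

# Made-up vector standing in for the query "feel good story"
query = [0.8, 0.2, 0.1]
results = search(query, index, limit=1)
print(results[0][0])
```

The query shares no words with the top result; the match comes entirely from the vectors pointing in a similar direction, which is the property that separates semantic search from keyword search.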

Get started with the following examples.

| Description |
|:---|
| Overview of the functionality provided by txtai |
| Embed images and text into the same space for search |
| Question matching with semantic search |
| Explore topics, data connectivity and run network analysis |

LLM Orchestration

Autonomous agents, retrieval augmented generation (RAG), chat with your data, pipelines and workflows that interface with large language models (LLMs).

See below to learn more.

| Description |
|:---|
| Build model prompts and connect tasks together with workflows |
| Integrate llama.cpp, LiteLLM and custom generation frameworks |
| Build knowledge graphs with LLM-driven entity extraction |
| Explore an astronomical knowledge graph of known stars, planets, galaxies |

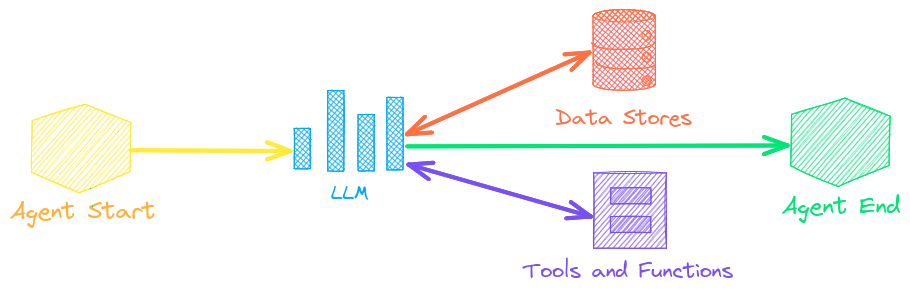

Agents

Agents connect embeddings, pipelines, workflows and other agents together to autonomously solve complex problems.

txtai agents are built on top of the smolagents framework. This supports all LLMs txtai supports (Hugging Face, llama.cpp, OpenAI / Claude / AWS Bedrock via LiteLLM).

Check out this Agent Quickstart Example. Additional examples are listed below.

| Description |
|:---|
| Explore a rich dataset with Graph Analysis and Agents |
| Agents that iteratively solve problems as they see fit |
| Exploring how to improve social media engagement with AI |

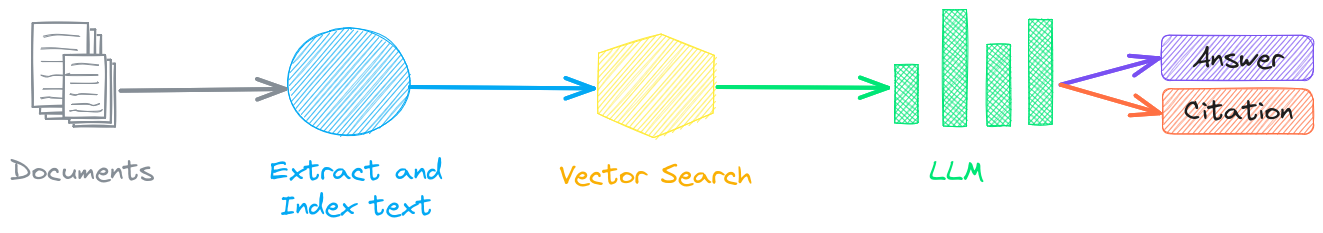

Retrieval augmented generation

Retrieval augmented generation (RAG) reduces the risk of LLM hallucinations by constraining the output with a knowledge base as context. RAG is commonly used to "chat with your data".
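As a rough sketch of how the context constrains the output, the example below retrieves the best-matching passage and places it in the prompt. It uses only the standard library and is not the txtai RAG pipeline: `retrieve` is a hypothetical word-overlap retriever standing in for a vector search, `build_prompt` is an assumed prompt template, and no LLM is actually called.

```python
import re

def tokens(text):
    """Lowercase word tokens with punctuation removed."""
    return set(re.findall(r"\w+", text.lower()))

def retrieve(question, documents, limit=1):
    """Toy retriever: rank documents by words shared with the question."""
    terms = tokens(question)
    ranked = sorted(documents, key=lambda doc: len(terms & tokens(doc)), reverse=True)
    return ranked[:limit]

def build_prompt(question, context):
    """Assumed template that tells the model to answer only from the context."""
    joined = "\n".join(f"- {passage}" for passage in context)
    return (
        "Answer the following question using only the context below. "
        "If the answer is not in the context, say so.\n\n"
        f"Question: {question}\n\nContext:\n{joined}"
    )

# Knowledge base built from statements in this README
documents = [
    "txtai is open-source under an Apache 2.0 license.",
    "txtai is built with Python 3.10+.",
    "NeuML is the company behind txtai.",
]

question = "Which license is txtai under?"
context = retrieve(question, documents, limit=1)
prompt = build_prompt(question, context)
print(prompt)
```

The resulting prompt would then be passed to an LLM. Because the instructions restrict the answer to the retrieved passages, the model has less room to invent facts.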

Check out this RAG Quickstart Example. Additional examples are listed below.

| Description |
|:---|
| Guide on retrieval augmented generation including how to create citations |
| Context retrieval via Web, SQL and other sources |
| Deep graph search powered RAG |
| Full cycle speech to speech workflow with RAG |

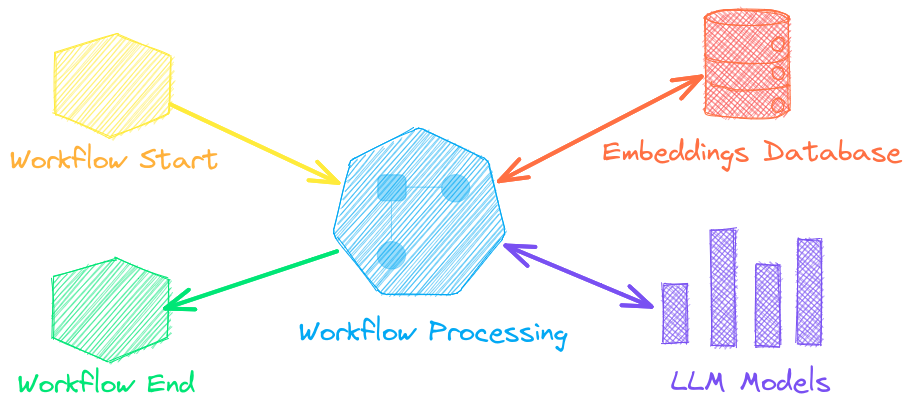

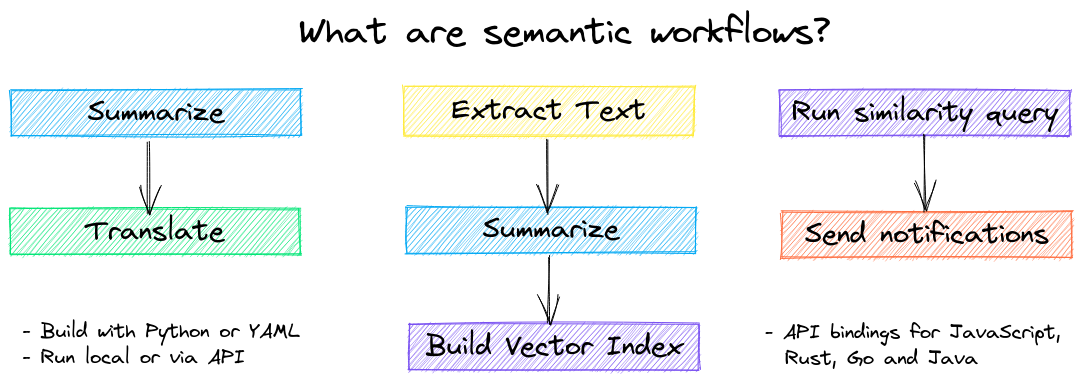

Language Model Workflows

Language model workflows, also known as semantic workflows, connect language models together to build intelligent applications.

While LLMs are powerful, there are plenty of smaller, more specialized models that work better and faster for specific tasks. This includes models for extractive question-answering, automatic summarization, text-to-speech, transcription and translation.
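The idea of connecting specialized steps can be sketched as a chain of callables, each taking a batch of elements and returning a batch. This is a conceptual illustration in plain Python rather than the txtai workflow API: `workflow`, `clean` and `first_sentence` are hypothetical names, and the tasks are simple string functions standing in for models such as a summarizer or translator.

```python
def workflow(tasks):
    """Compose tasks into one callable; each task maps a list to a list."""
    def run(elements):
        for task in tasks:
            elements = task(elements)
        return elements
    return run

def clean(batch):
    """Collapse repeated whitespace and trim each element."""
    return [" ".join(text.split()) for text in batch]

def first_sentence(batch):
    """Stand-in for a summarization model: keep only the first sentence."""
    return [text.split(".")[0] + "." for text in batch]

# Output of one task becomes the input of the next
process = workflow([clean, first_sentence])
output = process(["  Convert   audio files to text. Then translate it. "])
print(output)
```

Passing batches rather than single elements between tasks lets each step process many inputs at once, which matters when a step wraps a model.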

Check out this Workflow Quickstart Example. Additional examples are listed below.

| Description |
|:---|
| Simple yet powerful constructs to efficiently process data |
| Run abstractive text summarization |
| Convert audio files to text |
| Streamline machine translation and language detection |

Installation

The easiest way to install is via pip and PyPI: `pip install txtai`.

Python 3.10+ is supported. Using a Python virtual environment is recommended.

See the detailed install instructions for more information covering optional dependencies, environment specific prerequisites, installing from source, conda support and how to run with containers.

Model guide

See the table below for the current recommended models. These models all allow commercial use and offer a blend of speed and performance.

Component | Model(s) |

Fine-tune with training pipeline | |

Models can be loaded as either a path from the Hugging Face Hub or a local directory. Model paths are optional; defaults are loaded when not specified. For tasks with no recommended model, txtai uses the default models as shown in the Hugging Face Tasks guide.


Powered by txtai

The following applications are powered by txtai.

| Description |
|:---|
| Retrieval Augmented Generation (RAG) application |
| Build knowledge bases for RAG |
| AI for medical and scientific papers |
| Automatically annotate papers with LLMs |

In addition to this list, there are also many other open-source projects, published research and closed proprietary/commercial projects that have built on txtai in production.

Further Reading

Documentation

Full documentation on txtai including configuration settings for embeddings, pipelines, workflows, API and a FAQ with common questions/issues is available.

Contributing

For those who would like to contribute to txtai, please see this guide.