Runs an Express server to serve static frontend files and provide API endpoints for the UI, enabling task management and feature planning functionality.

Integrates with Git to analyze code changes using 'git diff HEAD', enabling code review functionality and automated task creation based on detected changes.

Uses WebSockets to handle real-time communication for the clarification workflow, allowing the UI to interact with the server when additional information is needed.

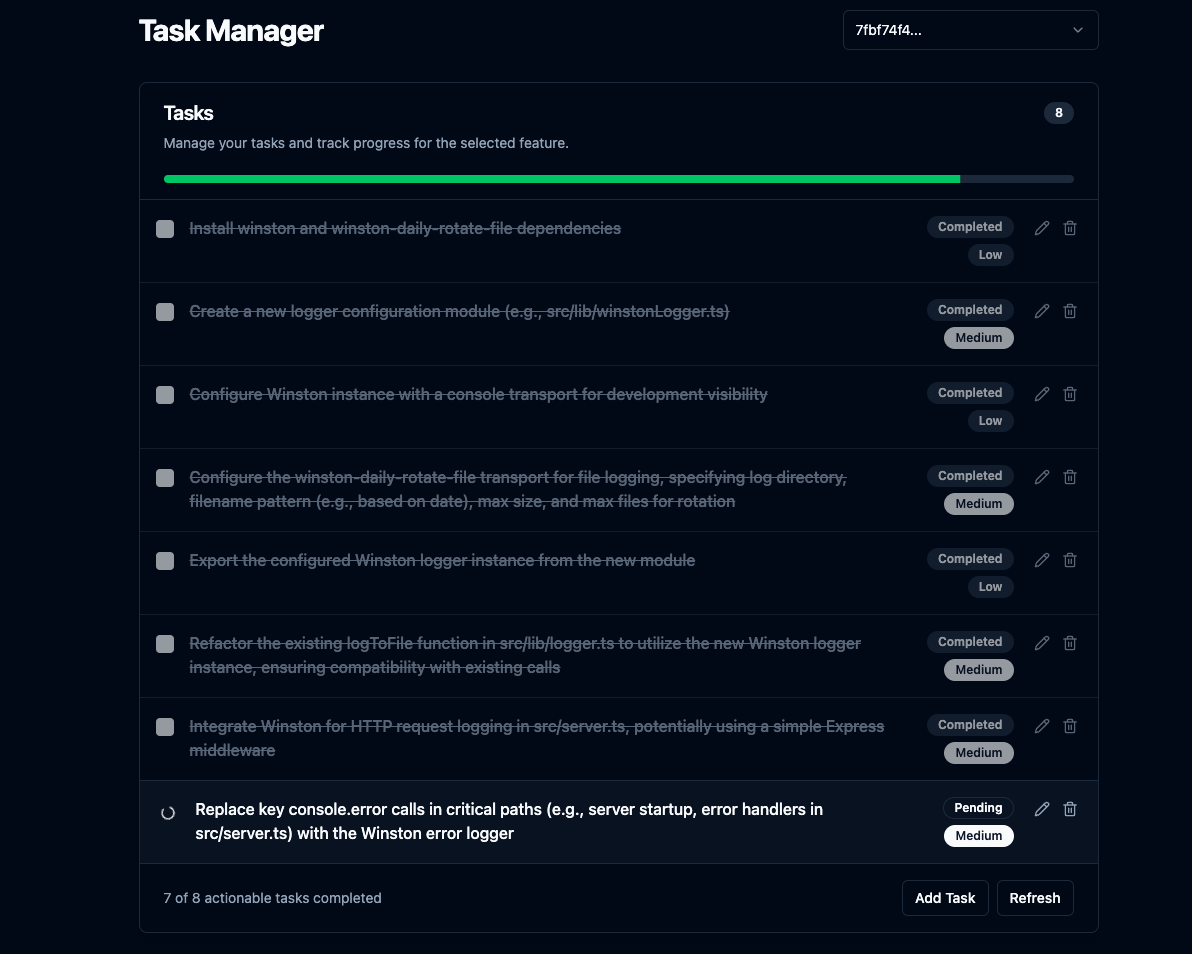

Provides a Svelte UI for viewing task lists, monitoring progress, manually adjusting plans, and reviewing changes made by the AI agent.


Task Manager MCP Server

This is an MCP server built to integrate with AI code editors like Cursor. The main goal is to make the most of Cursor's agentic capabilities and Gemini 2.5's excellent architecting capabilities while working around Cursor's extremely limited context window. It was inspired largely by Roo Code's Boomerang mode, but I found that mode extremely expensive, since the only model that works with its apply bot is Claude 3.7 Sonnet. With this server, you get the best of both worlds: an unlimited context window and unlimited usage for the price of Cursor's $20/month subscription.

In addition, it includes a Svelte UI that allows you to view the task list and progress, manually adjust the plan, and review the changes.

Svelte UI


Core Features

Complex Feature Planning: Give it a feature description, and it uses an LLM with project context via `repomix` to generate a step-by-step coding plan for the AI agent to follow, with recursive task breakdown for high-effort tasks.

Integrated UI Server: Runs an Express server to serve static frontend files and provides basic API endpoints for the UI. Opens the UI in the default browser after planning is complete or when clarification is needed, and displays the task list and progress.
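The recursive breakdown mentioned under Complex Feature Planning can be pictured with a minimal sketch. The `Effort` levels, the `PlanTask` shape, and the `split` callback (which in the real server would presumably be an LLM call) are hypothetical; the point is only that high-effort tasks get split again until nothing high-effort remains or a depth cap is reached.

```typescript
// Hypothetical effort levels and task shape; not the server's actual schema.
type Effort = "low" | "medium" | "high";

interface PlanTask {
  description: string;
  effort: Effort;
}

// Recursively splits high-effort tasks until every task is low or medium effort,
// or until maxDepth is reached (whatever is left at that depth is kept as-is).
function breakDown(
  task: PlanTask,
  split: (task: PlanTask) => PlanTask[], // stand-in for an LLM call
  depth = 0,
  maxDepth = 3,
): PlanTask[] {
  if (task.effort !== "high" || depth >= maxDepth) return [task];
  return split(task).flatMap((sub) => breakDown(sub, split, depth + 1, maxDepth));
}
```

The depth cap keeps a model that keeps answering "high" from recursing forever.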

Unlimited Context Window: Uses Gemini 2.5's 1 million token context window, with `repomix` truncation when needed.

Conversation History: Keeps track of the conversation history for each feature in a separate JSON file within `.mcp/features/`, giving Gemini 2.5 context when the user asks for adjustments to the plan.

Clarification Workflow: Handles cases where the LLM needs more info by pausing planning and interacting with a connected UI via WebSockets.
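A per-feature history file can be kept with a few `fs` calls. This sketch assumes a file named `<featureId>_history.json` under `.mcp/features/` and a simple `{ role, content, timestamp }` entry shape; both are illustrative, not the server's documented format.

```typescript
import * as fs from "node:fs";
import * as path from "node:path";

// Hypothetical shape of one history entry; the real server's schema may differ.
interface HistoryEntry {
  role: "user" | "model";
  content: string;
  timestamp: string;
}

// Assumed layout: one JSON file per feature under <projectRoot>/.mcp/features/.
function historyPath(projectRoot: string, featureId: string): string {
  return path.join(projectRoot, ".mcp", "features", `${featureId}_history.json`);
}

// Returns the stored history, or an empty array if the feature has none yet.
function loadHistory(projectRoot: string, featureId: string): HistoryEntry[] {
  const file = historyPath(projectRoot, featureId);
  if (!fs.existsSync(file)) return [];
  return JSON.parse(fs.readFileSync(file, "utf8")) as HistoryEntry[];
}

// Appends one entry and rewrites the whole file, creating directories as needed.
function appendHistory(projectRoot: string, featureId: string, entry: HistoryEntry): HistoryEntry[] {
  const history = loadHistory(projectRoot, featureId);
  history.push(entry);
  const file = historyPath(projectRoot, featureId);
  fs.mkdirSync(path.dirname(file), { recursive: true });
  fs.writeFileSync(file, JSON.stringify(history, null, 2));
  return history;
}
```

Rewriting the whole file keeps the sketch simple; a long-running feature with very large histories might call for an append-only format instead.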

Task CRUD: Allows for creating, reading, updating, and deleting tasks via the UI.

Code Review: Analyzes `git diff HEAD` output using an LLM and creates new tasks if needed.

Automatic Review (Optional): If configured (`AUTO_REVIEW_ON_COMPLETION=true`), automatically runs the code review process after the last original task for a feature is completed.

Plan Adjustment: Allows for adjusting the plan after it's created via the `adjust_plan` tool.
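The review step starts from plain `git diff HEAD` output. Here is a minimal sketch of collecting that diff and turning it into a review prompt; `buildReviewPrompt` and its wording are hypothetical, and sending the prompt to the LLM and creating tasks from the reply are left out.

```typescript
import { execFileSync } from "node:child_process";

// Runs `git diff HEAD` in the given repository and returns the raw diff text.
function getDiffAgainstHead(repoDir: string): string {
  return execFileSync("git", ["diff", "HEAD"], {
    cwd: repoDir,
    encoding: "utf8",
    maxBuffer: 50 * 1024 * 1024, // large diffs can exceed the default buffer
  });
}

// Extracts changed file paths from the `diff --git a/<path> b/<path>` headers.
function changedFiles(diff: string): string[] {
  const files: string[] = [];
  for (const line of diff.split("\n")) {
    const match = /^diff --git a\/(.+) b\/(.+)$/.exec(line);
    if (match) files.push(match[2]);
  }
  return files;
}

// Hypothetical prompt builder; the real server's review prompt is not documented here.
function buildReviewPrompt(diff: string): string {
  return [
    "Review the following changes and list any follow-up tasks as a JSON array.",
    `Changed files: ${changedFiles(diff).join(", ")}`,
    "",
    diff,
  ].join("\n");
}
```

Usage would be `buildReviewPrompt(getDiffAgainstHead(process.cwd()))`. Using `execFileSync` with an argument array avoids passing anything through a shell.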

Setup

Prerequisites:

Node.js

npm

Git

Installation & Build:

Clone:

```bash
git clone https://github.com/jhawkins11/task-manager-mcp.git
cd task-manager-mcp
```

Install Backend Deps:

```bash
npm install
```

Configure: You'll configure API keys later directly in Cursor's MCP settings (see Usage section), but you might still want a local `.env` file for manual testing (see Configuration section).

Build: This command builds the backend and frontend servers and copies the Svelte UI to the `dist/frontend-ui/` directory:

```bash
npm run build
```

Running the Server (Manually):

For local testing without Cursor, you can run the server using Node directly or the npm script. This method will use the .env file for configuration.

Using Node directly (use an absolute path): `node /full/path/to/your/task-manager-mcp/dist/server.js`

Using npm start: `npm start`

This starts the MCP server (stdio), WebSocket server, and the HTTP server for the UI. The UI should be accessible at http://localhost:<UI_PORT> (default 3000).

Configuration (.env file for Manual Running):

If running manually (not via Cursor), create a .env file in the project root for API keys and ports. Note: When running via Cursor, these should be set in Cursor's mcp.json configuration instead (see Usage section).
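Once the `.env` values are in `process.env`, a small typed loader keeps parsing in one place. `GEMINI_API_KEY`, `FALLBACK_OPENROUTER_MODEL`, `AUTO_REVIEW_ON_COMPLETION`, and `UI_PORT` are names used in this README; `OPENROUTER_API_KEY` is an assumed name for the OpenRouter key, and the `ServerConfig` shape is hypothetical.

```typescript
// Hypothetical typed view of the settings this README mentions.
interface ServerConfig {
  openRouterApiKey?: string; // assumed variable name: OPENROUTER_API_KEY
  geminiApiKey?: string;
  fallbackModel?: string;
  autoReviewOnCompletion: boolean;
  uiPort: number;
}

function loadConfig(env: Record<string, string | undefined>): ServerConfig {
  // Default to port 3000 when UI_PORT is unset; reject non-numeric or out-of-range values.
  const port = Number.parseInt(env.UI_PORT ?? "3000", 10);
  if (!Number.isInteger(port) || port <= 0 || port > 65535) {
    throw new Error(`Invalid UI_PORT: ${env.UI_PORT}`);
  }
  return {
    openRouterApiKey: env.OPENROUTER_API_KEY,
    geminiApiKey: env.GEMINI_API_KEY,
    fallbackModel: env.FALLBACK_OPENROUTER_MODEL,
    // Only the exact string "true" enables automatic review.
    autoReviewOnCompletion: env.AUTO_REVIEW_ON_COMPLETION === "true",
    uiPort: port,
  };
}
```

Call it as `loadConfig(process.env)`. Failing fast on a bad port is friendlier than a server that silently listens somewhere unexpected.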

Avoiding Costs

IMPORTANT: It's highly recommended to connect your own Google AI API key to OpenRouter to avoid the free models' rate limits. See below.

Using OpenRouter's Free Tiers: You can significantly minimize or eliminate costs by using models marked as "Free" on OpenRouter (like google/gemini-2.5-flash-preview:thinking at the time of writing) while connecting your own Google AI API key. Check out this Reddit thread for more info: https://www.reddit.com/r/ChatGPTCoding/comments/1jrp1tj/a_simple_guide_to_setting_up_gemini_25_pro_free/

Fallback Costs: The server automatically retries with a fallback model if the primary hits a rate limit. The default fallback (FALLBACK_OPENROUTER_MODEL) is often a faster/cheaper model like Gemini Flash, which might still have associated costs depending on OpenRouter's current pricing/tiers. Check their site and adjust the fallback model in your configuration if needed.
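The fallback behavior can be sketched as a wrapper around a model call. This assumes a rate limit surfaces as an HTTP 429 error and that one retry with the fallback model is enough; `HttpError`, `completeWithFallback`, and the `call` callback are hypothetical names, not the server's code.

```typescript
// Minimal error type carrying an HTTP status; a real client would derive it from the response.
class HttpError extends Error {
  readonly status: number;
  constructor(status: number, message: string) {
    super(message);
    this.name = "HttpError";
    this.status = status;
    Object.setPrototypeOf(this, HttpError.prototype); // keeps instanceof working on older targets
  }
}

// Calls the primary model; on an HTTP 429 (rate limit) retries once with the fallback model.
// Any other error is rethrown unchanged.
async function completeWithFallback(
  call: (model: string) => Promise<string>,
  primaryModel: string,
  fallbackModel: string,
): Promise<string> {
  try {
    return await call(primaryModel);
  } catch (err) {
    if (err instanceof HttpError && err.status === 429) {
      return call(fallbackModel);
    }
    throw err;
  }
}
```

Because the fallback call is not itself retried, a rate limit on the fallback model still surfaces as an error, which is where the fallback's own pricing and limits come into play.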

Usage with Cursor (Task Manager Mode)

This is the primary way this server is intended to be used. I have not yet tested it with other AI code editors. If you try it, please let me know how it goes, and I'll update the README.

1. Configure the MCP Server in Cursor:

After building the server (npm run build), you need to tell Cursor how to run it.

Find Cursor's MCP configuration file. This can be:

Project-specific: Create/edit a file at `.cursor/mcp.json` inside your project's root directory.

Global: Create/edit a file at `~/.cursor/mcp.json` in your user home directory (for use across all projects).

Add the following entry to the mcpServers object within that JSON file:
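A minimal sketch of such an entry, assuming Cursor's usual `command`/`args`/`env` layout for `mcpServers`. The server name `task-manager-mcp` and the variable name `OPENROUTER_API_KEY` are illustrative assumptions. The TypeScript below builds the entry and prints JSON you could adapt:

```typescript
// Shape of a Cursor mcp.json file: server name -> launch command, arguments and environment.
interface McpServerEntry {
  command: string;
  args: string[];
  env?: Record<string, string>;
}

interface McpConfig {
  mcpServers: Record<string, McpServerEntry>;
}

// "task-manager-mcp" and OPENROUTER_API_KEY are illustrative names, not confirmed by this README.
function buildMcpConfig(serverScript: string, apiKey: string): McpConfig {
  return {
    mcpServers: {
      "task-manager-mcp": {
        command: "node",
        args: [serverScript], // absolute path to the compiled server script
        env: { OPENROUTER_API_KEY: apiKey },
      },
    },
  };
}

console.log(
  JSON.stringify(
    buildMcpConfig("/full/path/to/your/task-manager-mcp/dist/server.js", "sk-or-v1-xxxxxxxxxxxxxxxxxxxx"),
    null,
    2,
  ),
);
```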

IMPORTANT:

Replace `/full/path/to/your/task-manager-mcp/dist/server.js` with the absolute path to the compiled server script on your machine.

Replace `sk-or-v1-xxxxxxxxxxxxxxxxxxxx` with your actual OpenRouter API key (or set `GEMINI_API_KEY` if using Google AI directly).

The env variables defined here will be passed to the server process when Cursor starts it, overriding any `.env` file.

2. Create a Custom Cursor Mode:

Go to Cursor Settings -> Features -> Chat -> Enable Custom modes.

Go back to the chat view, click the mode selector (bottom left), and click Add custom mode.

Give it a name (e.g., "MCP Planner", "Task Dev"), choose an icon/shortcut.

Enable Tools: Make sure the tools exposed by this server (`plan_feature`, `mark_task_complete`, `get_next_task`, `review_changes`, `adjust_plan`) are available and enabled for this mode. You might want to enable other tools like Codebase, Terminal, etc., depending on your workflow.

Recommended Instructions for Agent: Paste these rules exactly into the "Custom Instructions" text box:

Save the custom mode.

Expected Workflow (Using the Custom Mode):

1. Select your new custom mode in Cursor.
2. Give Cursor a feature request (e.g., "add auth using JWT").
3. Cursor, following the instructions, will call the `plan_feature` tool.
4. The server plans, saves data, and returns a JSON response (inside the text content) to Cursor.
   - If successful: The response includes `status: "completed"` and the description of the first task in the `message` field. The UI (if running) is launched/updated.
   - If clarification is needed: The response includes `status: "awaiting_clarification"`, the `featureId`, the `uiUrl`, and instructions for the agent to wait and call `get_next_task` later. The UI is launched/updated with the question.
5. Cursor implements only the task described (if provided).
6. If clarification was needed, the user answers in the UI, and the server resumes planning and updates the UI via WebSocket. The agent, following instructions, then calls `get_next_task` with the `featureId`.
7. If a task was completed, Cursor calls `mark_task_complete` (with `taskId` and `featureId`).
8. The server marks the task done and returns the next pending task in the response message.
9. Cursor repeats steps 4-8.
10. If the user asks Cursor to "review", it calls `review_changes`.
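The two `plan_feature` response shapes described above map naturally onto a discriminated union on `status`. Only the fields this README names are modeled; the exact payload and the `nextAction` helper are assumptions for illustration.

```typescript
// Fields named in this README; anything else in the real payload is ignored here.
type PlanFeatureResponse =
  | { status: "completed"; message: string }
  | { status: "awaiting_clarification"; featureId: string; uiUrl: string };

// Hypothetical helper: decides the agent's next action from the JSON text returned by plan_feature.
function nextAction(responseText: string): string {
  const res = JSON.parse(responseText) as PlanFeatureResponse;
  switch (res.status) {
    case "completed":
      // `message` carries the description of the first task to implement.
      return `implement: ${res.message}`;
    case "awaiting_clarification":
      return `wait for answer at ${res.uiUrl}, then call get_next_task with featureId=${res.featureId}`;
    default:
      // JSON.parse gives no runtime guarantees, so guard against unexpected statuses.
      throw new Error(`Unexpected status: ${(res as { status: string }).status}`);
  }
}
```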

API Endpoints (for UI)

The integrated Express server provides these basic endpoints for the frontend:

- `GET /api/features`: Returns a list of existing feature IDs.
- `GET /api/tasks/:featureId`: Returns the list of tasks for a specific feature.
- `GET /api/tasks`: Returns tasks for the most recently created/modified feature.
- `GET /api/features/:featureId/pending-question`: Checks if there's an active clarification question for the feature.
- `POST /api/tasks`: Creates a new task for a feature.
- `PUT /api/tasks/:taskId`: Updates an existing task.
- `DELETE /api/tasks/:taskId`: Deletes a task.
- (Static Files): Serves files from `dist/frontend-ui/` (e.g., `index.html`).
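A small typed client for two of these endpoints might look like this (Node 18+ for the global `fetch`). It assumes the task endpoints return and accept plain JSON task objects; the `Task` fields (`id`, `description`, `status`) are hypothetical, as is the exact request body that `PUT /api/tasks/:taskId` expects.

```typescript
// Hypothetical task shape; check the server's actual responses before relying on it.
interface Task {
  id: string;
  description: string;
  status: string;
}

// Resolves an API route against the UI server's base URL, e.g. http://localhost:3000.
function endpoint(baseUrl: string, route: string): string {
  return new URL(route, baseUrl).toString();
}

// GET /api/tasks/:featureId, or GET /api/tasks for the most recent feature.
async function getTasks(baseUrl: string, featureId?: string): Promise<Task[]> {
  const route = featureId ? `/api/tasks/${encodeURIComponent(featureId)}` : "/api/tasks";
  const res = await fetch(endpoint(baseUrl, route));
  if (!res.ok) throw new Error(`GET ${route} failed: ${res.status}`);
  return (await res.json()) as Task[]; // assumes the body is a JSON array of tasks
}

// PUT /api/tasks/:taskId with a partial update as the JSON body.
async function updateTask(baseUrl: string, taskId: string, changes: Partial<Task>): Promise<void> {
  const route = `/api/tasks/${encodeURIComponent(taskId)}`;
  const res = await fetch(endpoint(baseUrl, route), {
    method: "PUT",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(changes),
  });
  if (!res.ok) throw new Error(`PUT ${route} failed: ${res.status}`);
}
```

For example, `getTasks("http://localhost:3000", featureId)` fetches the task list the UI displays for that feature.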