MCP Wolfram Alpha (Server + Client)

Seamlessly integrate Wolfram Alpha into your chat applications.

This project implements an MCP (Model Context Protocol) server designed to interface with the Wolfram Alpha API. It lets chat-based applications run computational queries and retrieve structured knowledge, which supports more capable conversational features.
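To make the request side concrete, here is a minimal sketch of how a server-side helper might build a Wolfram|Alpha query URL. It assumes the Wolfram|Alpha LLM API endpoint, and `build_query_url` is a hypothetical name; the project's actual server code may use a different endpoint or a client library.

```python
from urllib.parse import urlencode

# Assumption: the Wolfram|Alpha LLM API endpoint. The project may call another one.
WOLFRAM_LLM_API = "https://www.wolframalpha.com/api/v1/llm-api"


def build_query_url(query: str, app_id: str) -> str:
    """Return a GET URL that asks Wolfram|Alpha to evaluate `query`."""
    if not query.strip():
        raise ValueError("query must not be empty")
    # urlencode percent-escapes spaces and symbols such as '+' or '^'.
    params = urlencode({"input": query, "appid": app_id})
    return f"{WOLFRAM_LLM_API}?{params}"


if __name__ == "__main__":
    # "DEMO-APPID" is a placeholder, not a working key.
    print(build_query_url("integrate x^2 dx", "DEMO-APPID"))
```

Keeping URL construction in a pure function like this makes it easy to test without network access; the HTTP call itself can then be a thin wrapper around it.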

An example MCP client is included. It uses Gemini via LangChain and demonstrates how to connect large language models to the MCP server for real-time interactions with Wolfram Alpha's knowledge engine.
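Under the hood, an MCP client asks a server to run a tool by sending a JSON-RPC 2.0 `tools/call` request. The sketch below builds such a message with the standard library only. The tool name `query_wolfram` and its `query` argument are hypothetical and not taken from this project; a real client would normally let an MCP SDK handle this.

```python
import json
from itertools import count

_request_ids = count(1)  # each request in a session gets a fresh id


def make_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


if __name__ == "__main__":
    # Hypothetical tool name and argument, for illustration only.
    print(make_tool_call("query_wolfram", {"query": "population of France"}))
```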

Features

Wolfram|Alpha integration for math, science, and data queries.

Modular architecture: easily extended to support additional APIs and functionality.

Multi-client support: handles interactions from multiple clients or interfaces.

MCP client example using Gemini (via LangChain).

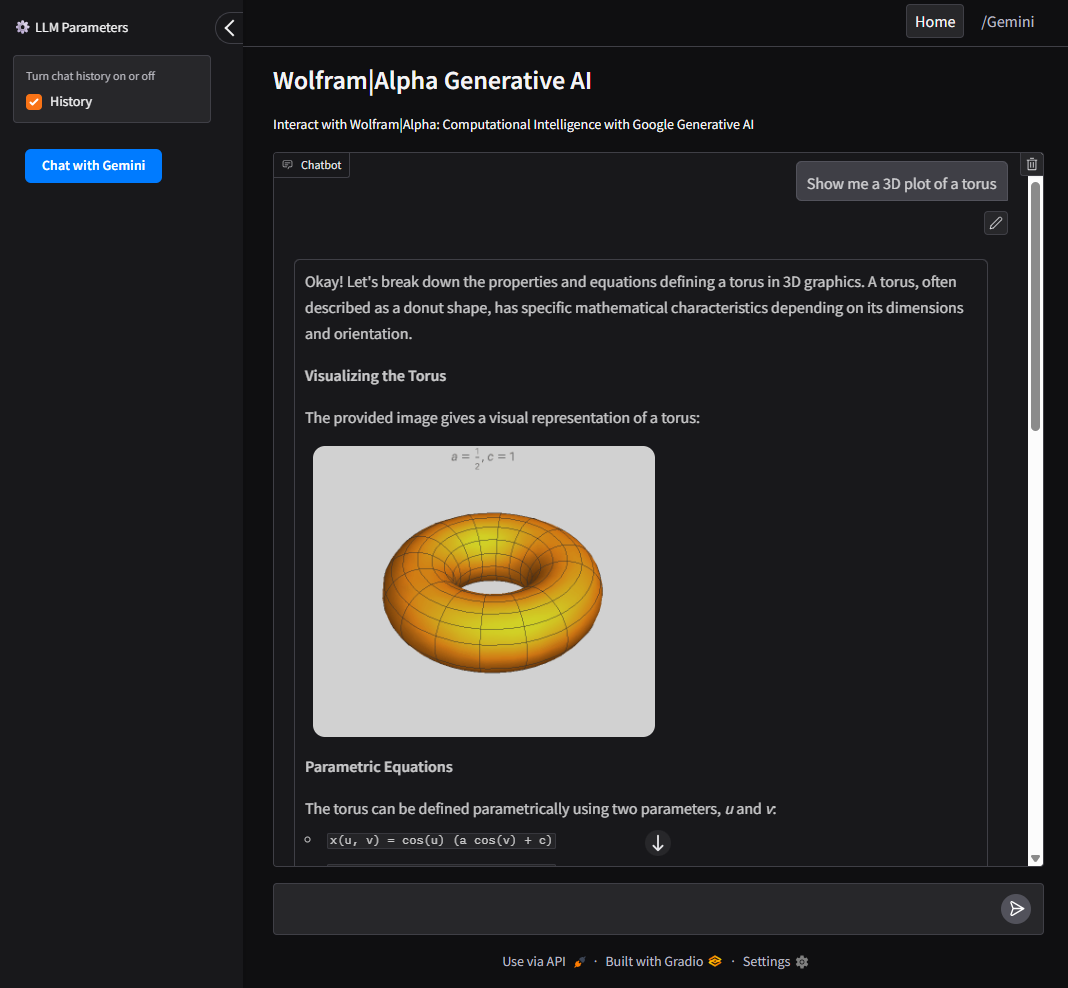

UI support: a Gradio-based web interface for interacting with Google AI and the Wolfram Alpha MCP server.

Installation

Clone the repository

Configure environment variables

Create a .env file based on the example:

WOLFRAM_API_KEY=your_wolframalpha_appid

GeminiAPI=your_google_gemini_api_key (optional if you use the Client method below).
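As a quick sanity check before starting the server, the sketch below parses `.env`-style text and reports which required keys are missing. It is a stdlib-only illustration: `parse_env` and `check_env` are hypothetical helpers, and the project itself may load the file differently (for example with a dotenv library).

```python
REQUIRED = ("WOLFRAM_API_KEY",)  # needed by the server
OPTIONAL = ("GeminiAPI",)        # only needed by the example client


def parse_env(text: str) -> dict:
    """Parse KEY=VALUE lines, skipping blank lines and '#' comments."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


def check_env(values: dict) -> list:
    """Return the names of required keys that are missing or empty."""
    return [key for key in REQUIRED if not values.get(key)]


if __name__ == "__main__":
    sample = "WOLFRAM_API_KEY=your_wolframalpha_appid\nGeminiAPI=your_google_gemini_api_key\n"
    print(check_env(parse_env(sample)))
```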

Install requirements

Configuration

To use with the VSCode MCP server:

Create a configuration file at .vscode/mcp.json in your project root.

Use the example provided in configs/vscode_mcp.json as a template.

For more details, refer to the VSCode MCP Server Guide.
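For orientation, the sketch below writes a stdio server entry in the general shape VS Code reads from `.vscode/mcp.json` (a top-level `servers` object). The server name `wolfram-alpha`, the `python` command, and the script path are assumptions for illustration; the template in configs/vscode_mcp.json remains the authoritative version for this project.

```python
import json
from pathlib import Path


def write_vscode_mcp_config(project_root: str, server_script: str) -> Path:
    """Write a stdio server entry to <project_root>/.vscode/mcp.json."""
    config = {
        "servers": {
            "wolfram-alpha": {  # hypothetical server name
                "type": "stdio",
                "command": "python",
                "args": [server_script],  # placeholder path supplied by the caller
            }
        }
    }
    target = Path(project_root) / ".vscode" / "mcp.json"
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_text(json.dumps(config, indent=2), encoding="utf-8")
    return target
```

Calling `write_vscode_mcp_config(".", "/path/to/server.py")` from the project root would create the file, with the placeholder path replaced by the real server script.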

To use with Claude Desktop:
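The project's own Claude Desktop snippet is not reproduced here. As a rough sketch, Claude Desktop reads stdio servers from an `mcpServers` object in its config file, so adding this server amounts to merging one entry into that object. The helper name, server name, and script path below are hypothetical.

```python
import json


def add_claude_server(existing: dict, name: str, server_script: str) -> dict:
    """Return a copy of a Claude Desktop config with one more stdio server."""
    servers = dict(existing.get("mcpServers", {}))  # copy so the input is untouched
    servers[name] = {"command": "python", "args": [server_script]}
    return {**existing, "mcpServers": servers}


if __name__ == "__main__":
    # "/path/to/server.py" is a placeholder for the real server script.
    updated = add_claude_server({}, "wolfram-alpha", "/path/to/server.py")
    print(json.dumps(updated, indent=2))
```

Merging into a copy rather than overwriting keeps any other servers already listed in the config.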

Client usage example

This project includes an LLM client that communicates with the MCP server.

Run with Gradio UI

Required: GeminiAPI

Provides a local web interface for interacting with Google AI and Wolfram Alpha.

To run the client directly from the command line:

Docker

To build and run the client inside a Docker container:

User interface

An intuitive interface built with Gradio for interacting with both Google AI (Gemini) and the Wolfram Alpha MCP server.

Lets users switch between Wolfram Alpha, Google AI (Gemini), and the query history.
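The switching behavior described above can be pictured as a small dispatcher that sends each query to the selected backend and records the exchange. This is a UI-independent sketch with stub backends; `QueryRouter` and the mode names are hypothetical and do not reflect the project's actual Gradio code.

```python
from typing import Callable, Dict, List, Tuple


class QueryRouter:
    """Dispatch a query to the selected backend and remember the exchange."""

    def __init__(self, backends: Dict[str, Callable[[str], str]]):
        self.backends = backends
        self.history: List[Tuple[str, str, str]] = []  # (mode, query, answer)

    def ask(self, mode: str, query: str) -> str:
        if mode not in self.backends:
            raise ValueError(f"unknown mode: {mode!r}")
        answer = self.backends[mode](query)
        self.history.append((mode, query, answer))
        return answer


if __name__ == "__main__":
    # Stub backends stand in for the real Wolfram Alpha and Gemini calls.
    router = QueryRouter({
        "wolfram": lambda q: f"[wolfram] {q}",
        "gemini": lambda q: f"[gemini] {q}",
    })
    router.ask("wolfram", "2+2")
    router.ask("gemini", "explain 2+2")
    print(router.history)
```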

Run as a CLI tool

Required: GeminiAPI

To run the client directly from the command line:

Docker

To build and run the client inside a Docker container: