Zeek-MCP

This repository provides a set of utilities for building an MCP (Model Context Protocol) server that you can integrate with conversational AI clients.


Prerequisites

Python 3.7+

Zeek installed and available in your PATH (for the execzeek tool)

pip (for installing Python dependencies)
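The prerequisites above can be checked from Python before installing anything. The following is a minimal sketch, not part of the repository: the function name `check_prerequisites` and the `zeek_binary` parameter are illustrative assumptions, and it only tests whether a `zeek` executable is on PATH, not which Zeek version it is.

```python
import importlib.util
import shutil
import sys


def check_prerequisites(min_python=(3, 7), zeek_binary="zeek"):
    """Return a dict saying whether each prerequisite appears to be met.

    Hypothetical helper for illustration; it is not shipped with Zeek-MCP.
    """
    return {
        # The README asks for Python 3.7 or newer.
        "python": sys.version_info >= min_python,
        # shutil.which returns None when the executable is not on PATH.
        "zeek": shutil.which(zeek_binary) is not None,
        # pip is usually run as "python -m pip", so check the module is importable.
        "pip": importlib.util.find_spec("pip") is not None,
    }


if __name__ == "__main__":
    for name, ok in check_prerequisites().items():
        print(f"{name}: {'ok' if ok else 'missing'}")
```

If `zeek` is reported as missing, the execzeek tool will not be able to run until the Zeek binary directory is added to PATH.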

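Installation

1. Clone the repository

2. Install dependencies

Using a virtual environment is recommended:

**Note:** If there is no requirements.txt, install the dependencies directly: pip install pandas mcp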

Usage

The repository exposes two main MCP tools and a command-line entry point:

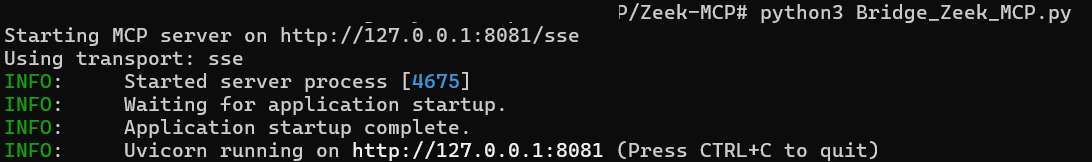

3. Run the MCP server

--mcp-host: Host for the MCP server (default: 127.0.0.1).

--mcp-port: Port for the MCP server (default: 8081).

--transport: Transport protocol, either sse (Server-Sent Events) or stdio.
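To make the option handling concrete, here is a minimal `argparse` sketch of the three flags described above. It is not the repository's actual parser: the function name `build_parser` is hypothetical, and because the README states no default for `--transport`, the `sse` default below is an assumption.

```python
import argparse


def build_parser():
    """Build an illustrative parser mirroring the documented options."""
    parser = argparse.ArgumentParser(
        description="Zeek-MCP server options (illustrative sketch)"
    )
    parser.add_argument("--mcp-host", default="127.0.0.1",
                        help="Host for the MCP server")
    parser.add_argument("--mcp-port", type=int, default=8081,
                        help="Port for the MCP server")
    # Assumption: the README gives no default transport; "sse" is chosen here.
    parser.add_argument("--transport", choices=["sse", "stdio"], default="sse",
                        help="Transport protocol: sse or stdio")
    return parser


# Parse an explicit argument list instead of sys.argv so the example is repeatable.
args = build_parser().parse_args(["--mcp-port", "9000", "--transport", "stdio"])
print(args.mcp_host, args.mcp_port, args.transport)  # prints "127.0.0.1 9000 stdio"
```

Restricting `--transport` with `choices` makes a mistyped transport fail at startup rather than when a client first connects.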

4. Use the MCP tools

You need an LLM that supports MCP tool use; it can then call the following tools:

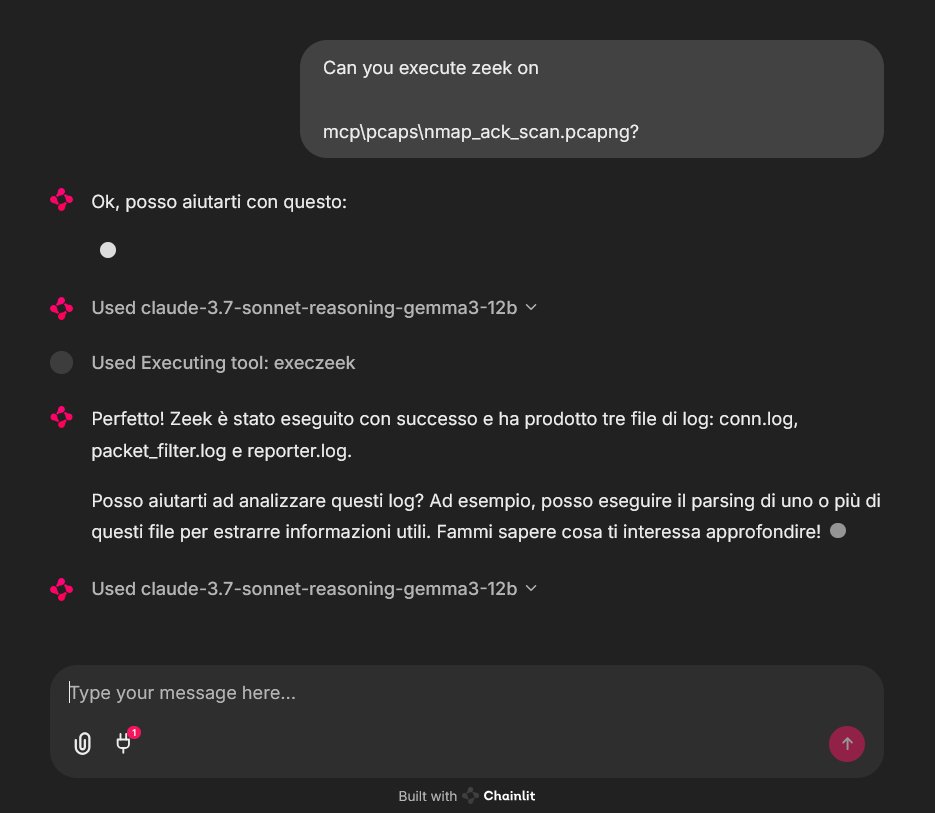

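execzeek(pcap_path: str) -> str

**Description:** Runs Zeek on the given PCAP file after deleting any existing .log files in the working directory.

**Returns:** A string listing the generated .log file names, or "1" on error.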
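The behaviour described for execzeek can be sketched as follows. This is an illustrative stand-in rather than the repository's implementation: the name `exec_zeek_sketch`, the `workdir` and `run` parameters, and the exact wording of the returned string are assumptions. It relies on `zeek -r <pcap>`, which reads packets from a capture file and writes logs into the current directory.

```python
import subprocess
from pathlib import Path


def exec_zeek_sketch(pcap_path, workdir=".", run=subprocess.run):
    """Illustrative stand-in for the execzeek tool (not the repository's code).

    `run` is injectable so the function can be exercised without Zeek installed.
    """
    workdir = Path(workdir)
    pcap = Path(pcap_path).resolve()  # absolute path, since Zeek runs inside workdir

    # Remove stale logs so the result lists only files produced by this run.
    for old_log in workdir.glob("*.log"):
        old_log.unlink()

    try:
        run(["zeek", "-r", str(pcap)], cwd=str(workdir), check=True)
    except (OSError, subprocess.CalledProcessError):
        # The README documents "1" as the value returned on error.
        return "1"

    logs = sorted(p.name for p in workdir.glob("*.log"))
    return "Generated logs: " + ", ".join(logs)
```

Because existing .log files are deleted first, it is safer to point the working directory at a scratch folder than at one holding logs you want to keep.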

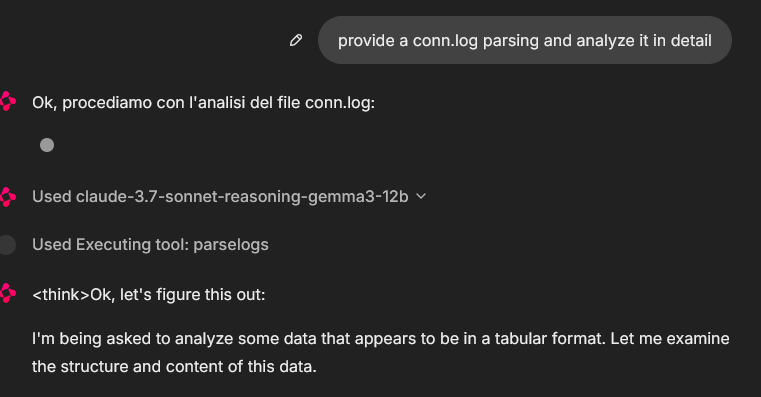

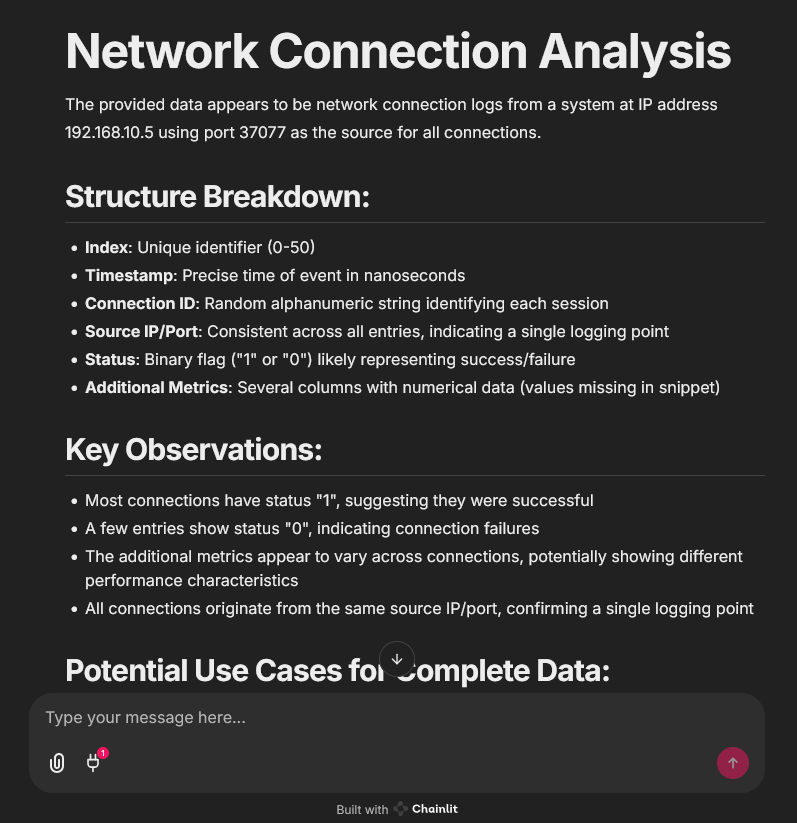

parselogs(logfile: str) -> DataFrame

**Description:** Parses a single Zeek .log file and returns the parsed content.
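To show what parsing a Zeek log involves, here is a minimal standard-library sketch. It assumes Zeek's default tab-separated format, in which metadata lines start with `#` and a `#fields` line names the columns. The function name `parse_zeek_log_sketch` and the sample rows are hypothetical, and unlike the real tool it returns plain Python structures rather than a DataFrame.

```python
def parse_zeek_log_sketch(text):
    """Parse the text of a tab-separated Zeek log into (fields, rows).

    Illustrative only; values are left as strings and no types are converted.
    """
    separator = "\t"  # Zeek's default field separator
    fields = []
    rows = []
    for line in text.splitlines():
        if line.startswith("#fields"):
            # Column names follow the "#fields" token on the same line.
            fields = line.split(separator)[1:]
        elif line.startswith("#") or not line:
            continue  # other metadata (#path, #types, #close, ...) or blank line
        else:
            rows.append(dict(zip(fields, line.split(separator))))
    return fields, rows


# Made-up sample data in the shape of a conn.log excerpt.
sample = "\n".join([
    "#separator \\x09",
    "#path\tconn",
    "#fields\tts\tuid\tid.orig_h\tid.resp_p",
    "#types\ttime\tstring\taddr\tport",
    "1700000000.000000\tCabc123\t10.0.0.5\t443",
    "#close\t2024-01-01-00-00-00",
])
fields, rows = parse_zeek_log_sketch(sample)
print(fields)                # prints ['ts', 'uid', 'id.orig_h', 'id.resp_p']
print(rows[0]["id.orig_h"])  # prints 10.0.0.5
```

With pandas installed, `pandas.DataFrame(rows, columns=fields)` would turn this result into a DataFrame comparable to what parselogs is documented to return.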

You can interact with these endpoints over HTTP (when using the SSE transport) or from an LLM client that embeds the server (for example, Claude Desktop):

Claude Desktop integration:

To set up Claude Desktop as a Zeek MCP client, go to Claude -> Settings -> Developer -> Edit Config -> claude_desktop_config.json and add the following:
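As a hedged illustration of what such an entry might look like, the sketch below builds a configuration using Claude Desktop's usual `mcpServers` layout and prints it as JSON. The server key `zeek-mcp`, the use of the stdio transport, and the helper name `claude_desktop_entry` are assumptions, not values confirmed by this repository; the script path is the same placeholder used elsewhere in this README.

```python
import json


def claude_desktop_entry(script_path, python_cmd="python"):
    """Build an illustrative claude_desktop_config.json fragment.

    Assumptions: the "zeek-mcp" key and the stdio transport are guesses.
    """
    return {
        "mcpServers": {
            "zeek-mcp": {
                "command": python_cmd,
                # Claude Desktop launches local servers as subprocesses,
                # so the stdio transport is assumed here.
                "args": [script_path, "--transport", "stdio"],
            }
        }
    }


config = claude_desktop_entry("/ABSOLUTE_PATH_TO/Bridge_Zeek_MCP.py")
print(json.dumps(config, indent=2))
```

If the config file already lists other servers, merge the new key into the existing `mcpServers` object instead of replacing the whole file.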

Alternatively, edit this file directly:

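5ire integration:

Another MCP client that supports multiple backend models is 5ire. To set up Zeek-MCP, open 5ire, go to Tools -> New, and set the following configuration:

Tool Key: ZeekMCP

Name: Zeek-MCP

Command: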

python /ABSOLUTE_PATH_TO/Bridge_Zeek_MCP.py

Alternatively, you can use the Chainlit framework and follow its documentation to integrate the MCP server.

Examples

An example of MCP tool use from a Chainlit chatbot client, run against a sample pcap file (you can find some in the pcaps folder).

License

See LICENSE for more information.