The Prometheus Alertmanager MCP server enables programmatic querying and management of Alertmanager resources. With this server, you can:

Query Alertmanager status: Retrieve the current status of an Alertmanager instance and its cluster

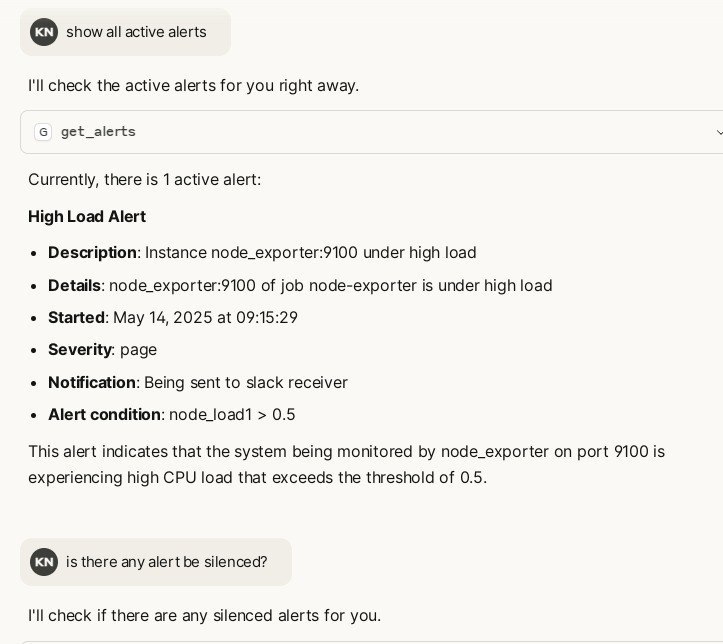

Manage alerts: List alerts with filtering options, create new alerts, and retrieve alert groups

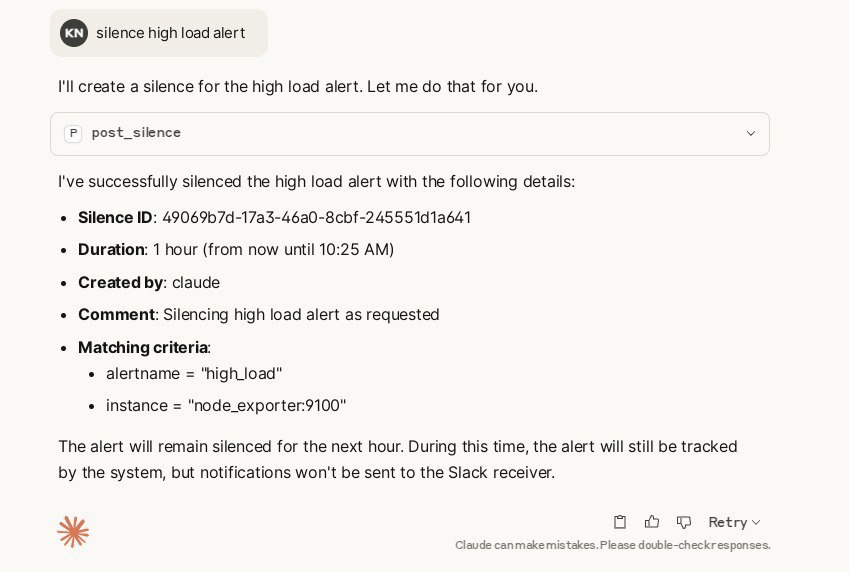

Handle silences: Retrieve, create, update, and delete silences by ID

Manage receivers: Get a list of all notification integration receivers

Integration with AI assistants: Interact with Alertmanager using natural language through supported AI tools like Claude Desktop

Supports containerized deployment of the MCP server through Docker, with configuration via environment variables for connecting to Alertmanager instances.

Enables querying and managing Prometheus Alertmanager resources including status, alerts, silences, receivers, and alert groups. Supports creating new alerts, managing silences (create, update, delete), and retrieving alert information through the Alertmanager API v2.



1. Introduction

Prometheus Alertmanager MCP is a Model Context Protocol (MCP) server for Prometheus Alertmanager. It enables AI assistants and tools to query and manage Alertmanager resources programmatically and securely.

2. Features

Query Alertmanager status, alerts, silences, receivers, and alert groups

Smart pagination support to prevent LLM context window overflow when handling large numbers of alerts

Create, update, and delete silences

Create new alerts

Authentication support (Basic auth via environment variables)

Multi-tenant support (via the `X-Scope-OrgId` header for Mimir/Cortex)

Docker containerization support

3. Quickstart

3.1. Prerequisites

Python 3.12+

uv (for fast dependency management).

Docker (optional, for containerized deployment).

Ensure your Prometheus Alertmanager server is accessible from the environment where you'll run this MCP server.

3.2. Installing via Smithery

To install Prometheus Alertmanager MCP Server for Claude Desktop automatically via Smithery:

3.3. Local Run

Clone the repository:

Configure the environment variables for your Prometheus Alertmanager server, either through a `.env` file or system environment variables:
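As a rough illustration of what these settings feed into, the sketch below reads the connection settings from an environment mapping and builds the HTTP headers a client would send to Alertmanager. Only `ALERTMANAGER_TENANT` is named in this README; `ALERTMANAGER_URL`, `ALERTMANAGER_USERNAME`, `ALERTMANAGER_PASSWORD`, the `http://localhost:9093` fallback, and the helper names are assumptions for illustration, so check the repository's own `.env` example for the exact variable names.

```python
import base64
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass
class AlertmanagerConfig:
    url: str
    username: Optional[str] = None
    password: Optional[str] = None
    tenant: Optional[str] = None


def load_config(env: Mapping[str, str] = os.environ) -> AlertmanagerConfig:
    # Hypothetical helper. Variable names other than ALERTMANAGER_TENANT are
    # assumed; 9093 is Alertmanager's default port, used here only as a fallback.
    return AlertmanagerConfig(
        url=env.get("ALERTMANAGER_URL", "http://localhost:9093").rstrip("/"),
        username=env.get("ALERTMANAGER_USERNAME") or None,
        password=env.get("ALERTMANAGER_PASSWORD") or None,
        tenant=env.get("ALERTMANAGER_TENANT") or None,
    )


def build_headers(config: AlertmanagerConfig) -> dict:
    headers = {"Accept": "application/json"}
    # Basic auth is base64("user:password"); only sent when both values are set.
    if config.username and config.password:
        raw = f"{config.username}:{config.password}".encode()
        headers["Authorization"] = "Basic " + base64.b64encode(raw).decode()
    # Static tenant for multi-tenant backends such as Mimir or Cortex.
    if config.tenant:
        headers["X-Scope-OrgId"] = config.tenant
    return headers
```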

Multi-tenant Support

For multi-tenant Alertmanager deployments (e.g., Grafana Mimir, Cortex), you can specify the tenant ID in two ways:

Static configuration: Set the `ALERTMANAGER_TENANT` environment variable

Per-request: Include the `X-Scope-OrgId` header in requests to the MCP server

The X-Scope-OrgId header takes precedence over the static configuration, allowing dynamic tenant switching per request.
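The precedence rule can be sketched as a small function. `resolve_tenant` is a hypothetical helper, not the server's actual code; it only encodes the documented behavior that a per-request `X-Scope-OrgId` header overrides the static `ALERTMANAGER_TENANT` value.

```python
from typing import Mapping, Optional


def resolve_tenant(request_headers: Mapping[str, str], static_tenant: Optional[str]) -> Optional[str]:
    """Pick the tenant ID for a single request.

    HTTP header names are case-insensitive, so the header is matched without
    regard to case. An empty header value falls back to the static tenant.
    """
    for name, value in request_headers.items():
        if name.lower() == "x-scope-orgid" and value:
            return value  # per-request header wins
    return static_tenant  # may be None for single-tenant Alertmanager
```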

Transport configuration

You can control how the MCP server communicates with clients using the transport options and host/port settings. These can be set either with command-line flags (which take precedence) or with environment variables.

`MCP_TRANSPORT`: Transport mode. One of `stdio`, `http`, or `sse`. Default: `stdio`.

`MCP_HOST`: Host/interface to bind when running the `http` or `sse` transports (used by the embedded uvicorn server). Default: `0.0.0.0`.

`MCP_PORT`: Port to listen on when running the `http` or `sse` transports. Default: `8000`.
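The "environment sets the default, flag overrides it" rule can be sketched with `argparse`. The flag names `--transport`, `--host`, and `--port` are assumptions here (run the server with `--help` for the real ones); the environment variable names and defaults are the ones listed above.

```python
import argparse
import os
from typing import Mapping, Optional, Sequence

TRANSPORTS = ("stdio", "http", "sse")


def parse_transport_args(argv: Optional[Sequence[str]] = None,
                         env: Mapping[str, str] = os.environ) -> argparse.Namespace:
    parser = argparse.ArgumentParser(description="MCP transport options (sketch)")
    # Environment variables supply the defaults; explicit flags override them.
    parser.add_argument("--transport", choices=TRANSPORTS,
                        default=env.get("MCP_TRANSPORT", "stdio"))
    # Host and port only matter for the http and sse transports.
    parser.add_argument("--host", default=env.get("MCP_HOST", "0.0.0.0"))
    parser.add_argument("--port", type=int, default=int(env.get("MCP_PORT", "8000")))
    args = parser.parse_args(argv)
    # argparse does not check defaults against `choices`, so validate
    # a transport that came from MCP_TRANSPORT explicitly.
    if args.transport not in TRANSPORTS:
        parser.error(f"invalid transport {args.transport!r}; expected one of {TRANSPORTS}")
    return args
```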

Examples:

Use environment variables to set defaults (CLI flags still override):

Or pass flags directly to override env vars:

Notes:

The `stdio` transport communicates over standard input/output and ignores host/port.

The `http` (streamable HTTP) and `sse` transports are served via an ASGI app (uvicorn), so host/port are respected when using those transports.

Add the server configuration to your client configuration file. For example, for Claude Desktop:

Or install it using the make command:

Restart Claude Desktop to load the new configuration.

You can now ask Claude to interact with Alertmanager using natural language:

"Show me current alerts"

"Filter alerts related to CPU issues"

"Get details for this alert"

"Create a silence for this alert for the next 2 hours"

3.4. Docker Run

Run it with the pre-built image (or build it yourself):

Running with Docker in Claude Desktop:

This configuration passes the environment variables from Claude Desktop to the Docker container by using the -e flag with just the variable name, and providing the actual values in the env object.
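To make the `-e` pass-through concrete, the sketch below generates a Claude Desktop `mcpServers` entry that launches the container. The image name `alertmanager-mcp-server:latest`, the server key `alertmanager`, and the `ALERTMANAGER_URL`/`ALERTMANAGER_USERNAME`/`ALERTMANAGER_PASSWORD` variable names are assumptions; substitute the values for your setup.

```python
import json
from typing import Dict, List

# Hypothetical image name; use the image you pulled or built.
IMAGE = "alertmanager-mcp-server:latest"


def docker_mcp_entry(env: Dict[str, str], image: str = IMAGE) -> dict:
    # -i keeps stdin open, which the default stdio transport needs.
    args: List[str] = ["run", "-i", "--rm"]
    for name in env:
        # "-e NAME" without "=value" tells Docker to copy NAME from the
        # environment of the process running docker, i.e. the "env" object below.
        args += ["-e", name]
    args.append(image)
    return {"command": "docker", "args": args, "env": dict(env)}


config = {
    "mcpServers": {
        "alertmanager": docker_mcp_entry({
            "ALERTMANAGER_URL": "http://your-alertmanager:9093",
            "ALERTMANAGER_USERNAME": "your_username",
            "ALERTMANAGER_PASSWORD": "your_password",
        })
    }
}

print(json.dumps(config, indent=2))
```

Keeping only variable names in `args` means credentials live in a single place (`env`) rather than being repeated on the `docker run` command line.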

4. Tools

The MCP server exposes tools for querying and managing Alertmanager, following its API v2:

Get status: `get_status()`

List alerts: `get_alerts(filter, silenced, inhibited, active, count, offset)`

Pagination support: Returns paginated results to avoid overwhelming the LLM context

`count`: Number of alerts per page (default: 10, max: 25)

`offset`: Number of alerts to skip (default: 0)

Returns: `{ "data": [...], "pagination": { "total": N, "offset": M, "count": K, "has_more": bool } }`

List silences: `get_silences(filter, count, offset)`

Pagination support: Returns paginated results to avoid overwhelming the LLM context

`count`: Number of silences per page (default: 10, max: 50)

`offset`: Number of silences to skip (default: 0)

Returns: `{ "data": [...], "pagination": { "total": N, "offset": M, "count": K, "has_more": bool } }`

Create silence: `post_silence(silence_dict)`

Delete silence: `delete_silence(silence_id)`

List receivers: `get_receivers()`

List alert groups: `get_alert_groups(silenced, inhibited, active, count, offset)`

Pagination support: Returns paginated results to avoid overwhelming the LLM context

`count`: Number of alert groups per page (default: 3, max: 5)

`offset`: Number of alert groups to skip (default: 0)

Returns: `{ "data": [...], "pagination": { "total": N, "offset": M, "count": K, "has_more": bool } }`

Note: Alert groups have lower limits because each group contains all of its alerts.
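For orientation, each tool corresponds to an Alertmanager API v2 endpoint. The sketch below builds (but does not send) the matching `urllib` request. The tool-to-endpoint mapping is an assumption inferred from the tool names; the endpoint paths are Alertmanager's API v2 routes. `count` and `offset` are omitted on the assumption that the MCP server paginates on its side rather than passing them to Alertmanager.

```python
import json
from typing import Any, Dict, Optional
from urllib.parse import quote, urlencode
from urllib.request import Request

# Assumed mapping from MCP tool names to Alertmanager API v2 endpoints.
ENDPOINTS = {
    "get_status": ("GET", "/api/v2/status"),
    "get_alerts": ("GET", "/api/v2/alerts"),
    "get_alert_groups": ("GET", "/api/v2/alerts/groups"),
    "get_silences": ("GET", "/api/v2/silences"),
    "post_silence": ("POST", "/api/v2/silences"),
    "delete_silence": ("DELETE", "/api/v2/silence/{silence_id}"),
    "get_receivers": ("GET", "/api/v2/receivers"),
}


def build_request(base_url: str, tool: str, params: Optional[Dict[str, Any]] = None,
                  body: Optional[dict] = None, silence_id: str = "") -> Request:
    method, path = ENDPOINTS[tool]
    # Only delete_silence has a placeholder; format() leaves other paths unchanged.
    url = base_url.rstrip("/") + path.format(silence_id=quote(silence_id, safe=""))
    if params:
        # Alertmanager expects lowercase booleans ("true"/"false"); doseq=True
        # repeats list values, e.g. filter=a&filter=b.
        query = {k: (str(v).lower() if isinstance(v, bool) else v) for k, v in params.items()}
        url += "?" + urlencode(query, doseq=True)
    data = json.dumps(body).encode() if body is not None else None
    req = Request(url, data=data, method=method)
    if data is not None:
        req.add_header("Content-Type", "application/json")
    return req
```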

Pagination Benefits

When working with environments that have many alerts, silences, or alert groups, the pagination feature helps:

Prevent context overflow: By default, only 10 alerts or silences (3 alert groups) are returned per request

Efficient browsing: LLMs can iterate through results using the `offset` and `count` parameters

Smart limits: Per-page maximums (25 alerts, 50 silences, 5 alert groups) prevent excessive context usage

Clear navigation: The `has_more` flag indicates when additional pages are available

Example: If you have 100 alerts, the LLM can fetch them in manageable chunks (e.g., 10 at a time) and only load what's needed for analysis.
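The paging contract can be sketched in a few lines. `paginate` and `fetch_all` are hypothetical helpers: `paginate` produces the documented `{ "data", "pagination" }` shape from an in-memory list (with `max_count=25` matching the `get_alerts` limit), and `fetch_all` shows how a client walks the pages using `has_more`. Treating `pagination.count` as the number of items actually returned is an assumption.

```python
from typing import Any, Callable, Dict, List, Sequence


def paginate(items: Sequence[Any], count: int = 10, offset: int = 0,
             max_count: int = 25) -> Dict[str, Any]:
    # Clamp the page size to [1, max_count] and the offset to >= 0.
    count = max(1, min(count, max_count))
    offset = max(0, offset)
    page = list(items[offset:offset + count])
    return {
        "data": page,
        "pagination": {
            "total": len(items),
            "offset": offset,
            "count": len(page),
            "has_more": offset + len(page) < len(items),
        },
    }


def fetch_all(fetch_page: Callable[[int, int], Dict[str, Any]], count: int = 10) -> List[Any]:
    # Request pages until has_more is False, advancing offset by the page length.
    results: List[Any] = []
    offset = 0
    while True:
        page = fetch_page(count, offset)
        results.extend(page["data"])
        if not page["pagination"]["has_more"]:
            return results
        offset += page["pagination"]["count"]
```

With 100 alerts and the default `count` of 10, `fetch_all` makes ten calls; an LLM would usually stop earlier, once it has seen enough to answer.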

See src/alertmanager_mcp_server/server.py for full API details.
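For a request such as "Create a silence for this alert for the next 2 hours", the assistant ultimately has to pass a silence object to `post_silence`. Assuming `silence_dict` is forwarded as an Alertmanager API v2 silence (the body accepted by `POST /api/v2/silences`), it would look like the dict built below. `build_silence` is a hypothetical helper and the label values are examples.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, Optional


def build_silence(labels: Dict[str, str], hours: float, created_by: str,
                  comment: str, now: Optional[datetime] = None) -> dict:
    start = now or datetime.now(timezone.utc)
    end = start + timedelta(hours=hours)
    return {
        # One exact-match matcher per label; an alert is silenced only
        # when all matchers match its labels.
        "matchers": [
            {"name": name, "value": value, "isRegex": False, "isEqual": True}
            for name, value in sorted(labels.items())
        ],
        # Timezone-aware isoformat() yields RFC 3339 timestamps.
        "startsAt": start.isoformat(),
        "endsAt": end.isoformat(),
        "createdBy": created_by,
        "comment": comment,
    }
```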

5. Development

Contributions are welcome! Please open an issue or submit a pull request if you have any suggestions or improvements.

This project uses uv to manage dependencies. Install uv following the instructions for your platform.