systems-mcp

systems-mcp is an MCP server for interacting with the lethain:systems library for systems modeling.

It provides two tools:

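- run_systems_model runs a systems specification of a model. It takes the specification and, optionally, the number of rounds to run the model (default 100).
- load_systems_documentation loads the documentation and examples into the context window. This is useful for priming the model so it is more helpful when writing systems models.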
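The rounds parameter is easiest to picture with a toy stock-and-flow loop. The sketch below is not the lethain:systems implementation and does not parse its specification syntax. Flow and run_rounds are hypothetical names, and treating a flow as "move a fixed fraction of the source stock each round" is an assumption made purely for illustration. It shows only what running a model for N rounds (default 100) means: the state advances once per round and each round is recorded.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Flow:
    """Hypothetical flow: moves a fixed fraction of one stock into another each round."""
    source: str
    dest: str
    rate: float  # fraction of `source` moved per round; assumes rates out of one stock sum to <= 1


def run_rounds(stocks: dict[str, float], flows: list[Flow], rounds: int = 100) -> list[dict[str, float]]:
    """Return the initial state followed by the state after each of `rounds` rounds."""
    state = dict(stocks)
    history = [dict(state)]
    for _ in range(rounds):
        # Compute every transfer from the start-of-round state so flow order does not matter.
        transfers = [(f.source, f.dest, state[f.source] * f.rate) for f in flows]
        for source, dest, amount in transfers:
            state[source] -= amount
            state[dest] += amount
        history.append(dict(state))
    return history


history = run_rounds({"candidates": 100.0, "hires": 0.0}, [Flow("candidates", "hires", 0.5)], rounds=2)
print(history[-1])  # {'candidates': 25.0, 'hires': 75.0}
```

Computing all transfers before applying any of them keeps the result independent of the order in which flows are listed.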

It is designed to be run locally alongside Claude Desktop or similar tools.

Usage

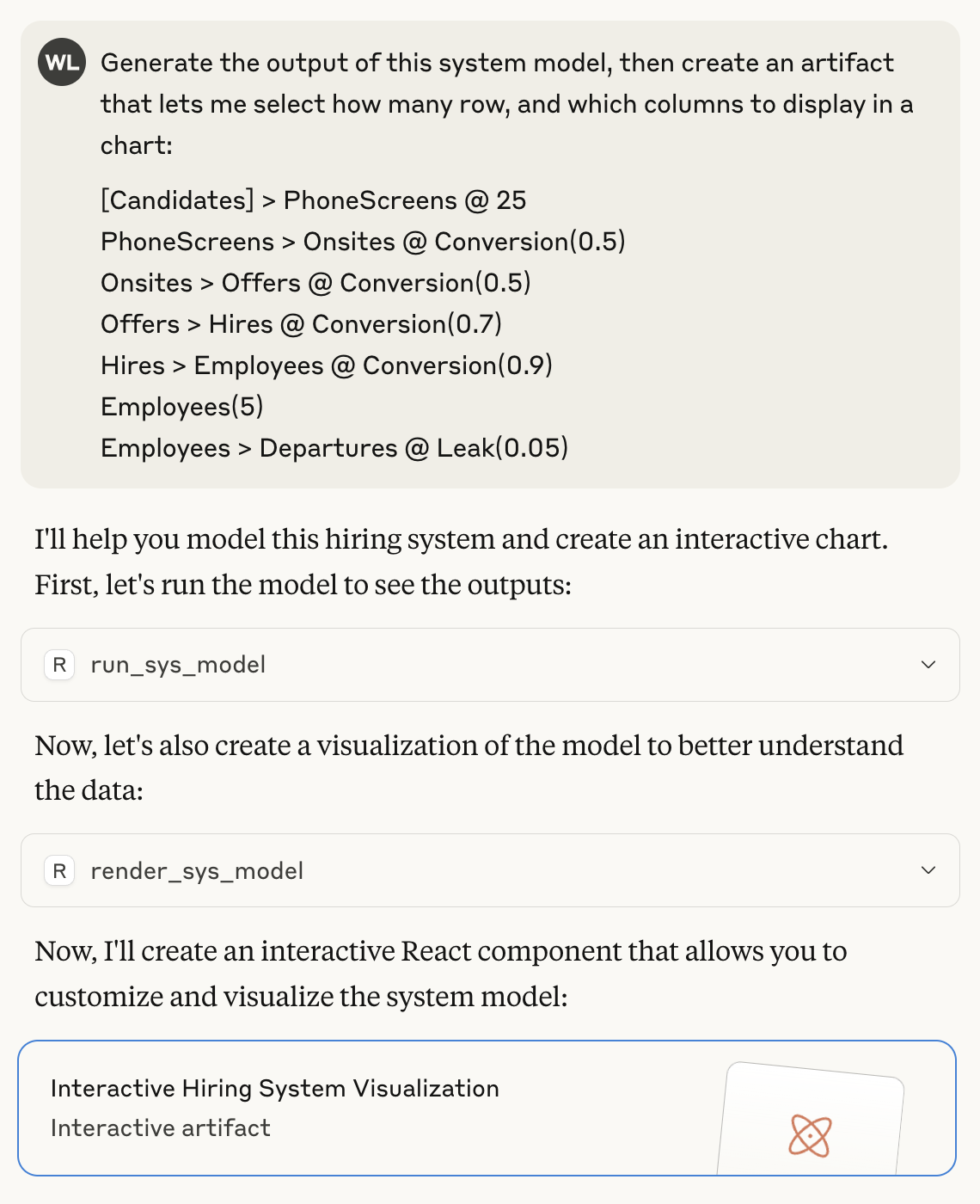

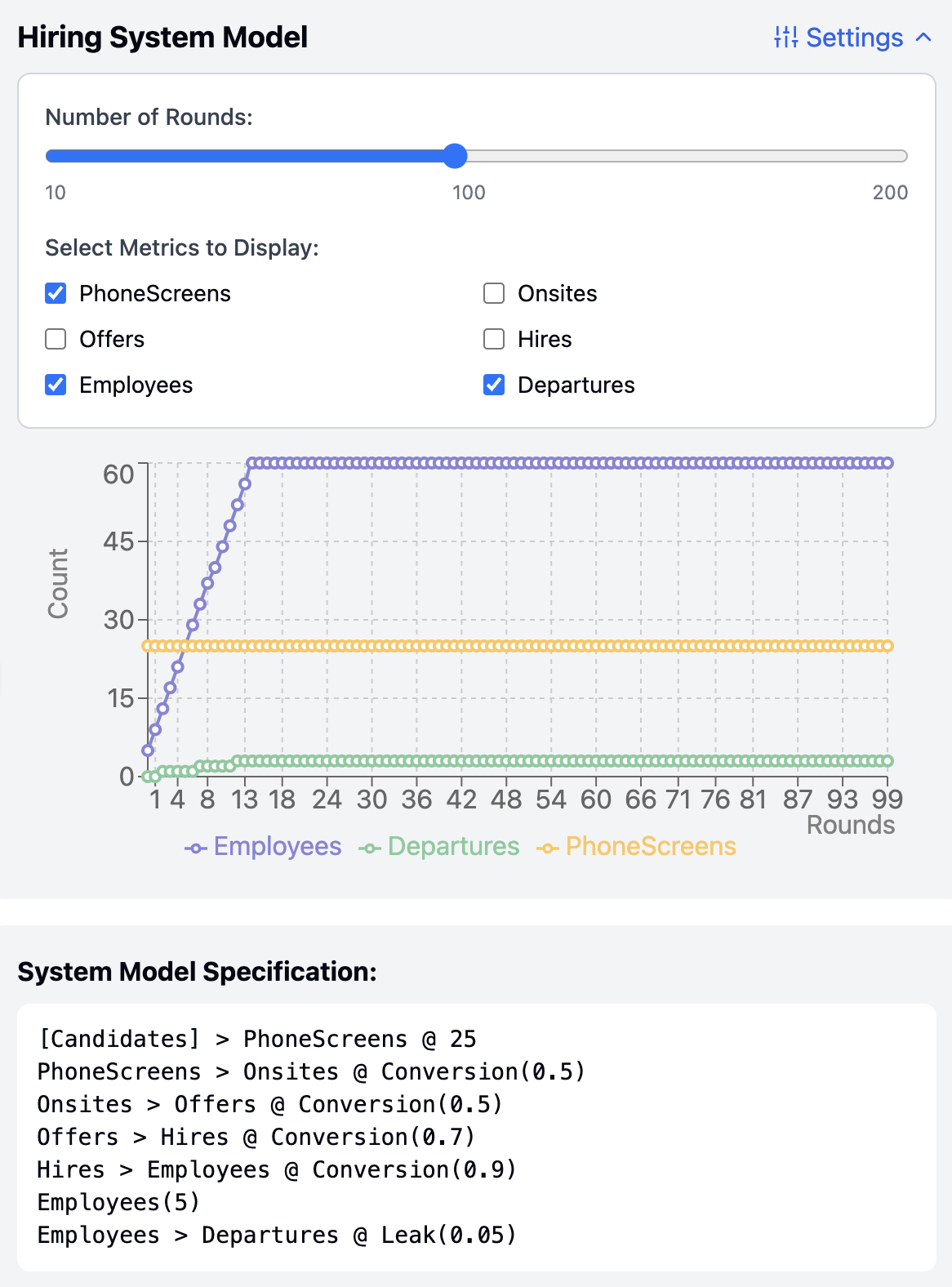

Here is an example of using systems-mcp to run and render a model.

Here is the artifact generated from that prompt, including the output from running the systems model:

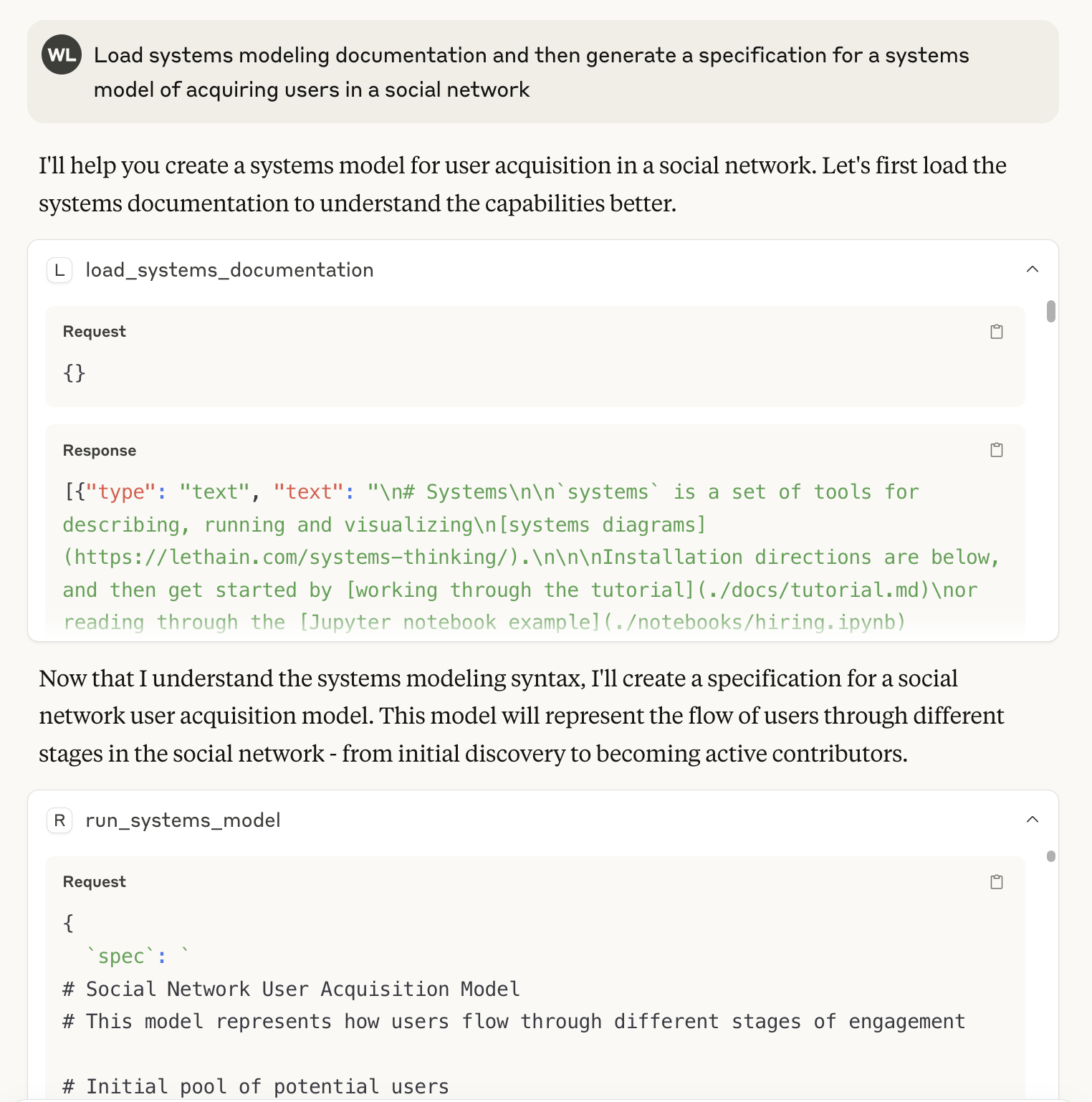

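Finally, here is an example of using the load_systems_documentation tool to prime the context window and then generate a systems specification. This is roughly equivalent to including lethain:systems/README.md in the context window, but it also includes a handful of additional examples (see the files included in ./docs/).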

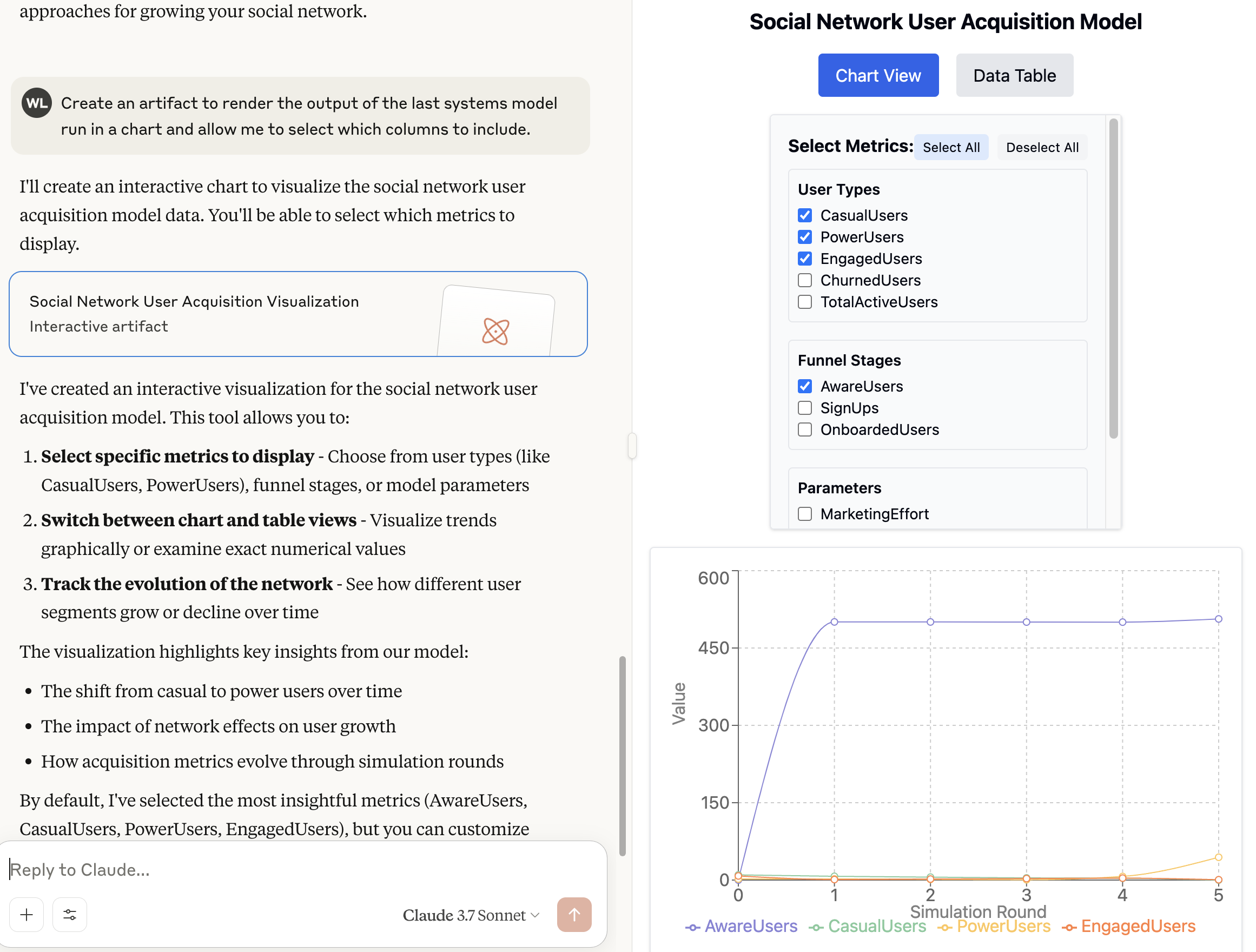

Then you can render the model as before.

The most interesting part here is that I have never personally used systems to model a social network, but the LLM still did a surprisingly decent job of generating the specification.
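The load_systems_documentation tool amounts to putting documentation text into the context window. Here is a minimal sketch of that idea, assuming the documentation and examples are plain text files in a single directory such as ./docs/. load_docs is a hypothetical name, not the server's actual function, and prefixing each file with its name is an illustrative choice.

```python
from pathlib import Path


def load_docs(docs_dir: Path) -> str:
    """Concatenate every regular file in `docs_dir`, sorted by name, into one string."""
    sections = []
    for path in sorted(p for p in docs_dir.iterdir() if p.is_file()):
        # Prefix each file with its name so the model can tell the documents apart.
        sections.append(f"# {path.name}\n\n{path.read_text(encoding='utf-8')}")
    return "\n\n".join(sections)
```

Sorting by file name makes the loaded text deterministic, so the same prompt sees the same context each time.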


Installation

These instructions describe installation for Claude Desktop on OS X. It should work similarly on other platforms.

Install Claude Desktop.

Clone systems-mcp into a convenient location.

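I'll assume it is at /Users/will/systems-mcp.

Make sure uv is installed by following its installation instructions.

In Claude Desktop, go to Settings, then Developer, and have it create your MCP configuration file. Then update your claude_desktop_config.json file. (Replace will with your own username, e.g. the output of whoami.)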

Then add this section:

    {
      "mcpServers": {
        "systems": {
          "command": "uv",
          "args": [
            "--directory",
            "/Users/will/systems-mcp",
            "run",
            "main.py"
          ]
        }
      }
    }

Close and reopen Claude Desktop.

It should now work.
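If you prefer to script the configuration edit, here is a minimal sketch that merges the same "systems" entry into an existing claude_desktop_config.json instead of overwriting it. add_systems_server and default_repo_dir are hypothetical helpers, not part of systems-mcp, and the /Users/<name>/systems-mcp location mirrors the example path used in these instructions.

```python
import getpass
import json
from pathlib import Path


def default_repo_dir() -> str:
    # getpass.getuser() returns the login name, which usually matches `whoami`.
    # Assumes the repository was cloned to /Users/<name>/systems-mcp.
    return f"/Users/{getpass.getuser()}/systems-mcp"


def add_systems_server(config_path: Path, repo_dir: str) -> dict:
    """Add or replace the "systems" entry under "mcpServers", keeping any other servers."""
    config = {}
    if config_path.exists():
        text = config_path.read_text(encoding="utf-8").strip()
        config = json.loads(text) if text else {}
    servers = config.setdefault("mcpServers", {})
    servers["systems"] = {
        "command": "uv",
        "args": ["--directory", repo_dir, "run", "main.py"],
    }
    config_path.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
    return config
```

Call it as add_systems_server(config_path, default_repo_dir()), with config_path pointing at the claude_desktop_config.json file that Claude Desktop created. Merging rather than overwriting preserves any other MCP servers you have already configured.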