The Systems MCP server enables interaction with the lethain:systems library for systems modeling, providing two main capabilities:

Run Systems Models: Execute systems model specifications using the run_systems_model function, optionally specifying the number of rounds, and receive the output as JSON.

Load Documentation: Load systems documentation, examples, and specification details into the context window to enhance the model's ability to generate accurate systems specifications.

Provides tools for interacting with the lethain:systems library for systems modeling, allowing users to run and visualize systems models directly through the interface.

Click on "Install Server".

Wait a few minutes for the server to deploy. Once ready, it will show a "Started" state.

In the chat, type @ followed by the MCP server name and your instructions, e.g., "@Systems MCP run a simple supply chain model with 50 rounds"

That's it! The server will respond to your query, and you can continue using it as needed.

Here is a step-by-step guide with screenshots.

systems-mcp

systems-mcp is an MCP server for interacting with the lethain:systems library for systems modeling.

It provides two tools:

run_systems_model runs the systems specification of a systems model. It takes two parameters: the specification and, optionally, the number of rounds to run the model (defaulting to 100).

load_systems_documentation loads documentation and examples into the context window. This is useful for priming models to be more helpful at writing systems models.
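To make the run_systems_model parameters concrete, here is a minimal sketch of the JSON-RPC `tools/call` request an MCP client might send. The argument names `spec` and `rounds` are assumptions for illustration, as is the example specification text; check the server's tool schema and the lethain:systems documentation for the real names and syntax.

```python
import json


def build_tool_call(spec: str, rounds: int = 100, request_id: int = 1) -> dict:
    """Build a JSON-RPC 2.0 `tools/call` request for run_systems_model."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {
            "name": "run_systems_model",
            # Assumed argument names; the real ones come from the tool schema.
            "arguments": {"spec": spec, "rounds": rounds},
        },
    }


# Illustrative specification text only; verify the syntax against the
# lethain:systems documentation before relying on it.
example_spec = "[Candidates] > Recruiters @ 5\nRecruiters > Hires @ 0.5"

request = build_tool_call(example_spec, rounds=50)
print(json.dumps(request, indent=2))
```

In practice Claude Desktop builds this request for you; the sketch only shows that rounds is optional and falls back to 100 when omitted.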

It is intended for running locally in conjunction with Claude Desktop or a similar tool.

Usage

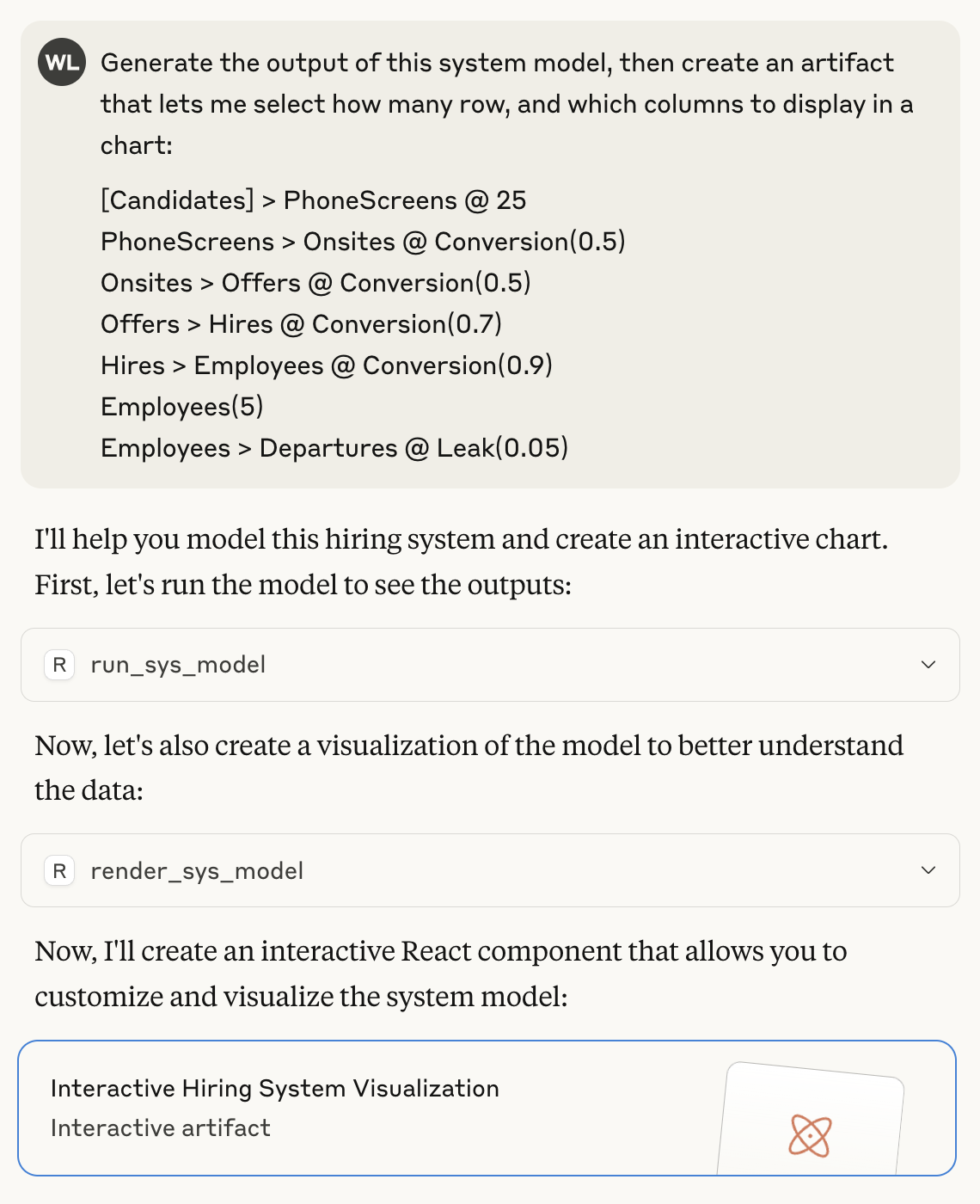

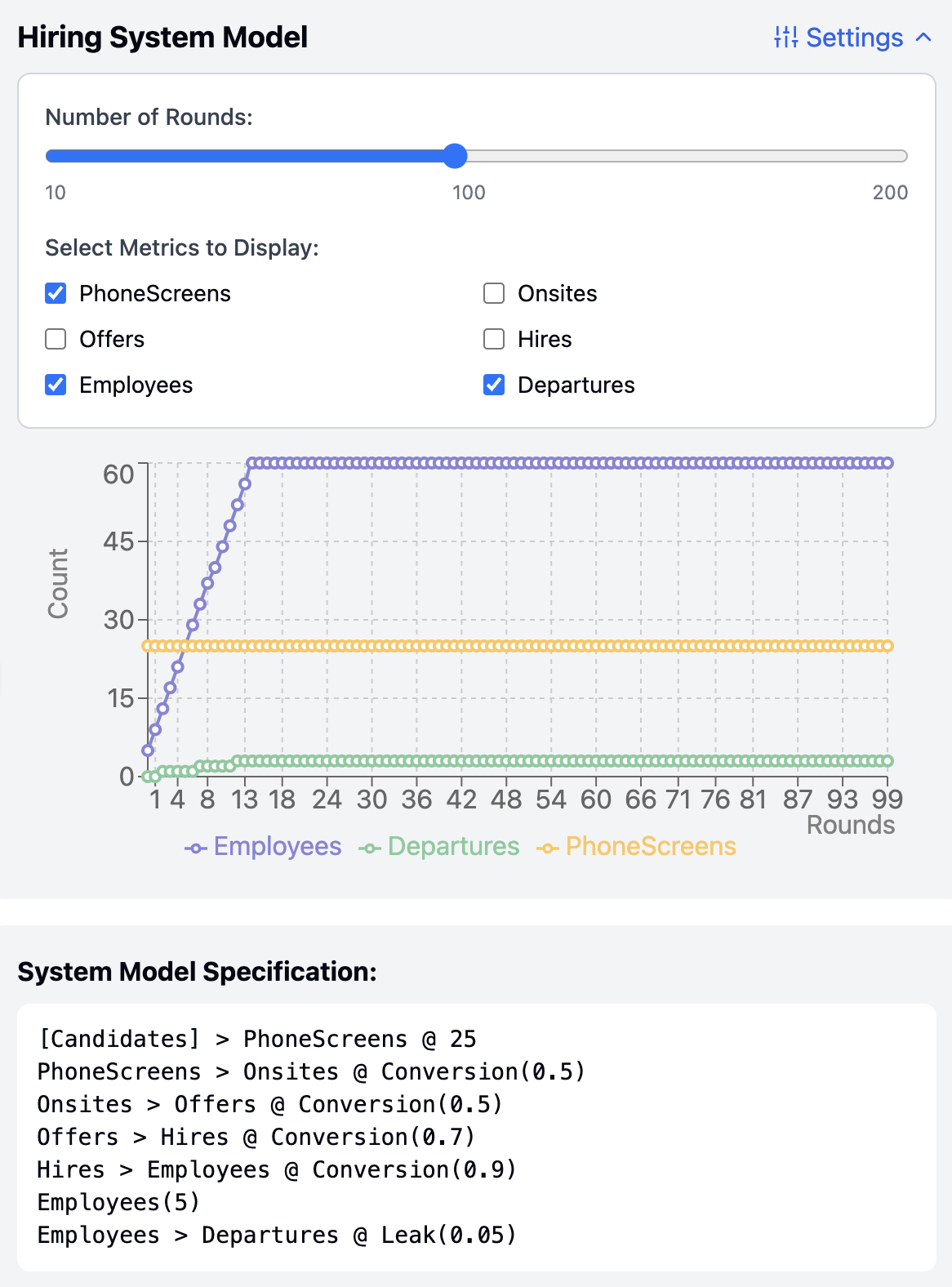

Here's an example of using systems-mcp to run and render a model.

Here is the artifact generated from that prompt, including the output from running the systems model.

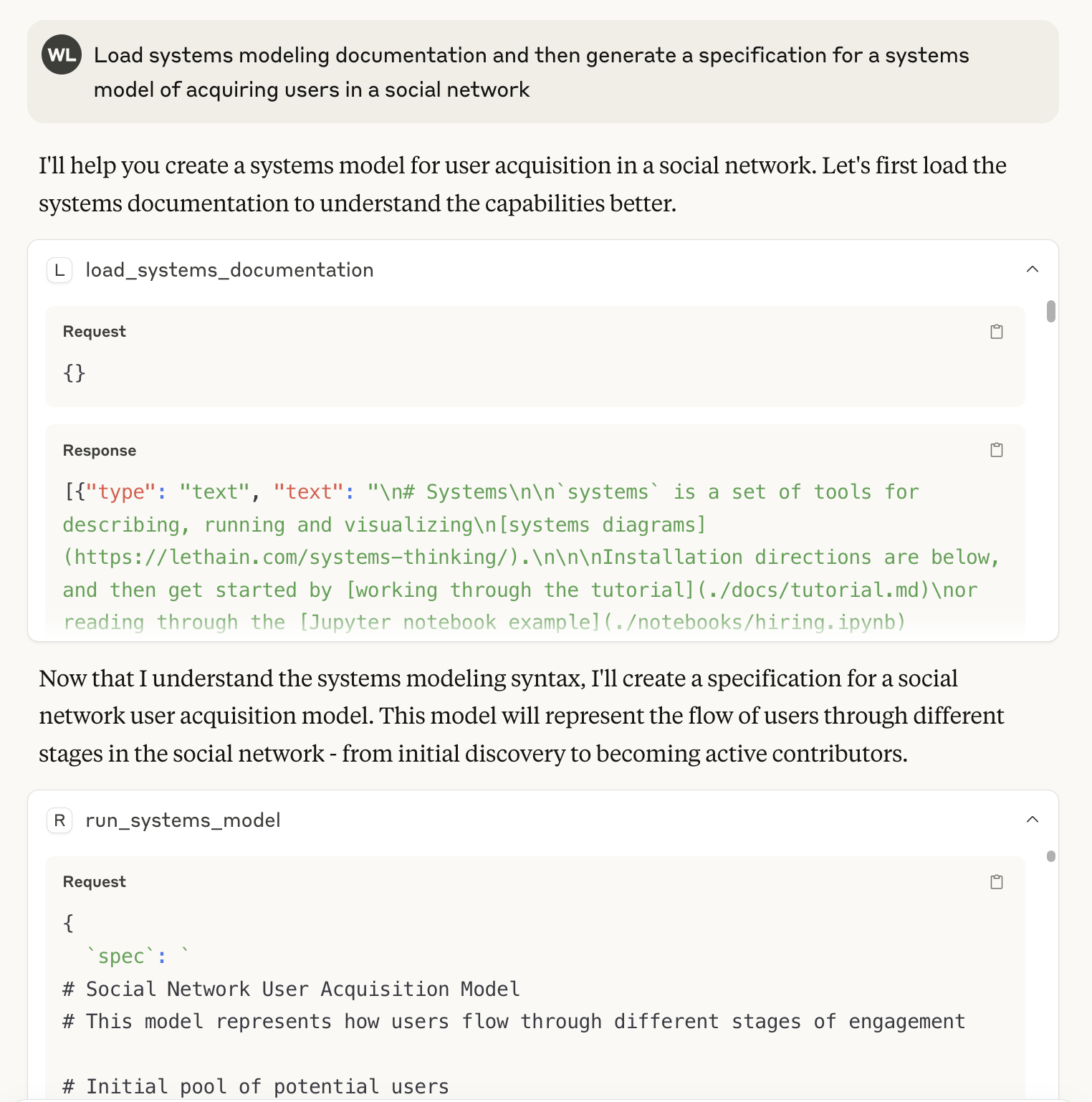

Finally, here is an example of using the load_systems_documentation tool to prime the context window and using it to help generate a systems specification. This is loosely equivalent to including lethain:systems/README.md in the context window, but also includes a handful of additional examples (see the included files in ./docs/).

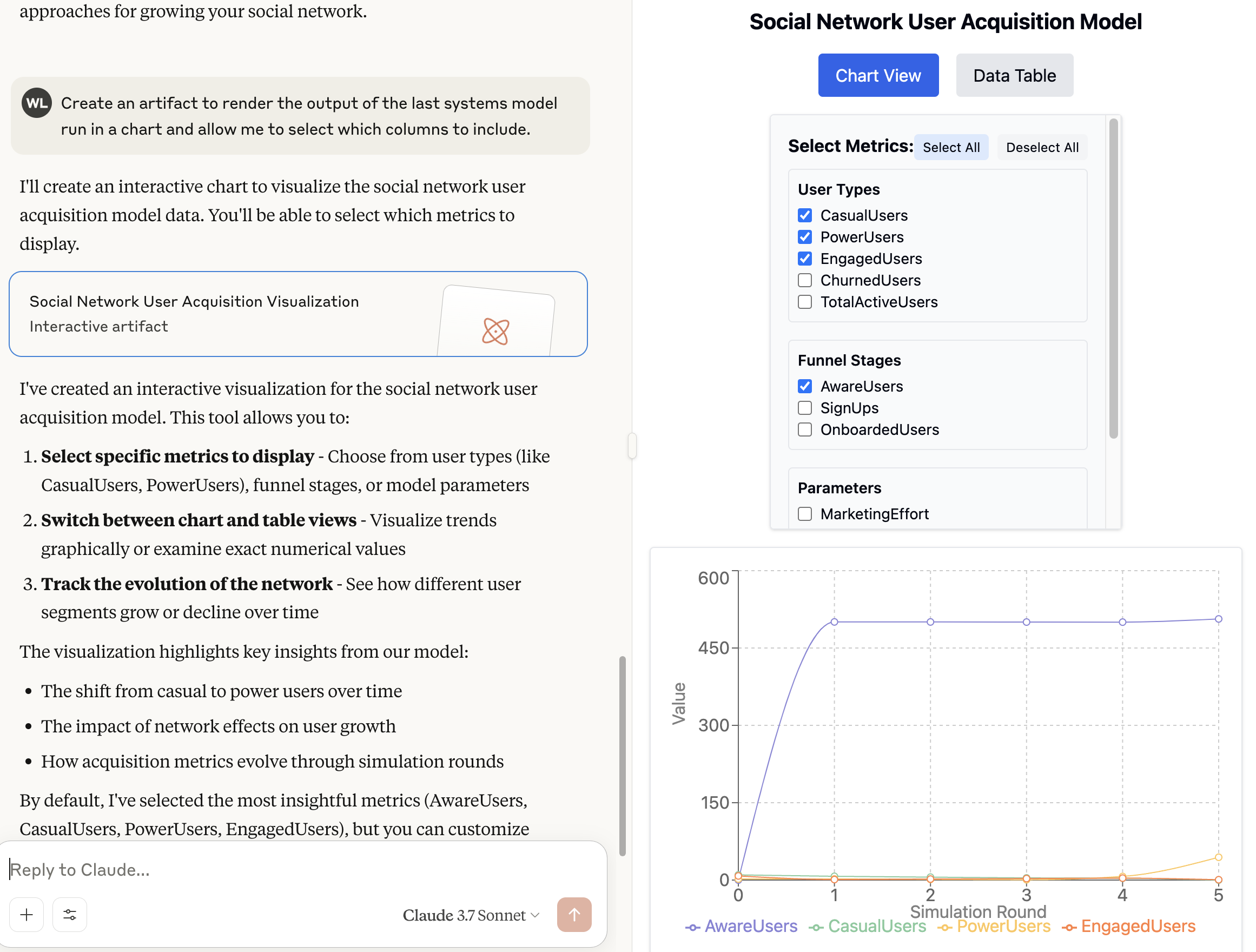
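As a rough illustration of what "priming the context window" amounts to, the sketch below concatenates every markdown file in a directory into one block of text. This is not the server's actual implementation; the function name `gather_docs` and the `*.md` pattern are hypothetical choices for the example.

```python
from pathlib import Path


def gather_docs(docs_dir: Path, pattern: str = "*.md") -> str:
    """Concatenate matching files into one string, each under a filename header."""
    parts = []
    # Sort so the output is stable regardless of filesystem ordering.
    for path in sorted(docs_dir.glob(pattern)):
        text = path.read_text(encoding="utf-8")
        parts.append(f"## {path.name}\n\n{text}")
    return "\n\n".join(parts)
```

Calling `gather_docs(Path("./docs"))` from a checkout would return the combined text, which a tool could then hand back to the model as its result.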

Then you can render the model as before.

The most interesting piece here is that I've never personally used systems to model a social network, but the LLM was able to do a remarkably decent job at generating a specification despite that.


Installation

These instructions describe installation for Claude Desktop on OS X. It should work similarly on other platforms.

1. Install Claude Desktop.

2. Clone systems-mcp into a convenient location. I'm assuming /Users/will/systems-mcp

3. Make sure you have uv installed; you can follow these instructions.

4. Go to Claude Desktop, Settings, Developer, and have it create your MCP config file. Then update your claude_desktop_config.json. (Note that you should replace will with your user, e.g. the output of whoami.)

        cd ~/Library/Application\ Support/Claude/
        vi claude_desktop_config.json

   Then add this section:

        {
          "mcpServers": {
            "systems": {
              "command": "uv",
              "args": [
                "--directory",
                "/Users/will/systems-mcp",
                "run",
                "main.py"
              ]
            }
          }
        }

5. Close Claude and reopen it.

It should work...
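If you would rather not hand-edit the JSON, the following sketch builds the same systems entry and merges it into an existing config dictionary without touching other servers. The helper name `add_systems_server` is hypothetical, and the clone location under your home directory is an assumption; adjust the path to wherever you cloned systems-mcp.

```python
import json
from pathlib import Path


def add_systems_server(config: dict, repo_dir: str) -> dict:
    """Return a copy of config with a "systems" entry under mcpServers."""
    updated = dict(config)
    # Copy the existing servers so other entries are preserved.
    servers = dict(updated.get("mcpServers", {}))
    servers["systems"] = {
        "command": "uv",
        "args": ["--directory", repo_dir, "run", "main.py"],
    }
    updated["mcpServers"] = servers
    return updated


# Assumed clone location: a systems-mcp directory in the current user's home.
repo_dir = str(Path.home() / "systems-mcp")
print(json.dumps(add_systems_server({}, repo_dir), indent=2))
```

The printed JSON can be pasted into claude_desktop_config.json. The sketch deliberately does not write the file itself, so nothing is overwritten by accident.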