Integrates with GitHub Actions for CI/CD pipelines, automated testing, linting, and publishing releases to PyPI.

Uses pre-commit hooks for code quality checks before committing changes to the repository.

Publishes packages automatically to PyPI using trusted publishing with OIDC authentication, allowing users to install the MCP server directly from the Python package repository.

Click on "Install Server".

Wait a few minutes for the server to deploy. Once ready, it will show a "Started" state.

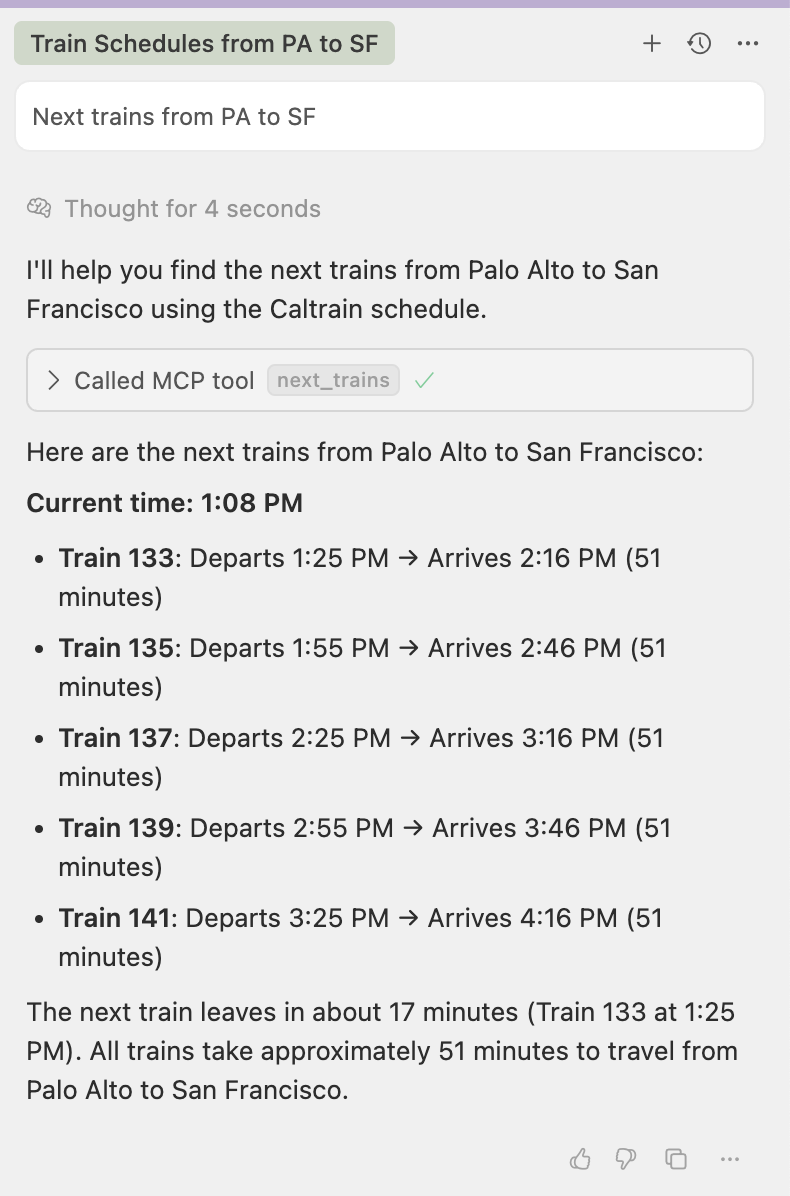

In the chat, type

@followed by the MCP server name and your instructions, e.g., "@Caltrain MCP Servernext trains from Palo Alto to San Francisco"

That's it! The server will respond to your query, and you can continue using it as needed.

Here is a step-by-step guide with screenshots.

🚂 Caltrain MCP Server (Because You Love Waiting for Trains)

A Model Context Protocol (MCP) server that promises to tell you exactly when the next Caltrain will arrive... and then be 10 minutes late anyway. Uses real GTFS data, so at least the disappointment is official!

Features (Or: "Why We Built This Thing")

🚆 "Real-time" train schedules - Get the next departures between any two stations (actual arrival times may vary by +/- infinity)

📍 Station lookup - Because apparently 31 stations is too many to memorize 🤷♀️

🕐 Time-specific queries - Plan your commute with surgical precision, then watch it all fall apart

✨ Smart search - Type 'sf' instead of the full name because we're all lazy here

📊 GTFS-based - We use the same data Caltrain does, so when things go wrong, we can blame them together


Setup (The Fun Part 🙄)

Install dependencies (aka "More stuff to break"):

```bash
# Install uv if you haven't already (because pip is apparently too mainstream now)
curl -LsSf https://astral.sh/uv/install.sh | sh

# Install dependencies using uv (fingers crossed it actually works)
uv sync
```

Get that sweet, sweet GTFS data: The server expects Caltrain GTFS data in the src/caltrain_mcp/data/caltrain-ca-us/ directory. Because apparently we can't just ask the trains nicely where they are.

```bash
uv run python scripts/fetch_gtfs.py
```

This magical script downloads files that contain:

stops.txt - All the places trains pretend to stop

trips.txt - Theoretical journeys through space and time

stop_times.txt - When trains are supposed to arrive (spoiler: they don't)

calendar.txt - Weekday vs weekend schedules (because trains also need work-life balance)
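Curious what stops.txt actually looks like once parsed? Here is a minimal sketch, assuming the standard GTFS stops.txt columns (stop_id, stop_name, location_type, parent_station): rows with location_type 1 are stations, and platform rows point back at their station through parent_station. This is the station/platform relationship the Technical Details section mentions. The function name group_platforms and the sample rows are made up for illustration; this is not the server's actual loader.

```python
import csv
import io
from collections import defaultdict
from typing import Iterable


def group_platforms(stops_csv: Iterable[str]) -> dict[str, list[str]]:
    """Map each parent station's name to the stop_ids of its platforms.

    Assumes standard GTFS stops.txt columns: location_type "1" marks a
    station, and platforms reference their station via parent_station.
    """
    rows = list(csv.DictReader(stops_csv))
    # Pass 1: remember the human-readable name of every parent station.
    station_names = {
        row["stop_id"]: row["stop_name"]
        for row in rows
        if (row.get("location_type") or "").strip() == "1"
    }
    # Pass 2: attach each platform to the station it points at.
    platforms: dict[str, list[str]] = defaultdict(list)
    for row in rows:
        parent = (row.get("parent_station") or "").strip()
        if parent in station_names:
            platforms[station_names[parent]].append(row["stop_id"])
    return dict(platforms)


# Made-up rows in stops.txt format (hypothetical IDs, not real Caltrain data).
SAMPLE_STOPS = """stop_id,stop_name,location_type,parent_station
place_PALO,Palo Alto,1,
90001,Palo Alto Northbound,0,place_PALO
90002,Palo Alto Southbound,0,place_PALO
"""

if __name__ == "__main__":
    print(group_platforms(io.StringIO(SAMPLE_STOPS)))
```

csv.DictReader keeps every cell as a string, which sidesteps the "is this stop_id an int or a str?" question entirely.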

Usage (Good Luck!)

As an MCP Server (The Real Deal)

This server is designed to be used with MCP clients like Claude Desktop, not run directly by humans (because that would be too easy). Here's how to actually use it:

With Claude Desktop

Add this to your Claude Desktop MCP configuration file:

This will automatically install and run the latest version from PyPI.

Then restart Claude Desktop and you'll have access to Caltrain schedules directly in your conversations!

With Other MCP Clients

Any MCP-compatible client can use this server by starting it with:

The server communicates over stdin/stdout using MCP. It doesn't do anything exciting when run directly - it just sits there waiting for proper MCP messages.
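For the curious, here is a minimal sketch of what a client writes to the server's stdin. MCP messages are JSON-RPC 2.0, one JSON object per line on the stdio transport. The client sends initialize, confirms with notifications/initialized once the server has replied, and then calls a tool. The client name and the protocolVersion value are assumptions, and this code only builds the messages; it does not launch the server.

```python
import json


def frame(message: dict) -> str:
    """Serialize one JSON-RPC message as exactly one line of text."""
    # json.dumps escapes newlines inside strings, so the only raw "\n" is the delimiter.
    return json.dumps(message) + "\n"


def client_messages(origin: str, destination: str) -> list[str]:
    """Lines a client would write to the server's stdin for one next_trains call."""
    initialize = {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "initialize",
        "params": {
            "protocolVersion": "2024-11-05",  # assumed; use what your client supports
            "capabilities": {},
            "clientInfo": {"name": "example-client", "version": "0.1.0"},
        },
    }
    # A notification (no "id"), sent only after the server has answered initialize.
    initialized = {"jsonrpc": "2.0", "method": "notifications/initialized"}
    call = {
        "jsonrpc": "2.0",
        "id": 2,
        "method": "tools/call",
        "params": {
            "name": "next_trains",
            "arguments": {"origin": origin, "destination": destination},
        },
    }
    return [frame(m) for m in (initialize, initialized, call)]


if __name__ == "__main__":
    for line in client_messages("Palo Alto", "sf"):
        print(line, end="")
```

In practice your MCP client handles all of this for you, which is the whole point.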

Testing the Server (For Development)

You can test if this thing actually works by importing it directly:

Available Tools (Your New Best Friends)

next_trains(origin, destination, when_iso=None)

Ask politely when the next train will show up. The server will consult its crystal ball (GTFS data) and give you times that are technically accurate.

Parameters:

origin (str): Where you are now (probably regretting your life choices)

destination (str): Where you want to be (probably anywhere but here)

when_iso (str, optional): When you want to travel, as an ISO-format datetime string (as if time has any meaning in public transit)
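Here is a minimal sketch of how the optional when_iso argument could be interpreted. It assumes that None means "now" and that the string is something datetime.fromisoformat accepts. resolve_when and seconds_after_midnight are hypothetical helpers, not functions from this project. The second helper shows one way to reduce a datetime to a time of day that can be compared against the HH:MM:SS values in stop_times.txt.

```python
from datetime import datetime
from typing import Optional


def resolve_when(when_iso: Optional[str], now: Optional[datetime] = None) -> datetime:
    """Hypothetical: None means 'leave now'; otherwise parse with fromisoformat."""
    if when_iso is None:
        return now if now is not None else datetime.now()
    return datetime.fromisoformat(when_iso)


def seconds_after_midnight(moment: datetime) -> int:
    """Time of day in seconds, a handy unit for comparing with GTFS HH:MM:SS times."""
    return moment.hour * 3600 + moment.minute * 60 + moment.second


if __name__ == "__main__":
    departure = resolve_when("2025-06-02T08:30:00")
    print(departure, seconds_after_midnight(departure))
```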

Examples:

list_stations()

Get a list of all 31 Caltrain stations, because memorizing them is apparently too much to ask.

Returns: A formatted list that will make you realize just how many places this train supposedly goes.

Station Name Recognition (We're Not Mind Readers, But We Try)

The server supports various ways to be lazy about typing station names:

Full names: "San Jose Diridon Station" (for the perfectionists)

Short names: "San Francisco" (for the slightly less perfectionist)

Abbreviations: "sf" → "San Francisco" (for the truly lazy)

Partial matching: "diridon" matches "San Jose Diridon Station" (for when you can't be bothered)
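The matching rules above can be sketched roughly as follows. The alias table, the exact-then-substring order, and the refusal to guess on ambiguous input are all assumptions for illustration. resolve_station is a hypothetical name, and the only abbreviation taken from this README is "sf".

```python
from typing import Optional

# Hypothetical alias table; "sf" is the only abbreviation this README mentions.
ALIASES = {"sf": "san francisco"}

# A few names to try it on (the real list has 31 stations).
STATIONS = ["San Francisco", "South San Francisco", "Palo Alto", "San Jose Diridon Station"]


def resolve_station(query: str, stations: list[str]) -> Optional[str]:
    """Map free-form input to one station name, or None if there is no single match."""
    q = query.strip().lower()
    q = ALIASES.get(q, q)  # expand abbreviations first
    # 1. Exact, case-insensitive match on the name.
    for name in stations:
        if name.lower() == q:
            return name
    # 2. Partial match anywhere in the name, accepted only if it is unambiguous.
    candidates = [name for name in stations if q in name.lower()]
    return candidates[0] if len(candidates) == 1 else None


if __name__ == "__main__":
    for query in ("sf", "diridon", "Palo Alto", "Narnia"):
        print(f"{query!r} -> {resolve_station(query, STATIONS)!r}")
```

Checking exact matches first matters: "san francisco" is a substring of "South San Francisco" too, so a substring-only search would call it ambiguous.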

Available Stations (All 31 Glorious Stops)

The server covers every single Caltrain station because we're completionists:

San Francisco to San Jose (The Main Event):

San Francisco, 22nd Street, Bayshore, South San Francisco, San Bruno, Millbrae, Broadway, Burlingame, San Mateo, Hayward Park, Hillsdale, Belmont, San Carlos, Redwood City, Menlo Park, Palo Alto, Stanford, California Avenue, San Antonio, Mountain View, Sunnyvale, Lawrence, Santa Clara, College Park, San Jose Diridon

San Jose to Gilroy (The "Why Does This Exist?" Extension):

Tamien, Capitol, Blossom Hill, Morgan Hill, San Martin, Gilroy

Sample Output (Prepare to Be Amazed)

Actual arrival times may vary. Side effects may include existential dread and a deep appreciation for remote work.

Technical Details (For the Nerds)

GTFS Processing: We automatically handle the relationship between stations and their platforms (because apparently trains are complicated)

Service Calendar: Respects weekday/weekend schedules (trains also need their beauty rest)

Data Types: Handles the chaos that is mixed integer/string formats in GTFS files

Time Parsing: Supports GTFS times past 24:00:00 (e.g., 25:10:00 for a trip that runs past midnight), for those mythical late-night services

Error Handling: Gracefully fails when you type "Narnia" as a station name
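Here is a minimal sketch of two of the details above, assuming standard GTFS formats. Stop times are HH:MM:SS strings whose hour can go past 23 for trips that run after midnight. calendar.txt rows carry a YYYYMMDD date window plus one 0/1 column per weekday. Every value is passed through str(), so both integer and string cells work. parse_gtfs_time, parse_gtfs_date and runs_on are hypothetical helpers, and holiday exceptions from calendar_dates.txt are deliberately left out.

```python
from datetime import date, datetime, timedelta

# calendar.txt weekday columns, indexed like date.weekday() (Monday == 0).
WEEKDAY_COLUMNS = ("monday", "tuesday", "wednesday", "thursday", "friday", "saturday", "sunday")


def parse_gtfs_time(value: object) -> timedelta:
    """Parse GTFS HH:MM:SS; hours may exceed 23 for trips that run past midnight."""
    hours, minutes, seconds = (int(part) for part in str(value).strip().split(":"))
    return timedelta(hours=hours, minutes=minutes, seconds=seconds)


def parse_gtfs_date(value: object) -> date:
    """Parse a GTFS YYYYMMDD date, whether it arrives as a string or an int."""
    return datetime.strptime(str(value).strip(), "%Y%m%d").date()


def runs_on(calendar_row: dict, day: date) -> bool:
    """True if a calendar.txt service runs on `day` (ignores calendar_dates.txt)."""
    start = parse_gtfs_date(calendar_row["start_date"])
    end = parse_gtfs_date(calendar_row["end_date"])
    weekday_flag = str(calendar_row[WEEKDAY_COLUMNS[day.weekday()]]).strip()
    return start <= day <= end and weekday_flag == "1"


if __name__ == "__main__":
    weekday_service = {
        "monday": 1, "tuesday": 1, "wednesday": 1, "thursday": 1, "friday": 1,
        "saturday": "0", "sunday": "0", "start_date": 20250101, "end_date": "20251231",
    }
    print(parse_gtfs_time("25:10:00"))  # 25:10:00 means 01:10 on the next calendar day
    print(runs_on(weekday_service, date(2025, 6, 2)))  # 2025-06-02 is a Monday
```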

Project Structure (The Organized Chaos)

Development & Testing (For When Things Inevitably Break)

Code Quality & CI/CD

This project uses modern Python tooling to keep the code clean and maintainable:

Ruff: Lightning-fast linting and formatting (because life's too short for slow tools)

MyPy: Type checking (because guessing types is for amateurs)

Pytest: Testing framework with coverage reporting

Release Process (Automated Awesomeness)

This project uses automated versioning and publishing:

Semantic Versioning: Version numbers are automatically determined from commit messages using Conventional Commits

Automatic Tagging: When you push to main, semantic-release creates version tags automatically

PyPI Publishing: Tagged releases are automatically built and published to PyPI via GitHub Actions

Trusted Publishing: Uses OIDC authentication with PyPI (no API tokens needed!)
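Here is a rough sketch of how a version bump falls out of commit messages under the usual Conventional Commits rules: feat means minor, fix or perf means patch, and a ! after the type or a BREAKING CHANGE footer means major. This mirrors common semantic-release defaults, but the project's actual release configuration may use different rules. bump_for and apply_bump are hypothetical names.

```python
import re

# Conventional Commits header: type, optional (scope), optional "!", then ": ".
HEADER = re.compile(r"^(?P<type>[a-z]+)(?:\([^)]*\))?(?P<breaking>!)?: ")
LEVELS = ("none", "patch", "minor", "major")


def bump_for(messages: list[str]) -> str:
    """Highest bump implied by a batch of commit messages (common default rules)."""
    level = 0
    for message in messages:
        match = HEADER.match(message)
        if match is None:
            continue  # not a conventional commit: ignored for versioning
        if match.group("breaking") or "BREAKING CHANGE:" in message:
            level = max(level, 3)
        elif match.group("type") == "feat":
            level = max(level, 2)
        elif match.group("type") in ("fix", "perf"):
            level = max(level, 1)
    return LEVELS[level]


def apply_bump(version: str, bump: str) -> str:
    """Apply a bump to a MAJOR.MINOR.PATCH version string."""
    major, minor, patch = (int(part) for part in version.split("."))
    if bump == "major":
        return f"{major + 1}.0.0"
    if bump == "minor":
        return f"{major}.{minor + 1}.0"
    if bump == "patch":
        return f"{major}.{minor}.{patch + 1}"
    return version


if __name__ == "__main__":
    commits = ["feat(stations): accept 'sf' alias", "fix: handle 24+ hour times"]
    print(apply_bump("1.2.3", bump_for(commits)))
```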

Making a Release

Just commit using conventional commit format and push to main:

The semantic-release workflow will:

Analyze your commit messages

Determine the appropriate version bump

Create a git tag (e.g., v1.2.3)

Generate a changelog

Trigger the release workflow to publish to PyPI

Local Testing

Test the build process locally before pushing:

GitHub Actions CI

Every PR and push to main triggers automatic checks:

✅ Linting: Ruff checks for code quality issues

✅ Formatting: Ensures consistent code style

✅ Type Checking: MyPy validates type annotations

✅ Tests: Full test suite with coverage reporting

✅ Coverage: Test coverage reporting in CI logs

The CI will politely reject your PR if any checks fail, because standards matter.
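To catch failures before the CI does, here is a minimal sketch that runs roughly the same checks locally. The exact commands are assumptions about how the workflow invokes these tools, including mypy src and pytest --cov (which needs the pytest-cov plugin). Check the project's workflow files for the real invocations. CHECKS, run_checks and main are hypothetical names.

```python
import subprocess
import sys

# Assumed local equivalents of the CI steps; adjust to match the real workflow.
CHECKS = [
    ("Linting", ["uv", "run", "ruff", "check", "."]),
    ("Formatting", ["uv", "run", "ruff", "format", "--check", "."]),
    ("Type checking", ["uv", "run", "mypy", "src"]),
    ("Tests + coverage", ["uv", "run", "pytest", "--cov"]),
]


def run_checks(checks: list[tuple[str, list[str]]]) -> list[str]:
    """Run every check (even after a failure) and return the labels that failed."""
    failed = []
    for label, command in checks:
        print(f"==> {label}: {' '.join(command)}", flush=True)
        try:
            ok = subprocess.run(command).returncode == 0
        except FileNotFoundError:  # e.g. uv is not installed
            ok = False
        if not ok:
            failed.append(label)
    return failed


def main() -> int:
    """Return a shell-style exit code: 0 if every check passed, 1 otherwise."""
    failures = run_checks(CHECKS)
    if failures:
        print("Failed:", ", ".join(failures), file=sys.stderr)
    return 1 if failures else 0
```

From a script at the repository root, call sys.exit(main()). Running every check instead of stopping at the first failure gives you the full list of disappointments in one go.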

MCP Integration (For the AI Overlords)

This server implements the Model Context Protocol (MCP), which means it's designed to work seamlessly with AI assistants and other MCP clients. Once configured:

Claude Desktop: Ask Claude about train schedules directly in conversation

Other MCP Clients: Any MCP-compatible tool can access Caltrain data

Conversational Integration: Your AI can check scheduled departures, suggest routes, and help plan trips

Natural Language: No need to remember station names or command syntax

The server exposes two main tools:

next_trains - Get upcoming departures between stations

list_stations - Browse all available Caltrain stations

So your AI assistant can now disappoint you about train schedules just like a real human would! The future is truly here.

License (The Legal Stuff)

This project uses official Caltrain GTFS data. If something goes wrong, blame them, not us. We're just the messenger.

Built with ❤️ and a concerning amount of caffeine in the Bay Area, where public transit is both a necessity and a source of eternal suffering.