
Fabric MCP

Fabric MCP is a Python-based MCP server for interacting with Microsoft Fabric APIs. It provides utilities for managing workspaces, lakehouses, warehouses, and tables (more features are planned). This project is inspired by https://github.com/Augustab/microsoft_fabric_mcp/tree/main.

Features

- List workspaces, lakehouses, warehouses, and tables.
- Retrieve schemas and metadata for Delta tables.
- Generate markdown documentation for Delta tables.
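The Delta features above build on the fact that a Delta table's schema lives in its transaction log: each commit under `_delta_log/` is a JSON-lines file, and the most recent `metaData` action carries the schema as a Spark StructType JSON string (`schemaString`). The following is a minimal, standard-library-only sketch of reading that schema from a local copy of a table and rendering it as a Markdown table. It is one possible approach, not necessarily how this server does it; the functions `latest_schema`, `type_name`, and `schema_to_markdown` are hypothetical, and the sketch ignores checkpoint files, so it assumes every JSON commit is still present.

```python
import json
from pathlib import Path


def latest_schema(table_path: str) -> dict:
    """Return the schema from the latest metaData action in a Delta table's log.

    Simplification (assumption): only JSON commit files are scanned and
    checkpoint (.parquet) files are ignored, so tables whose early commits
    were cleaned up after checkpointing are not handled.
    """
    log_dir = Path(table_path) / "_delta_log"
    schema = None
    # Commit files are zero-padded version numbers, so lexical order is version order.
    for commit in sorted(log_dir.glob("*.json")):
        for line in commit.read_text(encoding="utf-8").splitlines():
            if not line.strip():
                continue
            action = json.loads(line)
            if "metaData" in action:
                # A later metaData action replaces any earlier schema.
                schema = json.loads(action["metaData"]["schemaString"])
    if schema is None:
        raise ValueError(f"no metaData action found under {log_dir}")
    return schema


def type_name(field_type) -> str:
    # Primitive types are plain strings ("long", "string", ...);
    # nested types are objects such as {"type": "array", ...}.
    return field_type if isinstance(field_type, str) else field_type["type"]


def schema_to_markdown(table_name: str, schema: dict) -> str:
    """Render the top-level columns of a StructType schema as a Markdown table."""
    lines = [
        f"## {table_name}",
        "",
        "| Column | Type | Nullable |",
        "|---|---|---|",
    ]
    for field in schema["fields"]:
        lines.append(
            f"| {field['name']} | {type_name(field['type'])} | {field['nullable']} |"
        )
    return "\n".join(lines)
```

Only top-level columns are listed; nested struct, array, and map columns show their outer type name, which keeps the generated documentation compact.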

Requirements

- Python 3.12 or higher
- Azure credentials for authentication
- uv (from Astral): Installation instructions
- Azure CLI: Installation instructions
- Optional: Node.js and npm for running the MCP inspector: Installation instructions

Installation

Clone the repository:

```bash
git clone https://github.com/your-repo/fabric-mcp.git
cd fabric-mcp
```

Set up your virtual environment and install dependencies:

```bash
uv sync
```

Alternatively, install the dependencies with pip:

```bash
pip install -r requirements.txt
```

Usage

Connect to MS Fabric

Run the Azure CLI command to log in:

```bash
az login --scope https://api.fabric.microsoft.com/.default
```

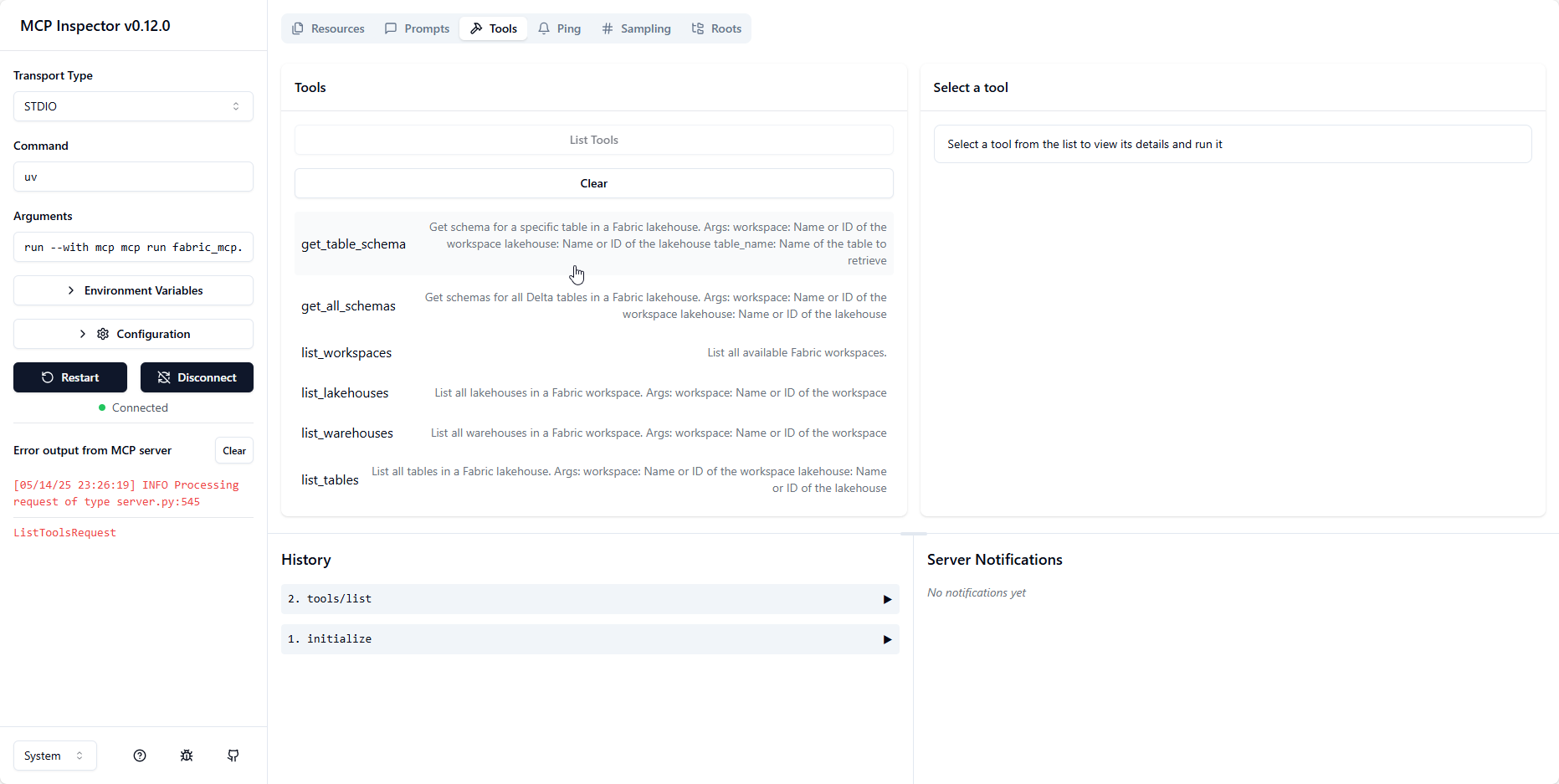
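Once `az login` has succeeded, other processes on the machine can reuse that session. The sketch below, using only the Python standard library, asks the Azure CLI for a Fabric-scoped token with `az account get-access-token` and uses it to call the Fabric REST endpoint `GET https://api.fabric.microsoft.com/v1/workspaces`. It illustrates the authentication flow under these assumptions and is not how `fabric_mcp.py` is implemented; the helper names are hypothetical, and pagination of the response is not handled.

```python
import json
import shutil
import subprocess
import urllib.request

FABRIC_API = "https://api.fabric.microsoft.com/v1"
FABRIC_SCOPE = "https://api.fabric.microsoft.com/.default"


def parse_cli_token(cli_output: str) -> str:
    """Extract the bearer token from `az account get-access-token` JSON output."""
    return json.loads(cli_output)["accessToken"]


def get_fabric_token() -> str:
    """Reuse the existing `az login` session to obtain a Fabric API token."""
    az = shutil.which("az")  # on Windows this resolves az.cmd via PATHEXT
    if az is None:
        raise RuntimeError("Azure CLI (az) not found on PATH")
    result = subprocess.run(
        [az, "account", "get-access-token", "--scope", FABRIC_SCOPE, "--output", "json"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_cli_token(result.stdout)


def build_request(token: str, path: str = "/workspaces") -> urllib.request.Request:
    """Build an authenticated GET request against the Fabric REST API."""
    return urllib.request.Request(
        FABRIC_API + path,
        headers={"Authorization": f"Bearer {token}"},
    )


def workspace_names(payload: dict) -> list[str]:
    # List endpoints return their items under "value"; continuation pages are ignored here.
    return [item["displayName"] for item in payload.get("value", [])]


def list_workspace_names() -> list[str]:
    """Hypothetical end-to-end call: token from the CLI, then one page of workspaces."""
    with urllib.request.urlopen(build_request(get_fabric_token())) as resp:
        return workspace_names(json.load(resp))
```

Keeping the request building and response parsing in separate pure functions makes them easy to check without network access or a signed-in CLI.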

Running the MCP Server and connecting to it using the MCP inspector

Run the MCP server with the inspector exposed for testing:

```bash
uv run --with mcp mcp dev fabric_mcp.py
```

This will start the server and expose the inspector at http://localhost:6274.


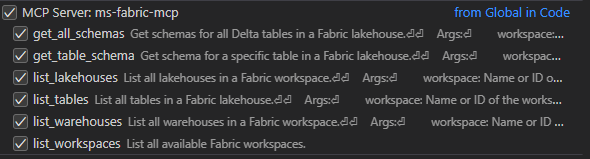

To use the MCP server with VS Code, add a server entry to your VS Code settings.json file:

```json
{
  // Existing configurations...
  "mcp": {
    "servers": {
      "ms-fabric-mcp": {
        "type": "stdio",
        "command": "<FullPathToProjectFolder>\\.venv\\Scripts\\python.exe",
        "args": [
          "<FullPathToProjectFolder>\\fabric_mcp.py"
        ]
      }
    }
  }
}
```

This configuration lets you run and connect to the MCP server directly from VS Code and gives Copilot access to the server's tools.
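Hand-editing Windows paths in JSON is error-prone: every backslash must be doubled, and any stray whitespace inside a string, such as a space after python.exe, becomes part of the command. As a minimal sketch, a small Python helper can build the server entry and let `json.dumps` handle the escaping. The helper `mcp_settings` and the example path `C:\src\fabric-mcp` are hypothetical placeholders; the entry layout simply mirrors the configuration shown above.

```python
import json
from pathlib import PureWindowsPath


def mcp_settings(project_dir: str, server_name: str = "ms-fabric-mcp") -> dict:
    """Build the "mcp" settings entry for a Windows checkout of this project."""
    root = PureWindowsPath(project_dir)
    return {
        "mcp": {
            "servers": {
                server_name: {
                    "type": "stdio",
                    # Python interpreter inside the .venv created by `uv sync`
                    "command": str(root / ".venv" / "Scripts" / "python.exe"),
                    "args": [str(root / "fabric_mcp.py")],
                }
            }
        }
    }


# json.dumps doubles every backslash, producing valid JSON to paste into the settings file.
print(json.dumps(mcp_settings(r"C:\src\fabric-mcp"), indent=2))
```

Using `PureWindowsPath` keeps the output Windows-style even if the helper itself runs on Linux or macOS.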

In Agent mode in Copilot Chat, you can invoke any tool the MCP server exposes by prefixing its name with #, for example #list_workspaces.

Available Tools

The following tools are available via the MCP server:

- `list_workspaces`: List all available Fabric workspaces.
- `list_lakehouses(workspace)`: List all lakehouses in a specified workspace.
- `list_warehouses(workspace)`: List all warehouses in a specified workspace.
- `list_tables(workspace, lakehouse)`: List all tables in a specified lakehouse.
- `get_lakehouse_table_schema(workspace, lakehouse, table_name)`: Retrieve the schema and metadata for a specific Delta table.
- `get_all_lakehouse_schemas(workspace, lakehouse)`: Retrieve schemas and metadata for all Delta tables in a lakehouse.
- `set_lakehouse(workspace, lakehouse)`: Set the current lakehouse context.
- `set_warehouse(workspace, warehouse)`: Set the current warehouse context.
- `set_workspace(workspace)`: Set the current workspace context.

License

This project is licensed under the MIT License. See the LICENSE file for details.

