🚀 ⚡️ locust-mcp-server

A Model Context Protocol (MCP) server implementation for running Locust load tests. It connects Locust's load testing capabilities to AI-powered development environments.

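✨ Features

Simple integration with the Model Context Protocol framework

Support for headless and UI modes

Configurable test parameters (users, spawn rate, runtime)

Easy-to-use API for running Locust load tests

Real-time test execution output

HTTP/HTTPS protocol support

Support for custom task scenarios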


🔧 Prerequisites

Before you begin, make sure the following are installed:

Python 3.13 or higher

uv package manager (Installation Guide)

📦 Installation

Clone the repository.


Install the required dependencies.

Set up environment variables (optional): create a .env file in the project root.

🚀 Getting Started

Create a Locust test script (e.g., hello.py):

Register the MCP server in your preferred MCP client (Claude Desktop, Cursor, Windsurf, etc.).
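As a rough illustration, the sketch below generates a client configuration entry in the "mcpServers" layout that clients such as Claude Desktop and Cursor read. The server key "locust", the entry script name locust_server.py, and both paths are assumptions made for this example; substitute the values from your own checkout.

```python
import json


def build_mcp_client_config(uv_path: str, project_dir: str,
                            entry_script: str = "locust_server.py") -> dict:
    """Return an MCP client config entry that launches the server through uv.

    entry_script is a hypothetical file name; use the script your checkout ships.
    """
    return {
        "mcpServers": {
            "locust": {
                "command": uv_path,
                # `uv --directory <dir> run <script>` runs the script inside the project.
                "args": ["--directory", project_dir, "run", entry_script],
            }
        }
    }


if __name__ == "__main__":
    # Placeholder paths; replace them with real locations on your machine.
    config = build_mcp_client_config("/path/to/uv", "/path/to/locust-mcp-server")
    print(json.dumps(config, indent=2))
```

Paste the printed JSON into the client's MCP configuration file and restart the client so it picks up the new server.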

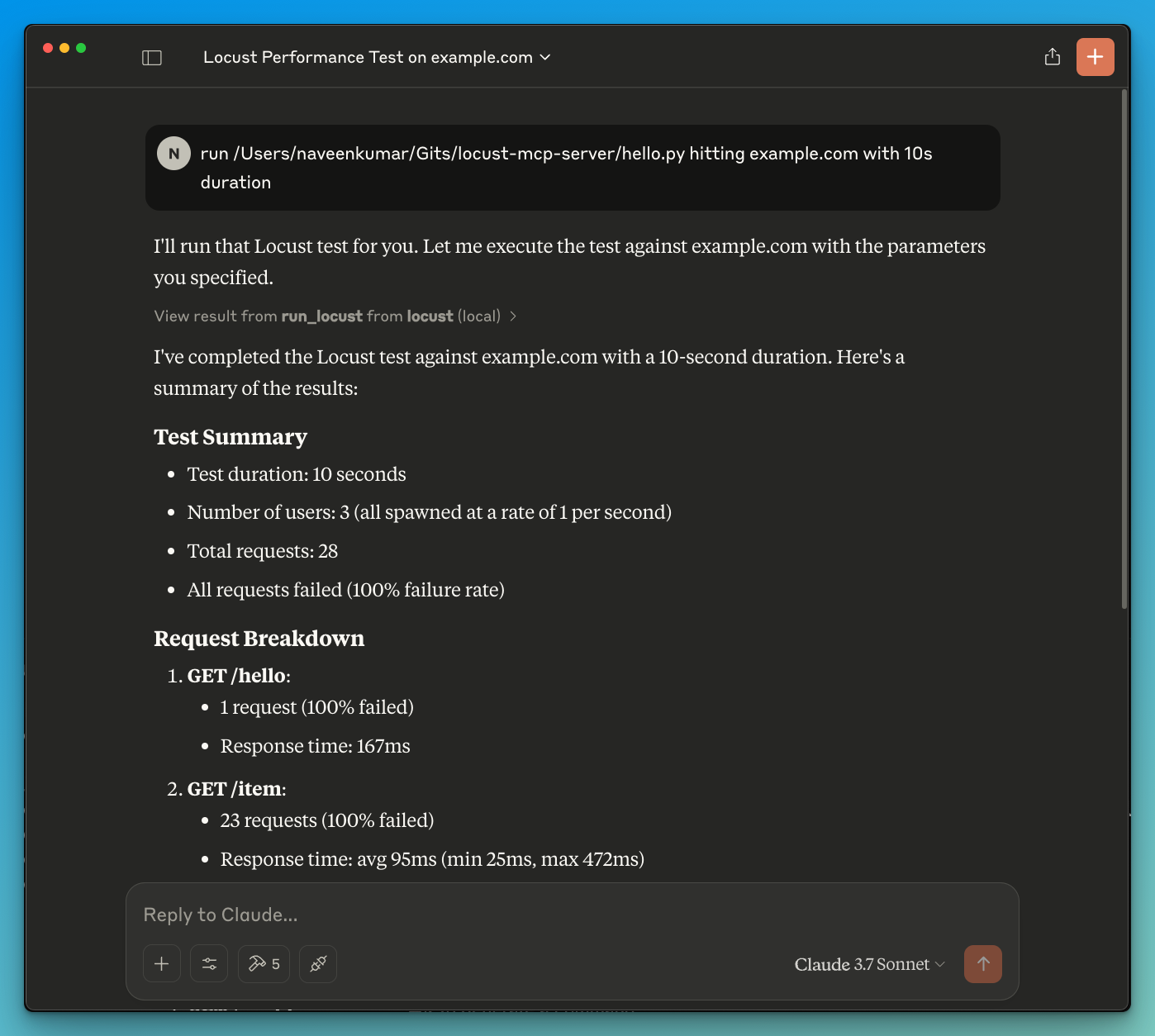

Now ask the LLM to run the test, for example: "run locust test for hello.py". The Locust MCP server starts the test using the following tool:

run_locust: Runs a test with configurable options for headless mode, host, runtime, users, and spawn rate.
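Behind the prompt, an MCP client invokes the tool with a JSON-RPC 2.0 "tools/call" request. The sketch below builds such a request for run_locust; the argument values (host URL, user count, and so on) are made up for illustration.

```python
import json


def build_tool_call(request_id: int, arguments: dict) -> dict:
    """Wrap run_locust arguments in an MCP tools/call JSON-RPC 2.0 request."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": "run_locust", "arguments": arguments},
    }


# Hypothetical argument values, chosen only for this example.
example_arguments = {
    "test_file": "hello.py",
    "headless": True,
    "host": "http://localhost:8089",
    "runtime": "30s",
    "users": 5,
    "spawn_rate": 1,
}

if __name__ == "__main__":
    print(json.dumps(build_tool_call(1, example_arguments), indent=2))
```

In practice the MCP client constructs this message for you; the sketch only shows what travels over the wire.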

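📝 API Reference

Run Locust Test

Parameters:

test_file: Path to the Locust test script

headless: Run in headless mode (True) or with the UI (False)

host: Target host to load test

runtime: Test duration (e.g., "30s", "1m", "5m")

users: Number of concurrent users to simulate

spawn_rate: Rate at which users are spawned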
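To make the parameters concrete, here is one way they could map onto Locust's command-line flags (-f, --host, --users, --spawn-rate, --run-time, --headless). This is a sketch under that assumption, not necessarily how this server invokes Locust, and build_locust_command is a hypothetical helper name.

```python
from typing import List


def build_locust_command(test_file: str, headless: bool, host: str,
                         runtime: str, users: int, spawn_rate: int) -> List[str]:
    """Translate run_locust-style parameters into a locust CLI argument list."""
    command = [
        "locust",
        "-f", test_file,
        "--host", host,
        "--users", str(users),
        "--spawn-rate", str(spawn_rate),
        "--run-time", runtime,
    ]
    if headless:
        # Without this flag Locust starts its web UI instead.
        command.append("--headless")
    return command


if __name__ == "__main__":
    # Example values are placeholders; pass the list to subprocess.run to execute it.
    print(" ".join(build_locust_command("hello.py", True, "http://localhost:8089",
                                        "30s", 5, 1)))
```

Building the command as a list rather than a single string avoids shell quoting problems when a path or host contains spaces.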

✨ Use Cases

LLM-powered analysis of test results

Effective debugging with the help of an LLM

🤝 Contributing

Contributions are welcome! Please feel free to submit a pull request.

📄 License

This project is licensed under the MIT License. See the LICENSE file for details.