Enables configuration of the NebulaGraph MCP server via environment variables and .env files for storing connection details and credentials.

Hosts the server's repository and provides CI/CD workflows for testing and linting through GitHub Actions.

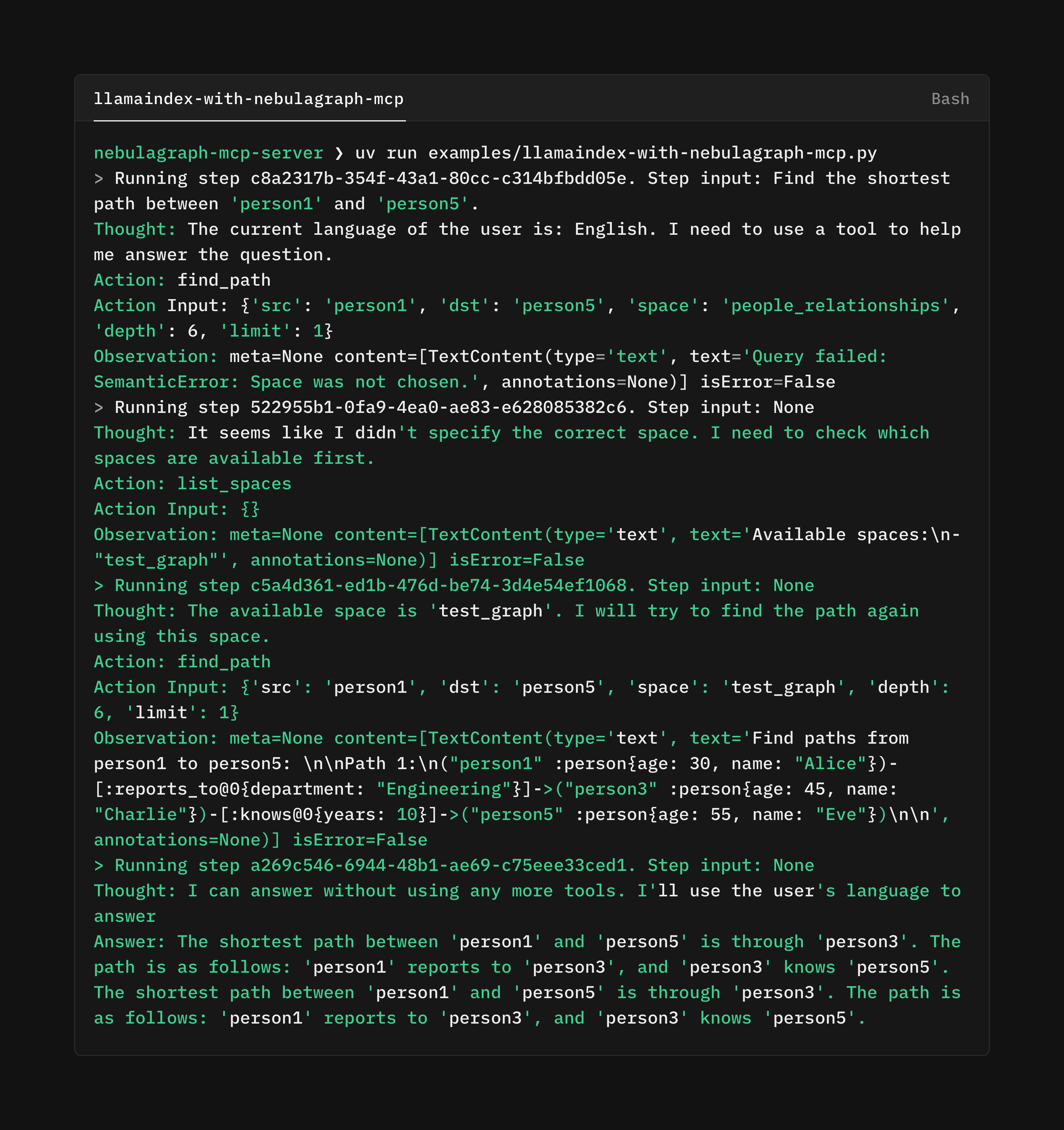

Provides access to NebulaGraph databases, enabling graph exploration through schema discovery, query execution, and graph algorithm shortcuts for AI agents to interact with graph data.

Enables distribution and installation of the NebulaGraph MCP server through the Python Package Index, allowing users to easily install the server using pip.

Runs on the Python runtime and supports multiple Python versions, as indicated by the PyPI version badge.

Click on "Install Server".

Wait a few minutes for the server to deploy. Once ready, it will show a "Started" state.

In the chat, type @ followed by the MCP server name and your instructions, e.g., "@NebulaGraph MCP Server show me the schema of the social network graph".

That's it! The server will respond to your query, and you can continue using it as needed.


Model Context Protocol Server for NebulaGraph

A Model Context Protocol (MCP) server implementation that provides access to NebulaGraph.

Features

Seamless access to NebulaGraph 3.x.

Ready for graph exploration: schema discovery, query execution, and a few shortcut algorithms.

Follows the Model Context Protocol, ready to integrate with LLM tooling systems.

Simple command-line interface with support for configuration via environment variables and .env files.


Installation

Usage

nebulagraph-mcp-server will load configs from .env, for example:

It requires the value of NEBULA_VERSION to be equal to v3 until we are ready for v5.
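The configuration rule above can be illustrated with a minimal sketch. It is not the server's actual loader: it only shows one plausible way to read KEY=VALUE pairs from .env-style text, let environment variables override them, and reject any NEBULA_VERSION other than v3. Only NEBULA_VERSION is named in this README; the helper names (`parse_dotenv`, `load_config`), the other keys (NEBULA_HOST, NEBULA_PORT), their values, and the choice that the environment wins over .env are all assumptions made for illustration.

```python
import os


def parse_dotenv(text):
    """Parse KEY=VALUE lines, skipping blank lines and # comments."""
    config = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding whitespace and optional quotes around the value.
        config[key.strip()] = value.strip().strip('"').strip("'")
    return config


def load_config(dotenv_text, environ=None):
    """Merge .env values with NEBULA_* environment variables and validate.

    Assumption: environment variables take precedence over .env values.
    """
    environ = os.environ if environ is None else environ
    config = parse_dotenv(dotenv_text)
    for key, value in environ.items():
        if key.startswith("NEBULA_"):
            config[key] = value
    version = config.get("NEBULA_VERSION")
    if version != "v3":
        raise ValueError(f"NEBULA_VERSION must be 'v3', got {version!r}")
    return config


# Hypothetical .env contents; only NEBULA_VERSION comes from this README.
EXAMPLE_DOTENV = """
# Connection settings (key names other than NEBULA_VERSION are illustrative)
NEBULA_VERSION=v3
NEBULA_HOST=127.0.0.1
NEBULA_PORT=9669
"""

if __name__ == "__main__":
    print(load_config(EXAMPLE_DOTENV, environ={}))
```

Passing an explicit `environ` mapping keeps the sketch testable without touching the real process environment; calling `load_config(text)` with no second argument falls back to `os.environ`.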

Development

Credits

The layout and workflow of this repo are copied from mcp-server-opendal.