

MCP Wolfram Alpha (Server + Client)

Seamlessly integrate Wolfram Alpha into your chat applications.

This project implements an MCP (Model Context Protocol) server that interfaces with the Wolfram Alpha API. It lets chat-based applications run computational queries and retrieve structured knowledge, so a conversational assistant can hand math, science, and data questions off to Wolfram Alpha.
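For orientation, the sketch below shows what a single Wolfram|Alpha request looks like at the HTTP level. It assumes the Full Results API (`https://api.wolframalpha.com/v2/query` with `appid`, `input`, and `output` parameters); this README does not say which Wolfram|Alpha endpoint or client library the server actually uses, and `build_query_url` is a hypothetical helper. It only builds the URL and makes no network call.

```python
from urllib.parse import urlencode

# Base URL of the Wolfram|Alpha Full Results API (v2). Whether this server uses
# this endpoint or another Wolfram|Alpha API is an assumption of this sketch.
FULL_RESULTS_URL = "https://api.wolframalpha.com/v2/query"


def build_query_url(app_id: str, query: str) -> str:
    """Return a Full Results API URL for `query`, requesting JSON output."""
    params = {
        "appid": app_id,   # the WOLFRAM_API_KEY value (a Wolfram|Alpha AppID)
        "input": query,    # natural-language or math input
        "output": "json",  # ask for JSON instead of the default XML
    }
    # urlencode percent-escapes the query, e.g. "^" -> %5E and "+" -> %2B
    return f"{FULL_RESULTS_URL}?{urlencode(params)}"


if __name__ == "__main__":
    print(build_query_url("DEMO-APPID", "derivative of x^2 + 3x - 5"))
```

Percent-encoding matters here because math input routinely contains `+`, which would otherwise be read as a space.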

The repository also includes an example MCP client that uses Gemini via LangChain, demonstrating how to connect large language models to the MCP server for real-time interactions with Wolfram Alpha’s knowledge engine.


Features

Wolfram|Alpha Integration for math, science, and data queries.

Modular Architecture Easily extendable to support additional APIs and functionalities.

Multi-Client Support Seamlessly handle interactions from multiple clients or interfaces.

MCP-Client example using Gemini (via LangChain).

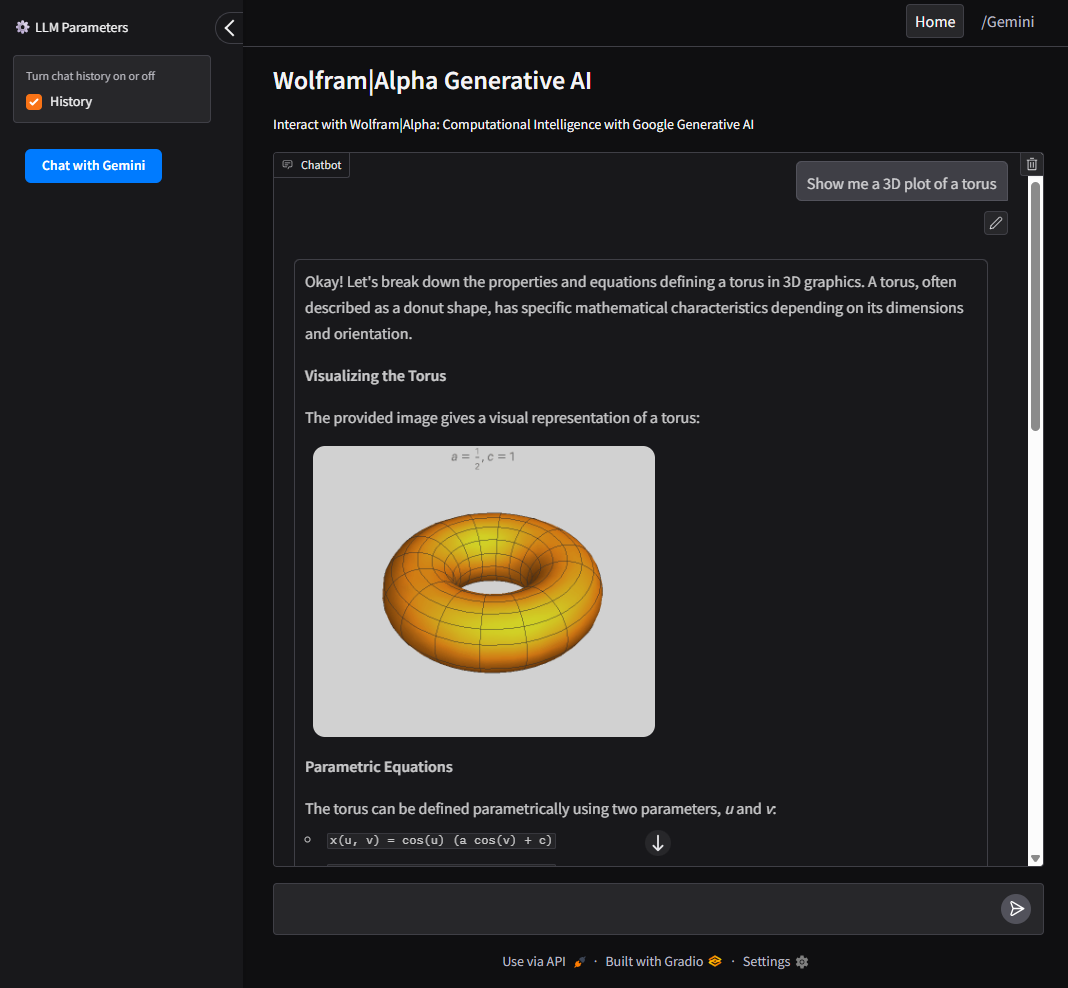

UI Support using Gradio for a user-friendly web interface to interact with Google AI and Wolfram Alpha MCP server.

Installation

Clone the Repo

Set Up Environment Variables

Create a .env file based on the example:

WOLFRAM_API_KEY=your_wolframalpha_appid

GeminiAPI=your_google_gemini_api_key

GeminiAPI is optional for running the server on its own; the Gemini client described below requires it.
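To catch a missing key before launching anything, the hypothetical stdlib-only sketch below parses simple `KEY=value` lines from `.env` and reports which variables are absent. The project itself may load `.env` differently (for example with `python-dotenv`); `parse_env_file` and `missing_keys` are illustrative names, not part of the repository.

```python
from pathlib import Path

# Hypothetical helper: the project may load .env differently (e.g. python-dotenv).
REQUIRED_KEYS = ("WOLFRAM_API_KEY",)
CLIENT_KEYS = ("GeminiAPI",)  # only needed for the Gemini client / Gradio UI


def parse_env_file(path: Path) -> dict[str, str]:
    """Parse simple KEY=value lines, skipping blanks and # comments."""
    values: dict[str, str] = {}
    for raw in path.read_text(encoding="utf-8").splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding whitespace and optional single or double quotes
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_keys(values: dict[str, str], use_client: bool) -> list[str]:
    """Return the names of required variables that are absent or empty."""
    needed = REQUIRED_KEYS + (CLIENT_KEYS if use_client else ())
    return [key for key in needed if not values.get(key)]


if __name__ == "__main__":
    env_path = Path(".env")
    env = parse_env_file(env_path) if env_path.exists() else {}
    print("Missing:", missing_keys(env, use_client=True) or "none")
```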

Install Requirements

Install the required dependencies with uv:

Ensure uv is installed.

Configuration

To use with the VSCode MCP Server:

Create a configuration file at .vscode/mcp.json in your project root. Use the example provided in configs/vscode_mcp.json as a template. For more details, refer to the VSCode MCP Server Guide.
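As a rough illustration of the file's shape, the sketch below writes a `.vscode/mcp.json` with a single stdio server under VS Code's top-level `servers` key. The server name `WolframAlphaServer`, the `uv run` command, and `path/to/server.py` are placeholders assumed for this example; copy the real values from `configs/vscode_mcp.json`.

```python
import json
from pathlib import Path

# Hypothetical values: take the real command and arguments from
# configs/vscode_mcp.json; the name and script path here are placeholders.
SERVER_NAME = "WolframAlphaServer"
COMMAND = "uv"
ARGS = ["run", "path/to/server.py"]


def build_vscode_config() -> dict:
    """Return a VS Code MCP config with one stdio server entry."""
    return {
        "servers": {
            SERVER_NAME: {
                "type": "stdio",  # VS Code launches the server as a subprocess
                "command": COMMAND,
                "args": ARGS,
            }
        }
    }


def write_config(root: Path) -> Path:
    """Write .vscode/mcp.json under `root`, creating the folder if needed."""
    target = root / ".vscode" / "mcp.json"
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_text(json.dumps(build_vscode_config(), indent=2) + "\n", encoding="utf-8")
    return target


if __name__ == "__main__":
    # Print instead of writing, so running the sketch never overwrites a real config
    print(json.dumps(build_vscode_config(), indent=2))
```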

To use with Claude Desktop:
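Claude Desktop reads MCP servers from the `mcpServers` object in its `claude_desktop_config.json` file, where each entry has a `command`, `args`, and optional `env`. The hypothetical sketch below merges one entry into an existing config without dropping servers that are already registered. The entry name, the `uv run path/to/server.py` command, and passing `WOLFRAM_API_KEY` through `env` are assumptions, not this project's documented settings.

```python
import json

# Hypothetical entry: name, command, and script path are placeholders.
ENTRY_NAME = "WolframAlphaServer"
ENTRY = {
    "command": "uv",
    "args": ["run", "path/to/server.py"],
    "env": {"WOLFRAM_API_KEY": "your_wolframalpha_appid"},
}


def add_server(config_text: str) -> str:
    """Add ENTRY under "mcpServers" while keeping any servers already configured."""
    config = json.loads(config_text) if config_text.strip() else {}
    servers = config.setdefault("mcpServers", {})
    servers[ENTRY_NAME] = ENTRY  # replaces an older entry with the same name
    return json.dumps(config, indent=2)


if __name__ == "__main__":
    existing = '{"mcpServers": {"other": {"command": "node", "args": ["other.js"]}}}'
    print(add_server(existing))
```

Merging rather than overwriting matters because the same file usually holds every MCP server Claude Desktop knows about.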

Client Usage Example

This project includes an LLM client that communicates with the MCP server.
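Under the LangChain layer, MCP traffic is JSON-RPC 2.0: a client discovers tools with a `tools/list` request and invokes one with `tools/call`, passing the tool `name` and its `arguments`. The sketch below only builds those messages. In a real session the client first completes the `initialize` handshake, which the official MCP SDKs handle for you. The tool name `query_wolfram` and its `query` argument are hypothetical; use the names the server reports in its `tools/list` response.

```python
import json
from itertools import count
from typing import Optional

# Monotonic request ids; JSON-RPC matches responses to requests by id.
_ids = count(1)


def make_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize one JSON-RPC 2.0 request with an auto-incrementing id."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


def call_tool(name: str, arguments: dict) -> str:
    """Build an MCP "tools/call" request for the given tool and arguments."""
    return make_request("tools/call", {"name": name, "arguments": arguments})


if __name__ == "__main__":
    print(make_request("tools/list"))
    # Hypothetical tool name and argument key
    print(call_tool("query_wolfram", {"query": "integrate sin(x) dx"}))
```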

Run with Gradio UI

Required: GeminiAPI

Provides a local web interface to interact with Google AI and Wolfram Alpha.

To launch the Gradio UI from the command line:

Docker

To build and run the client inside a Docker container:

UI

Intuitive interface built with Gradio to interact with both Google AI (Gemini) and the Wolfram Alpha MCP server.

Allows users to switch between Wolfram Alpha, Google AI (Gemini), and query history.
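The switching behavior can be pictured as a small router: each mode maps to a backend, and every exchange is appended to a shared history. This is a hypothetical design sketch, not the project's Gradio code; `QueryRouter` is an illustrative name, and the lambdas stand in for the real Wolfram Alpha MCP tool and Gemini client.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# A backend takes the user's query and returns an answer string.
Backend = Callable[[str], str]


@dataclass
class QueryRouter:
    backends: Dict[str, Backend]
    # (mode, query, answer) tuples in the order they were asked
    history: List[Tuple[str, str, str]] = field(default_factory=list)

    def ask(self, mode: str, query: str) -> str:
        """Send `query` to the backend selected by `mode` and record the exchange."""
        if mode not in self.backends:
            raise ValueError(f"unknown mode {mode!r}; expected one of {sorted(self.backends)}")
        answer = self.backends[mode](query)
        self.history.append((mode, query, answer))
        return answer


if __name__ == "__main__":
    router = QueryRouter({
        "wolfram": lambda q: f"[Wolfram Alpha stub] {q}",  # stand-in backend
        "gemini": lambda q: f"[Gemini stub] {q}",          # stand-in backend
    })
    router.ask("wolfram", "population of France")
    router.ask("gemini", "explain that number")
    for mode, query, answer in router.history:
        print(mode, "|", query, "->", answer)
```

Keeping history outside any single backend is what lets a history view show Wolfram Alpha and Gemini exchanges side by side.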

Run as CLI Tool

Required: GeminiAPI

To run the client directly from the command line:

Docker

To build and run the client inside a Docker container:

Contact

Feel free to give feedback. The e-mail address is shown if you execute this in a shell: