

hpc-mcp :zap::computer:

This project provides MCP tools for HPC, designed to integrate with LLMs. My initial plan is to integrate with LLMs called from IDEs such as Cursor and VSCode.

Quick Start Guide :rocket:

This project uses uv for dependency management and installation. If you don't have uv installed, follow the installation instructions on the uv website.
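Before going further, it can help to confirm that uv is actually on your PATH. The following is a minimal Python sketch using only the standard library; the helper name `find_uv` is illustrative and not part of this project.

```python
import shutil
import subprocess


def find_uv(which=shutil.which):
    """Return the full path to the uv executable, or None if it is not on PATH."""
    return which("uv")


if __name__ == "__main__":
    uv_path = find_uv()
    if uv_path is None:
        print("uv not found; install it first")
    else:
        # `uv --version` prints the installed version string.
        subprocess.run([uv_path, "--version"], check=True)
```

The `which` parameter exists only so the lookup can be swapped out when testing; in normal use, call `find_uv()` with no arguments.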

Once we have uv installed, we can install the dependencies and run the tests with the following command:

Adding the MCP Server

Cursor

Open Cursor and go to settings.

Click "Tools & Integrations".

Click "Add Custom MCP".

This will open your system-wide MCP settings ($HOME/.cursor/mcp.json). If you prefer to set this on a project-by-project basis, then you can create a local configuration using <path/to/project/root>/.cursor/mcp.json.

Add the following configuration:

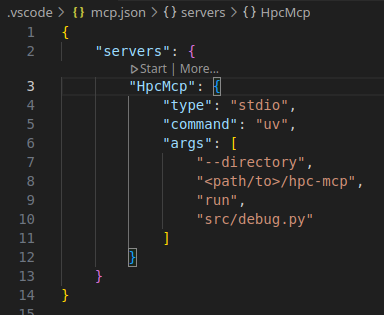
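For orientation, Cursor keeps MCP servers under a top-level `mcpServers` object in mcp.json. The Python sketch below merges one entry into such a file without overwriting servers that are already listed. The server name `hpc-mcp`, the `uv --directory ... run` launch line, and the entry-point file name `server.py` are assumptions made for illustration; substitute the actual launch command for this project.

```python
import json
import tempfile
from pathlib import Path


def add_mcp_server(config_path, name, command, args):
    """Merge one server entry into a Cursor-style mcp.json and return the result."""
    path = Path(config_path)
    # Start from the existing file if there is one, so other servers survive.
    config = json.loads(path.read_text()) if path.exists() else {}
    config.setdefault("mcpServers", {})[name] = {"command": command, "args": list(args)}
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(config, indent=2) + "\n")
    return config


if __name__ == "__main__":
    # Demo against a throwaway directory so no real settings are touched.
    with tempfile.TemporaryDirectory() as tmp:
        target = Path(tmp) / ".cursor" / "mcp.json"
        # Hypothetical values: replace the path and entry point with your own.
        config = add_mcp_server(
            target, "hpc-mcp", "uv",
            ["--directory", "<path/to>/hpc-mcp", "run", "server.py"],
        )
        print(json.dumps(config, indent=2))
```

Pointing `config_path` at `Path.home() / ".cursor" / "mcp.json"` would update the system-wide file, while a path under the project root would give a per-project configuration.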

VSCode

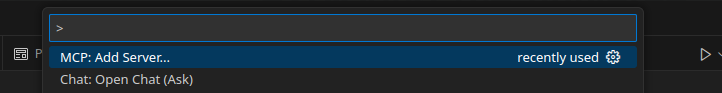

Open the command palette (Ctrl+Shift+P) and select "MCP: Add Server...".

Choose the option "command (stdio)", since the server will be run locally.

Type the command to run the MCP server:

Select a reasonable name for the server, e.g. "HpcMcp" (camel case is a convention).

Select whether to add the server locally or globally.

You can tune the settings by opening settings.json (global settings) or .vscode/settings.json (workspace settings).
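A stdio server is typically stored as an executable plus a list of arguments, so the single command line typed into the prompt ends up split into those two parts. The Python sketch below mirrors that split with `shlex` and prints an entry of the kind you might then tune by hand. The launch command shown (uv with a hypothetical `server.py` entry point) and the exact key layout (`servers`, `type`, `command`, `args`) are assumptions; they are not taken from this project and may differ between VSCode versions.

```python
import json
import shlex


def stdio_entry(command_line):
    """Split a shell-style command line into a stdio server entry."""
    parts = shlex.split(command_line)
    if not parts:
        raise ValueError("command line is empty")
    return {"type": "stdio", "command": parts[0], "args": parts[1:]}


if __name__ == "__main__":
    # Hypothetical launch command; quote the path if it contains spaces.
    entry = stdio_entry('uv --directory "/home/me/hpc mcp" run server.py')
    print(json.dumps({"servers": {"HpcMcp": entry}}, indent=2))
```

The quoting matters: without the double quotes, a path containing a space would be split into two separate arguments.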

Zed

Open Zed and go to settings.

Open general settings (CTRL-ALT-C).

Under the section "Model Context Protocol (MCP) Servers", click "Add Custom Server".

Add the following text (changing <path/to>/hpc-mcp to your actual path):

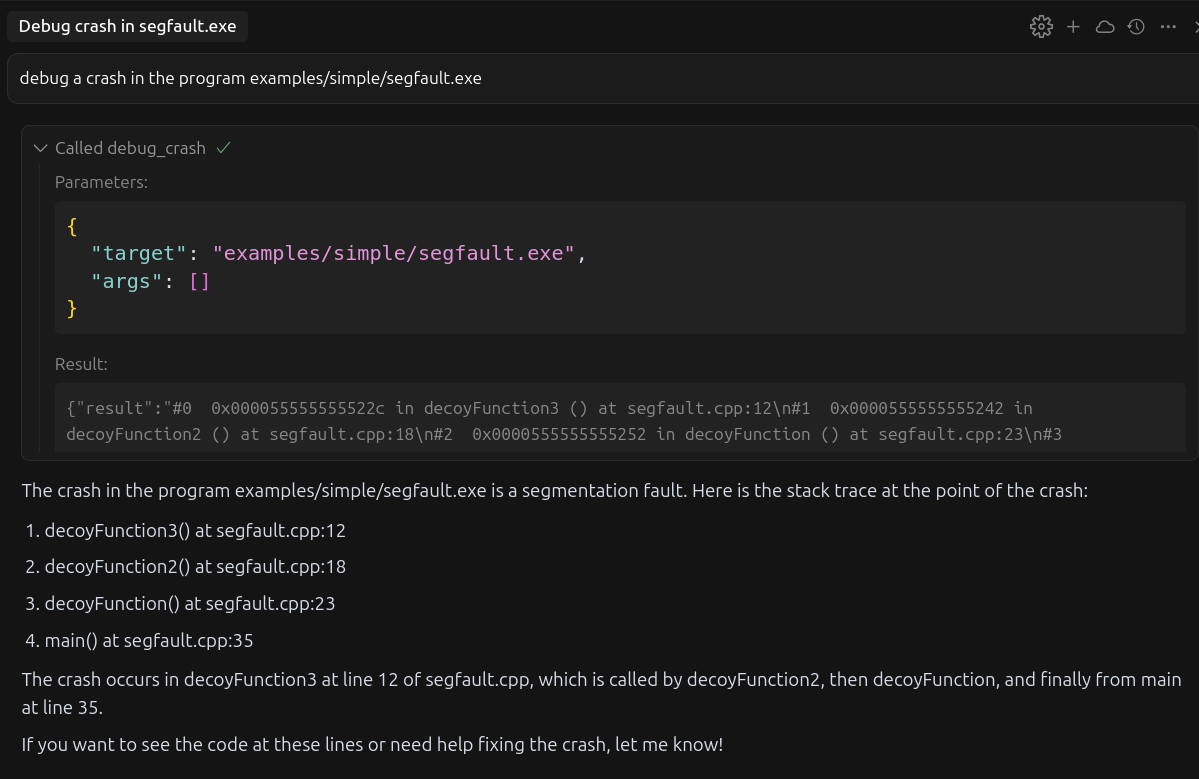
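Since the path has to be replaced by hand, one way to avoid typos is to generate the text with the path already resolved. The Python sketch below does that. It assumes Zed's custom-server dialog accepts an object keyed by server name with `command`, `args`, and `env` fields, which may vary between Zed versions; the entry-point name `server.py` and the demo path `~/src/hpc-mcp` are hypothetical placeholders.

```python
import json
from pathlib import Path


def zed_server_text(repo_dir, name="hpc-mcp", entry_point="server.py"):
    """Return JSON text for a custom server entry with an absolute repo path."""
    repo = Path(repo_dir).expanduser().resolve()
    entry = {
        name: {
            "command": "uv",
            # entry_point is a hypothetical file name, not confirmed by this README.
            "args": ["--directory", str(repo), "run", entry_point],
            "env": {},
        }
    }
    return json.dumps(entry, indent=2)


if __name__ == "__main__":
    print(zed_server_text("~/src/hpc-mcp"))
```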

Test the MCP Server

Test the MCP server using our simple example:

Open a terminal.

Change into the example directory with cd example/simple.

Build the example using make. This should generate segfault.exe.

Then type the following prompt into your IDE LLM agent:

This should ask your permission to run the debug_crash MCP tool.

Accept, and you should get a response like the following:


Running local LLMs with Ollama

To run the hpc-mcp MCP tool with a local Ollama model, use the Zed text editor. It should automatically detect locally running Ollama models and make them available. As long as you have installed the hpc-mcp MCP server in Zed (see the Zed instructions above), it should be available to your models. For more information on Ollama integration with Zed, see Zed's documentation.

Not all models support calling MCP tools. I had success with qwen3:latest.
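One way to check a model before trying it is to ask the local Ollama server what it advertises. The Python sketch below posts to Ollama's `/api/show` endpoint and looks for `tools` in the reply. It assumes Ollama is listening on its default address and that your Ollama version includes a `capabilities` list in the reply (older versions may not); the function names are illustrative.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default local address


def fetch_model_info(model, base_url=OLLAMA_URL):
    """POST to Ollama's /api/show endpoint and return the decoded JSON reply."""
    request = urllib.request.Request(
        f"{base_url}/api/show",
        data=json.dumps({"model": model}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)


def supports_tools(model_info):
    """True if the reply lists "tools" among the model's capabilities."""
    # Assumption: recent Ollama versions include a "capabilities" list here.
    return "tools" in model_info.get("capabilities", [])


if __name__ == "__main__":
    try:
        info = fetch_model_info("qwen3:latest")
    except OSError as error:  # covers connection failures and HTTP errors
        print(f"could not reach Ollama: {error}")
    else:
        print("tool calling advertised:", supports_tools(info))
```

A model that advertises tool support may still call MCP tools unreliably, so treat the result as a first filter and not as a guarantee.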

Core Dependencies

python

uv

fastmcp