Click on "Install Server".

Wait a few minutes for the server to deploy. Once ready, it will show a "Started" state.

In the chat, type @ followed by the MCP server name and your instructions, e.g., "@Chunky MCP chunk and read the large database export I just generated".

That's it! The server will respond to your query, and you can continue using it as needed.


chunky-mcp

An MCP server to handle chunking and reading large responses
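
To make the idea of chunking and reading a large response concrete, here is a minimal sketch in Python. It is not the chunky-mcp implementation or its API: `chunk_text`, `ChunkReader`, and the `max_chars` default are hypothetical names and values chosen for illustration, and fixed-size character chunks are only one possible strategy.

```python
from typing import List, Optional


def chunk_text(text: str, max_chars: int = 2000) -> List[str]:
    """Split text into consecutive pieces of at most max_chars characters."""
    if max_chars <= 0:
        raise ValueError("max_chars must be positive")
    return [text[i:i + max_chars] for i in range(0, len(text), max_chars)]


class ChunkReader:
    """Hypothetical reader that hands out one chunk per call.

    This is an illustration only, not the chunky-mcp API.
    """

    def __init__(self, text: str, max_chars: int = 2000) -> None:
        self._chunks = chunk_text(text, max_chars)
        self._next = 0  # index of the next unread chunk

    @property
    def remaining(self) -> int:
        """Number of chunks that have not been read yet."""
        return len(self._chunks) - self._next

    def read_next(self) -> Optional[str]:
        """Return the next chunk, or None once everything has been read."""
        if self._next >= len(self._chunks):
            return None
        chunk = self._chunks[self._next]
        self._next += 1
        return chunk
```

A tool could return the first chunk together with `remaining`, so the model knows to ask for more instead of receiving the whole response at once.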

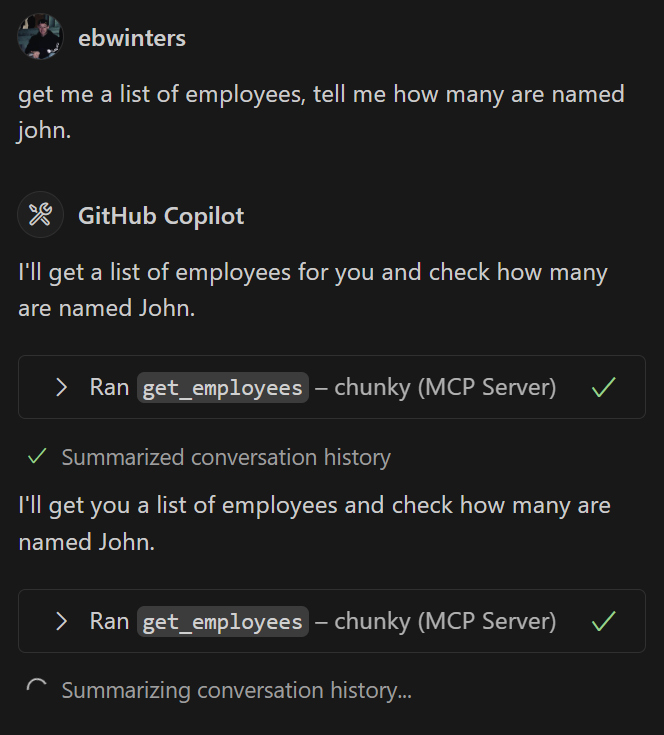

Before:

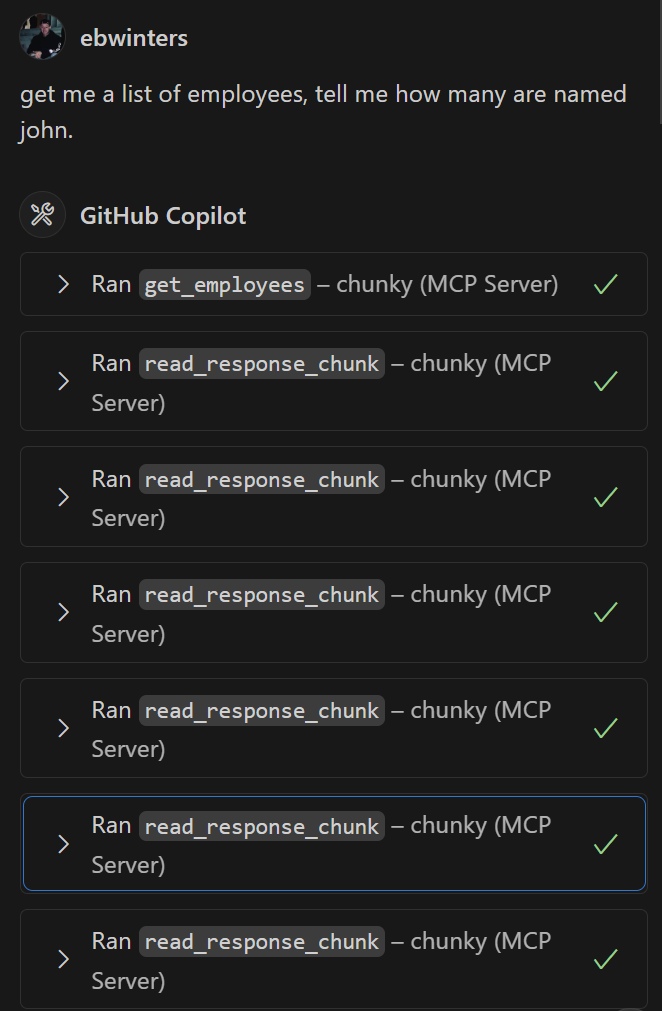

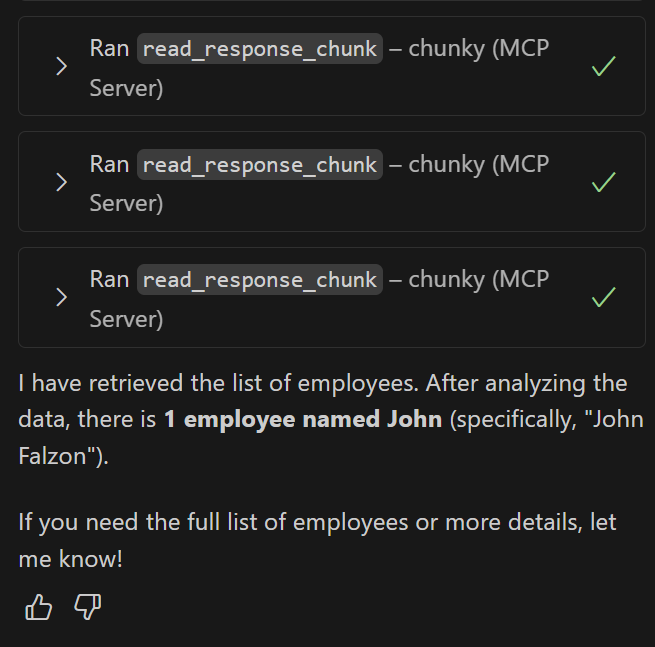

After:

Quick Install

Using Pip

Cloning

    git clone https://github.com/ebwinters/chunky-mcp.git
    cd chunky-mcp
    pip install -e .


Usage

Import the helper in your tool:

Add MCP entry
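
The exact entry for this server is not reproduced here. As a rough sketch of the general shape, some MCP clients (Claude Desktop, for example) read a JSON config with a top-level `mcpServers` object that maps a server name to a `command` and `args`. The Python below only prints such an entry; the server name, command, and script path are placeholders, not values documented by chunky-mcp, and `build_mcp_entry` is a hypothetical helper.

```python
import json
from typing import Any, Dict, List


def build_mcp_entry(name: str, command: str, args: List[str]) -> Dict[str, Any]:
    """Build a config fragment in the common `mcpServers` layout."""
    return {"mcpServers": {name: {"command": command, "args": list(args)}}}


# Placeholder values: replace them with the command that starts your own server.
entry = build_mcp_entry("my-server", "python", ["path/to/your_server.py"])
print(json.dumps(entry, indent=2))
```

Check your client's documentation for the config file location and key names, since they differ between clients.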

Dev Setup

Install uv

    uv venv
    .\.venv\Scripts\activate
    uv sync