Uses Docker to create isolated containers for safely executing code snippets submitted by AI assistants.

Integrates with Google's Gemini SDK and CLI to provide code execution capabilities for AI interactions.

Enables execution of JavaScript code in a Node.js environment within a secure, isolated container.

Provides alternative containerization using Podman for executing code in secure environments.

Executes Python code snippets in a secure, isolated sandbox environment, capturing output and error streams.


Code Sandbox MCP Server

The Code Sandbox MCP Server is a lightweight, STDIO-based Model Context Protocol (MCP) server that allows AI assistants and LLM applications to safely execute code snippets in containerized environments. It uses the llm-sandbox package to execute the code snippets.

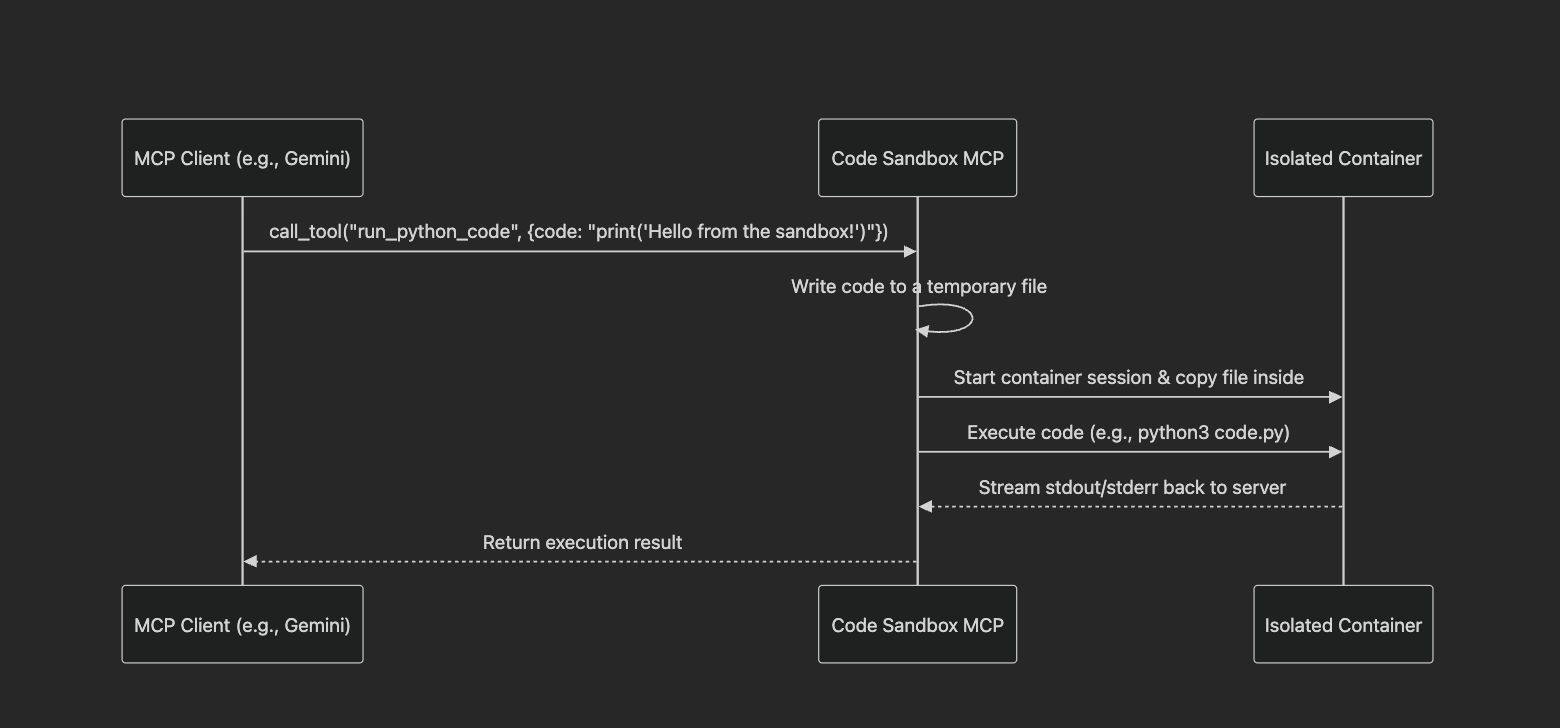

How It Works:

Starts a container session (podman, docker, etc.) and ensures the session is open.

Writes the `code` to a temporary file on the host.

Copies this temporary file into the container at the configured `workdir`.

Executes the language-specific commands to run the code, e.g. `python3 -u code.py` for Python or `node -u code.js` for JavaScript.

Captures the output and error streams from the container.

Returns the output and error streams to the client.

Stops and removes the container.
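The steps above can be sketched with plain subprocess calls to the Docker CLI. This is a minimal illustration under stated assumptions, not the server's own implementation (which delegates these steps to llm-sandbox): the helper names `build_commands` and `run_snippet`, the `/sandbox` workdir default, and keeping the container alive with `tail -f /dev/null` are all hypothetical, and the sketch runs JavaScript with a plain `node code.js`. Podman generally accepts the same `run`, `cp`, `exec`, and `rm` subcommands, so swapping the binary name should be the main change.

```python
import subprocess
import tempfile
from pathlib import Path

# Hypothetical per-language file name and run command (the real server delegates to llm-sandbox).
LANGUAGES = {
    "python": ("code.py", ["python3", "-u", "code.py"]),
    "javascript": ("code.js", ["node", "code.js"]),
}


def build_commands(container: str, language: str, host_file: str, workdir: str) -> list[list[str]]:
    """Return the CLI argv lists for copying, executing, and removing (steps 3, 4, and 7)."""
    filename, run_cmd = LANGUAGES[language]
    return [
        ["docker", "cp", host_file, f"{container}:{workdir}/{filename}"],
        ["docker", "exec", "-w", workdir, container, *run_cmd],
        ["docker", "rm", "-f", container],
    ]


def run_snippet(code: str, language: str, image: str, workdir: str = "/sandbox") -> tuple[str, str]:
    """Run `code` in a throwaway container and return its (stdout, stderr)."""
    filename, _ = LANGUAGES[language]
    with tempfile.TemporaryDirectory() as tmp:
        # Step 1: start a container session that stays open until it is removed.
        container = subprocess.run(
            ["docker", "run", "-d", "-w", workdir, image, "tail", "-f", "/dev/null"],
            capture_output=True, text=True, check=True,
        ).stdout.strip()
        host_file = Path(tmp) / filename
        copy_cmd, exec_cmd, remove_cmd = build_commands(container, language, str(host_file), workdir)
        try:
            host_file.write_text(code)  # Step 2: write the code to a temporary host file.
            subprocess.run(copy_cmd, check=True)  # Step 3: copy it into the workdir.
            # Steps 4-5: execute the code and capture both output streams.
            result = subprocess.run(exec_cmd, capture_output=True, text=True)
            return result.stdout, result.stderr  # Step 6: hand the streams back.
        finally:
            subprocess.run(remove_cmd, capture_output=True)  # Step 7: stop and remove.
```

For example, `run_snippet("print(sum(range(10)))", "python", "philschmi/code-sandbox-python:latest")` would return the captured stdout and stderr as a tuple, assuming Docker is running locally and the image provides `python3`.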

Available Tools:

run_python_code - Executes a snippet of Python code in a secure, isolated sandbox.

code (string, required): The Python code to execute.

run_js_code - Executes a snippet of JavaScript (Node.js) code in a secure, isolated sandbox.

code (string, required): The JavaScript code to execute.
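Under the hood, an MCP client invokes these tools with a JSON-RPC `tools/call` request whose `arguments` object carries the `code` parameter. The sketch below only builds that message for the stdio transport, which frames each message as a single newline-terminated line of JSON; a real client must first complete the `initialize` handshake, and the helper name `tools_call_message` is hypothetical.

```python
import json

TOOLS = {"run_python_code", "run_js_code"}


def tools_call_message(request_id: int, tool: str, code: str) -> str:
    """Build a newline-terminated JSON-RPC `tools/call` request for one of the sandbox tools."""
    if tool not in TOOLS:
        raise ValueError(f"unknown tool: {tool}")
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool, "arguments": {"code": code}},
    }
    # json.dumps escapes newlines inside `code`, so the message stays on one line
    # as the stdio transport requires.
    return json.dumps(message) + "\n"


if __name__ == "__main__":
    print(tools_call_message(1, "run_python_code", "print(sum(range(10)))"), end="")
```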

Installation


Getting Started: Usage with an MCP Client

Examples:

Local Client Python example for running Python code

Gemini SDK example for running Python code with the Gemini SDK

Calling Gemini from a client example for running Python code that uses the Gemini SDK and passes through the Gemini API key

Local Client JavaScript example for running JavaScript code

To use the Code Sandbox MCP server, you need to add it to your MCP client's configuration file (e.g., in your AI assistant's settings). The server is designed to be launched on-demand by the client.

Add the following to your mcpServers configuration:

Provide Secrets and pass through environment variables

You can pass environment variables through to the sandbox by setting the --pass-through-env flag when starting the MCP server and providing the corresponding values in the server's env configuration.
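A minimal sketch of such a configuration, generated from Python so the JSON stays valid. The executable name `code-sandbox-mcp`, the server key `code-sandbox`, the helper name `sandbox_server_entry`, and the comma-separated format for the variable names after `--pass-through-env` are assumptions; check the repository's examples for the exact syntax.

```python
import json


def sandbox_server_entry(pass_through: dict[str, str]) -> dict:
    """Build a hypothetical mcpServers entry that forwards the given variables to the sandbox."""
    return {
        "mcpServers": {
            "code-sandbox": {
                "command": "code-sandbox-mcp",  # assumed executable name
                # Assumed format: the variable names as one comma-separated argument.
                "args": ["--pass-through-env", ",".join(pass_through)],
                # The values themselves reach the server through its environment.
                "env": dict(pass_through),
            }
        }
    }


if __name__ == "__main__":
    print(json.dumps(sandbox_server_entry({"GEMINI_API_KEY": "your-api-key"}), indent=2))
```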

Provide a custom container image

You can provide a custom container image by setting the CONTAINER_IMAGE and CONTAINER_LANGUAGE environment variables when starting the MCP server. Both variables are required: CONTAINER_LANGUAGE determines which commands are run in the container, and CONTAINER_IMAGE determines which image is used.

Note: When you provide a custom container image, both tools use the same container image.
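A sketch of an `mcpServers` entry that sets both variables, assuming the server is launched as `code-sandbox-mcp` and that `python` is an accepted CONTAINER_LANGUAGE value (both assumptions, as are the helper name `custom_image_entry` and the image name `my-registry/my-python-sandbox:latest`). The helper refuses to build an entry with only one of the two variables, mirroring the requirement above.

```python
import json


def custom_image_entry(image: str, language: str) -> dict:
    """Hypothetical mcpServers entry selecting a custom image via CONTAINER_IMAGE/CONTAINER_LANGUAGE."""
    if not image or not language:
        # Both are required: the language selects the run commands, the image selects the container.
        raise ValueError("CONTAINER_IMAGE and CONTAINER_LANGUAGE must both be set")
    return {
        "mcpServers": {
            "code-sandbox": {
                "command": "code-sandbox-mcp",  # assumed executable name
                "env": {"CONTAINER_IMAGE": image, "CONTAINER_LANGUAGE": language},
            }
        }
    }


if __name__ == "__main__":
    print(json.dumps(custom_image_entry("my-registry/my-python-sandbox:latest", "python"), indent=2))
```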

Use with Gemini SDK

The code-sandbox-mcp server can be used with the Gemini SDK by passing the tools parameter to the generate_content method.

Use with Gemini CLI

The code-sandbox-mcp server can be used with the Gemini CLI. You can configure MCP servers globally in ~/.gemini/settings.json, or per project in .gemini/settings.json in your project's root directory (create the file if it does not exist). Within the file, add the mcpServers configuration block.

See settings.json for an example, and read more about the Gemini CLI.
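Because settings.json may already hold other settings, a small helper that merges an entry into mcpServers avoids overwriting them. This is a sketch: the helper name `add_mcp_server` is hypothetical, the entry `{"command": "code-sandbox-mcp"}` assumes that executable name, and the code assumes the file is plain JSON without comments.

```python
import json
from pathlib import Path


def add_mcp_server(settings_path: Path, name: str, server: dict) -> dict:
    """Add or replace one entry under mcpServers, keeping every other setting intact."""
    settings = json.loads(settings_path.read_text()) if settings_path.exists() else {}
    settings.setdefault("mcpServers", {})[name] = server
    # Create .gemini/ if needed, then write the merged settings back.
    settings_path.parent.mkdir(parents=True, exist_ok=True)
    settings_path.write_text(json.dumps(settings, indent=2) + "\n")
    return settings
```

For a project-level setup you might call `add_mcp_server(Path(".gemini") / "settings.json", "code-sandbox", {"command": "code-sandbox-mcp"})`; use `Path.home() / ".gemini" / "settings.json"` for the global file.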

Customize/Build new Container Images

The repository comes with two container images, which are published on Docker Hub:

philschmi/code-sandbox-python:latest

philschmi/code-sandbox-js:latest

The build script builds the image under the current user's account. To change which images the server uses, either pass the --python-image or --js-image flag when starting the MCP server, or update the const.py file.

To push the images to Docker Hub, retag them under your own account and push them.

To customize an image or install additional dependencies, add them to the Dockerfile and rebuild the image.
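Retagging and pushing can be scripted with `docker tag` and `docker push`. In this sketch the account name is a placeholder and the helper names `retag_commands` and `retag_and_push` are hypothetical; it assumes you have already run `docker login` and built the images locally under the names listed above.

```python
import subprocess

# The two images named in this README.
DEFAULT_IMAGES = ("philschmi/code-sandbox-python:latest", "philschmi/code-sandbox-js:latest")


def retag_commands(image: str, account: str) -> list[list[str]]:
    """Return `docker tag` and `docker push` argv lists that move `image` under `account`."""
    repo_and_tag = image.split("/", 1)[1]  # e.g. "code-sandbox-python:latest"
    target = f"{account}/{repo_and_tag}"
    return [["docker", "tag", image, target], ["docker", "push", target]]


def retag_and_push(account: str, images: tuple[str, ...] = DEFAULT_IMAGES) -> None:
    """Retag each image under `account` and push it, stopping at the first failure."""
    for image in images:
        for cmd in retag_commands(image, account):
            subprocess.run(cmd, check=True)
```

After pushing, you could point the server at your copy with, e.g., `--python-image alice/code-sandbox-python:latest` (here `alice` is a placeholder account).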

Testing

With MCP Inspector

Start the server with the streamable-http transport and test it using the MCP Inspector. Alternatively, start the Inspector and have it run the server over stdio.

To run the test suite for code-sandbox-mcp and its components, clone the repository and run:

License

Code Sandbox MCP Server is open source software licensed under the MIT License.