The LangSmith MCP Server enables language models to access and manage LangSmith observability platform data through a Model Context Protocol interface.

Conversation History: Retrieve paginated message history from conversation threads using character-based pagination

Prompt Management: List prompts with visibility filtering (public/private), get specific prompts by name, and get guidance on creating/pushing prompts

Traces & Runs: Fetch runs (LLM, chain, tool, retriever, etc.) from one or more projects with powerful Filter Query Language (FQL) support, filtering by run type, error status, root status, and trace ID; list projects with optional name filtering

Datasets & Examples: List datasets filtered by ID, type, name, or metadata; read individual datasets/examples; fetch examples with filtering, pagination, versioning, and splits; get guidance on creating datasets and updating examples

Experiments & Evaluations: List experiment projects for a given dataset with key metrics (latency p50/p99, cost, feedback stats) and get guidance on running experiments

Billing & Usage: Fetch organization billing usage (e.g., trace counts) for a specified date range with optional workspace filtering

Flexible Output & Deployment: Fetch runs in pretty, json, or raw formats; deploy via hosted HTTP endpoint, Docker container, or local PyPI installation

Character-Based Pagination: Stateless character-budget pagination (page_number, max_chars_per_page, preview_chars) keeps responses within LLM context limits

Provides seamless integration with the LangSmith observability platform, enabling language models to fetch conversation history, manage prompts, retrieve traces and runs, work with datasets and examples, and access experiment and evaluation data from LangSmith projects.

Click on "Install Server".

Wait a few minutes for the server to deploy. Once ready, it will show a "Started" state.

In the chat, type @ followed by the MCP server name and your instructions, e.g., "@LangSmith MCP Server fetch the history of my conversation from thread 'thread-123' in project 'my-chatbot'"

That's it! The server will respond to your query, and you can continue using it as needed.

Here is a step-by-step guide with screenshots.

🦜🛠️ LangSmith MCP Server

A production-ready Model Context Protocol (MCP) server that provides seamless integration with the LangSmith observability platform. This server enables language models to fetch conversation history, prompts, runs and traces, datasets, experiments, and billing usage from LangSmith.

📋 Example Use Cases

The server enables powerful capabilities including:

💬 Conversation History: "Fetch the history of my conversation from thread 'thread-123' in project 'my-chatbot'" (paginated by character budget)

📚 Prompt Management: "Get all public prompts in my workspace" / "Pull the template for the 'legal-case-summarizer' prompt"

🔍 Traces & Runs: "Fetch the latest 10 root runs from project 'alpha'" / "Get all runs for trace <uuid> (page 2 of 5)"

📊 Datasets: "List datasets of type chat" / "Read examples from dataset 'customer-support-qa'"

🧪 Experiments: "List experiments for dataset 'my-eval-set' with latency and cost metrics"

📈 Billing: "Get billing usage for September 2025"

🚀 Quickstart

A hosted version of the LangSmith MCP Server is available over HTTP-streamable transport, so you can connect without running the server yourself:

URL: https://langsmith-mcp-server.onrender.com/mcp

Hosting: Render, built from this public repo using the project's Dockerfile.

Use it like any HTTP-streamable MCP server: point your client at the URL and send your LangSmith API key in the LANGSMITH-API-KEY header. No local install or Docker required.

Example (Cursor

Optional headers: LANGSMITH-WORKSPACE-ID, LANGSMITH-ENDPOINT (same as in the Docker Deployment section below).

Note: This deployed instance is intended for LangSmith Cloud. If you use a self-hosted LangSmith instance, run the server yourself and point it at your endpoint—see the Docker Deployment section below.
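As a rough illustration of the hosted setup, the Python sketch below assembles a client configuration that points at the hosted URL and sends the API key as a header. The `url` and `headers` keys, the server label, and the helper names are assumptions about a typical MCP client config, not something this README confirms; check your client's documentation for the exact format.

```python
import json

HOSTED_URL = "https://langsmith-mcp-server.onrender.com/mcp"


def build_headers(api_key, workspace_id=None, endpoint=None):
    """Build the request headers; only LANGSMITH-API-KEY is required."""
    if not api_key:
        raise ValueError("LANGSMITH-API-KEY is required")
    headers = {"LANGSMITH-API-KEY": api_key}
    if workspace_id:
        headers["LANGSMITH-WORKSPACE-ID"] = workspace_id
    if endpoint:
        headers["LANGSMITH-ENDPOINT"] = endpoint
    return headers


def build_http_client_config(api_key, url=HOSTED_URL, **optional):
    """Return a config dict for a remote (HTTP-streamable) MCP server."""
    # Assumption: the client accepts "url" and "headers" for remote servers.
    return {
        "mcpServers": {
            "LangSmith MCP": {  # hypothetical label
                "url": url,
                "headers": build_headers(api_key, **optional),
            }
        }
    }


config = build_http_client_config("your_langsmith_api_key")
print(json.dumps(config, indent=2))
```

Passing `workspace_id=` or `endpoint=` adds the corresponding optional header; leaving them out keeps the config minimal.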

🛠️ Available Tools

The LangSmith MCP Server provides the following tools for integration with LangSmith.

💬 Conversation & Threads

Tool Name | Description |

get_thread_history | Retrieve message history for a conversation thread. Uses char-based pagination: pass |

📚 Prompt Management

Tool Name | Description |

| Fetch prompts from LangSmith with optional filtering by visibility (public/private) and limit. |

| Get a specific prompt by its exact name, returning the prompt details and template. |

| Documentation-only: how to create and push prompts to LangSmith. |

🔍 Traces & Runs

Tool Name | Description |

fetch_runs | Fetch LangSmith runs (traces, tools, chains, etc.) from one or more projects. Supports filters (run_type, error, is_root), FQL ( |

| List LangSmith projects with optional filtering by name, dataset, and detail level (simplified vs full). |

📊 Datasets & Examples

Tool Name | Description |

| Fetch datasets with filtering by ID, type, name, name substring, or metadata. |

| Fetch examples from a dataset by dataset ID/name or example IDs, with filter, metadata, splits, and optional |

| Read a single dataset by ID or name. |

| Read a single example by ID, with optional |

| Documentation-only: how to create datasets in LangSmith. |

| Documentation-only: how to update dataset examples in LangSmith. |

🧪 Experiments & Evaluations

Tool Name | Description |

| List experiment projects (reference projects) for a dataset. Requires |

| Documentation-only: how to run experiments and evaluations in LangSmith. |

📈 Usage & Billing

Tool Name | Description |

| Fetch organization billing usage (e.g. trace counts) for a date range. Optional workspace filter; returns metrics with workspace names inline. |

📄 Pagination (char-based)

Several tools use stateless, character-budget pagination so responses stay within a size limit and work well with LLM clients:

Where it’s used: get_thread_history and fetch_runs (when trace_id is set).

Parameters: You send page_number (1-based) on every request. Optional: max_chars_per_page (default 25000, cap 30000) and preview_chars (truncate long strings with "… (+N chars)").

Response: Each response includes page_number, total_pages, and the page payload (result for messages, runs for runs). To get more, call again with page_number = 2, then 3, up to total_pages.

Why it’s useful: Pages are built by JSON character count, not item count, so each page fits within a fixed size. There is no cursor or server-side state, just integer page numbers.
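To make the idea concrete, here is a minimal sketch of character-budget pagination in Python. It is not the server's actual implementation: the function names and the greedy packing strategy are assumptions, and only the parameter names, defaults, and response fields come from the description above.

```python
import json


def truncate_preview(text, preview_chars):
    """Shorten a long string, noting how many characters were dropped."""
    if preview_chars is None or len(text) <= preview_chars:
        return text
    return f"{text[:preview_chars]}… (+{len(text) - preview_chars} chars)"


def paginate_by_chars(items, page_number, max_chars_per_page=25000):
    """Group items into pages by serialized JSON size, then return one page."""
    budget = min(max_chars_per_page, 30000)  # cap taken from the text above
    pages, used = [[]], 0
    for item in items:
        size = len(json.dumps(item))
        # Start a new page when this item would overflow a non-empty page.
        # A single oversized item still gets a page of its own.
        if pages[-1] and used + size > budget:
            pages.append([])
            used = 0
        pages[-1].append(item)
        used += size
    if not 1 <= page_number <= len(pages):
        raise ValueError(f"page_number must be between 1 and {len(pages)}")
    return {
        "page_number": page_number,
        "total_pages": len(pages),
        "result": pages[page_number - 1],
    }


messages = [{"text": "a" * 10} for _ in range(5)]  # 22 JSON chars each
first = paginate_by_chars(messages, page_number=1, max_chars_per_page=50)
print(first["total_pages"], len(first["result"]))
```

Because the pages are recomputed from the full item list on every call, the caller only needs to remember an integer page number, which is what makes the scheme stateless.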

🛠️ Installation Options

📝 General Prerequisites

Install uv (a fast Python package installer and resolver):

    curl -LsSf https://astral.sh/uv/install.sh | sh

Clone this repository and navigate to the project directory:

    git clone https://github.com/langchain-ai/langsmith-mcp-server.git
    cd langsmith-mcp-server

🔌 MCP Client Integration

Once you have the LangSmith MCP Server, you can integrate it with various MCP-compatible clients. You have two installation options:

📦 From PyPI

Install the package:

    uv run pip install --upgrade langsmith-mcp-server

Add to your client MCP config:

    {
      "mcpServers": {
        "LangSmith API MCP Server": {
          "command": "/path/to/uvx",
          "args": ["langsmith-mcp-server"],
          "env": {
            "LANGSMITH_API_KEY": "your_langsmith_api_key",
            "LANGSMITH_WORKSPACE_ID": "your_workspace_id",
            "LANGSMITH_ENDPOINT": "https://api.smith.langchain.com"
          }
        }
      }
    }

⚙️ From Source

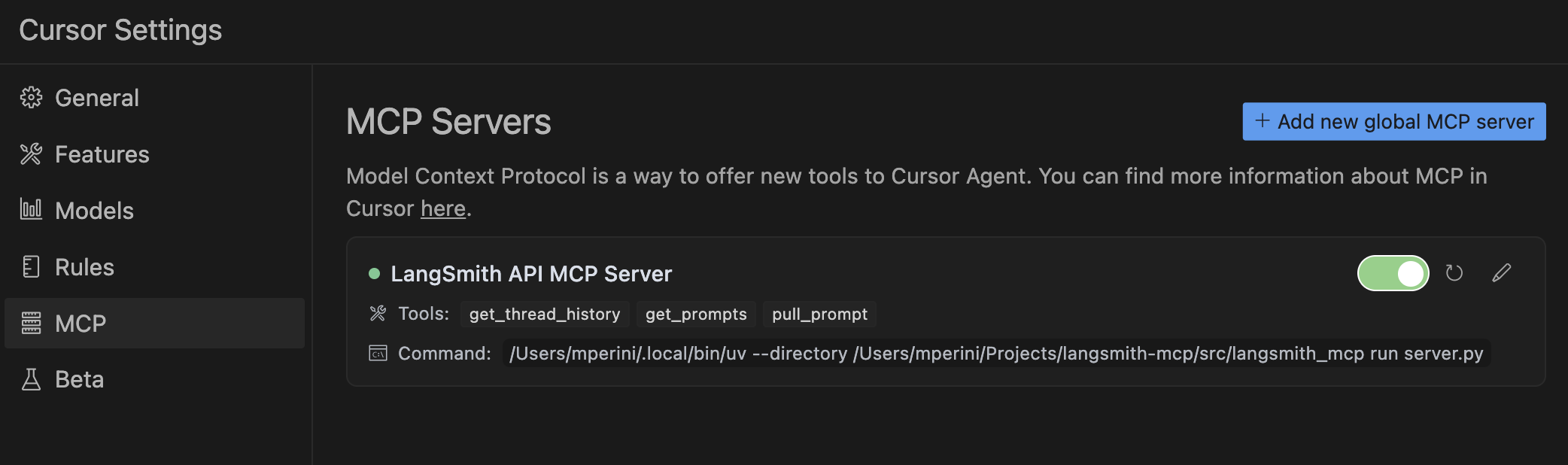

Add the following configuration to your MCP client settings (run from the project root so the package is found):

Replace the following placeholders:

/path/to/uv: The absolute path to your uv installation (e.g., /Users/username/.local/bin/uv). You can find it with which uv.

/path/to/langsmith-mcp-server: The absolute path to the project root (the directory containing pyproject.toml and langsmith_mcp_server/).

your_langsmith_api_key: Your LangSmith API key (required).

your_workspace_id: Your LangSmith workspace ID (optional, for API keys scoped to multiple workspaces).

https://api.smith.langchain.com: The LangSmith API endpoint (optional, defaults to the standard endpoint).
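A from-source configuration built from these placeholders might look like the output of the Python sketch below. The `args` list (running `langsmith_mcp_server/server.py` through `uv --directory ... run`) is an assumption made for illustration, as is the helper name; treat the project's own documentation as the authority on the exact invocation.

```python
import json


def build_source_config(uv_path, project_root, api_key, workspace_id=None,
                        endpoint="https://api.smith.langchain.com"):
    """Return an MCP client config that launches the server from a checkout."""
    env = {"LANGSMITH_API_KEY": api_key, "LANGSMITH_ENDPOINT": endpoint}
    if workspace_id:  # optional, only for keys scoped to several workspaces
        env["LANGSMITH_WORKSPACE_ID"] = workspace_id
    return {
        "mcpServers": {
            "LangSmith API MCP Server": {
                "command": uv_path,
                # Assumed invocation: run the server script from the project root.
                "args": ["--directory", project_root, "run",
                         "langsmith_mcp_server/server.py"],
                "env": env,
            }
        }
    }


source_config = build_source_config(
    "/path/to/uv", "/path/to/langsmith-mcp-server", "your_langsmith_api_key"
)
print(json.dumps(source_config, indent=2))
```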

Example configuration (PyPI/uvx):

Copy this configuration into Cursor → MCP Settings (replace /path/to/uvx with the output of which uvx).

🔧 Environment Variables

The LangSmith MCP Server supports the following environment variables:

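Variable | Required | Description | Example |

LANGSMITH_API_KEY | ✅ Yes | Your LangSmith API key for authentication | your_langsmith_api_key |

LANGSMITH_WORKSPACE_ID | ❌ No | Workspace ID for API keys scoped to multiple workspaces | your_workspace_id |

LANGSMITH_ENDPOINT | ❌ No | Custom API endpoint URL (for self-hosted or EU region) | https://api.smith.langchain.com |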

Notes:

Only LANGSMITH_API_KEY is required for basic functionality

LANGSMITH_WORKSPACE_ID is useful when your API key has access to multiple workspaces

LANGSMITH_ENDPOINT allows you to use custom endpoints for self-hosted LangSmith installations or the EU region
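The required/optional split can be sketched as a small settings loader. This is an illustrative sketch, not the server's startup code: `load_settings` and the returned dictionary keys are hypothetical names, and the default endpoint is the standard one quoted earlier in this document.

```python
import os

DEFAULT_ENDPOINT = "https://api.smith.langchain.com"


def load_settings(env=None):
    """Read LangSmith settings from a mapping (defaults to os.environ)."""
    env = os.environ if env is None else env
    api_key = env.get("LANGSMITH_API_KEY")
    if not api_key:
        # The API key is the only variable that must be present.
        raise RuntimeError("LANGSMITH_API_KEY is required")
    return {
        "api_key": api_key,
        "workspace_id": env.get("LANGSMITH_WORKSPACE_ID"),  # optional
        "endpoint": env.get("LANGSMITH_ENDPOINT", DEFAULT_ENDPOINT),
    }


settings = load_settings({"LANGSMITH_API_KEY": "your_langsmith_api_key"})
print(settings["endpoint"])
```

Accepting a plain mapping instead of reading `os.environ` directly keeps the loader easy to exercise in tests.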

🐳 Docker Deployment (HTTP-Streamable)

The LangSmith MCP Server can be deployed as an HTTP server using Docker, enabling remote access via the HTTP-streamable protocol.

Building the Docker Image

Running with Docker

The API key is provided via the LANGSMITH-API-KEY header when connecting, so no environment variables are required when using the HTTP-streamable protocol.

Connecting with HTTP-Streamable Protocol

Once the Docker container is running, you can connect to it using the HTTP-streamable transport. The server accepts authentication via headers:

Required header:

LANGSMITH-API-KEY: Your LangSmith API key

Optional headers:

LANGSMITH-WORKSPACE-ID: Workspace ID for API keys scoped to multiple workspaces

LANGSMITH-ENDPOINT: Custom endpoint URL (for self-hosted or EU region)

Example client configuration:

Cursor Integration

To add the LangSmith MCP Server to Cursor using HTTP-streamable protocol, add the following to your mcp.json configuration file:

Optional headers:

Make sure the server is running before connecting Cursor to it.

Health Check

The server provides a health check endpoint:

This endpoint does not require authentication and returns "LangSmith MCP server is running" when the server is healthy.
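A health probe can be scripted with the standard library alone. The sketch below only assumes the response text quoted above; the helper name is hypothetical, and the endpoint's path and port are not given here, so the caller supplies the full URL.

```python
import urllib.request

EXPECTED_BODY = "LangSmith MCP server is running"


def is_server_healthy(health_url, timeout=5.0):
    """Return True if the URL responds and the body contains the expected text."""
    try:
        # urlopen raises HTTPError (an OSError subclass) for 4xx/5xx responses.
        with urllib.request.urlopen(health_url, timeout=timeout) as resp:
            body = resp.read().decode("utf-8", errors="replace")
    except OSError:  # also covers refused connections and timeouts
        return False
    return EXPECTED_BODY in body
```

Call it with the full health check URL of your deployment, for example `is_server_healthy(base_url + health_path)`, where both values depend on how you run the container.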

🧪 Development and Contributing 🤝

If you want to develop or contribute to the LangSmith MCP Server, follow these steps:

Create a virtual environment and install dependencies:

    uv sync

To include test dependencies:

    uv sync --group test

View available MCP commands:

    uvx langsmith-mcp-server

For development, run the MCP inspector:

    uv run mcp dev langsmith_mcp_server/server.py

This will start the MCP inspector on a network port

Install any required libraries when prompted

The MCP inspector will be available in your browser

Set the LANGSMITH_API_KEY environment variable in the inspector

Connect to the server

Navigate to the "Tools" tab to see all available tools

Before submitting your changes, run the linting and formatting checks:

    make lint
    make format

📄 License

This project is distributed under the MIT License. For detailed terms and conditions, please refer to the LICENSE file.

Made with ❤️ by the LangChain Team