Provides AI-powered web search with citations and complex reasoning capabilities through Perplexity AI's API, enabling research tasks and fact-checking with step-by-step analysis.

Click on "Install Server".

Wait a few minutes for the server to deploy. Once ready, it will show a "Started" state.

In the chat, type @ followed by the MCP server name and your instructions, e.g., "@Scraper MCP scrape and filter .article-content from https://blog.example.com/post"

That's it! The server will respond to your query, and you can continue using it as needed.

Here is a step-by-step guide with screenshots.

Scraper MCP

A context-optimized MCP server for web scraping. Reduces LLM token usage by 70-90% through server-side HTML filtering, markdown conversion, and CSS selector targeting.

Quick Start

Try it:

Endpoints:

MCP: http://localhost:8000/mcp

Dashboard: http://localhost:8000/

Features

Web Scraping

4 scraping modes: Raw HTML, markdown, plain text, link extraction

JavaScript rendering: Optional Playwright-based rendering for SPAs and dynamic content

CSS selector filtering: Extract only relevant content server-side

Batch operations: Process multiple URLs concurrently

Smart caching: Three-tier cache system (realtime/default/static)

Retry logic: Exponential backoff for transient failures
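The retry behaviour listed above can be illustrated with a minimal sketch. It is not the server's actual implementation: the names `TransientError`, `backoff_delays`, and `fetch_with_retry`, the base delay, the doubling factor, the cap, and the jitter are all assumptions chosen for illustration.

```python
import random
import time


class TransientError(Exception):
    """Stand-in for a retryable failure such as a timeout or HTTP 503."""


def backoff_delays(max_retries, base_delay=1.0, factor=2.0, max_delay=30.0):
    """Return the wait before each retry: base, base*factor, ... capped at max_delay."""
    return [min(base_delay * factor**attempt, max_delay) for attempt in range(max_retries)]


def fetch_with_retry(fetch, url, max_retries=3, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url); on TransientError wait with exponential backoff, then retry."""
    delays = backoff_delays(max_retries, base_delay)
    for attempt in range(max_retries + 1):
        try:
            return fetch(url)
        except TransientError:
            if attempt == max_retries:
                raise  # retries exhausted, surface the last error
            # Small random jitter so parallel clients do not retry in lockstep.
            sleep(delays[attempt] + random.uniform(0, 0.1 * delays[attempt]))
```

With the assumed defaults, `backoff_delays(4)` yields waits of 1, 2, 4, and 8 seconds. Passing a custom `sleep` callable makes the function easy to test without real delays.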

Perplexity AI Integration

Web search: AI-powered search with citations (perplexity tool)

Reasoning: Complex analysis with step-by-step reasoning (perplexity_reason tool)

Requires PERPLEXITY_API_KEY environment variable

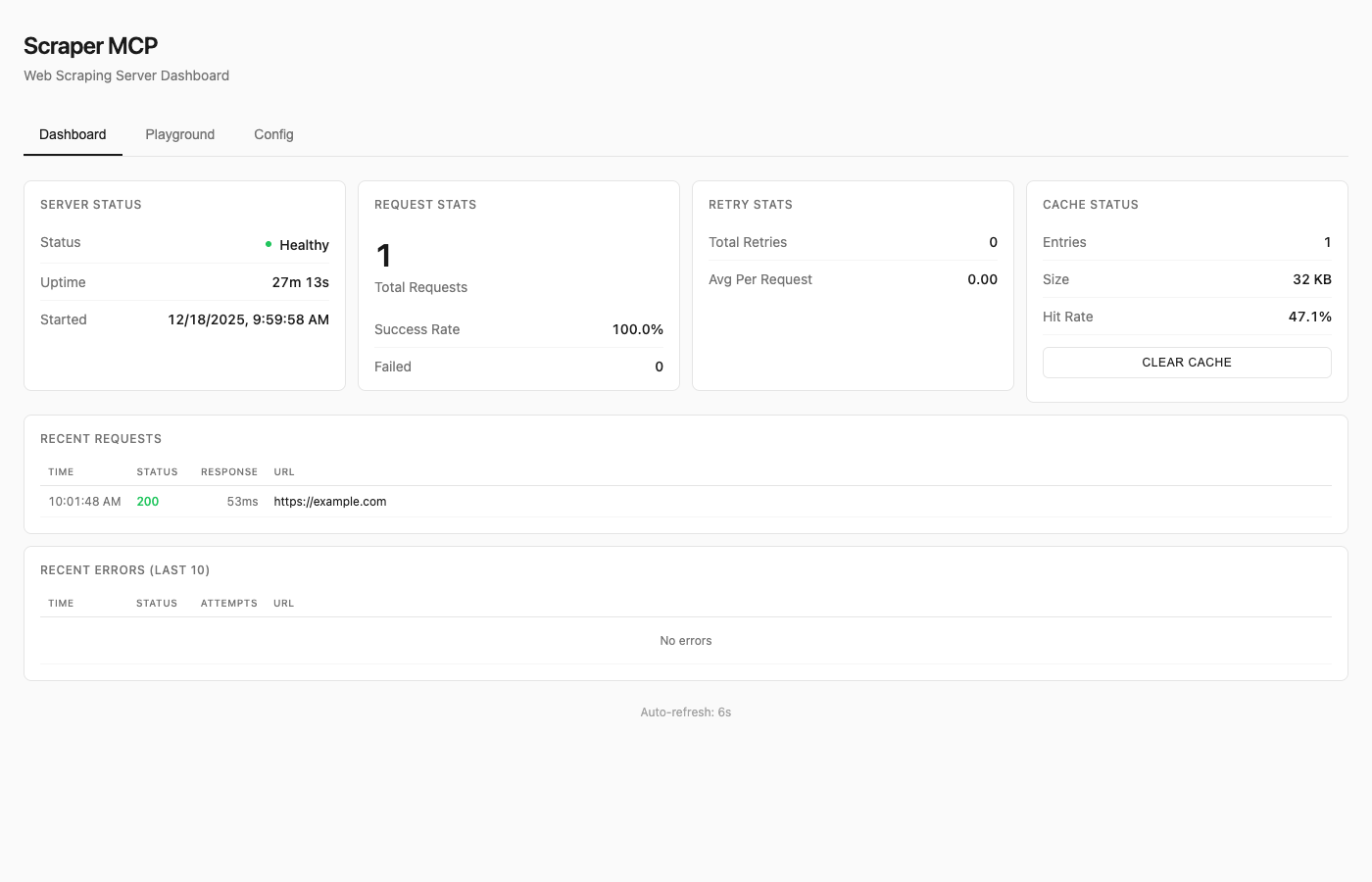

Monitoring Dashboard

Real-time request statistics and cache metrics

Interactive API playground for testing tools

Runtime configuration without restarts

See Dashboard Guide for details.

Available Tools

| Tool | Description |
| --- | --- |
| | HTML converted to markdown (best for LLMs) |
| | Raw HTML content |
| | Plain text extraction |
| | Extract all links with metadata |
| perplexity | AI web search with citations |
| perplexity_reason | Complex reasoning tasks |

All tools support:

Single URL or batch operations (pass array)

timeout and max_retries parameters

css_selector for targeted extraction

render_js for JavaScript rendering (SPAs, dynamic content)
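As a rough illustration of how these shared options fit together, the sketch below builds a standard MCP `tools/call` JSON-RPC request for a batch of two URLs. The tool name `scrape_url`, the `urls` argument name, and the timeout being in seconds are assumptions made for the example; only `css_selector`, `render_js`, `timeout`, and `max_retries` come from the list above.

```python
import json


def build_tool_call(tool_name, urls, request_id=1, **options):
    """Build an MCP `tools/call` JSON-RPC request for a scraping tool."""
    arguments = {"urls": urls}  # "urls" is a hypothetical parameter name
    # Only send options that were explicitly set.
    arguments.update({k: v for k, v in options.items() if v is not None})
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


request = build_tool_call(
    "scrape_url",  # hypothetical tool name
    ["https://blog.example.com/post", "https://blog.example.com/about"],
    css_selector=".article-content",
    render_js=False,
    timeout=30,  # assumed to be seconds
    max_retries=3,
)
print(json.dumps(request, indent=2))
```

The resulting payload would be sent to the MCP endpoint by an MCP client; check the API Reference for the real tool and parameter names before relying on it.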

Resources

Note: Resources are disabled by default to reduce context overhead. Enable with the --enable-resources flag or the ENABLE_RESOURCES=true environment variable.

MCP resources provide read-only data access via URI-based addressing:

| URI | Description |
| --- | --- |
| | Cache hit rate, size, entry counts |
| | List of recent request IDs |
| | Retrieve cached result by ID |
| | Current runtime configuration |
| | Timeout, retries, concurrency |
| | Version, uptime, capabilities |
| | Request counts, success rates |

Prompts

Note: Prompts are disabled by default to reduce context overhead. Enable with the --enable-prompts flag or the ENABLE_PROMPTS=true environment variable.
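The two opt-in switches (--enable-resources / ENABLE_RESOURCES and --enable-prompts / ENABLE_PROMPTS) could be resolved roughly as follows. This is a sketch, not the server's startup code: the helper names, the accepted truthy spellings, and the rule that either the flag or the environment variable is enough are assumptions.

```python
import argparse


def env_flag(name, environ):
    """Treat "true", "1" and "yes" (any case) as enabled; accepted spellings are assumed."""
    return environ.get(name, "").strip().lower() in {"true", "1", "yes"}


def resolve_settings(argv, environ):
    """Combine CLI flags and environment variables; both features default to off."""
    parser = argparse.ArgumentParser()
    parser.add_argument("--enable-resources", action="store_true")
    parser.add_argument("--enable-prompts", action="store_true")
    args = parser.parse_args(argv)
    return {
        "resources": args.enable_resources or env_flag("ENABLE_RESOURCES", environ),
        "prompts": args.enable_prompts or env_flag("ENABLE_PROMPTS", environ),
    }


print(resolve_settings(["--enable-resources"], {"ENABLE_PROMPTS": "true"}))
```

In a real entry point the arguments would typically be `sys.argv[1:]` and `os.environ`; passing them in explicitly keeps the function easy to test.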

MCP prompts provide reusable workflow templates:

| Prompt | Description |
| --- | --- |
| | Structured webpage analysis |
| | Generate content summaries |
| | Extract specific data types |
| | Comprehensive SEO check |
| | Analyze internal/external links |
| | Multi-source research |
| | Verify claims across sources |

See API Reference for complete documentation.

JavaScript Rendering

For SPAs (React, Vue, Angular) and pages with dynamic content, enable JavaScript rendering with the render_js parameter.

When to use:

Single-page applications (SPAs) - React, Vue, Angular, etc.

Sites with lazy-loaded content

Pages requiring JavaScript execution

Dynamic content loaded via AJAX/fetch

When NOT needed:

Static HTML pages (most blogs, news sites, documentation)

Server-rendered content

Simple websites without JavaScript dependencies

How it works:

Uses Playwright with headless Chromium

Single browser instance with pooled contexts (~300MB base + 10-20MB per context)

Lazy initialization (browser only starts when first JS render is requested)

Semaphore-controlled concurrency (default: 5 concurrent contexts)
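The lazy start and the semaphore cap described above can be sketched with asyncio alone. `FakeBrowser` and `RenderPool` are hypothetical names, and the fake browser only sleeps; a real implementation would launch Chromium through Playwright and open one browser context per render.

```python
import asyncio


class FakeBrowser:
    """Stand-in for a headless browser; a real one would come from Playwright."""

    async def render(self, url):
        await asyncio.sleep(0.01)  # simulate page load
        return f"<html>rendered {url}</html>"


class RenderPool:
    """Lazily starts one browser and caps concurrent renders with a semaphore."""

    def __init__(self, max_contexts=5):
        self._semaphore = asyncio.Semaphore(max_contexts)
        self._start_lock = asyncio.Lock()
        self._browser = None
        self.launches = 0  # how many times the browser was started
        self.active = 0    # renders currently in flight
        self.peak = 0      # highest number of simultaneous renders seen

    async def _get_browser(self):
        # Lazy initialization: launch only when the first render is requested.
        async with self._start_lock:
            if self._browser is None:
                self._browser = FakeBrowser()
                self.launches += 1
        return self._browser

    async def render(self, url):
        async with self._semaphore:  # waits while max_contexts renders are running
            browser = await self._get_browser()
            self.active += 1
            self.peak = max(self.peak, self.active)
            try:
                return await browser.render(url)
            finally:
                self.active -= 1


async def main():
    pool = RenderPool(max_contexts=5)
    urls = [f"https://example.com/page/{i}" for i in range(12)]
    pages = await asyncio.gather(*(pool.render(u) for u in urls))
    return pool, pages


pool, pages = asyncio.run(main())
print(pool.launches, pool.peak, len(pages))
```

Twelve renders are requested at once, yet the browser is started a single time and the number of simultaneous renders never exceeds the limit of five.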

Memory considerations:

Base requests provider: ~50MB

With Playwright active: ~300-500MB depending on concurrent contexts

Recommended minimum: 1GB of container memory when using JS rendering
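As a back-of-the-envelope check, the figures quoted above (~300MB base plus 10-20MB per context) can be combined into a rough estimate. The function name is hypothetical and the result ignores the scraper process itself and any page-specific overhead.

```python
def estimate_playwright_memory_mb(contexts, base_mb=300, per_context_mb=(10, 20)):
    """Return a (low, high) memory estimate in MB for a given number of contexts."""
    low, high = per_context_mb
    return base_mb + contexts * low, base_mb + contexts * high


low, high = estimate_playwright_memory_mb(5)  # default concurrency of 5 contexts
print(f"{low}-{high} MB")  # 350-400 MB
```

At the default of five concurrent contexts the estimate lands inside the ~300-500MB range above, which leaves headroom within a 1GB container.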

Testing JS rendering:

Use the dashboard playground at http://localhost:8000/ to test JavaScript rendering interactively with the toggle switch.

Docker Deployment

Quick Run

Docker Compose

For persistent storage and custom configuration:

Production deployment (pre-built image from GHCR):

Upgrading

To upgrade an existing deployment to the latest version:

Your cache data persists in the named volume across upgrades.

Available Tags

| Tag | Description |
| --- | --- |
| | Latest stable release |
| | Latest build from main branch |
| | Specific version |

Configuration

Create a .env file for custom settings:

See Configuration Guide for all options.

Claude Desktop

Add to your MCP settings:

Claude Code Skills

This project includes Agent Skills that provide Claude Code with specialized knowledge for using the scraper tools effectively.

| Skill | Description |
| --- | --- |
| | CSS selectors, batch operations, retry configuration |
| | AI search, reasoning tasks, conversation patterns |

Install Skills

Copy the skills to your Claude Code skills directory:

Or install directly:

Once installed, Claude Code will automatically use these skills when performing web scraping or Perplexity AI tasks.

Documentation

| Document | Description |
| --- | --- |
| API Reference | Complete tool documentation, parameters, CSS selectors |
| Configuration Guide | Environment variables, proxy setup, ScrapeOps |
| Dashboard Guide | Monitoring UI, playground, runtime config |
| Development Guide | Local setup, architecture, contributing |
| | Test suite, coverage, adding tests |

Local Development

See Development Guide for details.

License

MIT License

Last updated: December 23, 2025