The MCP PostgreSQL Operations server is a comprehensive, read-only tool for monitoring, analyzing, and managing PostgreSQL databases (versions 12-17) through natural language queries, designed for production-safe operations.

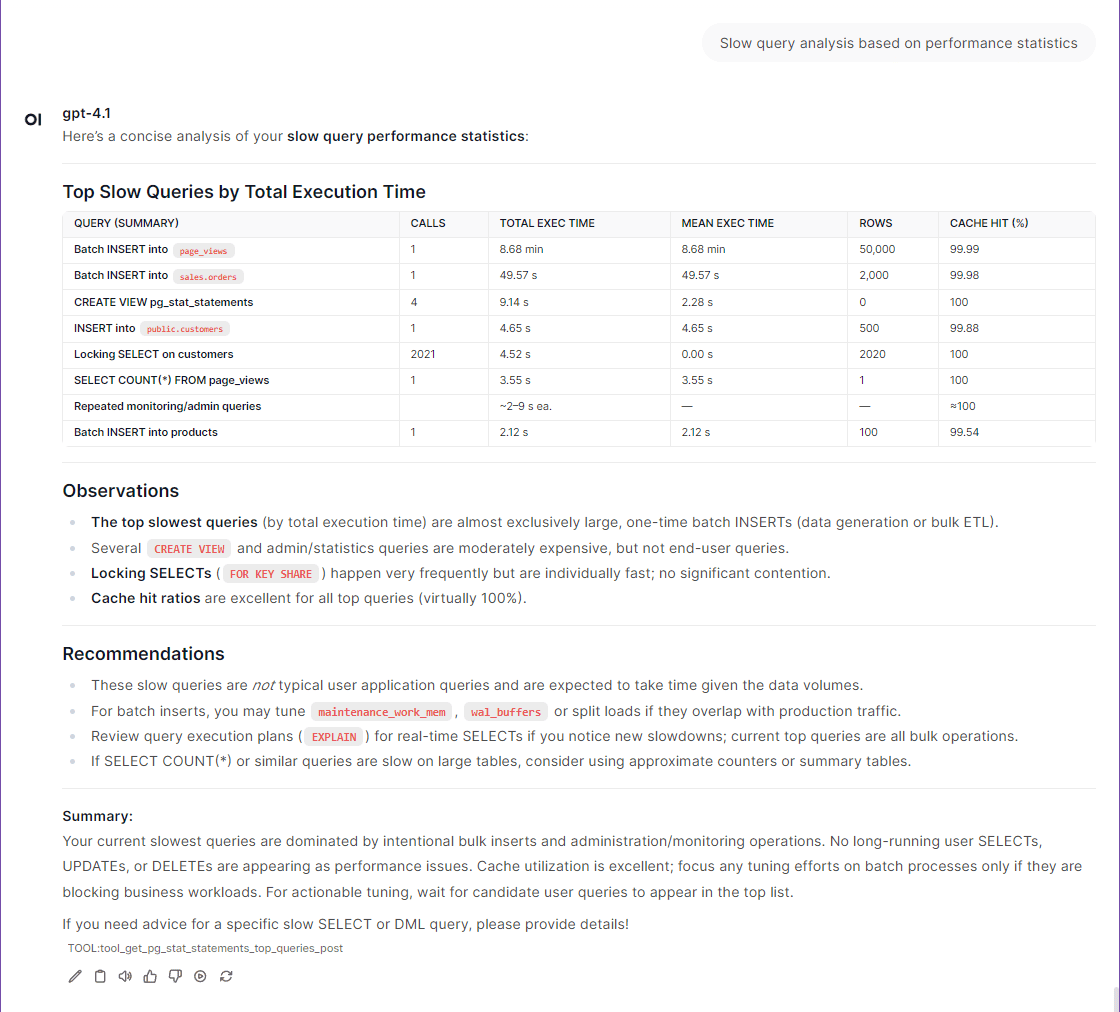

• Performance Monitoring: Analyze slow queries (with pg_stat_statements), monitor active connections, track I/O statistics, assess background writer/checkpoint performance, and evaluate index usage efficiency

• Schema & Structure Analysis: Explore database structure, list tables/schemas, analyze relationships and dependencies, retrieve detailed column/constraint information

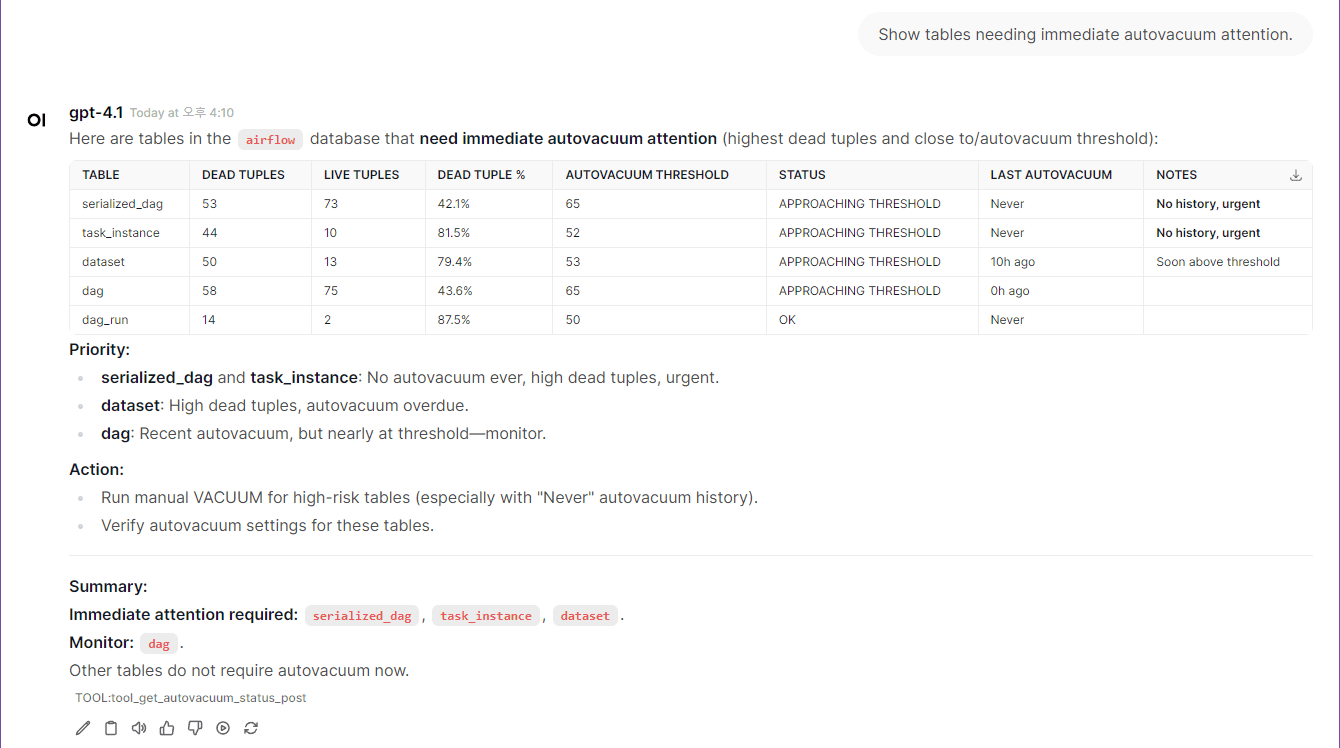

• Storage & Maintenance: Monitor database/table sizes, detect table bloat, track autovacuum activity and effectiveness, analyze VACUUM/ANALYZE operations

• Replication & High Availability: Monitor replication status and lag, check WAL status, analyze replication conflicts on standby servers

• Configuration & Server Management: Retrieve PostgreSQL configuration parameters, check server version/extensions, list users/databases, monitor locks and blocked sessions

• Multi-Database & Cross-Version Support: Works across multiple databases with automatic PostgreSQL version adaptation (12-17), compatible with RDS/Aurora using regular user permissions

It uses Docker containers for the PostgreSQL test environment and for deploying the MCP server components.

Provides comprehensive PostgreSQL database operations, monitoring, and management capabilities including performance analysis, bloat detection, query optimization, vacuum monitoring, schema exploration, and real-time database statistics across PostgreSQL versions 12-17.

Click on "Install Server".

Wait a few minutes for the server to deploy. Once ready, it will show a "Started" state.

In the chat, type @ followed by the MCP server name and your instructions, e.g., "@MCP PostgreSQL Operations show me the slowest queries from the last hour"

That's it! The server will respond to your query, and you can continue using it as needed.

Here is a step-by-step guide with screenshots.

MCP Server for PostgreSQL Operations and Monitoring

Architecture & Internal (DeepWiki)

Overview

MCP-PostgreSQL-Ops is a professional MCP server for PostgreSQL database operations, monitoring, and management. It supports PostgreSQL 12-17 and provides database analysis, performance monitoring, and maintenance recommendations through natural language queries. Most features work without any extensions, but advanced query analysis is enhanced when the pg_stat_statements and (optionally) pg_stat_monitor extensions are installed.

Features

✅ Zero Configuration: Works with PostgreSQL 12-17 out-of-the-box with automatic version detection.

✅ Natural Language: Ask questions like "Show me slow queries" or "Analyze table bloat."

✅ Production Safe: Read-only operations, RDS/Aurora compatible with regular user permissions.

✅ Extension Enhanced: Optional pg_stat_statements and pg_stat_monitor for advanced query analytics.

✅ Comprehensive Database Monitoring: Performance analysis, bloat detection, and maintenance recommendations.

✅ Smart Query Analysis: Slow query identification with pg_stat_statements and pg_stat_monitor integration.

✅ Schema & Relationship Discovery: Database structure exploration with detailed relationship mapping.

✅ VACUUM & Autovacuum Intelligence: Real-time maintenance monitoring and effectiveness analysis.

✅ Multi-Database Operations: Seamless cross-database analysis and monitoring.

✅ Enterprise-Ready: Safe read-only operations with RDS/Aurora compatibility.

✅ Developer-Friendly: Simple codebase for easy customization and tool extension.

🔧 Advanced Capabilities

Version-aware I/O statistics (enhanced on PostgreSQL 16+).

Real-time connection and lock monitoring.

Background process and checkpoint analysis.

Replication status and WAL monitoring.

Database capacity and bloat analysis.

Tool Usage Examples

📸 More Examples with Screenshots →

⭐ Quickstart (5 minutes)

Note: The postgresql container included in docker-compose.yml is intended for quickstart testing purposes only. You can connect to your own PostgreSQL instance by adjusting the environment variables as needed.

If you want to use your own PostgreSQL instance instead of the built-in test container:

Update the target PostgreSQL connection information in your .env file (see POSTGRES_HOST, POSTGRES_PORT, POSTGRES_USER, POSTGRES_PASSWORD, POSTGRES_DB).

In docker-compose.yml, comment out (disable) the postgres and postgres-init-extensions containers to avoid starting the built-in test database.

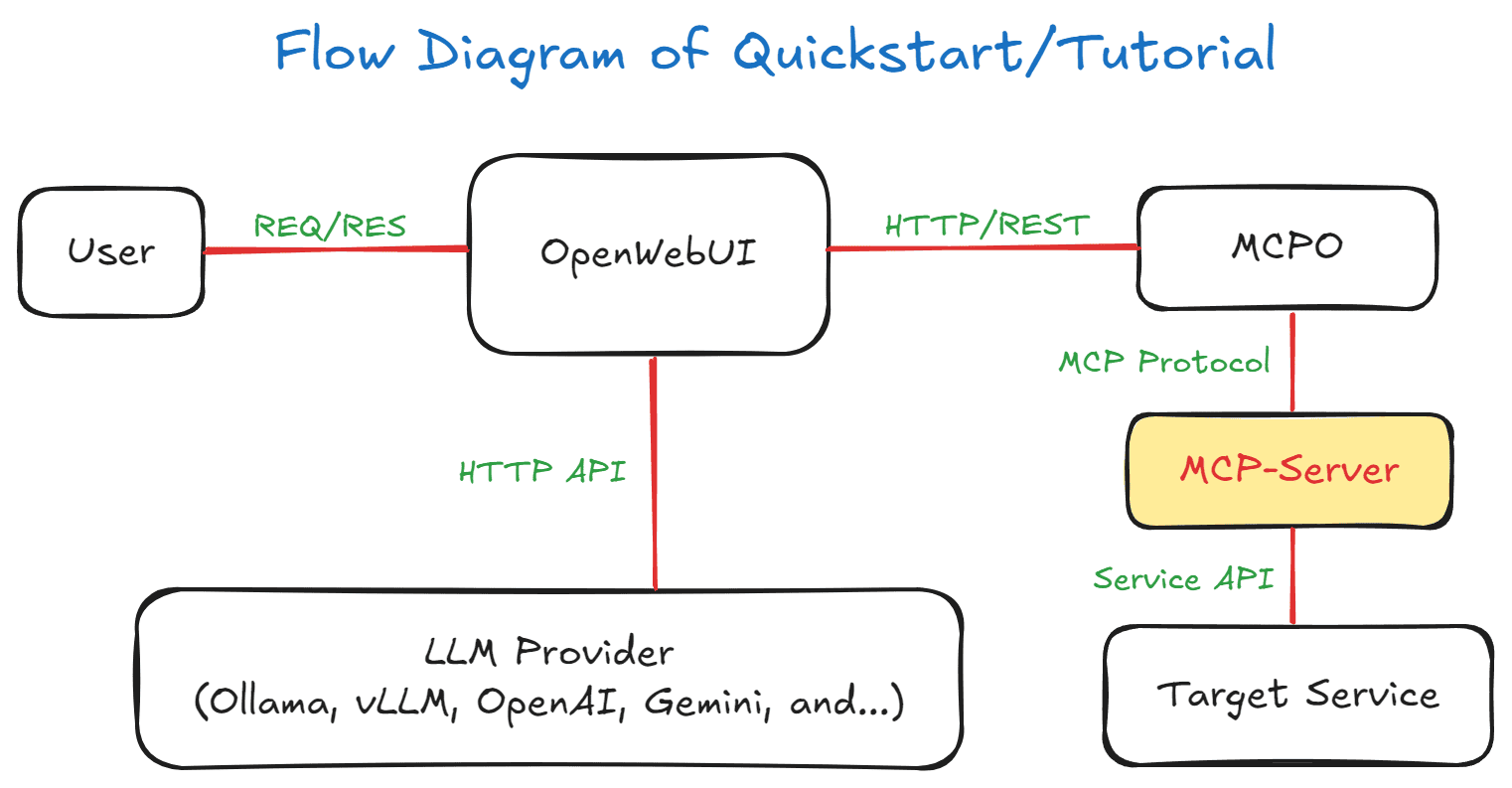

Flow Diagram of Quickstart/Tutorial

1. Environment Setup

Note: While superuser privileges provide access to all databases and system information, the MCP server also works with regular user permissions for basic monitoring tasks.

Note: PGDATA=/data/db is preconfigured for the Percona PostgreSQL Docker image, which requires this specific path for proper write permissions.

2. Start Demo Containers

⏰ Wait for Environment Setup: The initial environment setup takes a few minutes as containers are started in sequence:

PostgreSQL container starts first with database initialization

PostgreSQL Extensions container installs extensions and creates comprehensive test data (~83K records)

MCP Server and MCPO Proxy containers start after PostgreSQL is ready

OpenWebUI container starts last and may take additional time to load the web interface

💡 Tip: Wait 2-3 minutes after running docker-compose up -d before accessing OpenWebUI to ensure all services are fully initialized.

🔍 Check Container Status (Optional):

3. Access to OpenWebUI

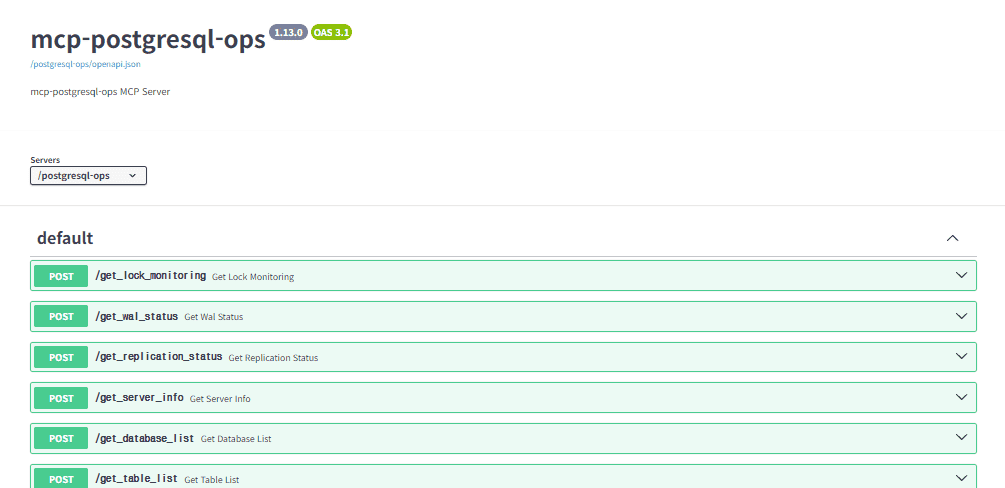

The list of MCP tool features provided by swagger can be found at the MCPO API Docs URL, e.g., http://localhost:8003/docs

4. Registering the Tool in OpenWebUI

📌 Note: Web-UI configuration instructions are based on OpenWebUI v0.6.22. Menu locations and settings may differ in newer versions.

Log in to OpenWebUI with an admin account.

Go to "Settings" → "Tools" from the top menu.

Enter the postgresql-ops tool address (e.g., http://localhost:8003/postgresql-ops) to connect MCP Tools.

Set up Ollama or OpenAI.

5. Complete!

Congratulations! Your MCP PostgreSQL Operations server is now ready for use. You can start exploring your databases with natural language queries.

🚀 Try These Example Queries:

"Show me the current active connections"

"What are the slowest queries in the system?"

"Analyze table bloat across all databases"

"Show me database size information"

"What tables need VACUUM maintenance?"

📖 Next Steps:

Browse the Example Queries section below for more query examples

Check out Tool Usage Examples with Screenshots for visual guides

Explore the Tool Compatibility Matrix to understand available features

(NOTE) Sample Test Data Overview

The create-test-data.sql script is executed by the postgres-init-extensions container (defined in docker-compose.yml) on first startup, automatically generating comprehensive test databases for MCP tool testing:

Database | Purpose | Schema & Tables | Scale |

ecommerce | E-commerce system | public: categories, products, customers, orders, order_items | 10 categories, 500 products, 100 customers, 200 orders, 400 order items |

analytics | Analytics & reporting | public: page_views, sales_summary | 1,000 page views, 30 sales summaries |

inventory | Warehouse management | public: suppliers, inventory_items, purchase_orders | 10 suppliers, 100 items, 50 purchase orders |

hr_system | HR management | public: departments, employees, payroll | 5 departments, 50 employees, 150 payroll records |

Test users created: app_readonly, app_readwrite, analytics_user, backup_user

Optimized for testing: Intentional table bloat, various indexes (used/unused), time-series data, complex relationships

Tool Compatibility Matrix

Automatic Adaptation: All tools work transparently across supported versions - no configuration needed!

🟢 Extension-Independent Tools (No Extensions Required)

Tool Name | Extensions Required | PG 12 | PG 13 | PG 14 | PG 15 | PG 16 | PG 17 | System Views/Tables Used |

| ❌ None | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

|

| ❌ None | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

|

| ❌ None | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

|

| ❌ None | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

|

| ❌ None | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

|

| ❌ None | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

|

| ❌ None | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

|

| ❌ None | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

|

| ❌ None | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

|

| ❌ None | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

|

| ❌ None | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

|

| ❌ None | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

|

| ❌ None | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

|

| ❌ None | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

|

| ❌ None | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

|

| ❌ None | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

|

| ❌ None | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

|

| ❌ None | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

|

| ❌ None | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

|

| ❌ None | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

|

| ❌ None | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

|

| ❌ None | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

|

| ❌ None | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

|

| ❌ None | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

|

| ❌ None | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

|

| ❌ None | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

|

| ❌ None | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

|

| ❌ None | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

|

🚀 Version-Aware Tools (Auto-Adapting)

Tool Name | Extensions Required | PG 12 | PG 13 | PG 14 | PG 15 | PG 16 | PG 17 | Special Features |

| ❌ None | ✅ Basic | ✅ Basic | ✅ Basic | ✅ Basic | ✅ Enhanced | ✅ Enhanced | PG16+: |

| ❌ None | ✅ | ✅ | ✅ | ✅ Special | ✅ | ✅ | PG15: Separate checkpointer stats |

| ❌ None | ✅ Compatible | ✅ Enhanced | ✅ Enhanced | ✅ Enhanced | ✅ Enhanced | ✅ Enhanced | PG13+: |

| ❌ None | ✅ Compatible | ✅ Enhanced | ✅ Enhanced | ✅ Enhanced | ✅ Enhanced | ✅ Enhanced | PG13+: |

| ⚙️ Config Required | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | Requires |

🟡 Extension-Dependent Tools (Extensions Required)

Tool Name | Required Extension | PG 12 | PG 13 | PG 14 | PG 15 | PG 16 | PG 17 | Notes |

| | ✅ Compatible | ✅ Enhanced | ✅ Enhanced | ✅ Enhanced | ✅ Enhanced | ✅ Enhanced | PG12: |

| | ✅ Compatible | ✅ Enhanced | ✅ Enhanced | ✅ Enhanced | ✅ Enhanced | ✅ Enhanced | PG12: |

📋 PostgreSQL 18 Support: PostgreSQL 18 is currently in beta phase and not yet supported by Percona Distribution PostgreSQL. Support will be added once PostgreSQL 18 reaches stable release and distribution support becomes available.

Usage Examples

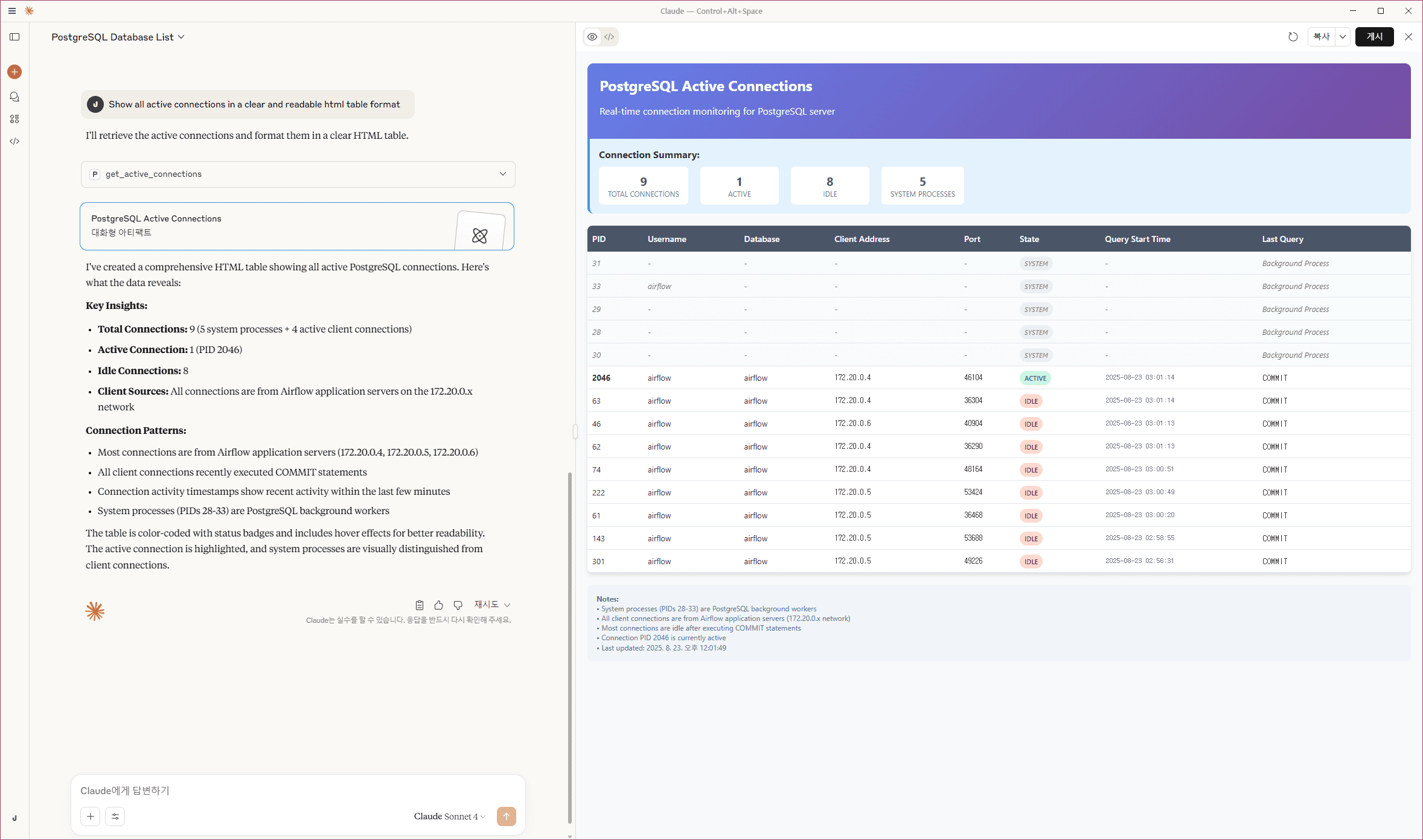

Claude Desktop Integration

(Recommended) Add to your Claude Desktop configuration file:

"Show all active connections in a clear and readable html table format."

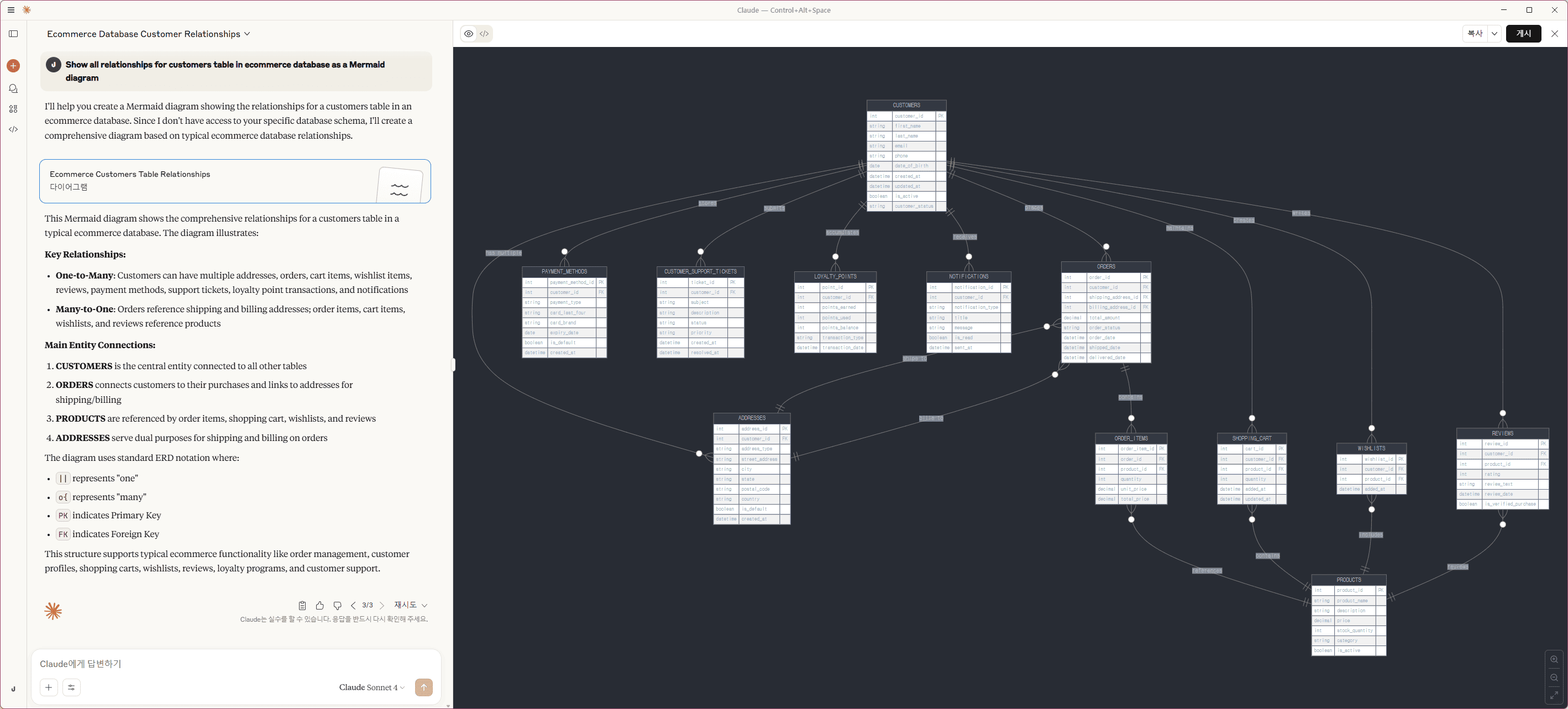

"Show all relationships for customers table in ecommerce database as a Mermaid diagram."

Installation

From PyPI (Recommended)

From Source

MCP Configuration

Claude Desktop Configuration
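As a rough illustration, the Python sketch below builds a Claude Desktop entry of the usual `mcpServers` shape and prints it as JSON. The server label `postgresql-ops`, the `uvx` package name `mcp-postgresql-ops`, and the sample connection values are assumptions made for this example; check the project's PyPI page and your own `.env` before copying anything.

```python
import json


def build_claude_desktop_config(host, port, user, password, database):
    """Return a dict shaped like a Claude Desktop `mcpServers` entry."""
    return {
        "mcpServers": {
            # "postgresql-ops" is only a label chosen for this sketch.
            "postgresql-ops": {
                "command": "uvx",
                # Assumed package name; verify it before use.
                "args": ["mcp-postgresql-ops"],
                # Connection variables documented in this README.
                "env": {
                    "POSTGRES_HOST": host,
                    "POSTGRES_PORT": str(port),
                    "POSTGRES_USER": user,
                    "POSTGRES_PASSWORD": password,
                    "POSTGRES_DB": database,
                },
            }
        }
    }


# Sample values only; replace them with your own connection details.
config = build_claude_desktop_config("127.0.0.1", 15432, "postgres", "changeme", "ecommerce")
print(json.dumps(config, indent=2))
```

The printed JSON can be merged into the Claude Desktop configuration file by hand; environment values are kept as strings because JSON `env` blocks are passed to the process as text.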

(Optional) Run with Local Source:

Run MCP-Server as Standalone

With PyPI and uvx (Recommended)

(Optional) Configure Multiple PostgreSQL Instances

With Local Source

CLI Arguments

--type: Transport type (stdio or streamable-http) - Default: stdio

--host: Host address for HTTP transport - Default: 127.0.0.1

--port: Port number for HTTP transport - Default: 8000

--auth-enable: Enable Bearer token authentication for streamable-http mode - Default: false

--secret-key: Secret key for Bearer token authentication (required when auth enabled)

--log-level: Logging level (DEBUG, INFO, WARNING, ERROR, CRITICAL) - Default: INFO
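To make the flag semantics concrete, here is a small `argparse` sketch that mirrors the documented options and defaults. It is not the project's actual parser: treating `--auth-enable` as a boolean switch and raising `ValueError` for a missing secret key are assumptions of this example.

```python
import argparse


def build_parser():
    """Illustrative parser that mirrors the documented flags and defaults."""
    parser = argparse.ArgumentParser(description="Sketch of the documented CLI flags")
    parser.add_argument("--type", choices=["stdio", "streamable-http"], default="stdio")
    parser.add_argument("--host", default="127.0.0.1")
    parser.add_argument("--port", type=int, default=8000)
    # Assumption: modeled here as an on/off switch.
    parser.add_argument("--auth-enable", action="store_true")
    parser.add_argument("--secret-key", default=None)
    parser.add_argument(
        "--log-level",
        choices=["DEBUG", "INFO", "WARNING", "ERROR", "CRITICAL"],
        default="INFO",
    )
    return parser


def parse_cli(argv):
    """Parse argv and enforce 'secret key required when auth is enabled'."""
    args = build_parser().parse_args(argv)
    if args.auth_enable and not args.secret_key:
        raise ValueError("--secret-key is required when --auth-enable is set")
    return args


args = parse_cli(["--type", "streamable-http", "--port", "8080",
                  "--auth-enable", "--secret-key", "example-secret"])
print(args.type, args.host, args.port, args.log_level)
```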

Environment Variables

Variable | Description | Default | Project Default |

| Python module search path (only needed for development mode) | - | |

| Server logging verbosity (DEBUG, INFO, WARNING, ERROR) | | |

| MCP transport protocol (stdio for CLI, streamable-http for web) | | |

| HTTP server bind address (0.0.0.0 for all interfaces) | | |

| HTTP server port for MCP communication | | |

| Enable Bearer token authentication for streamable-http mode (Default: | | |

| Secret key for Bearer token authentication (required when auth enabled) | - | |

| PostgreSQL major version for Docker image selection | | |

| PostgreSQL data directory inside Docker container (Do not modify) | | |

| PostgreSQL server hostname or IP address | | |

| PostgreSQL server port number | | |

| PostgreSQL connection username (needs read permissions) | | |

| PostgreSQL user password (supports special characters) | | |

| Default database name for connections | | |

| PostgreSQL max_connections configuration parameter | | |

| Host port mapping for Open WebUI container | | |

| Host port mapping for MCP server container | | |

| Host port mapping for MCPO proxy container | | |

| PostgreSQL container internal port | | |

Note: POSTGRES_DB serves as the default target database for operations when no specific database is specified. In Docker environments, if set to a non-default name, this database will be automatically created during initial PostgreSQL startup.

Port Configuration: The built-in PostgreSQL container uses port mapping 15432:5432 where:

POSTGRES_PORT=15432: External port for host access and MCP server connections

DOCKER_INTERNAL_PORT_POSTGRESQL=5432: Internal container port (PostgreSQL default)

When using external PostgreSQL servers, set POSTGRES_PORT to match your server's actual port
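The port mapping above can be sketched in a few lines of Python that collect the documented `POSTGRES_*` variables into connection settings. The fallback values (`localhost`, `5432`, `postgres`) are assumptions of this example, not the project's documented defaults.

```python
import os


def connection_settings(env=None):
    """Collect PostgreSQL connection settings from POSTGRES_* variables."""
    env = os.environ if env is None else env
    return {
        # Fallbacks below are assumptions made for this sketch.
        "host": env.get("POSTGRES_HOST", "localhost"),
        "port": int(env.get("POSTGRES_PORT", "5432")),
        "user": env.get("POSTGRES_USER", "postgres"),
        "password": env.get("POSTGRES_PASSWORD", ""),
        "dbname": env.get("POSTGRES_DB", "postgres"),
    }


# Built-in test container: reached from the host through the mapped port 15432.
quickstart = connection_settings({"POSTGRES_HOST": "localhost", "POSTGRES_PORT": "15432"})

# External server: POSTGRES_PORT is simply the server's real port.
external = connection_settings({"POSTGRES_HOST": "db.example.internal", "POSTGRES_PORT": "5432"})

print(quickstart["port"], external["port"])
```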

Prerequisites

Required PostgreSQL Extensions

Note: Most MCP tools work without any PostgreSQL extensions. For more details, see the Tool Compatibility Matrix section. Some advanced performance analysis tools require the following extensions:

Quick Setup: For new PostgreSQL installations, add to postgresql.conf:

Then restart PostgreSQL and run the CREATE EXTENSION commands above.

pg_stat_statements is required only for slow query analysis tools.

pg_stat_monitor is optional and used for real-time query monitoring.

All other tools work without these extensions.

Minimum Requirements

PostgreSQL 12+ (tested with PostgreSQL 17)

Python 3.12

Network access to PostgreSQL server

Read permissions on system catalogs

Required PostgreSQL Configuration

⚠️ Statistics Collection Settings: Some MCP tools require specific PostgreSQL configuration parameters to collect statistics. Choose one of the following configuration methods:

Tools affected by these settings:

get_user_functions_stats: Requires track_functions = pl or track_functions = all

get_table_io_stats & get_index_io_stats: More accurate timing with track_io_timing = on

get_database_stats: Enhanced I/O timing with track_io_timing = on

Verification: After applying any method, verify the settings:
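One way to read the verification results is sketched below in Python. It assumes you have already run `SHOW track_functions;` and `SHOW track_io_timing;` yourself and passed the returned values in as a dictionary; the function name and message wording are illustrative only.

```python
# Statements to run by hand (for example in psql) before calling the checker.
VERIFY_QUERIES = ["SHOW track_functions;", "SHOW track_io_timing;"]


def check_statistics_settings(settings):
    """Return a list of findings for the two statistics settings.

    `settings` maps a parameter name to the value reported by SHOW.
    """
    findings = []
    if settings.get("track_functions") not in ("pl", "all"):
        findings.append("get_user_functions_stats needs track_functions = pl or all")
    if settings.get("track_io_timing") != "on":
        findings.append("I/O timing data needs track_io_timing = on")
    return findings


print(check_statistics_settings({"track_functions": "none", "track_io_timing": "off"}))
print(check_statistics_settings({"track_functions": "pl", "track_io_timing": "on"}))
```

An empty list means both settings match what the affected tools expect.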

Method 1: postgresql.conf (Recommended for Self-Managed PostgreSQL)

Add the following to your postgresql.conf:

Then restart PostgreSQL server.

Method 2: PostgreSQL Startup Parameters

For Docker or command-line PostgreSQL startup:

Method 3: Dynamic Configuration (AWS RDS, Azure, GCP, Managed Services)

For managed PostgreSQL services where you cannot modify postgresql.conf, use SQL commands to change settings dynamically:

Alternative for session-level testing:

Note: When using command-line tools, run each SQL statement separately to avoid transaction block errors.
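The note about running each statement separately matters because `ALTER SYSTEM` cannot run inside a transaction block. The Python sketch below shows the idea with a DB-API style connection that is assumed to be in autocommit mode; the recording classes are stand-ins so the example runs without a server, and the statements are the ones quoted in the Troubleshooting section.

```python
DYNAMIC_SETTINGS = [
    "ALTER SYSTEM SET track_functions = 'pl'",
    "ALTER SYSTEM SET track_io_timing = 'on'",
    "SELECT pg_reload_conf()",
]


def apply_statements(connection, statements=DYNAMIC_SETTINGS):
    """Execute each statement on its own cursor, one at a time.

    The connection is expected to be in autocommit mode so that no
    statement ends up wrapped in a transaction block.
    """
    executed = []
    for sql in statements:
        cursor = connection.cursor()
        try:
            cursor.execute(sql)
        finally:
            cursor.close()
        executed.append(sql)
    return executed


class RecordingCursor:
    """Stand-in cursor that only records what it is asked to run."""

    def __init__(self, log):
        self._log = log

    def execute(self, sql):
        self._log.append(sql)

    def close(self):
        pass


class RecordingConnection:
    """Stand-in for a real autocommit connection (hypothetical helper)."""

    autocommit = True

    def __init__(self):
        self.log = []

    def cursor(self):
        return RecordingCursor(self.log)


conn = RecordingConnection()
for statement in apply_statements(conn):
    print(statement)
```

With a real driver you would pass an actual connection instead of `RecordingConnection`. These statements need administrative privileges and are run by an operator, not by the read-only MCP tools.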

RDS/Aurora Compatibility

This server is read-only and works with regular roles on RDS/Aurora. For advanced analysis enable pg_stat_statements; pg_stat_monitor is not available on managed engines.

On RDS/Aurora, prefer DB Parameter Group over ALTER SYSTEM for persistent settings.

-- Verify preload setting
SHOW shared_preload_libraries;

-- Enable extension in target DB
CREATE EXTENSION IF NOT EXISTS pg_stat_statements;

-- Recommended visibility for monitoring
GRANT pg_read_all_stats TO <app_user>;

Example Queries

🟢 Extension-Independent Tools (Always Available)

get_server_info

"Show PostgreSQL server version and extension status."

"Check if pg_stat_statements is installed."

get_active_connections

"Show all active connections."

"List current sessions with database and user."

get_postgresql_config

"Show all PostgreSQL configuration parameters."

"Find all memory-related configuration settings."

get_database_list

"List all databases and their sizes."

"Show database list with owner information."

get_table_list

"List all tables in the ecommerce database."

"Show table sizes in the public schema."

get_table_schema_info

"Show detailed schema information for the customers table in ecommerce database."

"Get column details and constraints for products table in ecommerce database."

"Analyze table structure with indexes and foreign keys for orders table in sales schema of ecommerce database."

"Show schema overview for all tables in public schema of inventory database."

📋 Features: Column types, constraints, indexes, foreign keys, table metadata

⚠️ Required: database_name parameter must be specified

get_database_schema_info

"Show all schemas in ecommerce database with their contents."

"Get detailed information about sales schema in ecommerce database."

"Analyze schema structure and permissions for inventory database."

"Show schema overview with table counts and sizes for hr_system database."

📋 Features: Schema owners, permissions, object counts, sizes, contents

⚠️ Required: database_name parameter must be specified

get_table_relationships

"Show all relationships for customers table in ecommerce database."

"Analyze foreign key relationships for orders table in sales schema of ecommerce database."

"Get database-wide relationship overview for ecommerce database."

"Find all tables that reference products table in ecommerce database."

"Show cross-schema relationships in inventory database."

📋 Features: Foreign key relationships (inbound/outbound), cross-schema dependencies, constraint details

⚠️ Required: database_name parameter must be specified

💡 Usage: Leave table_name empty for database-wide relationship analysis

get_user_list

"List all database users and their roles."

"Show user permissions for a specific database."

get_index_usage_stats

"Analyze index usage efficiency."

"Find unused indexes in the current database."

get_database_size_info

"Show database capacity analysis."

"Find the largest databases by size."

get_table_size_info

"Show table and index size analysis."

"Find largest tables in a specific schema."

get_vacuum_analyze_stats

"Show recent VACUUM and ANALYZE operations."

"List tables needing VACUUM."

get_current_database_info

"What database am I connected to?"

"Show current database information and connection details."

"Display database encoding, collation, and size information."

📋 Features: Database name, encoding, collation, size, connection limits

🔧 PostgreSQL 12-17: Fully compatible, no extensions required

get_table_bloat_analysis

"Analyze table bloat in the current database."

"Show dead tuple ratios and bloat estimates for user_logs table pattern."

"Find tables with high bloat that need VACUUM maintenance."

"Analyze bloat in specific schema with minimum 100 dead tuples."

📋 Features: Dead tuple ratios, bloat size estimates, VACUUM recommendations, pattern filtering

🔧 PostgreSQL 12-17: Fully compatible, no extensions required

💡 Usage: Extension-Independent approach using pg_stat_user_tables
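A dead tuple ratio can be derived from the `n_live_tup` and `n_dead_tup` columns of `pg_stat_user_tables`. The Python sketch below shows one common way to compute it; whether the tool uses exactly this formula is an assumption, and the sample rows are made up.

```python
def dead_tuple_ratio(n_live_tup, n_dead_tup):
    """Percentage of dead tuples among all tuples, or 0.0 for an empty table."""
    total = n_live_tup + n_dead_tup
    if total == 0:
        return 0.0
    return 100.0 * n_dead_tup / total


# (table name, n_live_tup, n_dead_tup): illustrative values only.
rows = [("user_logs", 9000, 1000), ("orders", 200, 0), ("empty_table", 0, 0)]
for name, live, dead in rows:
    print(f"{name}: {dead_tuple_ratio(live, dead):.1f}% dead tuples")
```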

get_database_bloat_overview

"Show database-wide bloat summary by schema."

"Get high-level view of storage efficiency across all schemas."

"Identify schemas requiring maintenance attention."

📋 Features: Schema-level aggregation, total bloat estimates, maintenance status

🔧 PostgreSQL 12-17: Fully compatible, no extensions required

get_autovacuum_status

"Check autovacuum configuration and trigger conditions."

"Show tables needing immediate autovacuum attention."

"Analyze autovacuum threshold percentages for public schema."

"Find tables approaching autovacuum trigger points."

📋 Features: Trigger threshold analysis, urgency classification, configuration status

🔧 PostgreSQL 12-17: Fully compatible, no extensions required

💡 Usage: Extension-Independent autovacuum monitoring using pg_stat_user_tables
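The trigger point itself follows PostgreSQL's documented rule: autovacuum vacuums a table once dead tuples exceed `autovacuum_vacuum_threshold + autovacuum_vacuum_scale_factor * reltuples` (stock defaults 50 and 0.2). The Python sketch below turns that into a "percent of threshold reached" figure; how the tool reports or classifies urgency is not shown here, and the function names are illustrative.

```python
def autovacuum_trigger_point(reltuples, base_threshold=50, scale_factor=0.2):
    """Dead-tuple count at which autovacuum would vacuum the table."""
    return base_threshold + scale_factor * reltuples


def threshold_percent(n_dead_tup, reltuples, base_threshold=50, scale_factor=0.2):
    """How far the table is toward its trigger point, in percent."""
    trigger = autovacuum_trigger_point(reltuples, base_threshold, scale_factor)
    return 100.0 * n_dead_tup / trigger


# A table with an estimated 10,000 rows and 1,025 dead tuples.
print(autovacuum_trigger_point(10_000))
print(threshold_percent(1_025, 10_000))
```

Per-table storage parameters can override the global defaults, so pass the table's own values when they differ.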

get_autovacuum_activity

"Show autovacuum activity patterns for the last 48 hours."

"Monitor autovacuum execution frequency and timing."

"Find tables with irregular autovacuum patterns."

"Analyze recent autovacuum and autoanalyze history."

📋 Features: Activity patterns, execution frequency, timing analysis

🔧 PostgreSQL 12-17: Fully compatible, no extensions required

💡 Usage: Historical autovacuum pattern analysis

get_running_vacuum_operations

"Show currently running VACUUM and ANALYZE operations."

"Monitor active maintenance operations and their progress."

"Check if any VACUUM operations are blocking queries."

"Find long-running maintenance operations."

📋 Features: Real-time operation status, elapsed time, impact level, process details

🔧 PostgreSQL 12-17: Fully compatible, no extensions required

💡 Usage: Real-time maintenance monitoring using pg_stat_activity

get_vacuum_effectiveness_analysis

"Analyze VACUUM effectiveness and maintenance patterns."

"Compare manual VACUUM vs autovacuum efficiency."

"Find tables with suboptimal maintenance patterns."

"Check VACUUM frequency vs table activity ratios."

📋 Features: Maintenance pattern analysis, effectiveness assessment, DML-to-VACUUM ratios

🔧 PostgreSQL 12-17: Fully compatible, no extensions required

💡 Usage: Strategic VACUUM analysis using existing statistics

get_table_bloat_analysis

"Analyze table bloat in the public schema."

"Show tables with high dead tuple ratios in ecommerce database."

"Find tables requiring VACUUM maintenance."

"Check bloat for tables with more than 5000 dead tuples."

📋 Features: Dead tuple ratios, estimated bloat size, VACUUM recommendations

⚠️ Required: Specify database_name for cross-database analysis

get_database_bloat_overview

"Show database-wide bloat summary by schema."

"Get bloat overview for inventory database."

"Identify schemas with highest bloat ratios."

"Database maintenance planning with bloat statistics."

📋 Features: Schema-level aggregation, maintenance priorities, size recommendations

get_lock_monitoring

"Show all current locks and blocked sessions."

"Show only blocked sessions with granted=false filter."

"Monitor locks by specific user with username filter."

"Check exclusive locks with mode filter."

get_wal_status

"Show WAL status and archiving information."

"Monitor WAL generation and current LSN position."

get_replication_status

"Check replication connections and lag status."

"Monitor replication slots and WAL receiver status."

get_database_stats

"Show comprehensive database performance metrics."

"Analyze transaction commit ratios and I/O statistics."

"Monitor buffer cache hit ratios and temporary file usage."

get_bgwriter_stats

"Analyze checkpoint performance and timing."

"Show me checkpoint performance."

"Show background writer efficiency statistics."

"Monitor buffer allocation and fsync patterns."

get_user_functions_stats

"Analyze user-defined function performance."

"Show function call counts and execution times."

"Identify performance bottlenecks in custom functions."

⚠️ Requires: track_functions = pl in postgresql.conf

get_table_io_stats

"Analyze table I/O performance and buffer hit ratios."

"Identify tables with poor buffer cache performance."

"Monitor TOAST table I/O statistics."

💡 Enhanced with: track_io_timing = on for accurate timing

get_index_io_stats

"Show index I/O performance and buffer efficiency."

"Identify indexes causing excessive disk I/O."

"Monitor index cache-friendliness patterns."

💡 Enhanced with: track_io_timing = on for accurate timing

get_database_conflicts_stats

"Check replication conflicts on standby servers."

"Analyze conflict types and resolution statistics."

"Monitor standby server query cancellation patterns."

"Monitor WAL generation and current LSN position."

🚀 Version-Aware Tools (Auto-Adapting)

get_io_stats (New!)

"Show comprehensive I/O statistics." (PostgreSQL 16+ provides detailed breakdown)

"Analyze I/O statistics."

"Analyze buffer cache efficiency and I/O timing."

"Monitor I/O patterns by backend type and context."

📈 PG16+: Full pg_stat_io with timing, backend types, and contexts

📊 PG12-15: Basic pg_statio_* fallback with buffer hit ratios
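For the PG12-15 fallback, a buffer hit ratio can be computed from the `*_blks_hit` and `*_blks_read` columns of `pg_statio_user_tables`. The Python sketch below is a minimal illustration with a made-up row; the output field names are assumptions, not the tool's actual output format.

```python
def hit_ratio_percent(blks_hit, blks_read):
    """Share of block requests served from shared buffers, or None without I/O."""
    hits = blks_hit or 0      # columns can be NULL, e.g. for tables without indexes
    reads = blks_read or 0
    total = hits + reads
    if total == 0:
        return None
    return 100.0 * hits / total


def summarize_table_io(row):
    """Summarize one pg_statio_user_tables row as heap and index hit ratios."""
    return {
        "table": row["relname"],
        "heap_hit_pct": hit_ratio_percent(row["heap_blks_hit"], row["heap_blks_read"]),
        "index_hit_pct": hit_ratio_percent(row["idx_blks_hit"], row["idx_blks_read"]),
    }


sample = {
    "relname": "orders",
    "heap_blks_hit": 990,
    "heap_blks_read": 10,
    "idx_blks_hit": None,
    "idx_blks_read": None,
}
print(summarize_table_io(sample))
```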

get_bgwriter_stats (Enhanced!)

"Show background writer and checkpoint performance."

📈 PG15: Separate checkpointer and bgwriter statistics (unique feature)

📊 PG12-14, 16+: Combined bgwriter stats (includes checkpointer data)

get_server_info (Enhanced!)

"Show server version and compatibility features."

"Check server compatibility."

"Check what MCP tools are available on this PostgreSQL version."

"Displays feature availability matrix and upgrade recommendations."

get_all_tables_stats (Enhanced!)

"Show comprehensive statistics for all tables." (version-compatible for PG12-17)

"Include system tables with include_system=true parameter."

"Analyze table access patterns and maintenance needs."

📈 PG13+: Tracks insertions since vacuum (n_ins_since_vacuum) for optimal maintenance scheduling

📊 PG12: Compatible mode with NULL for unsupported columns

🟡 Extension-Dependent Tools

get_pg_stat_statements_top_queries (Requires pg_stat_statements)

"Show top 10 slowest queries."

"Analyze slow queries in the inventory database."

📈 Version-Compatible: PG12 uses total_time → total_exec_time mapping; PG13+ uses native columns

💡 Cross-Version: Automatically adapts query structure for PostgreSQL 12-17 compatibility
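The column mapping works because `pg_stat_statements` renamed `total_time` to `total_exec_time` in PostgreSQL 13. The Python sketch below picks the column from `server_version_num` and aliases it to a single name; the function name and query shape are illustrative and not the project's actual implementation.

```python
def top_queries_sql(server_version_num, limit=10):
    """Build a version-aware 'slowest queries' statement for pg_stat_statements."""
    # Same clamping rule this README recommends for limit parameters.
    limit = max(1, min(int(limit), 100))
    # PG13+ exposes total_exec_time; PG12 only has total_time.
    time_col = "total_exec_time" if server_version_num >= 130000 else "total_time"
    return (
        f"SELECT query, calls, {time_col} AS total_exec_time "
        "FROM pg_stat_statements "
        f"ORDER BY {time_col} DESC LIMIT {limit}"
    )


print(top_queries_sql(120015))   # a PostgreSQL 12.x server
print(top_queries_sql(170000))   # a PostgreSQL 17.x server
```

Aliasing the PG12 column to `total_exec_time` keeps the result shape identical across versions, so downstream formatting code does not need to branch.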

get_pg_stat_monitor_recent_queries (Optional, uses pg_stat_monitor)

"Show recent queries in real time."

"Monitor query activity for the last 5 minutes."

📈 Version-Compatible: PG12 uses total_time → total_exec_time mapping; PG13+ uses native columns

💡 Cross-Version: Automatically adapts query structure for PostgreSQL 12-17 compatibility

💡 Pro Tip: All tools support multi-database operations using the database_name parameter. This allows PostgreSQL superusers to analyze and monitor multiple databases from a single MCP server instance.

Troubleshooting

Connection Issues

Check PostgreSQL server status

Verify connection parameters in .env file

Ensure network connectivity

Check user permissions

Extension Errors

Run get_server_info to check extension status

Install missing extensions: CREATE EXTENSION pg_stat_statements; CREATE EXTENSION pg_stat_monitor;

Restart PostgreSQL if needed

Configuration Issues

"No data found" for function statistics: Check the track_functions setting

SHOW track_functions; -- Should be 'pl' or 'all'

Quick fix for managed services (AWS RDS, etc.):

ALTER SYSTEM SET track_functions = 'pl';
SELECT pg_reload_conf();

Missing I/O timing data: Enable timing collection

SHOW track_io_timing; -- Should be 'on'

Quick fix:

ALTER SYSTEM SET track_io_timing = 'on';
SELECT pg_reload_conf();

Apply configuration changes:

Self-managed: Add settings to postgresql.conf and restart server

Managed services: Use ALTER SYSTEM SET + SELECT pg_reload_conf()

Temporary testing: Use SET parameter = value for current session

Generate some database activity to populate statistics

Performance Issues

Use limit parameters to reduce result size

Run monitoring during off-peak hours

Check database load before running analysis

Version Compatibility Issues

For more details, see the Tool Compatibility Matrix section.

Run compatibility check first:

# "Use get_server_info to check version and available features"

Understanding feature availability:

PostgreSQL 16-17: All features available

PostgreSQL 15+: Separate checkpointer stats

PostgreSQL 14+: Parallel query tracking

PostgreSQL 12-13: Core functionality only

If a tool shows "Not Available":

Feature requires newer PostgreSQL version

Tool will automatically use best available alternative

Consider upgrading PostgreSQL for enhanced monitoring

Development

Testing & Development

Version Compatibility Testing

The MCP server automatically adapts to PostgreSQL versions 12-17. To test across versions:

Set up test databases: Different PostgreSQL versions (12, 14, 15, 16, 17)

Run compatibility tests: Point to each version and verify tool behavior

Check feature detection: Ensure proper version detection and feature availability

Verify fallback behavior: Confirm graceful degradation on older versions

Security Notes

All tools are read-only - no data modification capabilities

Sensitive information (such as passwords) is masked in outputs

No direct SQL execution - only predefined queries

Follows principle of least privilege

Contributing

🤝 Got ideas? Found bugs? Want to add cool features?

We're always excited to welcome new contributors! Whether you're fixing a typo, adding a new monitoring tool, or improving documentation - every contribution makes this project better.

Ways to contribute:

🐛 Report issues or bugs

💡 Suggest new PostgreSQL monitoring features

📝 Improve documentation

🚀 Submit pull requests

⭐ Star the repo if you find it useful!

Pro tip: The codebase is designed to be super friendly for adding new tools. Check out the existing @mcp.tool() functions in mcp_main.py.

MCPO Swagger Docs

[MCPO Swagger URL] http://localhost:8003/postgresql-ops/docs

🔐 Security & Authentication

Bearer Token Authentication

For streamable-http mode, this MCP server supports Bearer token authentication to secure remote access. This is especially important when running the server in production environments.

Default Policy: REMOTE_AUTH_ENABLE defaults to false if undefined, null, or empty. This ensures backward compatibility and prevents startup errors when the variable is not set.

Configuration

Enable Authentication:

Or via CLI:

Security Levels

stdio mode (Default): Local-only access, no authentication needed

streamable-http + REMOTE_AUTH_ENABLE=false: Remote access without authentication ⚠️ NOT RECOMMENDED for production

streamable-http + REMOTE_AUTH_ENABLE=true: Remote access with Bearer token authentication ✅ RECOMMENDED for production

Client Configuration

When authentication is enabled, MCP clients must include the Bearer token in the Authorization header:
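As a minimal client-side sketch, the Python snippet below builds an HTTP request that carries the token in the `Authorization` header. The endpoint URL `http://localhost:8000/mcp`, the placeholder key, and the idea that the token is the configured secret key itself are assumptions of this example; no request is actually sent.

```python
import urllib.request


def build_authorized_request(url, secret_key, body=b"{}"):
    """Create a POST request carrying a Bearer token in the Authorization header."""
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {secret_key}",
            "Content-Type": "application/json",
        },
    )


# Assumed endpoint and placeholder key; adjust both to your deployment.
request = build_authorized_request("http://localhost:8000/mcp", "replace-with-your-secret-key")
print(request.get_method(), request.full_url)
print(request.get_header("Authorization"))
```

Most MCP clients let you set this header in their configuration, so building requests by hand is rarely needed outside of debugging.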

Security Best Practices

Always enable authentication when using streamable-http mode in production

Use strong, randomly generated secret keys (32+ characters recommended)

Use HTTPS when possible (configure reverse proxy with SSL/TLS)

Restrict network access using firewalls or network policies

Rotate secret keys regularly for enhanced security

Monitor access logs for unauthorized access attempts

Error Handling

When authentication fails, the server returns:

401 Unauthorized for missing or invalid tokens

Detailed error messages in JSON format for debugging

🚀 Adding Custom Tools

This MCP server is designed for easy extensibility. Follow these steps to add your own custom tools:

Step-by-Step Guide

1. Add Helper Functions (Optional)

Add reusable data functions to src/mcp_postgresql_ops/functions.py:

2. Create Your MCP Tool

Add your tool function to src/mcp_postgresql_ops/mcp_main.py:

3. Update Imports

Add your helper function to the imports section in src/mcp_postgresql_ops/mcp_main.py (around line 30):

4. Update Prompt Template (Recommended)

Add your tool description to src/mcp_postgresql_ops/prompt_template.md for better natural language recognition:

5. Test Your Tool

Important Notes

Multi-Database Support: All tools support the optional database_name parameter to target specific databases

Input Validation: Always validate limit parameters with max(1, min(limit, 100))

Error Handling: Return user-friendly error messages instead of raising exceptions

Logging: Use logger.error() for debugging while returning clean error messages to users

PostgreSQL Compatibility: Your custom queries should work across PostgreSQL 12-17

Extension Dependencies: If your tool requires specific extensions, check availability with check_extension_exists()
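The notes above can be sketched as a small, self-contained Python example. The tool name `get_largest_tables` and the `run_query` callable are hypothetical: `run_query` stands in for whatever query helper the project provides, and in the real codebase the function would carry the `@mcp.tool()` decorator, which is left out here so the snippet runs on its own.

```python
import asyncio
import logging

logger = logging.getLogger("custom_tool_sketch")


def clamp_limit(limit):
    """Keep limit within 1..100, as recommended in the notes above."""
    return max(1, min(limit, 100))


async def get_largest_tables(run_query, limit=20, database_name=None):
    """Hypothetical tool body: list the largest user tables as plain text."""
    try:
        limit = clamp_limit(limit)
        sql = (
            "SELECT relname, pg_total_relation_size(relid) AS total_bytes "
            "FROM pg_stat_user_tables ORDER BY total_bytes DESC "
            f"LIMIT {limit}"
        )
        rows = await run_query(sql, database=database_name)
        lines = [f"{row['relname']}: {row['total_bytes']} bytes" for row in rows]
        return "\n".join(lines) or "No tables found"
    except Exception as exc:
        # Log details for debugging, return a clean message to the user.
        logger.error("get_largest_tables failed: %s", exc)
        return f"Error retrieving table sizes: {exc}"


async def fake_run_query(sql, database=None):
    """Stand-in query helper so the sketch runs without a database."""
    return [{"relname": "orders", "total_bytes": 8192}]


print(asyncio.run(get_largest_tables(fake_run_query, limit=500)))
```

Passing the query helper in as an argument is only a convenience for this example; it makes the error path easy to exercise with a helper that raises.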

Advanced Patterns

For version-aware queries or extension-dependent features, see existing tools like get_pg_stat_statements_top_queries for reference patterns.

That's it! Your custom tool is ready to use with natural language queries through any MCP client.

License

Freely use, modify, and distribute under the MIT License.

⭐ Other Projects

Other MCP servers by the same author: