---

title: "Postgres MCP Server Review - DBHub Design Explained"

description: "A maintainer's deep dive into DBHub, a zero-dependency, token-efficient MCP server for PostgreSQL, MySQL, SQL Server, MariaDB, and SQLite with consistent guardrails."

---

_Last updated: Dec 28, 2025_

This is the third in a series reviewing Postgres MCP servers. Here we, the DBHub maintainers, explain the design behind [DBHub](https://github.com/bytebase/dbhub), a zero-dependency, token-efficient MCP server that connects AI assistants to PostgreSQL, MySQL, MariaDB, SQL Server, and SQLite. We cover our design decisions, the trade-offs behind them, and where DBHub falls short compared to alternatives.

- **GitHub Stars:** [star history](https://www.star-history.com/#bytebase/dbhub&type=date&legend=top-left)

- **License:** MIT

- **Language:** TypeScript

## Design Objectives

The first question we ask ourselves is: what is the primary use case for a database MCP server today? Our answer is local development, for three reasons:

1. Local development is where most developers spend their time coding and testing against databases, increasingly alongside AI coding agents.

1. Local development runs in a trusted environment, so the [lethal trifecta attack](https://simonwillison.net/2025/Jun/16/the-lethal-trifecta/) is not a concern.

1. Local development is forgiving. If a coding agent makes a mistake and nukes the database, it's not the end of the world.

With local development as the primary use case, our design objectives are:

1. **Minimal setup**: Developers want to get started quickly without installing complex software stacks or dependencies.

1. **Token efficiency**: Minimize the token overhead of MCP tools to maximize the context window for actual coding and queries.

1. **Auth is not required**: Since local development is a trusted environment, we can skip complex authentication mechanisms.

## Installation

<Note>Tested on a Mac (Apple Silicon), the same setup as the other reviews in this series.</Note>

```bash
npx @bytebase/dbhub@latest --dsn "postgres://user:password@localhost:5432/dbname"
```

One command, no configuration file required. Unlike MCP Toolbox, which requires a YAML config file upfront, DBHub works directly with a DSN.

Configuration files are optional; use them when you need advanced settings.
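Because a DSN is a URL, credentials containing reserved characters such as `@` or `#` must be percent-encoded. The TypeScript sketch below builds and re-parses a Postgres DSN with the standard WHATWG `URL` class. `buildPostgresDsn` and `parseDsn` are hypothetical helpers, not part of DBHub, and the sketch assumes DBHub decodes percent-encoded credentials the way standard URL parsers do.

```typescript
// Hypothetical helpers (not part of DBHub) for assembling and inspecting
// a postgres:// DSN. Credentials are percent-encoded so "@" or "#" survive.
interface PgConnection {
  user: string;
  password: string;
  host: string;
  port: number;
  database: string;
}

function buildPostgresDsn(c: PgConnection): string {
  const user = encodeURIComponent(c.user);
  const password = encodeURIComponent(c.password);
  const database = encodeURIComponent(c.database);
  return `postgres://${user}:${password}@${c.host}:${c.port}/${database}`;
}

// Parse a DSN back into its parts with the WHATWG URL parser,
// decoding the percent-encoded pieces.
function parseDsn(dsn: string): PgConnection {
  const url = new URL(dsn);
  return {
    user: decodeURIComponent(url.username),
    password: decodeURIComponent(url.password),
    host: url.hostname,
    port: Number(url.port),
    database: decodeURIComponent(url.pathname.slice(1)),
  };
}

const dsn = buildPostgresDsn({
  user: "user",
  password: "p@ss#1",
  host: "localhost",
  port: 5432,
  database: "dbname",
});
console.log(dsn); // postgres://user:p%40ss%231@localhost:5432/dbname
```

The encoded string is what you would pass to `--dsn`; without encoding, the `@` in the password would be read as the end of the credentials.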

For those who want to try DBHub without setting up a database first:

```bash
npx @bytebase/dbhub@latest --demo
```

This starts DBHub with a bundled SQLite employee database—useful for exploring the MCP integration before connecting to your own databases.

## Tools

DBHub provides two built-in tools:

| Tool | Purpose |
|------|---------|
| `execute_sql` | Execute SQL queries with transaction support, read-only mode, and row limiting |
| `search_objects` | Search and explore schemas, tables, columns, procedures, and indexes |

Beyond the built-in tools, you can define [custom tools](/tools/custom-tools): reusable, parameterized SQL operations with type validation. They are useful for giving AI models well-defined operations instead of open-ended SQL access.

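```toml
[[tools]]
name = "get_active_users"
description = "Get active users by department"
# Must match the id of a [[sources]] entry
source = "prod"
# $1 is a PostgreSQL positional placeholder, bound to the first parameter below
statement = "SELECT * FROM users WHERE department = $1 AND active = true"

[[tools.parameters]]
name = "department"
type = "string"
description = "Department name"
# Only these values are accepted for this parameter
allowed_values = ["engineering", "sales", "support"]
```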
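Conceptually, a custom tool validates the model's arguments before binding them to positional placeholders. The sketch below mirrors the `[[tools.parameters]]` fields shown in the config (`name`, `type`, `allowed_values`). The `ParameterSpec` type and `bindParameters` function are hypothetical illustrations, not DBHub's internals, and the `"number"` type is an assumption (the example only uses `"string"`).

```typescript
// Hypothetical mirror of a [[tools.parameters]] entry.
// Only "string" appears in the TOML example; "number" is an assumed extra type.
interface ParameterSpec {
  name: string;
  type: "string" | "number";
  allowed_values?: readonly (string | number)[];
}

// Validate named arguments against the specs and return them in declaration
// order, ready to bind to positional placeholders ($1, $2, ...).
function bindParameters(
  specs: readonly ParameterSpec[],
  args: { [name: string]: unknown },
): (string | number)[] {
  return specs.map((spec) => {
    const value = args[spec.name];
    // Type check: reject missing or wrongly typed arguments.
    if (typeof value !== spec.type) {
      throw new Error(`${spec.name}: expected ${spec.type}, got ${typeof value}`);
    }
    const v = value as string | number;
    // Enum check: reject values outside allowed_values, if it is set.
    if (spec.allowed_values && spec.allowed_values.indexOf(v) === -1) {
      throw new Error(
        `${spec.name}: ${String(v)} is not one of ${spec.allowed_values.join(", ")}`,
      );
    }
    return v;
  });
}

const specs: ParameterSpec[] = [
  { name: "department", type: "string", allowed_values: ["engineering", "sales", "support"] },
];

const bound = bindParameters(specs, { department: "sales" }); // ["sales"]
```

Rejecting bad input before any SQL runs is what makes a custom tool a narrower surface than open-ended `execute_sql`.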

## Token Efficiency

Token efficiency is DBHub's key design objective. This matters for two reasons:

1. **Longer sessions without compaction**: Every token spent on tool definitions is a token unavailable for your actual work. Fewer tool tokens means more room for code, queries, and conversation history before hitting context limits.

1. **Lower cost**: Most AI providers charge per token. Tool definitions are a tax on every session—they're sent with each request regardless of whether you use them.

### Minimized Tool Load

By default, DBHub loads only its 2 built-in tools, which together take 1.4k tokens:

```text
> /context
⎿
Context Usage
⛁ ⛀ ⛁ ⛁ ⛁ ⛁ ⛁ ⛁ ⛁ ⛁ claude-opus-4-5-20251101 · 70k/200k tokens (35%)
⛁ ⛀ ⛁ ⛀ ⛶ ⛶ ⛶ ⛶ ⛶ ⛶
⛶ ⛶ ⛶ ⛶ ⛶ ⛶ ⛶ ⛶ ⛶ ⛶ ⛁ System prompt: 3.1k tokens (1.6%)
⛶ ⛶ ⛶ ⛶ ⛶ ⛶ ⛶ ⛶ ⛶ ⛶ ⛁ System tools: 16.6k tokens (8.3%)
⛶ ⛶ ⛶ ⛶ ⛶ ⛶ ⛶ ⛶ ⛶ ⛶ ⛁ MCP tools: 1.4k tokens (0.7%)
⛶ ⛶ ⛶ ⛶ ⛶ ⛶ ⛶ ⛶ ⛶ ⛶ ⛁ Custom agents: 15 tokens (0.0%)
⛶ ⛶ ⛶ ⛶ ⛶ ⛶ ⛶ ⛶ ⛶ ⛶ ⛁ Memory files: 2.5k tokens (1.2%)
⛶ ⛶ ⛶ ⛶ ⛶ ⛶ ⛶ ⛝ ⛝ ⛝ ⛁ Messages: 1.1k tokens (0.5%)
⛝ ⛝ ⛝ ⛝ ⛝ ⛝ ⛝ ⛝ ⛝ ⛝ ⛶ Free space: 130k (65.1%)
⛝ ⛝ ⛝ ⛝ ⛝ ⛝ ⛝ ⛝ ⛝ ⛝ ⛝ Autocompact buffer: 45.0k tokens (22.5%)

MCP tools · /mcp
└ mcp__dbhub__execute_sql: 607 tokens
└ mcp__dbhub__search_objects: 817 tokens
```

You can further reduce this by exposing only specific tools. For example, to expose only `execute_sql`:

```toml
[[sources]]
id = "prod"
dsn = "postgres://..."

[[tools]]
name = "execute_sql"
source = "prod"
```

**Token Comparison**

| MCP Server | Default Config (tokens) | Minimal Config (tokens) |
|------------|-------------------------|-------------------------|
| DBHub | **1.4k** (2 tools) | **607** (1 tool) |
| MCP Toolbox | 19.0k (28 tools) | 579 (1 tool) |
| Supabase MCP | 19.3k (all features) | 3.1k (5 tools) |

<Note>Minimal Config refers to exposing only the `execute_sql` tool for fair comparison. Supabase MCP requires loading the entire `database` feature group.</Note>
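The roughly 13-14x gap between the default configurations follows directly from these numbers. A quick TypeScript check, using the per-tool counts from `/context` and the table's default-config totals (which are already rounded to 0.1k, so the ratios are approximate). The `extraTokens` helper is a hypothetical illustration of how the gap grows with request count, ignoring any provider-side prompt caching.

```typescript
// Per-tool token counts reported by /context in the session above.
const executeSqlTokens = 607;
const searchObjectsTokens = 817;
const dbhubDefault = executeSqlTokens + searchObjectsTokens; // 1424, shown as "1.4k"

// Default-config totals from the comparison table (rounded to 0.1k).
const alternatives: { server: string; tokens: number }[] = [
  { server: "MCP Toolbox", tokens: 19_000 },
  { server: "Supabase MCP", tokens: 19_300 },
];

// How many times larger an alternative's default tool load is than DBHub's.
function ratioToDbhub(tokens: number): string {
  return (tokens / dbhubDefault).toFixed(1);
}

// Tool definitions ride along with each request, so the difference
// scales linearly with the number of requests in a session.
function extraTokens(tokens: number, requests: number): number {
  return (tokens - dbhubDefault) * requests;
}

for (const { server, tokens } of alternatives) {
  console.log(`${server}: ${ratioToDbhub(tokens)}x DBHub's default`);
}
// MCP Toolbox: 13.3x DBHub's default
// Supabase MCP: 13.6x DBHub's default
```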

### Progressive Disclosure

The built-in `search_objects` tool supports [progressive disclosure](https://www.anthropic.com/engineering/code-execution-with-mcp#progressive-disclosure) through `detail_level`:

- `names`: Minimal output (just names and schemas)

- `summary`: Adds metadata (row counts, column counts)

- `full`: Complete structure with all columns and indexes
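To make the three levels concrete, here is a TypeScript sketch of how a single search hit might be trimmed per `detail_level`. The `TableInfo` shape and `shapeResult` function are hypothetical, and DBHub's actual output fields may differ; the point is only that each level adds fields on top of the previous one, so a broad search stays cheap.

```typescript
type DetailLevel = "names" | "summary" | "full";

// Hypothetical catalog entry for one table.
interface TableInfo {
  schema: string;
  name: string;
  rowCount: number;
  columns: { name: string; type: string }[];
  indexes: string[];
}

// Return only as much of the entry as the requested level needs.
function shapeResult(t: TableInfo, level: DetailLevel): { [key: string]: unknown } {
  const base = { schema: t.schema, name: t.name };
  if (level === "names") return base;
  const summary = { ...base, rowCount: t.rowCount, columnCount: t.columns.length };
  if (level === "summary") return summary;
  return { ...summary, columns: t.columns, indexes: t.indexes };
}

const users: TableInfo = {
  schema: "public",
  name: "users",
  rowCount: 1200,
  columns: [
    { name: "id", type: "integer" },
    { name: "email", type: "text" },
  ],
  indexes: ["users_pkey"],
};

console.log(JSON.stringify(shapeResult(users, "names"))); // {"schema":"public","name":"users"}
```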

| Scenario | Traditional Approach | DBHub Approach |
|----------|---------------------|----------------|
| Find "users" table in 500 tables | List all tables with full schema | `search_objects(pattern="users", detail_level="names")` |
| Explore table structure | Separate tool calls for columns, indexes | `search_objects(pattern="users", detail_level="full")` |
| Find ID columns across database | Load all schemas first | `search_objects(object_type="column", pattern="%_id")` |
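One subtlety in the last row: if `pattern` follows SQL `LIKE` semantics (an assumption suggested by the `%` wildcard), then `_` is itself a single-character wildcard, so `%_id` also matches names such as `paid` or `valid`. The hypothetical `likeToRegExp` helper below emulates `LIKE` matching (case-sensitive, with backslash as the escape character, as in PostgreSQL's default) to show the difference an escaped `\_` makes.

```typescript
// Escape characters that have special meaning in a JavaScript RegExp.
function escapeRegExp(s: string): string {
  return s.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

// Hypothetical helper: translate a SQL LIKE pattern into an anchored RegExp.
// "%" matches any run of characters, "_" matches exactly one, and a
// backslash makes the next character literal. Matching is case-sensitive.
function likeToRegExp(pattern: string): RegExp {
  let source = "";
  for (let i = 0; i < pattern.length; i++) {
    const ch = pattern.charAt(i);
    if (ch === "\\" && i + 1 < pattern.length) {
      i++;
      source += escapeRegExp(pattern.charAt(i));
    } else if (ch === "%") {
      source += "[\\s\\S]*";
    } else if (ch === "_") {
      source += "[\\s\\S]";
    } else {
      source += escapeRegExp(ch);
    }
  }
  return new RegExp("^" + source + "$");
}

const columnNames = ["user_id", "order_id", "paid", "valid", "id"];
const unescaped = columnNames.filter((c) => likeToRegExp("%_id").test(c)); // ["user_id", "order_id", "paid", "valid"]
const escaped = columnNames.filter((c) => likeToRegExp("%\\_id").test(c)); // ["user_id", "order_id"]
```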

## Guardrails & Security

DBHub supports advanced settings via TOML configuration:

- **Read-only mode**: Keyword filtering restricts which SQL statements can run. This is weaker than a database-level guarantee: Supabase MCP enforces read-only access through a dedicated `supabase_read_only_user`, whereas with DBHub you must connect as a read-only database user yourself to get a true guarantee.

- **Row limiting**: Prevent accidental large data retrieval with `max_rows`. If your query already has a `LIMIT` clause, DBHub uses the smaller value.

- **Connection and query timeouts**: Prevent runaway queries and connection hangs with `connection_timeout` and `query_timeout` settings.

- **SSH tunneling**: Connect through bastion hosts for databases not exposed to the public internet. Supports ProxyJump for multi-hop connections and automatic `~/.ssh/config` parsing.
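To illustrate why keyword filtering is weaker than a read-only database user, here is a deliberately naive TypeScript sketch of a first-keyword read-only check, plus the "keep the smaller value" rule for `max_rows`. Both `isReadOnlyQuery` and `effectiveRowLimit` are hypothetical and are not DBHub's implementation; they only show the class of technique and its blind spots.

```typescript
// Statement types a naive keyword filter might treat as read-only.
const READ_ONLY_KEYWORDS = ["select", "with", "explain", "show", "describe"];

// Naive check: strip leading whitespace and comments, then inspect
// only the first keyword of the statement.
function isReadOnlyQuery(sql: string): boolean {
  const stripped = sql
    .replace(/^(\s|--[^\n]*\n|\/\*[\s\S]*?\*\/)+/, "")
    .toLowerCase();
  const match = /^[a-z]+/.exec(stripped);
  return match !== null && READ_ONLY_KEYWORDS.indexOf(match[0]) !== -1;
}

// max_rows rule: if the query has its own LIMIT, use the smaller value.
function effectiveRowLimit(maxRows: number, queryLimit?: number): number {
  return queryLimit === undefined ? maxRows : Math.min(maxRows, queryLimit);
}

isReadOnlyQuery("SELECT * FROM users"); // true
isReadOnlyQuery("DELETE FROM users"); // false
// Blind spot: a PostgreSQL data-modifying CTE still starts with WITH,
// so this naive filter lets a DELETE through.
isReadOnlyQuery("WITH gone AS (DELETE FROM users RETURNING *) SELECT * FROM gone"); // true

effectiveRowLimit(1000, 50); // 50
effectiveRowLimit(1000); // 1000
```

A filter can be made smarter, but only a database role without write privileges rules out every such case, which is why a read-only database user remains the real guarantee.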

**What's missing**: Authentication. Unlike MCP Toolbox, which provides Google Auth, or Supabase MCP, which integrates OAuth with its hosted service, DBHub doesn't provide any auth yet. We're planning to support vendor-neutral authentication such as Keycloak.

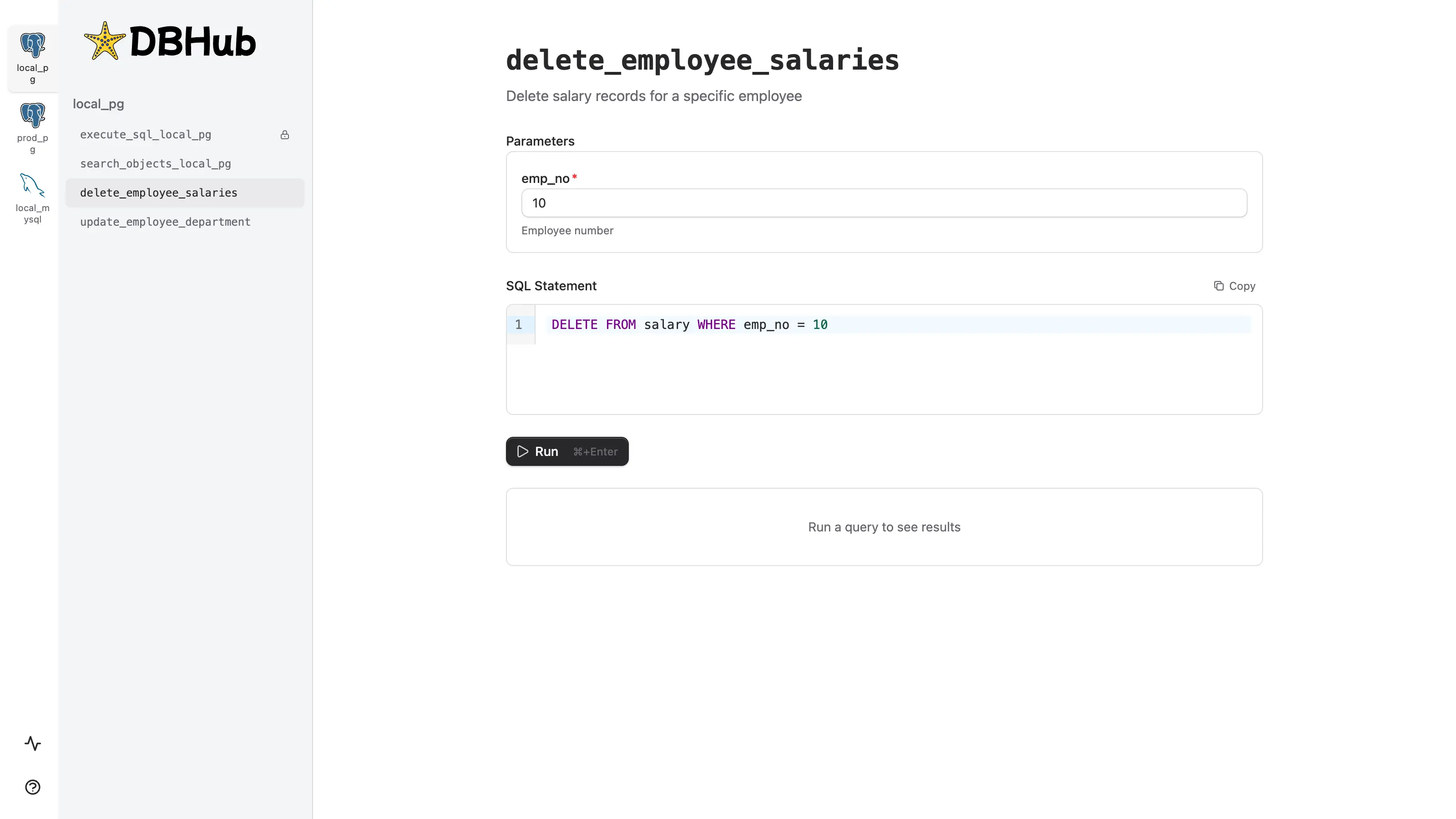

## Workbench

DBHub includes a [built-in web interface](/workbench/overview) with two main features:

1. **Tool Execution**: Run database tools directly from your browser—useful for testing and debugging without an MCP client. Custom tools render as constrained forms with validated inputs.

1. **Request Traces**: Inspect MCP requests to understand how AI agents interact with DBHub.

## Summary

**DBHub** is a zero-dependency, token-efficient MCP server for relational databases with guardrails.

### The Good

- **Minimal design**: Zero dependencies and just 2 general-purpose tools (`execute_sql`, `search_objects`) totaling 1.4k tokens, roughly 13-14x fewer than the default configurations of MCP Toolbox (19.0k) and Supabase MCP (19.3k). Tools can also be customized and cherry-picked via configuration.

- **Vendor neutral**: Unlike Supabase MCP (Supabase-only) or MCP Toolbox (tilted toward Google Cloud), DBHub works with any deployment—cloud, on-premise, or local.

- **Multi-database with consistent guardrails**: DBHub isn't limited to a single Postgres database, but it doesn't expand beyond databases either. This focus lets us provide the same guardrails across all supported databases: read-only mode, row limits, and connection/query timeouts.

### The Bad

- **No platform integration**: MCP Toolbox integrates with Gemini and Google Cloud services. Supabase MCP connects to its hosted platform with OAuth. DBHub is standalone.

- **No built-in authentication**: Unlike MCP Toolbox's Google auth support or Supabase's OAuth, DBHub has no auth layer. You'll need a reverse proxy or network security for multi-tenant scenarios.

### Should You Use It?

```mermaid
quadrantChart
    title Postgres MCP Server Comparison
    x-axis Vendor-Locked --> Vendor-Neutral
    y-axis Postgres-Specific --> Data Source Agnostic
    Supabase MCP Server: [0.1, 0.1]
    MCP Toolbox: [0.3, 0.9]
    DBHub: [0.85, 0.6]
```

**If you're an AI-assisted developer working with local databases**, DBHub is built for you. It's optimized for the local development workflow: zero setup friction, minimal token overhead, and no authentication complexity. It works with PostgreSQL, MySQL, SQL Server, MariaDB, and SQLite out of the box.

**If you need a vendor-neutral solution**, DBHub is the only option in this series that isn't tied to a specific cloud platform. Unlike Supabase MCP (Supabase-only) or MCP Toolbox (tilted toward Google Cloud), DBHub works anywhere your database runs.

**If you need an integrated platform experience, such as built-in authentication**, look at Supabase MCP (for Supabase projects with OAuth) or MCP Toolbox (for Gemini and Google Cloud services).

---

**Postgres MCP Server Series:**

1. [MCP Toolbox for Databases](/blog/postgres-mcp-server-review-mcp-toolbox) - Google's multi-database MCP server with 40+ data source support

2. [Supabase MCP Server](/blog/postgres-mcp-server-review-supabase-mcp) - Hosted MCP server for Supabase projects

3. **DBHub** (this article) - Zero-dependency, token efficient MCP server for PostgreSQL, MySQL, SQL Server, MariaDB, and SQLite

4. [The State of Postgres MCP Servers](/blog/state-of-postgres-mcp-servers-2025) - Landscape overview and future outlook