The ScrapeGraph MCP Server enables AI-powered web scraping and data extraction using the ScrapeGraph AI API.

Convert webpages to markdown: Transform any webpage into clean, structured markdown format.

Extract structured data: Use AI to scrape specific data from webpages based on user prompts.

Perform AI-powered web searches: Execute searches with structured, actionable results.

Click on "Install Server".

Wait a few minutes for the server to deploy. Once ready, it will show a "Started" state.

In the chat, type @ followed by the MCP server name and your instructions, e.g., "@ScrapeGraph MCP Server extract all product prices and descriptions from this e-commerce page"

That's it! The server will respond to your query, and you can continue using it as needed.


ScrapeGraph MCP Server

A production-ready Model Context Protocol (MCP) server that provides seamless integration with the ScrapeGraph AI API. This server enables language models to leverage advanced AI-powered web scraping capabilities with enterprise-grade reliability.


Key Features

8 Powerful Tools: From simple markdown conversion to complex multi-page crawling and agentic workflows

AI-Powered Extraction: Intelligently extract structured data using natural language prompts

Multi-Page Crawling: SmartCrawler supports asynchronous crawling with configurable depth and page limits

Infinite Scroll Support: Handle dynamic content loading with configurable scroll counts

JavaScript Rendering: Full support for JavaScript-heavy websites

Flexible Output Formats: Get results as markdown, structured JSON, or custom schemas

Easy Integration: Works seamlessly with Claude Desktop, Cursor, and any MCP-compatible client

Enterprise-Ready: Robust error handling, timeout management, and production-tested reliability

Simple Deployment: One-command installation via Smithery or manual setup

Comprehensive Documentation: Detailed developer docs in the .agent/ folder

Quick Start

1. Get Your API Key

Sign up and get your API key from the ScrapeGraph Dashboard

2. Install with Smithery (Recommended)

3. Start Using

Ask Claude or Cursor:

"Convert https://scrapegraphai.com to markdown"

"Extract all product prices from this e-commerce page"

"Research the latest AI developments and summarize findings"

That's it! The server is now available to your AI assistant.

Available Tools

The server provides 8 enterprise-ready tools for AI-powered web scraping:

Core Scraping Tools

1. markdownify

Transform any webpage into clean, structured markdown format.

Credits: 2 per request

Use case: Quick webpage content extraction in markdown

2. smartscraper

Leverage AI to extract structured data from any webpage with support for infinite scrolling.

Credits: 10 base, plus a variable amount based on scrolling

Use case: AI-powered data extraction with custom prompts
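To make the inputs concrete, here is a minimal sketch of the data a smartscraper call carries. The field names website_url, user_prompt and number_of_scrolls are assumptions modeled on the description above, not a confirmed API schema, and the helper itself is hypothetical.

```python
from typing import Any, Optional


def build_smartscraper_payload(
    website_url: str,
    user_prompt: str,
    number_of_scrolls: Optional[int] = None,
) -> dict[str, Any]:
    """Assemble a hypothetical smartscraper request body (field names are assumptions)."""
    if not website_url.startswith(("http://", "https://")):
        raise ValueError(f"expected an absolute http(s) URL, got {website_url!r}")
    if not user_prompt.strip():
        raise ValueError("user_prompt must not be empty")

    payload: dict[str, Any] = {"website_url": website_url, "user_prompt": user_prompt}
    # Only include a scroll count when infinite-scroll handling is wanted.
    if number_of_scrolls is not None:
        if number_of_scrolls < 0:
            raise ValueError("number_of_scrolls must be non-negative")
        payload["number_of_scrolls"] = number_of_scrolls
    return payload


print(build_smartscraper_payload(
    "https://example.com/products",
    "Extract all product names, prices, and ratings",
    number_of_scrolls=5,
))
```

Leaving number_of_scrolls out entirely, rather than sending 0, lets the service fall back to its own default for pages without infinite scroll.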

3. searchscraper

Execute AI-powered web searches with structured, actionable results.

Credits: Variable (3-20 websites × 10 credits each, i.e. 30-200 credits per search)

Use case: Multi-source research and data aggregation

Time filtering: Use time_range to filter results by recency (e.g., "past_week" for recent results)
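The searchscraper cost is simple multiplication, so it is easy to bound before running a search. The sketch below encodes the 3-20 websites × 10 credits rate quoted above and shows where a time_range value would sit in a request. The payload keys (user_prompt, num_results, time_range) are illustrative assumptions, and "past_week" is the only time_range value this README documents.

```python
from typing import Any, Optional

CREDITS_PER_WEBSITE = 10  # rate quoted in this README
MIN_WEBSITES, MAX_WEBSITES = 3, 20  # websites a single search may consult


def estimate_searchscraper_credits(num_results: int) -> int:
    """Credits for one search that consults num_results websites."""
    if not MIN_WEBSITES <= num_results <= MAX_WEBSITES:
        raise ValueError(f"num_results must be in [{MIN_WEBSITES}, {MAX_WEBSITES}]")
    return num_results * CREDITS_PER_WEBSITE


def build_searchscraper_payload(
    user_prompt: str,
    num_results: Optional[int] = None,
    time_range: Optional[str] = None,
) -> dict[str, Any]:
    """Hypothetical request body; the key names are illustrative assumptions."""
    payload: dict[str, Any] = {"user_prompt": user_prompt}
    if num_results is not None:
        estimate_searchscraper_credits(num_results)  # reuse the range check
        payload["num_results"] = num_results
    if time_range is not None:
        payload["time_range"] = time_range  # e.g. "past_week"
    return payload


# Cheapest and most expensive single search under the quoted rate.
print(estimate_searchscraper_credits(MIN_WEBSITES), estimate_searchscraper_credits(MAX_WEBSITES))  # 30 200
print(build_searchscraper_payload("AI news", num_results=5, time_range="past_week"))
```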

Advanced Scraping Tools

4. scrape

Basic scraping endpoint to fetch page content with optional heavy JavaScript rendering.

Use case: Simple page content fetching with JS rendering support

5. sitemap

Extract sitemap URLs and structure for any website.

Use case: Website structure analysis and URL discovery

Multi-Page Crawling

6. smartcrawler_initiate

Initiate intelligent multi-page web crawling (asynchronous operation).

AI Extraction Mode: 10 credits per page - extracts structured data

Markdown Mode: 2 credits per page - converts to markdown

Returns: request_id for polling

Use case: Large-scale website crawling and data extraction
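Because crawls are billed per page, the page limit sets a hard ceiling on cost. This sketch assembles crawl options and computes that ceiling from the per-page rates above (10 credits in AI mode, 2 in markdown mode). The argument names (extraction_mode, prompt, depth, max_pages, same_domain_only) are assumptions that mirror the options mentioned in this README, not a confirmed signature, and requiring a prompt in AI mode is a choice made by this sketch.

```python
from typing import Any, Literal, Optional

# Per-page credit rates quoted in this README for each extraction mode.
CREDITS_PER_PAGE = {"ai": 10, "markdown": 2}


def build_crawl_request(
    url: str,
    extraction_mode: Literal["ai", "markdown"] = "ai",
    prompt: Optional[str] = None,
    depth: Optional[int] = None,
    max_pages: Optional[int] = None,
    same_domain_only: Optional[bool] = None,
) -> dict[str, Any]:
    """Assemble hypothetical smartcrawler_initiate arguments (names are illustrative)."""
    if extraction_mode not in CREDITS_PER_PAGE:
        raise ValueError(f"unknown extraction_mode {extraction_mode!r}")
    # This sketch insists on a prompt in AI mode, since there is nothing to extract without one.
    if extraction_mode == "ai" and not prompt:
        raise ValueError("AI extraction mode needs a prompt")
    options = {
        "prompt": prompt,
        "depth": depth,
        "max_pages": max_pages,
        "same_domain_only": same_domain_only,
    }
    # Leave unset options out so the service's own defaults apply.
    request: dict[str, Any] = {"url": url, "extraction_mode": extraction_mode}
    request.update({key: value for key, value in options.items() if value is not None})
    return request


def max_crawl_credits(extraction_mode: str, max_pages: int) -> int:
    """Upper bound on credits if the crawl visits every page it is allowed to."""
    return CREDITS_PER_PAGE[extraction_mode] * max_pages


request = build_crawl_request(
    "https://example.com",
    "ai",
    prompt="Extract all product information",
    max_pages=100,
    same_domain_only=True,
)
print(max_crawl_credits(request["extraction_mode"], request["max_pages"]))  # 1000
```

The same 100-page crawl in markdown mode would be capped at 200 credits, which is why markdown mode is the cheaper choice when you only need page text.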

7. smartcrawler_fetch_results

Retrieve results from asynchronous crawling operations.

Returns: Status and results when crawling is complete

Use case: Poll for crawl completion and retrieve results
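Since smartcrawler_initiate only returns a request_id, a client has to poll until the crawl finishes. The loop below is a minimal sketch of that pattern with a deadline. fetch_results is a stand-in for a smartcrawler_fetch_results call; the "status" key, the "completed" value (used elsewhere in this README) and the "failed" value (an assumption) describe a response shape you should check against the real tool.

```python
import time
from typing import Any, Callable


def wait_for_crawl(
    fetch_results: Callable[[str], dict[str, Any]],
    request_id: str,
    poll_interval: float = 5.0,
    timeout: float = 300.0,
) -> dict[str, Any]:
    """Poll until the crawl reports "completed" or the timeout elapses.

    fetch_results stands in for a smartcrawler_fetch_results call and is assumed
    to return a dict with a "status" key.
    """
    deadline = time.monotonic() + timeout
    while True:
        response = fetch_results(request_id)
        status = response.get("status")
        if status == "completed":
            return response
        if status == "failed":  # assumed failure status; adjust to the real response
            raise RuntimeError(f"crawl {request_id} failed: {response}")
        if time.monotonic() >= deadline:
            raise TimeoutError(f"crawl {request_id} still {status!r} after {timeout} s")
        time.sleep(poll_interval)


# Simulated fetcher that reports "processing" twice before completing.
fake_responses = iter([{"status": "processing"}, {"status": "processing"}, {"status": "completed"}])
result = wait_for_crawl(lambda _request_id: next(fake_responses), "req-123", poll_interval=0.01)
print(result["status"])  # completed
```

Using time.monotonic() for the deadline keeps the timeout correct even if the system clock changes during a long crawl.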

Intelligent Agent-Based Scraping

8. agentic_scrapper

Run advanced agentic scraping workflows with customizable steps and structured output schemas.

Use case: Complex multi-step workflows with custom schemas and persistent sessions
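An agentic workflow pairs a list of natural-language steps with an output schema. The sketch below expresses the {title, author, date, content} dataset from the example use cases as a JSON Schema and bundles it with steps. The argument names (url, steps, user_prompt, output_schema, persistent_session) and the build_agentic_request helper are illustrative assumptions, not the tool's confirmed signature.

```python
import json
from typing import Any, Optional

# JSON Schema for the {title, author, date, content} records in the example use case.
ARTICLE_SCHEMA: dict[str, Any] = {
    "type": "object",
    "properties": {
        "articles": {
            "type": "array",
            "items": {
                "type": "object",
                "properties": {
                    "title": {"type": "string"},
                    "author": {"type": "string"},
                    "date": {"type": "string"},
                    "content": {"type": "string"},
                },
                "required": ["title", "author", "date", "content"],
            },
        }
    },
}


def build_agentic_request(
    url: str,
    steps: list[str],
    user_prompt: Optional[str] = None,
    output_schema: Optional[dict[str, Any]] = None,
    persistent_session: bool = False,
) -> dict[str, Any]:
    """Hypothetical agentic_scrapper arguments; field names are assumptions."""
    if not steps:
        raise ValueError("an agentic workflow needs at least one step")
    request: dict[str, Any] = {"url": url, "steps": steps, "persistent_session": persistent_session}
    if user_prompt:
        request["user_prompt"] = user_prompt
    if output_schema is not None:
        request["output_schema"] = output_schema
    return request


request = build_agentic_request(
    "https://example.com/blog",
    steps=["Open the first page of the archive", "Follow each 'Next' pagination link"],
    user_prompt="Compile every article on the visited pages",
    output_schema=ARTICLE_SCHEMA,
)
print(json.dumps(request, indent=2))
```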

Setup Instructions

To utilize this server, you'll need a ScrapeGraph API key. Follow these steps to obtain one:

Navigate to the ScrapeGraph Dashboard

Create an account and generate your API key

Automated Installation via Smithery

For automated installation of the ScrapeGraph API Integration Server using Smithery:

Claude Desktop Configuration

Update your Claude Desktop configuration file with the following settings (remember to add your API key inside the config):

The configuration file is located at:

Windows: %APPDATA%/Claude/claude_desktop_config.json

macOS: ~/Library/Application Support/Claude/claude_desktop_config.json

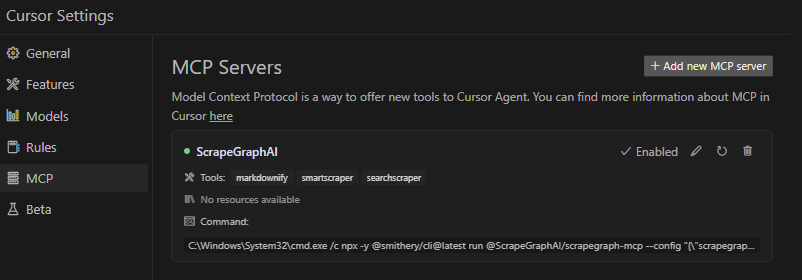

Cursor Integration

Add the ScrapeGraphAI MCP server in Cursor's settings:

Remote Server Usage

Connect to our hosted MCP server - no local installation required!

Claude Desktop Configuration (Remote)

Add this to your Claude Desktop config (~/Library/Application Support/Claude/claude_desktop_config.json on macOS):

Cursor Configuration (Remote)

Cursor supports native HTTP MCP connections. Add to your Cursor MCP settings (~/.cursor/mcp.json):

Benefits of Remote Server

No local setup - Just configure and start using

Always up-to-date - Automatically receives latest updates

Cross-platform - Works on any OS with Node.js

Local Usage

To run the MCP server locally for development or testing, follow these steps:

Prerequisites

Python 3.13 or higher

pip or uv package manager

ScrapeGraph API key

Installation

Clone the repository (if you haven't already):

Install the package:

Set your API key:

Running the Server Locally

You can run the server directly:

The server will start and communicate via stdio (standard input/output), which is the standard MCP transport method.

Testing with MCP Inspector

Test your local server using the MCP Inspector tool:

This provides a web interface to test all available tools interactively.

Configuring Claude Desktop for Local Server

To use your locally running server with Claude Desktop, update your configuration file:

macOS (~/Library/Application Support/Claude/claude_desktop_config.json):

Windows (%APPDATA%\Claude\claude_desktop_config.json):

Note: Make sure Python is in your PATH. You can verify by running python --version in your terminal.
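If you prefer not to edit the JSON by hand, a small script can merge the local server entry into the existing file. This is a sketch: the command, args and SGAI_API_KEY values and the two config paths come from this README, while the "mcpServers" top-level key follows Claude Desktop's usual config layout and the server name "scrapegraph-mcp" is an arbitrary, hypothetical label. The preview at the end prints JSON without touching the real file.

```python
import json
import os
import platform
from pathlib import Path
from typing import Any


def claude_config_path() -> Path:
    """Return the Claude Desktop config path listed in this README."""
    if platform.system() == "Windows":
        return Path(os.environ["APPDATA"]) / "Claude" / "claude_desktop_config.json"
    # macOS location; other platforms are not covered by this sketch.
    support_dir = Path.home() / "Library" / "Application Support"
    return support_dir / "Claude" / "claude_desktop_config.json"


def add_local_server(
    config: dict[str, Any], api_key: str, name: str = "scrapegraph-mcp"
) -> dict[str, Any]:
    """Add or replace the local ScrapeGraph entry, keeping any other configured servers."""
    servers = config.setdefault("mcpServers", {})
    servers[name] = {
        "command": "python",
        "args": ["-m", "scrapegraph_mcp.server"],
        "env": {"SGAI_API_KEY": api_key},
    }
    return config


def update_config_file(api_key: str) -> Path:
    """Read the existing config (if any), merge the entry, and write it back."""
    path = claude_config_path()
    config = json.loads(path.read_text(encoding="utf-8")) if path.exists() else {}
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(add_local_server(config, api_key), indent=2), encoding="utf-8")
    return path


# Preview the resulting JSON without touching the real file.
print(json.dumps(add_local_server({}, "your-api-key-here"), indent=2))
```

Merging with setdefault rather than overwriting the file keeps any other MCP servers you have already configured; restart Claude Desktop after calling update_config_file.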

Configuring Cursor for Local Server

In Cursor's MCP settings, add a new server with:

Command: python

Args: ["-m", "scrapegraph_mcp.server"]

Environment Variables: {"SGAI_API_KEY": "your-api-key-here"}

Troubleshooting Local Setup

Server not starting:

Verify Python is installed: python --version

Check that the package is installed: pip list | grep scrapegraph-mcp

Ensure the API key is set: echo $SGAI_API_KEY (macOS/Linux) or echo %SGAI_API_KEY% (Windows)
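The three checks above can also be run from one Python script, which is convenient on Windows where grep is not available. This is a minimal sketch: it checks the Python 3.13+ requirement, whether the scrapegraph_mcp package (the module launched by python -m scrapegraph_mcp.server) is importable, and whether SGAI_API_KEY is set.

```python
import importlib.util
import os
import sys


def diagnose() -> list[str]:
    """Return the problems that would keep the local server from starting."""
    problems: list[str] = []
    if sys.version_info < (3, 13):
        problems.append(f"Python 3.13+ required, found {sys.version.split()[0]}")
    # The server runs as `python -m scrapegraph_mcp.server`, so the package must import.
    if importlib.util.find_spec("scrapegraph_mcp") is None:
        problems.append("package 'scrapegraph_mcp' is not importable; run pip install -e .")
    if not os.environ.get("SGAI_API_KEY"):
        problems.append("SGAI_API_KEY is not set")
    return problems


issues = diagnose()
print("\n".join(issues) if issues else "All checks passed")
```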

Tools not appearing:

Check Claude Desktop logs:

macOS: ~/Library/Logs/Claude/

Windows: %APPDATA%\Claude\Logs\

Verify the server starts without errors when run directly

Check that the configuration JSON is valid
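A trailing comma or a missing brace is enough to stop Claude Desktop from loading any server. The sketch below parses a config and reports structural problems, including the line and column of JSON syntax errors. It assumes the usual "mcpServers" layout; entries with a "url" key are treated as remote and skipped, since this sketch only checks local (stdio) entries that need a command.

```python
import json
from typing import Any


def validate_mcp_config(text: str) -> list[str]:
    """List problems in a Claude Desktop-style config; an empty list means it looks usable."""
    try:
        config: Any = json.loads(text)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON at line {exc.lineno}, column {exc.colno}: {exc.msg}"]
    if not isinstance(config, dict):
        return ["top level must be a JSON object"]
    servers = config.get("mcpServers")
    if not isinstance(servers, dict) or not servers:
        return ["missing or empty 'mcpServers' object"]
    problems: list[str] = []
    for name, entry in servers.items():
        if not isinstance(entry, dict):
            problems.append(f"server {name!r} must be a JSON object")
        elif "url" in entry:
            continue  # remote (HTTP) entry; not checked by this sketch
        elif "command" not in entry:
            problems.append(f"server {name!r} has no 'command'")
        elif not isinstance(entry.get("args", []), list):
            problems.append(f"server {name!r}: 'args' must be a list")
    return problems


sample = json.dumps(
    {"mcpServers": {"scrapegraph-mcp": {"command": "python", "args": ["-m", "scrapegraph_mcp.server"]}}}
)
print(validate_mcp_config(sample))  # []
```

To check your real file, pass its contents, for example validate_mcp_config(path.read_text(encoding="utf-8")).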

Import errors:

Reinstall the package: pip install -e . --force-reinstall

Verify dependencies: pip install -r requirements.txt (if available)

Google ADK Integration

The ScrapeGraph MCP server can be integrated with Google ADK (Agent Development Kit) to create AI agents with web scraping capabilities.

Prerequisites

Python 3.13 or higher

Google ADK installed

ScrapeGraph API key

Installation

Install Google ADK (if not already installed):

Set your API key:

Basic Integration Example

Create an agent file (e.g., agent.py) with the following configuration:

Configuration Options

Timeout Settings:

Default timeout is 5 seconds, which may be too short for web scraping operations

Recommended: Set timeout=300.0 (five minutes)

Adjust based on your use case (crawling operations may need even longer timeouts)

Tool Filtering:

By default, all 8 tools are exposed to the agent

Use tool_filter to limit which tools are available: tool_filter=['markdownify', 'smartscraper', 'searchscraper']

API Key Configuration:

Set via environment variable: export SGAI_API_KEY=your-key

Or pass it directly in the env dict: 'SGAI_API_KEY': 'your-key-here'

The environment variable approach is recommended for security
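The three options above reduce to a timeout, an optional tool filter and an env dict. This stdlib-only sketch gathers them in one place and validates tool_filter against the eight tool names listed under Available Tools before the values are handed to ADK. It does not import ADK, and the ScrapeGraphMCPSettings class is hypothetical.

```python
import os
from dataclasses import dataclass, field
from typing import Optional

# The eight tool names documented under "Available Tools".
KNOWN_TOOLS = frozenset({
    "markdownify", "smartscraper", "searchscraper", "scrape", "sitemap",
    "smartcrawler_initiate", "smartcrawler_fetch_results", "agentic_scrapper",
})


@dataclass
class ScrapeGraphMCPSettings:
    """Hypothetical container for the values passed to the ADK MCP toolset."""

    timeout: float = 300.0  # seconds; the 5 s default is too short for scraping
    tool_filter: Optional[list[str]] = None  # None exposes all eight tools
    env: dict[str, str] = field(default_factory=dict)

    def __post_init__(self) -> None:
        if self.tool_filter is not None:
            unknown = set(self.tool_filter) - KNOWN_TOOLS
            if unknown:
                raise ValueError(f"unknown tools in tool_filter: {sorted(unknown)}")
        # Prefer the environment variable so the key never lands in source control.
        if "SGAI_API_KEY" not in self.env:
            key = os.environ.get("SGAI_API_KEY")
            if not key:
                raise ValueError("set SGAI_API_KEY or pass it in env")
            self.env["SGAI_API_KEY"] = key


# With SGAI_API_KEY exported:
# settings = ScrapeGraphMCPSettings(tool_filter=["markdownify", "smartscraper", "searchscraper"])
```

Catching a misspelled tool name here fails fast at startup instead of silently exposing fewer tools to the agent.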

Usage Example

Once configured, your agent can use natural language to interact with web scraping tools:

For more information about Google ADK, visit the official documentation.

Example Use Cases

The server enables sophisticated queries across various scraping scenarios:

Single Page Scraping

Markdownify: "Convert the ScrapeGraph documentation page to markdown"

SmartScraper: "Extract all product names, prices, and ratings from this e-commerce page"

SmartScraper with scrolling: "Scrape this infinite scroll page with 5 scrolls and extract all items"

Basic Scrape: "Fetch the HTML content of this JavaScript-heavy page with full rendering"

Search and Research

SearchScraper: "Research and summarize recent developments in AI-powered web scraping"

SearchScraper: "Search for the top 5 articles about machine learning frameworks and extract key insights"

SearchScraper: "Find recent news about GPT-4 and provide a structured summary"

SearchScraper with time_range: "Search for AI news from the past week only" (uses time_range="past_week")

Website Analysis

Sitemap: "Extract the complete sitemap structure from the ScrapeGraph website"

Sitemap: "Discover all URLs on this blog site"

Multi-Page Crawling

SmartCrawler (AI mode): "Crawl the entire documentation site and extract all API endpoints with descriptions"

SmartCrawler (Markdown mode): "Convert all pages in the blog to markdown up to 2 levels deep"

SmartCrawler: "Extract all product information from an e-commerce site, maximum 100 pages, same domain only"

Advanced Agentic Scraping

Agentic Scraper: "Navigate through a multi-step authentication form and extract user dashboard data"

Agentic Scraper with schema: "Follow pagination links and compile a dataset with schema: {title, author, date, content}"

Agentic Scraper: "Execute a complex workflow: login, navigate to reports, download data, and extract summary statistics"

Error Handling

The server implements robust error handling with detailed, actionable error messages for:

API authentication issues

Malformed URL structures

Network connectivity failures

Rate limiting and quota management
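Following the "return error dicts, don't raise" convention from this README's Code Style section, failures can be turned into messages the model can act on. The sketch below covers a malformed URL and the 401 and 402 cases described under Common Issues, plus HTTP 429 for rate limiting. The message wording and the {"error": ..., "status_code": ...} shape are illustrative assumptions, not the server's actual output.

```python
from typing import Any, Optional
from urllib.parse import urlparse

# Hints for the failure modes this README lists; wording and dict shape are illustrative.
_HINTS = {
    401: "Invalid API key: verify it at the ScrapeGraph Dashboard.",
    402: "Insufficient credits: add credits to your ScrapeGraph account.",
    429: "Rate limit reached: wait before retrying.",
}


def check_url(url: str) -> Optional[dict[str, Any]]:
    """Return an error dict for malformed URLs, or None if the URL looks usable."""
    parsed = urlparse(url)
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        return {"error": f"Malformed URL {url!r}: expected an absolute http(s) URL"}
    return None


def http_error(status_code: int, detail: str = "") -> dict[str, Any]:
    """Map an HTTP failure to an error dict instead of raising an exception."""
    message = _HINTS.get(status_code, f"Request failed with HTTP {status_code}.")
    if detail:
        message = f"{message} ({detail})"
    return {"error": message, "status_code": status_code}


print(check_url("example.com"))
print(http_error(402))
```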

Common Issues

Windows-Specific Connection

When running on Windows systems, you may need to use the following command to connect to the MCP server:

This ensures proper execution in the Windows environment.

Other Common Issues

"ScrapeGraph client not initialized"

Cause: Missing API key

Solution: Set the SGAI_API_KEY environment variable or provide it via --config

"Error 401: Unauthorized"

Cause: Invalid API key

Solution: Verify your API key at the ScrapeGraph Dashboard

"Error 402: Payment Required"

Cause: Insufficient credits

Solution: Add credits to your ScrapeGraph account

SmartCrawler not returning results

Cause: Still processing (asynchronous operation)

Solution: Keep polling smartcrawler_fetch_results() until the status is "completed"

Tools not appearing in Claude Desktop

Cause: Server not starting or configuration error

Solution: Check Claude logs at ~/Library/Logs/Claude/ (macOS) or %APPDATA%\Claude\Logs\ (Windows)

For detailed troubleshooting, see the .agent documentation.

Development

Prerequisites

Python 3.13 or higher

pip or uv package manager

ScrapeGraph API key

Installation from Source

Testing with MCP Inspector

Test your server locally using the MCP Inspector tool:

This provides a web interface to test all available tools.

Code Quality

Linting:

Type Checking:

Format Checking:

Project Structure

Contributing

We welcome contributions! Here's how you can help:

Adding a New Tool

Add a method to the API client class in server.py:

Add the MCP tool decorator:

Test with MCP Inspector:

Update documentation:

Add tool to this README

Update .agent documentation

Submit a pull request

Development Workflow

Fork the repository

Create a feature branch (git checkout -b feature/amazing-feature)

Make your changes

Run linting and type checking

Test with MCP Inspector and Claude Desktop

Update documentation

Commit your changes (git commit -m 'Add amazing feature')

Push to the branch (git push origin feature/amazing-feature)

Open a Pull Request

Code Style

Line length: 100 characters

Type hints: Required for all functions

Docstrings: Google-style docstrings

Error handling: Return error dicts, don't raise exceptions in tools

Python version: Target 3.13+

For detailed development guidelines, see the .agent documentation.

Documentation

For comprehensive developer documentation, see:

.agent/README.md - Complete developer documentation index

.agent/system/project_architecture.md - System architecture and design

.agent/system/mcp_protocol.md - MCP protocol integration details

Technology Stack

Core Framework

Python 3.13+ - Modern Python with type hints

FastMCP - Lightweight MCP server framework

httpx 0.24.0+ - Modern async HTTP client

Development Tools

Ruff - Fast Python linter and formatter

mypy - Static type checker

Hatchling - Modern build backend

Deployment

Smithery - Automated MCP server deployment

Docker - Container support with Alpine Linux

stdio transport - Standard MCP communication

API Integration

ScrapeGraph AI API - Enterprise web scraping service

Base URL: https://api.scrapegraphai.com/v1

Authentication: API key-based
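For a sense of what API key-based authentication against this base URL looks like at the HTTP level, the sketch below builds, but does not send, a POST request using only the standard library. The header name SGAI-APIKEY, the /markdownify path and the website_url field are assumptions for illustration; check the ScrapeGraph API reference before relying on them.

```python
import json
import urllib.request
from typing import Any

BASE_URL = "https://api.scrapegraphai.com/v1"  # base URL listed in this README


def build_request(endpoint: str, payload: dict[str, Any], api_key: str) -> urllib.request.Request:
    """Build (but do not send) an authenticated JSON POST to the ScrapeGraph API.

    The "SGAI-APIKEY" header name and endpoint paths are assumptions.
    """
    return urllib.request.Request(
        url=f"{BASE_URL}/{endpoint.lstrip('/')}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"SGAI-APIKEY": api_key, "Content-Type": "application/json"},
        method="POST",
    )


req = build_request("markdownify", {"website_url": "https://scrapegraphai.com"}, "your-api-key-here")
print(req.method, req.full_url)  # POST https://api.scrapegraphai.com/v1/markdownify
# Sending it would be: urllib.request.urlopen(req, timeout=60)
```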

License

This project is distributed under the MIT License. For detailed terms and conditions, please refer to the LICENSE file.

Acknowledgments

Special thanks to tomekkorbak for his implementation of oura-mcp-server, which served as the starting point for this repo.

Resources

Official Links

ScrapeGraph Dashboard - Get your API key

MCP Resources

Model Context Protocol - Official MCP specification

FastMCP Framework - Framework used by this server

MCP Inspector - Testing tool

Smithery - MCP server distribution

mcp-name: io.github.ScrapeGraphAI/scrapegraph-mcp

AI Assistant Integration

Claude Desktop - Desktop app with MCP support

Cursor - AI-powered code editor

Support

GitHub Issues - Report bugs or request features

Developer Documentation - Comprehensive dev docs

Made with ❤️ by ScrapeGraphAI Team