This server provides a domain-specific API for querying the RAGmonsters monster dataset through natural language interactions, eliminating the need for raw SQL queries.

Core Tools:

Query monsters with filtering and sorting - Use

getMonstersto search by rarity, habitat, or category with pagination supportRetrieve detailed monster information - Use

getMonsterByIdfor comprehensive details (attributes, powers, abilities, strengths, weaknesses)Search monsters by name - Perform partial/fuzzy name matching (returns up to 5 matches)

Find monsters by habitat - Use

getMonsterByHabitatwith exact habitat namesExplore available habitats - Use

getHabitatsto discover all habitat types in the databasePerform basic arithmetic - Add two numbers (testing utility)

Key Features:

Natural language interaction - Chat interface powered by LLMs using LangGraph's ReAct agent pattern

Web-based exploration - Browse and filter monsters through a dedicated explorer interface

Enhanced performance & security - Optimized queries, no direct SQL exposure, reduced LLM cognitive load, and deterministic behavior

Robust LLM integration - Seamless integration with LangChain.js and strict typing for predictable responses

Deployment ready - Configured for cloud platforms like Clever Cloud with PostgreSQL integration

Mentioned for hosting capabilities, allowing deployment of the MCP server on Clever Cloud infrastructure

Integrates with GitHub repositories for accessing the RAGmonsters dataset, which serves as the foundation for the MCP server's functionality

Integrates with LangChain.js for LLM interactions, enabling structured communication between the custom MCP server and language models

Referenced as an LLM API provider that can be used with the MCP server for natural language interactions with the database

Provides domain-specific API access to PostgreSQL databases, specifically optimized for the RAGmonsters dataset with custom query operations

Click on "Install Server".

Wait a few minutes for the server to deploy. Once ready, it will show a "Started" state.

In the chat, type

@followed by the MCP server name and your instructions, e.g., "@RAGmonsters Custom PostgreSQL MCP Servershow me the top 5 monsters by attack power"

That's it! The server will respond to your query, and you can continue using it as needed.

Here is a step-by-step guide with screenshots.

Custom PostgreSQL MCP Server for RAGmonsters

Overview

This repository demonstrates a more advanced approach to integrating Large Language Models (LLMs) with databases using the Model Context Protocol (MCP). While generic MCP PostgreSQL servers allow LLMs to explore databases through raw SQL queries, this project takes a different approach by creating a custom MCP server that provides a domain-specific API tailored to the application's needs.

This implementation uses FastMCP, a high-performance implementation of the Model Context Protocol, which provides improved efficiency and reliability for tool-based interactions with LLMs.

This project uses the RAGmonsters dataset as its foundation. RAGmonsters is an open-source project that provides a rich, fictional dataset of monsters with various attributes, abilities, and relationships - specifically designed for demonstrating and testing Retrieval-Augmented Generation (RAG) systems.

The Problem with Generic MCP Database Access

Generic MCP PostgreSQL servers provide LLMs with a query tool that allows them to:

Explore database schemas

Formulate SQL queries based on natural language questions

Execute those queries against the database

While this approach works, it has several limitations for real-world applications:

Cognitive Load: The LLM must understand the entire database schema

Inefficiency: Multiple SQL queries are often needed to answer a single question

Security Concerns: Raw SQL access requires careful prompt engineering to prevent injection attacks

Performance: Complex queries may be inefficient if the LLM doesn't understand the database's indexing strategy

Domain Knowledge Gap: The LLM lacks understanding of business rules and domain-specific constraints

About RAGmonsters Dataset

RAGmonsters is an open dataset specifically designed for testing and demonstrating Retrieval-Augmented Generation (RAG) systems. It contains information about fictional monsters with rich attributes, abilities, and relationships - making it perfect for natural language querying demonstrations.

The PostgreSQL version of RAGmonsters provides a well-structured relational database with multiple tables and relationships, including:

Monsters with various attributes (attack power, defense, health, etc.)

Abilities that monsters can possess

Elements (fire, water, earth, etc.) with complex relationships

Habitats where monsters can be found

Evolution chains and relationships between monsters

This rich, interconnected dataset is ideal for demonstrating the power of domain-specific APIs versus generic SQL access.

Our Solution: Domain-Specific MCP API

This project demonstrates how to build a custom MCP server that provides a higher-level, domain-specific API for the RAGmonsters dataset. Instead of exposing raw SQL capabilities, our MCP server offers purpose-built functions that:

Abstract Database Complexity: Hide the underlying schema and SQL details

Provide Domain-Specific Operations: Offer functions that align with business concepts

Optimize for Common Queries: Implement efficient query patterns for frequently asked questions

Enforce Business Rules: Embed domain-specific logic and constraints

Improve Security: Limit the attack surface by removing direct SQL access

Related MCP server: MongoDB MCP Server for LLMs

Web Interface

The project includes two main interfaces for interacting with the RAGmonsters dataset:

Explorer Interface

A data-focused interface for exploring and filtering the RAGmonsters dataset through the MCP API:

Browse all monsters with filtering by category, habitat, and rarity

View detailed information about each monster

Interactive UI built with Bootstrap

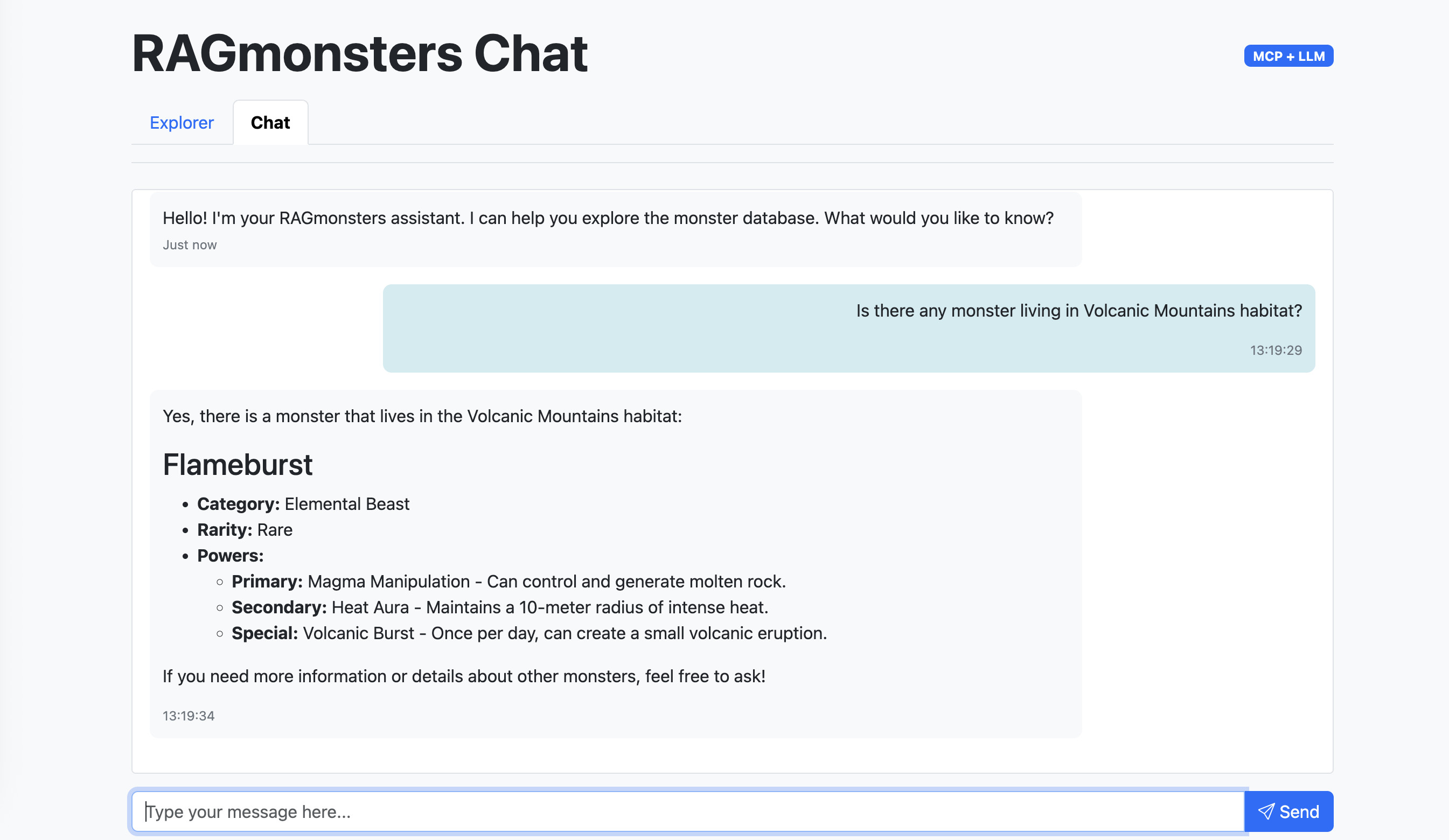

Chat Interface

A natural language interface for interacting with the RAGmonsters dataset:

Ask questions about monsters in natural language

Get Markdown-formatted responses with rich formatting

Powered by LangGraph's ReAct agent pattern

Seamless integration with the MCP tools

This interface allows users to:

Browse all monsters in the dataset

Filter monsters by habitat, category, and rarity

View detailed information about each monster, including powers, abilities, strengths, and weaknesses

Example: Domain-Specific API vs. Generic SQL

Generic MCP PostgreSQL Approach:

Our Custom MCP Server Approach:

Project Structure

Features

Custom MCP Server with FastMCP: High-performance domain-specific API for RAGmonsters data

Optimized Queries: Pre-built efficient database operations

Business Logic Layer: Domain rules and constraints embedded in the API

Structured Response Format: Consistent JSON responses for LLM consumption

Comprehensive Logging: Detailed logging for debugging and monitoring

Test Suite: Scripts to verify server functionality and LLM integration

LLM Integration:

LangChain.js integration with OpenAI and other compatible LLM providers

LangGraph ReAct agent pattern for efficient tool use

Automatic handling of tool calls and responses

Web Interfaces:

Explorer interface for browsing and filtering monsters

Chat interface with Markdown rendering for natural language interaction

Features

LangChain.js Integration: Fully integrated LLM interactions with MCP tools

Web Interface: Explorer and chat interfaces for interacting with the RAGmonsters dataset

Deployment Ready: Configured for easy deployment on platforms like Clever Cloud

Benefits of This Approach

Improved Performance: Optimized queries and caching strategies

Better User Experience: More accurate and faster responses

Reduced Token Usage: LLM doesn't need to process complex SQL or schema information

Enhanced Security: No direct SQL access means reduced risk of injection attacks

Maintainability: Changes to the database schema don't require retraining the LLM

Scalability: Can handle larger and more complex databases

Getting Started

Installation

Clone this repository

Install dependencies:

npm installCopy

.env.exampleto.envand configure your PostgreSQL connection string and LLM API keysRun the MCP server test script:

npm run testRun the LLM integration test script:

npm run test:llmStart the server:

npm start

Available Tools

The MCP server provides the following tools:

getMonsters - Get a list of monsters with optional filtering, sorting, and pagination

Parameters: filters (category, habitat, biome, rarity), sort (field, direction), limit, offset

Returns: Array of monster objects with basic information

getMonsterById - Get detailed information about a specific monster by ID

Parameters: monsterId

Returns: Detailed monster object with all attributes, powers, abilities, strengths, and weaknesses

getHabitats - Get a list of all available habitats in the database

Parameters: None

Returns: Array of habitat names

getCategories - Get a list of all available categories in the database

Parameters: None

Returns: Array of category names

getBiomes - Get a list of all available biomes in the database

Parameters: None

Returns: Array of biome names

getRarities - Get a list of all available rarities in the database

Parameters: None

Returns: Array of rarity names

getMonsterByHabitat - Get monsters by habitat (exact match only)

Parameters: habitat

Returns: Array of monster objects matching the habitat

getMonsterByName - Get monsters by name (partial match)

Parameters: name

Returns: Array of monster objects matching the name

compareMonsters - Compare two monsters side-by-side

Parameters: monsterNameA, monsterNameB

Returns: Comparison data including category, habitat, rarity, and stats

Available Resources

Resources provide static knowledge that the LLM can access for context. Data is cached at server startup for optimal performance.

ragmonsters://schema - Database schema definition

Describes available tables, columns, and data types

ragmonsters://categories - List of monster categories

All available categories (e.g., Aquatic, Elemental, Spirit/Ethereal)

ragmonsters://subcategories - List of monster subcategories

Subcategories grouped by their parent category

ragmonsters://habitats - List of monster habitats

All habitats where monsters can be found

Available Prompts

Prompts are workflow templates that guide the LLM through multi-step analysis using the available tools.

analyze_monster_weakness - Weakness analysis workflow

Fetches monster details, identifies vulnerabilities

Finds counter-monsters and ranks them by effectiveness

Provides battle strategy recommendations

compare_monsters - Monster comparison framework

Deep matchup analysis between two monsters

Analyzes powers, abilities, flaws, and environmental factors

Provides verdict with situational considerations

explore_habitat - Habitat ecosystem analysis

Maps monster population in a habitat

Identifies apex predators and power hierarchy

Provides danger assessment and exploration guidance

build_team - Team composition strategy

Builds optimal monster teams for specific objectives

Considers category diversity and power synergies

Recommends roles and backup alternatives

LLM Integration Architecture

This project uses a modern approach to LLM integration with domain-specific tools:

LangGraph ReAct Agent Pattern

The application uses LangGraph's ReAct (Reasoning and Acting) agent pattern, which:

Processes user queries to understand intent

Determines which tools to use based on the query

Automatically executes the appropriate tools

Synthesizes results into a coherent response

Handles multi-step reasoning when needed

Testing LLM Integration

The project includes a test script that demonstrates how to use LangChain.js to integrate an LLM with the MCP server:

This script:

Connects to the MCP server using the StdioClientTransport

Loads all available MCP tools using LangChain's MCP adapters

Creates a LangChain agent with the OpenAI API

Processes a natural language query about monsters

Shows how the LLM makes tool calls to retrieve information

Logs detailed information about the interaction

You can modify the test queries in the script to explore different capabilities of the system. The script is located at scripts/testLlmWithMcpServer.js.

Prerequisites

Node.js 23 or later

PostgreSQL database with RAGmonsters data

Access to an LLM API (e.g., OpenAI)

FastMCP package (included in dependencies)

Environment Variables

Create a .env file with the following variables:

LLM Configuration

LLM_API_KEY: Your OpenAI API key or compatible provider key

LLM_API_MODEL: The model to use (default: gpt-4o-mini)

LLM_API_URL: The API endpoint (default: OpenAI's endpoint)

The application supports any OpenAI-compatible API, including self-hosted models and alternative providers.

Implementing Smarter MCP Design Principles

This server implements the "Smarter MCP" design principles:

1. Narrow, Named Capabilities

Tools are scoped to specific tasks:

getMonsters: For search and discovery (returning summaries).getMonsterById: For retrieving deep details.

2. Stable Types In and Out

We enforce strict types using Zod schemas:

Enums:

categoryandrarityare restricted to known values (e.g., 'Aquatic', 'Rare') rather than open strings.Resources: The

ragmonsters://schemaresource exposes the data shape to the LLM.

3. Deterministic Behavior

Sorting: All queries use deterministic tie-breakers (e.g., sorting by name also sorts by ID) to ensure consistent pagination.

Prompts: The

ragmonsters://answering-styleprompt guides the LLM to answer consistently.

4. Least Privilege

Explicit Columns: Database queries select specific columns (

name,category, etc.) rather thanSELECT *, preventing accidental exposure of internal data.

5. Guardrails at the Edge

Input Validation: Limits are capped (max 50) and validated by Zod schema.

Sanitization: String inputs are handled via parameterized queries to prevent injection.

6. Human-Readable by Design

Structured Summaries: Responses include a natural-language

summaryfield (e.g., "Found 3 Aquatic monsters...") alongside the raw JSON data.

7. Explainability as a Feature

Metadata: Responses include

source("RAGmonsters DB") andpolicyfields to explain provenance.Next Steps: The API returns

nexthints (e.g., suggestinggetMonsterById) to guide the agent's next action.

Implementing Capability Modeling

We have also implemented the "Capability Modeling" best practices to clearly separate Actions (Tools), Knowledge (Resources), and Guidance (Prompts).

1. Tools: The Actions

Tools perform database queries and return structured data:

getMonsters,getMonsterById: Core retrieval operationsgetHabitats,getCategories,getBiomes,getRarities: Reference data lookupsgetMonsterByHabitat,getMonsterByName: Specialized search operationscompareMonsters(nameA, nameB): Side-by-side comparison with analysis

2. Resources: The Knowledge

Resources provide static reference data cached at server startup:

ragmonsters://schema: Database schema for understanding data structureragmonsters://categories: All monster categoriesragmonsters://subcategories: Subcategories grouped by parent categoryragmonsters://habitats: All available habitats

3. Prompts: The Guidance

Prompts are workflow templates that guide multi-step analysis:

analyze_monster_weakness: Structured weakness analysis and counter-strategy generationcompare_monsters: Detailed matchup framework for comparing two monstersexplore_habitat: Ecosystem analysis for habitat explorationbuild_team: Team composition strategy for specific objectives

Deploying to Clever Cloud

Using the Clever Cloud CLI

Install the Clever Cloud CLI:

npm install -g clever-toolsLogin to your Clever Cloud account:

clever loginCreate a new application:

clever create --type node <APP_NAME>Add your domain (optional but recommended):

clever domain add <YOUR_DOMAIN_NAME>Create a PostgreSQL add-on and link it to your application:

clever addon create <APP_NAME>-pg --plan dev clever service link-addon <APP_NAME>-pgThis will automatically set the

POSTGRESQL_ADDON_URIenvironment variable in your application.Set the required environment variables:

clever env set LLM_API_KEY "your-openai-api-key" clever env set LLM_API_MODEL "gpt-4o-mini" # Optional, defaults to gpt-4o-mini clever env set LLM_API_URL "https://api.your-llm-provider.com" # Optional, for alternative OpenAI-compatible providersDeploy your application:

clever deployOpen your application:

clever open

Using the Clever Cloud Console

You can also deploy directly from the Clever Cloud Console:

Create a new application in the console

Select Node.js as the runtime

Create a PostgreSQL add-on and link it to your application

Set the required environment variables in the console:

LLM_API_KEY: Your OpenAI API keyLLM_API_MODEL: (Optional) The model to use, defaults to gpt-4o-mini

Deploy your application using Git or GitHub integration

Important Notes

The

POSTGRESQL_ADDON_URIenvironment variable is automatically set by Clever Cloud when you link a PostgreSQL add-on to your applicationThe application requires Node.js 20 or later, which is available on Clever Cloud

The application will automatically run on port 8080, which is the default port for Node.js applications on Clever Cloud

License

This project is licensed under the MIT License - see the LICENSE file for details.

Acknowledgments

RAGmonsters for the sample dataset

Model Context Protocol for the MCP specification

FastMCP for the high-performance MCP implementation

Clever Cloud for hosting capabilities