The Say MCP Server provides text-to-speech functionality using macOS's built-in say command.

Speak text: Convert text to speech with customizable voice, speaking rate (1-500 wpm), and background execution options

Voice modulation: Adjust speech parameters mid-text using inline commands for rate changes, volume, pitch, emphasis, and silences

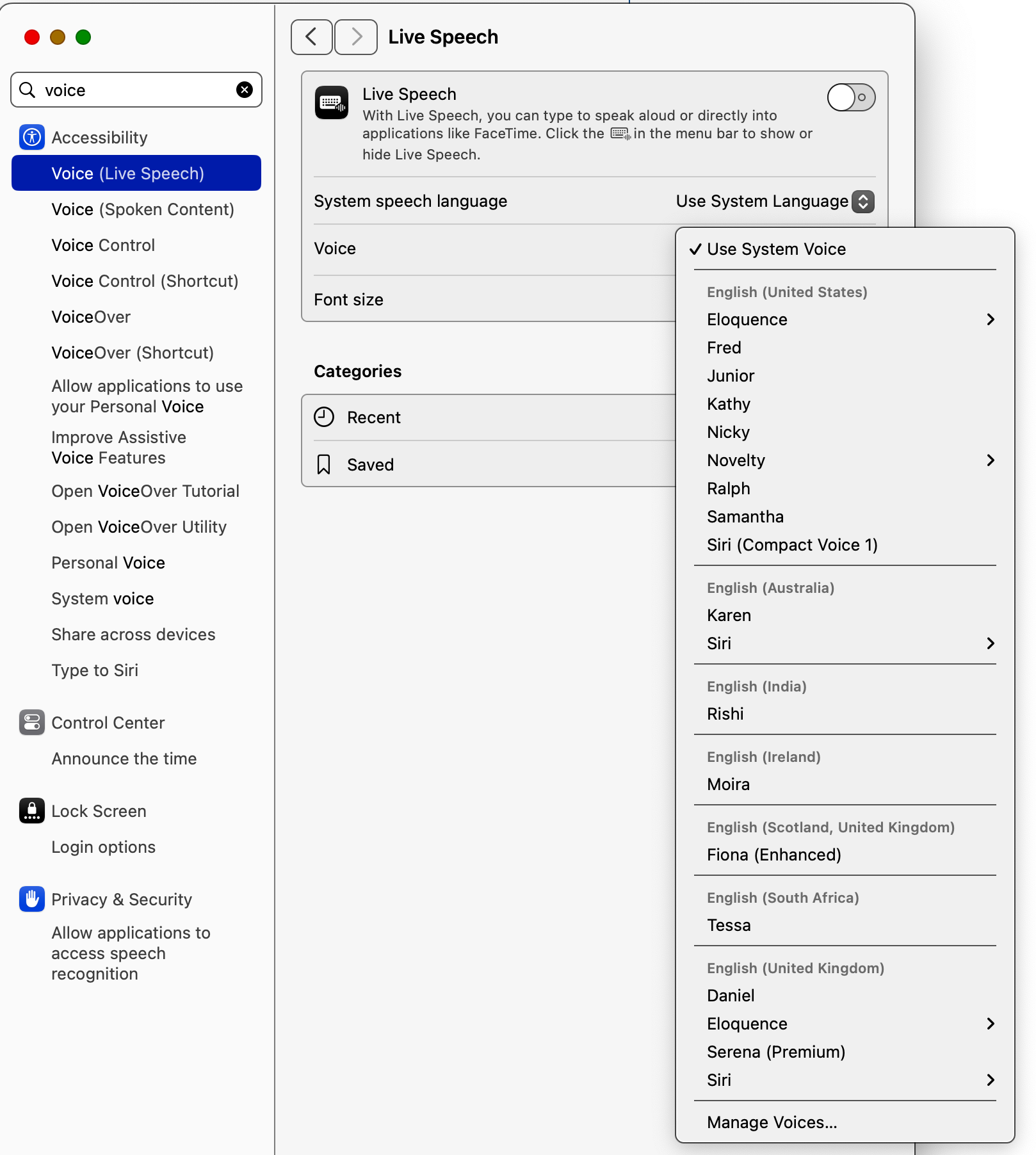

Voice selection: Choose from various voices including Alex, Victoria, and Daniel

List voices: Retrieve all available text-to-speech voices installed on the system

Integration: Works seamlessly with other MCP tools for extended workflows

Integrates with Apple Notes to read note content aloud, with customizable voice properties.

Provides text-to-speech capabilities using macOS's built-in 'say' command, allowing customization of voice, speech rate, volume, pitch, and emphasis. Supports background speech processing and can be integrated with other MCP servers to read content aloud.

Reads YouTube video transcripts aloud with customizable voice settings when combined with a YouTube transcript MCP server.

Click on "Install Server".

Wait a few minutes for the server to deploy. Once ready, it will show a "Started" state.

In the chat, type @ followed by the MCP server name and your instructions, e.g., "@Say MCP Server read my meeting notes aloud using the Samantha voice"

That's it! The server will respond to your query, and you can continue using it as needed.

say-mcp-server

An MCP server that provides text-to-speech functionality using macOS's built-in say command.

Requirements

macOS (uses the built-in say command)

Node.js >= 14.0.0

Configuration

Add the following to your MCP settings configuration file:
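A minimal sketch of what such an entry might look like, assuming a client that reads a `mcpServers` map from its settings file. The server key `say`, the `node` command, and the path `/path/to/say-mcp-server/build/index.js` are placeholders chosen for illustration and are not confirmed by this document; substitute the values for your own installation.

```typescript
// Hypothetical settings entry: the key "say" and the path below are assumptions.
interface McpServerEntry {
  command: string;
  args: string[];
}

const mcpSettings: { mcpServers: Record<string, McpServerEntry> } = {
  mcpServers: {
    say: {
      command: "node",
      // Assumed location of the built server entry point; adjust to your checkout.
      args: ["/path/to/say-mcp-server/build/index.js"],
    },
  },
};

// Serialize to the JSON text that would be placed in the settings file.
const settingsJson: string = JSON.stringify(mcpSettings, null, 2);
```

The serialized `settingsJson` string is what would go into the settings file; the TypeScript wrapper only documents the expected shape.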

Installation

Tools

speak

The speak tool provides access to macOS's text-to-speech capabilities with extensive customization options.

Basic Usage

Use macOS text-to-speech to speak text aloud.

Parameters:

text (required): Text to speak. Supports:

Plain text

Basic punctuation for pauses

Newlines for natural breaks

[[slnc 500]] for 500ms silence

[[rate 200]] for changing speed mid-text

[[volm 0.5]] for changing volume mid-text

[[emph +]] and [[emph -]] for emphasis

[[pbas +10]] for pitch adjustment

voice (optional): Voice to use (default: "Alex")

rate (optional): Speaking rate in words per minute (default: 175, range: 1-500)

background (optional): Run speech in background to allow further MCP interaction (default: false)
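To make the parameters concrete, here is a rough sketch of how they could map onto arguments for the say command, which accepts `-v` for the voice and `-r` for the rate in words per minute. The `SpeakParams` type and `buildSayArgs` function are hypothetical names used for illustration, not the server's actual implementation.

```typescript
// Sketch only: maps the documented speak parameters onto `say` flags.
interface SpeakParams {
  text: string;
  voice?: string;       // documented default: "Alex"
  rate?: number;        // words per minute, documented range 1-500, default 175
  background?: boolean; // documented default: false
}

function buildSayArgs(params: SpeakParams): string[] {
  if (params.text.length === 0) {
    throw new Error("text is required");
  }
  const voice = params.voice ?? "Alex";
  const rate = params.rate ?? 175;
  // Written this way so that NaN is rejected as well.
  if (!(rate >= 1 && rate <= 500)) {
    throw new RangeError("rate must be between 1 and 500 words per minute");
  }
  // `background` does not change the arguments; in this sketch it would only
  // decide whether the caller waits for the process to finish.
  return ["-v", voice, "-r", String(rate), params.text];
}
```

Calling `buildSayArgs({ text: "Hello" })` yields the arguments for `say -v Alex -r 175 Hello`, with the defaults filled in.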

Advanced Features

Voice Modulation:

Dynamic Rate Changes:

Emphasis and Pitch:
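The inline commands listed under the text parameter can be combined in a single string. The following sketch builds such a string from small helpers; the helper names (`silence`, `setRate`, `setVolume`, `pitchOffset`, `emph`) are invented for illustration, and only the bracketed command syntax comes from this document.

```typescript
// Helpers that emit the inline commands listed under the text parameter.
const silence = (ms: number): string => `[[slnc ${ms}]]`;
const setRate = (wpm: number): string => `[[rate ${wpm}]]`;
const setVolume = (level: number): string => `[[volm ${level}]]`;
// Non-negative offsets get an explicit "+" to match the documented [[pbas +10]] form.
const pitchOffset = (delta: number): string =>
  `[[pbas ${delta >= 0 ? "+" : ""}${delta}]]`;
const emph = (sign: "+" | "-"): string => `[[emph ${sign}]]`;

// Compose a text value that changes rate, volume, and pitch mid-utterance.
const modulatedText: string = [
  "Normal speech.",
  silence(500),
  setRate(200),
  "Now a little faster.",
  setVolume(0.5),
  "And quieter.",
  pitchOffset(10),
  emph("+"),
  "Emphasized at a higher pitch.",
].join(" ");
```

The resulting `modulatedText` would be passed as the text parameter of the speak tool.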

Integration Examples

With Marginalia Search:

With YouTube Transcripts:

Background Speech with Multiple Actions:

With Apple Notes:

Example:
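As one possible illustration, a speak call sent over MCP is a JSON-RPC 2.0 request with the method `tools/call`. The sketch below builds such a request; the `speakRequest` helper, the request id, and the sample text are assumptions for illustration, and how a given client actually issues the call will vary.

```typescript
// Shape of an MCP tool invocation (JSON-RPC 2.0, method "tools/call").
interface ToolCallRequest {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: { name: string; arguments: Record<string, unknown> };
}

// Hypothetical helper that wraps speak arguments in a tools/call request.
function speakRequest(
  id: number,
  args: { text: string; voice?: string; rate?: number; background?: boolean }
): ToolCallRequest {
  return {
    jsonrpc: "2.0",
    id,
    method: "tools/call",
    params: { name: "speak", arguments: { ...args } },
  };
}

// Example: speak in the background with a named voice at 200 wpm.
const exampleSpeakCall = speakRequest(1, {
  text: "Hello from the Say MCP Server.",
  voice: "Victoria",
  rate: 200,
  background: true,
});
```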

list_voices

List all available text-to-speech voices on the system.

Example:
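On macOS, `say -v '?'` prints one installed voice per line, typically as a name, a locale, and a sample sentence after a `#`. Whether list_voices relies on that command is an assumption here, as is the exact line layout; the sketch below only shows how such output could be parsed. `Voice` and `parseVoices` are hypothetical names.

```typescript
interface Voice {
  name: string;
  locale: string;
  sample: string;
}

// Parses lines shaped like:
//   "Alex                en_US    # Most people recognize me by my voice."
// Lines that do not match the assumed layout are skipped.
function parseVoices(output: string): Voice[] {
  const voices: Voice[] = [];
  for (const line of output.split("\n")) {
    const m = /^(.+?)\s+([A-Za-z]{2,3}[_-]\w+)\s+#\s?(.*)$/.exec(line);
    if (m) {
      voices.push({ name: m[1], locale: m[2], sample: m[3] });
    }
  }
  return voices;
}
```

The lazy name group lets voice names that contain spaces parse correctly, since the locale pattern must match before the `#` separator.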

Recommended Voices

Contributors

Barton Rhodes (@bmorphism) - barton@vibes.lol

License

MIT