Click on "Install Server".

Wait a few minutes for the server to deploy. Once ready, it will show a "Started" state.

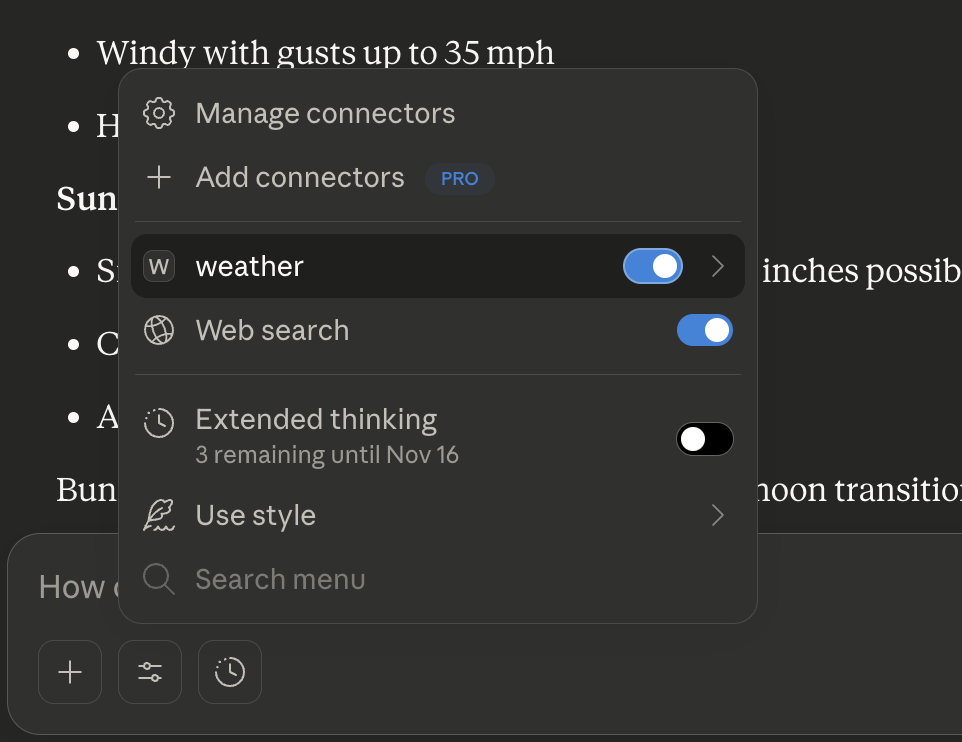

In the chat, type `@` followed by the MCP server name and your instructions, e.g., "@Weather MCP Server what's the forecast for New York City?"

That's it! The server will respond to your query, and you can continue using it as needed.

Here is a step-by-step guide with screenshots.

How the LLM Uses These Tools

Example 1: Weather Alerts

You: "Are there any weather alerts in California?"

Claude's process:

1. Recognizes that it needs weather alert information
2. Calls `get_alerts(state="CA")`
3. Gets the formatted response
4. Presents it to you in natural language
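To make step 2 concrete, here is a minimal sketch of a client turning the model's tool request into a Python call. It assumes a hypothetical `TOOLS` registry, a hypothetical `dispatch` helper, and made-up `SAMPLE_ALERTS` data in place of a real weather API. The request dict is shaped loosely like the `name`/`arguments` parameters of an MCP `tools/call` request; it is not the actual client implementation.

```python
from typing import Callable

# Hypothetical stand-in data; a real server would query a weather API instead.
SAMPLE_ALERTS = {
    "CA": [
        {"event": "Heat Advisory", "area": "Central Valley", "severity": "Moderate"},
    ],
}


def get_alerts(state: str) -> str:
    """Get weather alerts for a US state (two-letter code, e.g. "CA")."""
    alerts = SAMPLE_ALERTS.get(state.upper(), [])
    if not alerts:
        return "No active alerts for this state."
    # Format each alert as a small text block, separated by "---".
    blocks = [
        f"Event: {a['event']}\nArea: {a['area']}\nSeverity: {a['severity']}"
        for a in alerts
    ]
    return "\n---\n".join(blocks)


# Hypothetical registry mapping tool names (as the model sees them) to callables.
TOOLS: dict[str, Callable[..., str]] = {"get_alerts": get_alerts}


def dispatch(tool_call: dict) -> str:
    """Run the tool named in a tool-call request and return its text result."""
    func = TOOLS[tool_call["name"]]
    return func(**tool_call["arguments"])


if __name__ == "__main__":
    # Step 2 of the example: the model asks for get_alerts(state="CA").
    print(dispatch({"name": "get_alerts", "arguments": {"state": "CA"}}))
```

The tool returns plain text; turning that text into a natural-language answer (step 4) is left to the model.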

Example 2: Weather Forecast

You: "What's the weather forecast for San Francisco?"

Claude's process:

1. Knows the coordinates of San Francisco (or looks them up)
2. Calls `get_forecast(latitude=37.7749, longitude=-122.4194)`
3. Gets the forecast for the next 5 periods
4. Summarizes it for you
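Step 3 returns only the next five forecast periods. The sketch below shows one way such a formatter could trim and format them. The field names (`name`, `temperature`, `temperatureUnit`, `windSpeed`, `windDirection`, `detailedForecast`) are assumed to mirror the National Weather Service forecast `periods` objects, and `SAMPLE_PERIODS` is made-up data, so treat this as an illustration rather than the server's actual code.

```python
def format_forecast(periods: list[dict], limit: int = 5) -> str:
    """Format up to `limit` forecast periods as readable text blocks."""
    blocks = []
    for p in periods[:limit]:  # keep only the first `limit` periods
        blocks.append(
            f"{p['name']}:\n"
            f"Temperature: {p['temperature']}°{p['temperatureUnit']}\n"
            f"Wind: {p['windSpeed']} {p['windDirection']}\n"
            f"Forecast: {p['detailedForecast']}"
        )
    return "\n---\n".join(blocks)


# Hypothetical sample data: seven periods, of which only five are shown.
SAMPLE_PERIODS = [
    {
        "name": f"Period {i}",
        "temperature": 60 + i,
        "temperatureUnit": "F",
        "windSpeed": "10 mph",
        "windDirection": "W",
        "detailedForecast": "Partly cloudy.",
    }
    for i in range(1, 8)
]

if __name__ == "__main__":
    print(format_forecast(SAMPLE_PERIODS))
```

Capping the output at a few periods keeps the tool result short, which leaves the model less text to summarize.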

Run the MCP server:

```bash
uv run weather.py
```

Update the Claude Desktop config file, `claude_desktop_config.json`, with the following content:

```json
{
  "mcpServers": {
    "weather": {
      "command": "/Users/santhosh.sharma/.local/bin/uv",
      "args": ["--directory", "/Users/santhosh.sharma/Repositories/mcp-weather", "run", "weather.py"]
    }
  }
}
```

Replace both paths with the absolute paths to your own `uv` binary and project directory.
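If the config file already lists other servers, overwriting it would drop them. The sketch below merges a single entry instead and prints the command line the entry corresponds to. The helper names `add_server` and `launch_command` and the `/path/to/...` values are hypothetical placeholders, and the demo writes to a temporary file rather than your real config.

```python
import json
import shlex
import tempfile
from pathlib import Path


def add_server(config_path: Path, name: str, command: str, args: list[str]) -> dict:
    """Merge one MCP server entry into a Claude Desktop config file."""
    # Start from the existing config so other servers are preserved.
    config = json.loads(config_path.read_text()) if config_path.exists() else {}
    config.setdefault("mcpServers", {})[name] = {"command": command, "args": args}
    config_path.write_text(json.dumps(config, indent=2))
    return config


def launch_command(entry: dict) -> str:
    """Return the shell command equivalent to a server entry."""
    return shlex.join([entry["command"], *entry["args"]])


if __name__ == "__main__":
    # Placeholder paths: substitute your own uv binary and project directory.
    uv = "/path/to/uv"
    project = "/path/to/mcp-weather"
    with tempfile.TemporaryDirectory() as tmp:
        path = Path(tmp) / "claude_desktop_config.json"  # demo file, not the real one
        cfg = add_server(path, "weather", uv, ["--directory", project, "run", "weather.py"])
        print(launch_command(cfg["mcpServers"]["weather"]))
        # → /path/to/uv --directory /path/to/mcp-weather run weather.py
```

The printed command is the one to run by hand if the server fails to start from Claude Desktop; `--directory` makes `uv` run `weather.py` from the project folder.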

Reference: https://modelcontextprotocol.io/docs/develop/build-server#python

To troubleshoot, analyze the logs in `~/Library/Logs/Claude/mcp.log` (macOS).
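For a quick first pass over that log, a small script can pull out the most recent lines that mention errors. This is a minimal sketch: the hypothetical `error_lines` helper assumes that problem lines contain the word "error", which may not match every message in the actual log format.

```python
from collections import deque
from pathlib import Path

LOG_PATH = Path.home() / "Library" / "Logs" / "Claude" / "mcp.log"


def error_lines(path: Path, keyword: str = "error", last: int = 20) -> list[str]:
    """Return the last `last` lines containing `keyword` (case-insensitive)."""
    matches = deque(maxlen=last)  # keeps only the newest matches
    with path.open(encoding="utf-8", errors="replace") as f:
        for line in f:
            if keyword.lower() in line.lower():
                matches.append(line.rstrip("\n"))
    return list(matches)


if __name__ == "__main__":
    if LOG_PATH.exists():
        for line in error_lines(LOG_PATH):
            print(line)
    else:
        print(f"No log file at {LOG_PATH}")
```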

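When you ask Claude something with this MCP server connected, it chooses a tool based on the tool's name and description (taken from the function's docstring), so clear docstrings matter.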

Docstring best practices:

- First line: a one-line summary in the imperative mood ("Get", "Format", "Calculate")
- Use present tense in the rest of the docstring ("Returns the sum", not "Will return the sum")
- Be specific about parameter types and expected values
- Include examples for complex functions
- Keep docstrings updated when the code changes
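Here is a function whose docstring follows the practices above, plus a helper that reads back the one-line summary. In a FastMCP-based server like the one in the reference tutorial, that summary is, as far as we understand, the part of the tool description a model sees first. `celsius_to_fahrenheit` and `summary_line` are hypothetical examples, not part of `weather.py`.

```python
import inspect


def celsius_to_fahrenheit(celsius: float) -> float:
    """Convert a temperature from Celsius to Fahrenheit.

    Args:
        celsius: Temperature in degrees Celsius, e.g. 21.5.

    Returns:
        The temperature in degrees Fahrenheit.

    Example:
        >>> celsius_to_fahrenheit(100)
        212.0
    """
    return celsius * 9 / 5 + 32


def summary_line(func) -> str:
    """Return the first line of a function's docstring (its one-line summary)."""
    doc = inspect.getdoc(func) or ""
    return doc.splitlines()[0] if doc else ""


if __name__ == "__main__":
    import doctest

    doctest.testmod()  # runs the Example section of the docstring
    print(summary_line(celsius_to_fahrenheit))
```

The imperative summary line, the typed `Args`/`Returns` sections, and the runnable example map directly onto the checklist; `doctest` also helps keep the example in sync when the code changes.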