Click on "Install Server".

Wait a few minutes for the server to deploy. Once ready, it will show a "Started" state.

In the chat, type @ followed by the MCP server name and your instructions, e.g., "@NCP - Natural Context Provider find a tool to read and summarize a PDF file"

That's it! The server will respond to your query, and you can continue using it as needed.

Here is a step-by-step guide with screenshots.

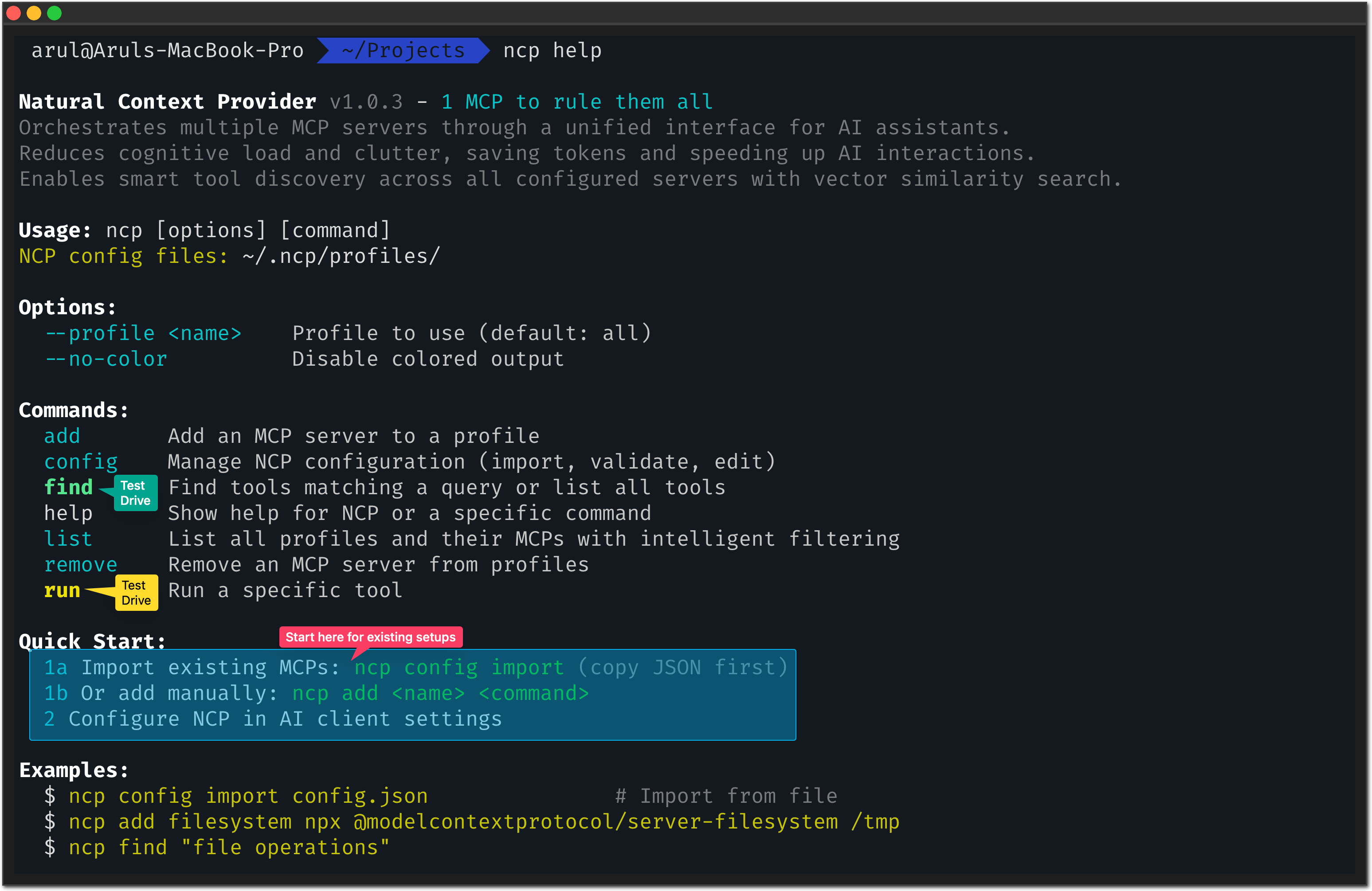

NCP - Natural Context Provider

1 MCP to rule them all

Your MCPs, supercharged. Find any tool instantly, execute with code mode, run on schedule, discover skills, load Photons, ready for any client. Smart loading saves tokens and energy.

💍 What is NCP?

Instead of your AI juggling 50+ tools scattered across different MCPs, NCP gives it a single, unified interface with code mode execution, scheduling, skills discovery, and custom Photons.

Your AI sees just 2-3 simple tools:

find - Search for any tool, skill, or Photon: "I need to read a file" → finds the right tool automatically

code - Execute TypeScript directly: await github.create_issue({...}) (code mode, enabled by default)

run - Execute tools individually (when code mode is disabled)

Behind the scenes, NCP manages all 50+ tools + skills + Photons: routing requests, discovering the right capability, executing code, scheduling tasks, managing health, and caching responses.
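The single-interface idea can be sketched roughly as a registry that hides many tools behind find and run. This is an illustration only: ToolFacade, ToolEntry, and the two registered tools are hypothetical names, and the keyword match stands in for NCP's real discovery, which the README describes as semantic.

```typescript
// Hypothetical sketch: names and shapes are illustrative, not NCP's internals.
type ToolHandler = (args: Record<string, unknown>) => Promise<unknown>;

interface ToolEntry {
  id: string; // "<mcp>:<tool>", e.g. "fs:read_file"
  description: string; // text that find() searches
  handler: ToolHandler;
}

class ToolFacade {
  private tools = new Map<string, ToolEntry>();

  register(entry: ToolEntry): void {
    this.tools.set(entry.id, entry);
  }

  // Naive keyword match on descriptions; a stand-in for semantic search.
  find(query: string): string[] {
    const words = query
      .toLowerCase()
      .split(/\s+/)
      .filter((w) => w.length > 2);
    return Array.from(this.tools.values())
      .filter((t) => words.some((w) => t.description.toLowerCase().includes(w)))
      .map((t) => t.id);
  }

  // Dispatch a call to whichever tool owns the id.
  async run(id: string, args: Record<string, unknown>): Promise<unknown> {
    const tool = this.tools.get(id);
    if (!tool) throw new Error(`Unknown tool: ${id}`);
    return tool.handler(args);
  }
}

const facade = new ToolFacade();
facade.register({
  id: "fs:read_file",
  description: "Read the contents of a file from disk",
  handler: async (args) => `contents of ${String(args.path)}`,
});
facade.register({
  id: "github:create_issue",
  description: "Create an issue in a GitHub repository",
  handler: async (args) => ({ created: args.title }),
});

console.log(facade.find("I need to read a file"));
```

The client only ever sees find and run; everything registered behind the facade stays out of its context until a query asks for it.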

Why this matters:

Your AI stops analyzing "which tool do I use?" and starts doing actual work

Code mode lets AI write multi-step TypeScript workflows combining tools, skills, and scheduling

Skills provide domain expertise: canvas design, PDF manipulation, document generation, more

Photons enable custom TypeScript MCPs without npm publishing

97% fewer tokens burned on tool confusion (2,500 vs 103,000 for 80 tools)

5x faster responses (sub-second tool selection vs 5-8 seconds)

Your AI becomes focused. Not desperate.

🚀 NEW: Project-level configuration - each project can define its own MCPs automatically

What's MCP? The Model Context Protocol by Anthropic lets AI assistants connect to external tools and data sources. Think of MCPs as "plugins" that give your AI superpowers like file access, web search, databases, and more.

📑 Quick Navigation

The Problem - Why too many tools break your AI

The Solution - How NCP transforms your experience

Getting Started - Installation & quick start

Try It Out - See the CLI in action

Supercharged Features - How NCP empowers your MCPs

Setup by Client - Claude Desktop, Cursor, VS Code, etc.

Popular MCPs - Community favorites to add

Advanced Features - Project config, scheduling, remote MCPs

Troubleshooting - Common issues & solutions

How It Works - Technical deep dive

Contributing - Help us improve NCP

😤 The MCP Paradox: From Assistant to Desperate

You gave your AI assistant 50 tools to be more capable. Instead, you got desperation:

Paralyzed by choice ("Should I use read_file or get_file_content?")

Exhausted before starting ("I've spent my context limit analyzing which tool to use")

Costs explode (50+ tool schemas burn tokens before any real work happens)

Asks instead of acts (used to be decisive, now constantly asks for clarification)

🧸 Why Too Many Tools Break the System

Think about it like this:

A child with one toy → Treasures it, masters it, creates endless games with it

A child with 50 toys → Can't hold them all, gets overwhelmed, stops playing entirely

Your AI is that child. MCPs are the toys. More isn't always better.

The most creative people thrive with constraints, not infinite options. A poet given "write about anything" faces writer's block. Given "write a haiku about rain"? Instant inspiration.

Your AI is the same. Give it one perfect tool → Instant action. Give it 50 tools → Cognitive overload. NCP provides just-in-time tool discovery so your AI gets exactly what it needs, when it needs it.

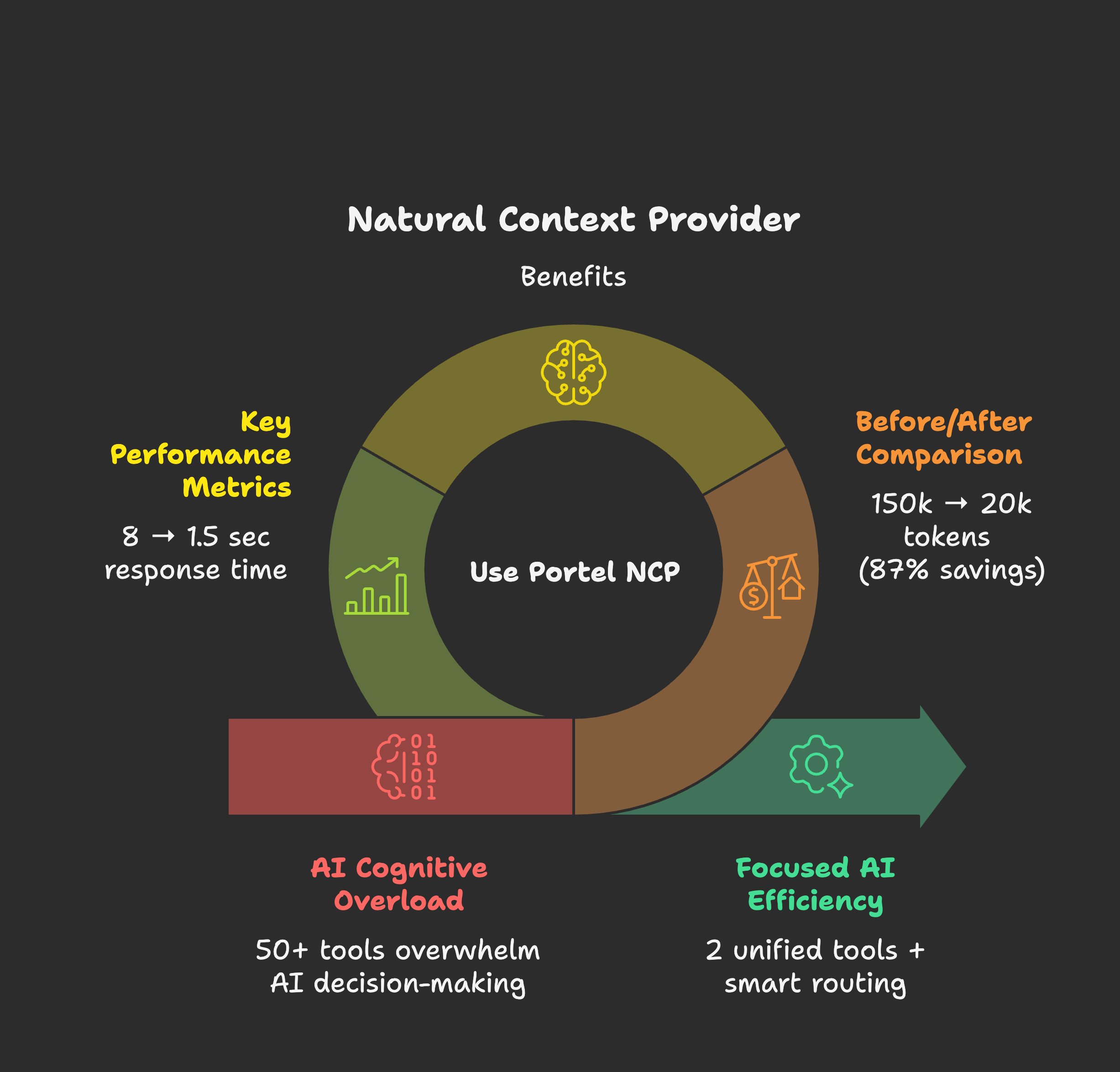

📊 The Before & After Reality

Before NCP: Desperate Assistant 😵💫

When your AI assistant manages 50 tools directly:

What happens:

AI burns 50%+ of context just understanding what tools exist

Spends 5-8 seconds analyzing which tool to use

Often picks wrong tool due to schema confusion

Hits context limits mid-conversation

After NCP: Executive Assistant ✨

With NCP as Chief of Staff:

Real results from our testing:

| Your MCP Setup | Without NCP | With NCP | Token Savings |
|---|---|---|---|
| Small (5 MCPs, 25 tools) | 15,000 tokens | 8,000 tokens | 47% saved |
| Medium (15 MCPs, 75 tools) | 45,000 tokens | 12,000 tokens | 73% saved |
| Large (30 MCPs, 150 tools) | 90,000 tokens | 15,000 tokens | 83% saved |
| Enterprise (50+ MCPs, 250+ tools) | 150,000 tokens | 20,000 tokens | 87% saved |

Translation:

5x faster responses (8 seconds → 1.5 seconds)

12x longer conversations before hitting limits

90% reduction in wrong tool selection

Zero context exhaustion in typical sessions
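The savings percentages in the table above follow directly from the two token columns. A small sketch of that arithmetic, where percentSaved and the setups array are illustrative names rather than anything NCP ships:

```typescript
// Percentage of tokens saved, rounded to the nearest whole percent.
function percentSaved(withoutNcp: number, withNcp: number): number {
  return Math.round(((withoutNcp - withNcp) / withoutNcp) * 100);
}

// Token counts copied from the table above.
const setups = [
  { name: "Small", withoutNcp: 15000, withNcp: 8000 },
  { name: "Medium", withoutNcp: 45000, withNcp: 12000 },
  { name: "Large", withoutNcp: 90000, withNcp: 15000 },
  { name: "Enterprise", withoutNcp: 150000, withNcp: 20000 },
];

for (const s of setups) {
  console.log(`${s.name}: ${percentSaved(s.withoutNcp, s.withNcp)}% saved`);
}
```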

📋 Prerequisites

Node.js 18+ (Download here)

npm (included with Node.js) or npx for running packages

Command line access (Terminal on Mac/Linux, Command Prompt/PowerShell on Windows)

🚀 Installation

Choose your MCP client for setup instructions:

| Client | Description | Setup Guide |
|---|---|---|
| Claude Desktop | Anthropic's official desktop app. Best for NCP - one-click .dxt install with auto-sync | |
| Claude Code | Terminal-first AI workflow. Works out of the box! | Built-in support |
| VS Code | GitHub Copilot with Agent Mode. Use NCP for semantic tool discovery | |
| Cursor | AI-first code editor with Composer. Popular VS Code alternative | |
| Windsurf | Codeium's AI-native IDE with Cascade. Built on VS Code | |
| Cline | VS Code extension for AI-assisted development with MCP support | |
| Continue | VS Code AI assistant with Agent Mode and local LLM support | |
| Want more clients? | See the full list of MCP-compatible clients and tools | |
| Other Clients | Any MCP-compatible client via npm | |

Quick Start (npm)

For advanced users or MCP clients not listed above:

Step 1: Install NCP

Step 2: Import existing MCPs (optional)

Step 3: Configure your MCP client

Add to your client's MCP configuration:

✅ Done! Your AI now sees just 2 tools instead of 50+.

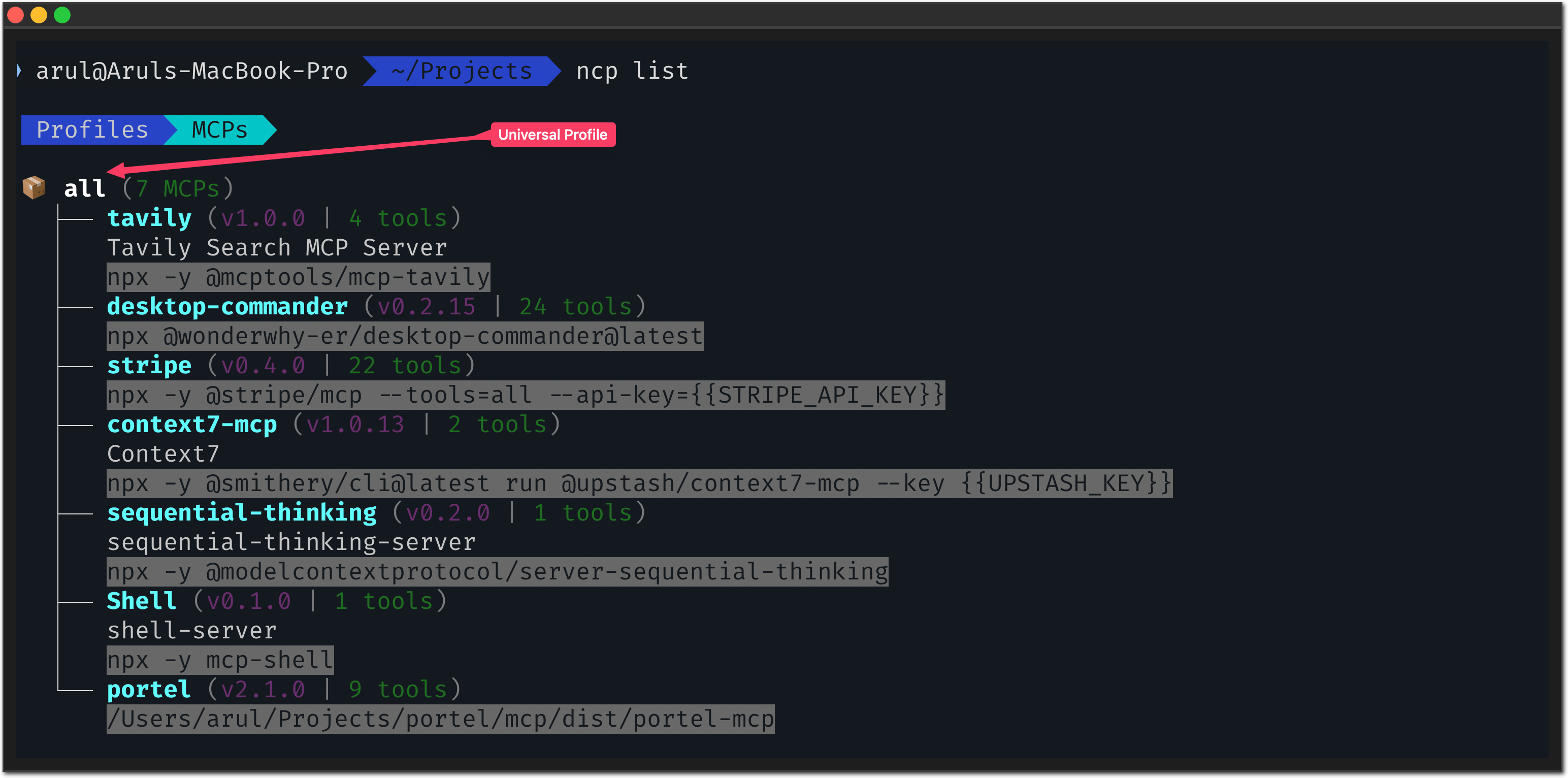

🧪 Test Drive: See the Difference Yourself

Want to experience what your AI experiences? NCP has a human-friendly CLI:

🔍 Smart Discovery

Notice: NCP understands intent, not just keywords. Just like your AI needs.
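To make "intent, not just keywords" concrete, here is a toy version of vector similarity ranking. The three-dimensional vectors are hand-made stand-ins for real embeddings, and the tool names are hypothetical; the embedding model and index NCP actually uses are not shown in this README.

```typescript
type Vec = number[];

// Cosine similarity: 1 means same direction, 0 means unrelated.
function cosine(a: Vec, b: Vec): number {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return normA === 0 || normB === 0 ? 0 : dot / Math.sqrt(normA * normB);
}

// Hand-made "embeddings" (axes: file access, web, messaging).
// A real system would get these from an embedding model.
const toolVectors: Record<string, Vec> = {
  "fs:read_file": [0.9, 0.1, 0.0],
  "web:search": [0.1, 0.9, 0.1],
  "slack:post_message": [0.0, 0.2, 0.9],
};

// Return tool ids ordered from most to least similar to the query vector.
function rank(query: Vec): string[] {
  return Object.keys(toolVectors).sort(
    (x, y) => cosine(query, toolVectors[y]) - cosine(query, toolVectors[x]),
  );
}

// Pretend embedding of "open a document": no keyword overlap with
// "read_file", but it points in the same direction.
const queryVec: Vec = [0.8, 0.2, 0.1];
console.log(rank(queryVec));
```

Because ranking depends on direction in the vector space rather than shared words, a query can match a tool whose name and description it never mentions.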

📋 Ecosystem Overview

⚡ Direct Testing

Why this matters: You can debug and test tools directly, just like your AI would use them.

✅ Verify Everything Works

✅ Success indicators:

NCP shows version number

ncp list shows your imported MCPs

ncp find returns relevant tools

Your AI client shows only NCP in its tool list

💪 From Tools to Automation: The Real Power

You've seen find (discover tools) and code (execute TypeScript). Individually, they're useful. Together with scheduling, they become an automation powerhouse.

A Real Example: The MCP Conference Scraper

We wanted to stay on top of MCP-related conferences and workshops for an upcoming release. Instead of manually checking websites daily, we asked Claude:

"Set up a daily scraper that finds MCP conferences and saves them to a CSV file"

What Claude did:

Used code mode to write the scraper:

```typescript
// Search the web for MCP conferences
const results = await web.search({ query: "Model Context Protocol conference 2025" });

// Read each result and extract details
for (const url of results) {
  const content = await web.read({ url });
  // Extract title, deadline, description...
  // Save to ~/.ncp/mcp-conferences.csv
}
```

Used the scheduler to run it every day:

```bash
ncp schedule create code:run "every day at 9am" \
  --name "MCP Conference Scraper" \
  --catchup-missed
```

How to set this up yourself:

First, install the web photon (provides search and read capabilities):

Then ask Claude to create the scraper - it will use the web photon automatically.

What happens now:

Every morning at 9am, the scraper runs automatically

Searches for new MCP events and adds them to the CSV

If our laptop was closed at 9am, it catches up when we open it

We wake up to fresh conference data - no manual work

The insight: find and code let AI write automation. schedule makes it run forever. That's the powerhouse.

💡 Why NCP Transforms Your AI Experience

🧠 From Desperation to Delegation

Desperate Assistant: "I see 50 tools... which should I use... let me think..."

Executive Assistant: "I need file access. Done." (NCP handles the details)

💰 Massive Token Savings

Before: 100k+ tokens burned on tool confusion

After: 2.5k tokens for focused execution

Result: 40x token efficiency = 40x longer conversations

🎯 Eliminates Choice Paralysis

Desperate: AI freezes, picks wrong tool, asks for clarification

Executive: NCP's Chief of Staff finds the RIGHT tool instantly

🚀 Confident Action

Before: 8-second delays, hesitation, "Which tool should I use?"

After: Instant decisions, immediate execution, zero doubt

Bottom line: Your AI goes from desperate assistant to executive assistant.

⚡ Supercharged Features

Here's exactly how NCP empowers your MCPs:

| Feature | What It Does | Why It Matters |
|---|---|---|
| 🔍 Instant Tool Discovery | Semantic search understands intent ("read a file") not just keywords | Your AI finds the RIGHT tool in <1s instead of analyzing 50 schemas |
| 📦 On-Demand Loading | MCPs and tools load only when needed, not at startup | Saves 97% of context tokens - AI starts working immediately |
| ⏰ Automated Scheduling | Run any tool on cron schedules or natural language times | Background automation without keeping AI sessions open |
| 🔌 Universal Compatibility | Works with Claude Desktop, Claude Code, Cursor, VS Code, and any MCP client | One configuration for all your AI tools - no vendor lock-in |
| 💾 Smart Caching | Intelligent caching of tool schemas and responses | Eliminates redundant indexing - energy efficient and fast |

The result: Your MCPs go from scattered tools to a unified, intelligent system that your AI can actually use effectively.

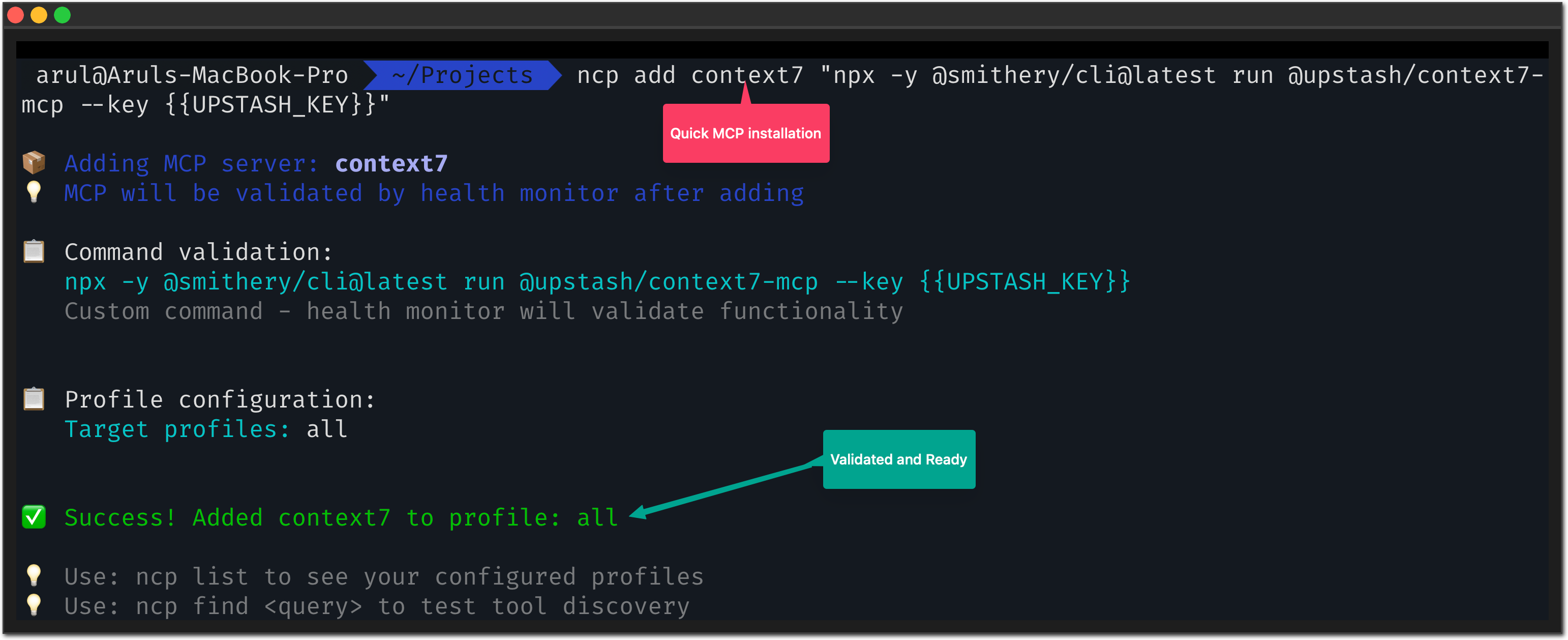

🛠️ For Power Users: Manual Setup

Prefer to build from scratch? Add MCPs manually:

💡 Pro tip: Browse Smithery.ai (2,200+ MCPs) or mcp.so to discover tools for your specific needs.

🎯 Popular MCPs That Work Great with NCP

🔥 Most Downloaded

🛠️ Development Essentials

🌐 Productivity & Integrations

🤖 Internal MCPs

NCP includes powerful internal MCPs that extend functionality beyond external tool orchestration:

Scheduler MCP - Automate Any Tool

Schedule any MCP tool to run automatically using cron or natural language schedules.

Features:

✅ Natural language schedules ("every day at 9am", "every monday")

✅ Standard cron expressions for advanced control

✅ Automatic validation before scheduling

✅ Execution history and monitoring

✅ Works even when NCP is not running (system cron integration)
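As a rough picture of how a natural language schedule relates to a cron expression, the sketch below converts two phrase shapes into standard five-field cron. It is not NCP's parser: toCron is a hypothetical helper, it handles only these two patterns, and running "every monday" at midnight is an assumed default.

```typescript
const DAYS = [
  "sunday",
  "monday",
  "tuesday",
  "wednesday",
  "thursday",
  "friday",
  "saturday",
];

// Convert a tiny subset of phrases to 5-field cron (minute hour dom month dow).
// Returns null for anything it does not understand.
function toCron(phrase: string): string | null {
  const text = phrase.trim().toLowerCase();

  // "every day at 9am" / "every day at 5pm"
  const daily = /^every day at (\d{1,2})(am|pm)$/.exec(text);
  if (daily) {
    const hour12 = Number(daily[1]);
    if (hour12 < 1 || hour12 > 12) return null;
    // 12am is hour 0 and 12pm is hour 12 on a 24-hour clock.
    const hour24 = (hour12 % 12) + (daily[2] === "pm" ? 12 : 0);
    return `0 ${hour24} * * *`;
  }

  // "every monday": assumed to mean midnight on that weekday.
  const weekly = /^every (\w+)$/.exec(text);
  if (weekly) {
    const dow = DAYS.indexOf(weekly[1]);
    return dow === -1 ? null : `0 0 * * ${dow}`;
  }

  return null;
}

console.log(toCron("every day at 9am"));
console.log(toCron("every monday"));
```

Returning null for unrecognized phrases mirrors the "automatic validation before scheduling" idea: a schedule that cannot be parsed should be rejected up front rather than silently never run.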

MCP Management MCP - Install MCPs from AI

Install and configure MCPs dynamically through natural language.

Features:

✅ Search and discover MCPs from registries

✅ Install MCPs without manual configuration

✅ Update and remove MCPs programmatically

✅ AI can self-extend with new capabilities

Skills Management MCP - Extend Claude with Plugins

Manage Anthropic Agent Skills - modular extensions that add specialized knowledge and tools to Claude.

Features:

✅ Vector-powered semantic search for skills

✅ One-command install from official marketplace

✅ Progressive disclosure (metadata → full content → resources)

✅ Official Anthropic marketplace integration

✅ Custom marketplace support

✅ Auto-loading of installed skills

Analytics MCP - Visualize Usage & Performance

View usage statistics, token savings, and performance metrics directly in your chat.

Features:

✅ Usage trends and most used tools

✅ Token savings analysis (Code-Mode efficiency)

✅ Performance metrics (response times, error rates)

✅ ASCII-formatted charts for AI consumption

Configuration: Internal MCPs are disabled by default. Enable in your profile settings:

🔧 Advanced Features

Smart Health Monitoring

NCP automatically detects broken MCPs and routes around them:

🎯 Result: Your AI never gets stuck on broken tools.
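One way to picture "routes around them" is a failure counter per MCP plus a selection step that skips anything over a threshold. The sketch below is an assumption-laden illustration: McpStatus, MAX_FAILURES, and the three-strikes rule are invented for the example, and NCP's actual health checks may work differently.

```typescript
interface McpStatus {
  name: string;
  consecutiveFailures: number;
}

// Assumed threshold: three failures in a row marks an MCP as unhealthy.
const MAX_FAILURES = 3;

function isHealthy(status: McpStatus): boolean {
  return status.consecutiveFailures < MAX_FAILURES;
}

// A success resets the counter; a failure increments it.
function recordResult(status: McpStatus, ok: boolean): McpStatus {
  return {
    ...status,
    consecutiveFailures: ok ? 0 : status.consecutiveFailures + 1,
  };
}

// Pick the first healthy MCP among those able to serve a request.
function pickProvider(candidates: McpStatus[]): string | null {
  const healthy = candidates.find(isHealthy);
  return healthy ? healthy.name : null;
}

let primary: McpStatus = { name: "files-primary", consecutiveFailures: 0 };
const backup: McpStatus = { name: "files-backup", consecutiveFailures: 0 };

// Simulate three failed calls against the primary.
for (let i = 0; i < 3; i++) primary = recordResult(primary, false);

console.log(pickProvider([primary, backup]));
```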

Multi-Profile Organization

Organize MCPs by project or environment:

🚀 Project-Level Configuration

New: Configure MCPs per project with automatic detection - perfect for teams and Cloud IDEs:

How it works:

📁 Local .ncp directory → Uses project configuration

🏠 No local .ncp directory → Falls back to global ~/.ncp

🎯 Zero profile management needed → Everything goes to default all.json
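The lookup order described above can be sketched as a single function. resolveConfigDir is a hypothetical name, and the existence check is passed in as a parameter so the example stays self-contained; in Node you would likely pass fs.existsSync.

```typescript
// Prefer a project-local .ncp directory, otherwise fall back to the global one.
function resolveConfigDir(
  projectDir: string,
  homeDir: string,
  exists: (path: string) => boolean,
): string {
  const local = `${projectDir}/.ncp`;
  return exists(local) ? local : `${homeDir}/.ncp`;
}

// Simulated file system: only one project ships a .ncp folder.
const present = new Set(["/work/team-app/.ncp"]);
const exists = (path: string): boolean => present.has(path);

console.log(resolveConfigDir("/work/team-app", "/home/me", exists));
console.log(resolveConfigDir("/work/scratch", "/home/me", exists));
```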

Perfect for:

🤖 Claude Code projects (project-specific MCP tooling)

👥 Team consistency (ship .ncp folder with your repo)

🔧 Project-specific tooling (each project defines its own MCPs)

📦 Environment isolation (no global MCP conflicts)

HTTP/SSE Transport & Hibernation Support

NCP supports both stdio (local) and HTTP/SSE (remote) MCP servers:

Stdio Transport (Traditional):

HTTP/SSE Transport (Remote):

OAuth 2.1 with PKCE (MCP 2025-03-26 spec):

OAuth 2.1 Features:

✅ PKCE (Proof Key for Code Exchange) - Required by OAuth 2.1 for security

✅ Dynamic Client Registration (RFC 7591) - Auto-registers if no clientId provided

✅ Token Storage & Auto-Refresh - Tokens saved to ~/.ncp/auth/ and refreshed automatically

✅ Browser Authorization Flow - Opens browser for user consent

✅ Headless Fallback - Manual code entry for servers/CI environments

✅ Protected Resource Discovery (RFC 9728) - Auto-discovers OAuth endpoints

First-time setup flow:

NCP opens browser to authorization URL

You grant permissions

Browser redirects to local callback (http://localhost:9876)

NCP exchanges code for access token (with PKCE)

Token saved and refreshed automatically for future requests
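The PKCE part of the flow above can be sketched with Node's built-in crypto module. The S256 derivation and the query parameters follow RFC 7636 and standard OAuth; the function names, the example endpoint, and the client id are placeholders, and this is not NCP's own implementation.

```typescript
import { createHash, randomBytes } from "node:crypto";

// Random, URL-safe secret that stays on the client (43 characters).
function createCodeVerifier(): string {
  return randomBytes(32).toString("base64url");
}

// S256 challenge: BASE64URL(SHA-256(verifier)), sent with the authorization request.
function codeChallengeS256(verifier: string): string {
  return createHash("sha256").update(verifier).digest("base64url");
}

// Build the URL the browser is opened to. Endpoint and clientId are placeholders.
function buildAuthorizationUrl(
  authorizationEndpoint: string,
  clientId: string,
  challenge: string,
  state: string,
): string {
  const url = new URL(authorizationEndpoint);
  url.searchParams.set("response_type", "code");
  url.searchParams.set("client_id", clientId);
  url.searchParams.set("redirect_uri", "http://localhost:9876");
  url.searchParams.set("code_challenge", challenge);
  url.searchParams.set("code_challenge_method", "S256");
  url.searchParams.set("state", state);
  return url.toString();
}

const verifier = createCodeVerifier();
const challenge = codeChallengeS256(verifier);
console.log(
  buildAuthorizationUrl("https://auth.example.com/authorize", "my-client", challenge, "xyz"),
);
```

The verifier is sent only in the later token exchange, so an attacker who intercepts the authorization code cannot redeem it without the original secret.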

🔋 Hibernation-Enabled Servers:

NCP automatically supports hibernation-enabled MCP servers (like Cloudflare Durable Objects or Metorial):

Zero configuration needed - Hibernation works transparently

Automatic wake-up - Server wakes on demand when NCP makes requests

State preservation - Server state is maintained across hibernation cycles

Cost savings - Only pay when MCPs are actively processing requests

How it works:

Server hibernates when idle (consumes zero resources)

NCP sends a request → Server wakes instantly

Server processes request and responds

Server returns to hibernation after idle timeout

Perfect for:

💰 Cost optimization - Only pay for active processing time

🌐 Cloud-hosted MCPs - Metorial, Cloudflare Workers, serverless platforms

♻️ Resource efficiency - No idle server costs

🚀 Scale to zero - Servers automatically sleep when not needed

Note: Hibernation is a server-side feature. NCP's standard HTTP/SSE client automatically works with both traditional and hibernation-enabled servers without any special configuration.

Photon Runtime (CLI vs DXT)

The TypeScript Photon runtime is enabled by default, but the toggle lives in different places depending on how you run NCP:

CLI / npm installs: Edit ~/.ncp/settings.json (or run ncp config) and set enablePhotonRuntime: true or false. You can also override ad-hoc with NCP_ENABLE_PHOTON_RUNTIME=true ncp find "photon".

DXT / client bundles (Claude Desktop, Cursor, etc.): These builds ignore ~/.ncp/settings.json. Configure photons by setting the env var inside the client config:

If you disable the photon runtime, internal MCPs continue to work, but .photon.ts files are ignored until you re-enable the flag.
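The precedence described in this section can be summarized as: environment variable first, then settings.json (for CLI installs only), then the default of enabled. The sketch below encodes that reading. photonRuntimeEnabled and the ignoreSettingsFile flag are hypothetical, and treating only the string "true" as enabled is an assumption about how the variable is parsed.

```typescript
interface Settings {
  enablePhotonRuntime?: boolean;
}

function photonRuntimeEnabled(
  env: Record<string, string | undefined>,
  settings: Settings,
  ignoreSettingsFile = false, // true models DXT / client bundles
): boolean {
  // 1. The env var overrides everything else.
  const raw = env.NCP_ENABLE_PHOTON_RUNTIME;
  if (raw !== undefined) return raw.toLowerCase() === "true";

  // 2. CLI / npm installs consult ~/.ncp/settings.json.
  if (!ignoreSettingsFile && settings.enablePhotonRuntime !== undefined) {
    return settings.enablePhotonRuntime;
  }

  // 3. The runtime is enabled by default.
  return true;
}

// CLI install with the runtime switched off in settings.json:
console.log(photonRuntimeEnabled({}, { enablePhotonRuntime: false }));

// Same settings, but an ad-hoc env override turns it back on:
console.log(
  photonRuntimeEnabled({ NCP_ENABLE_PHOTON_RUNTIME: "true" }, { enablePhotonRuntime: false }),
);
```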

Import from Anywhere

🛟 Troubleshooting

Import Issues

AI Not Using Tools

Check connection: ncp list (should show your MCPs)

Test discovery: ncp find "your query"

Validate config: Ensure your AI client points to the ncp command

Performance Issues

🌓 Why We Built This

Like Yin and Yang, everything relies on the balance of things.

Compute gives us precision and certainty. AI gives us creativity and probability.

We believe breakthrough products emerge when you combine these forces in the right ratio.

How NCP embodies this balance:

| What NCP Does | AI (Creativity) | Compute (Precision) | The Balance |
|---|---|---|---|
| Tool Discovery | Understands "read a file" semantically | Routes to exact tool deterministically | Natural request → Precise execution |
| Orchestration | Flexible to your intent | Reliable tool execution | Natural flow → Certain outcomes |
| Health Monitoring | Adapts to patterns | Monitors connections, auto-failover | Smart adaptation → Reliable uptime |

Neither pure AI (too unpredictable) nor pure compute (too rigid).

Your AI stays creative. NCP handles the precision.

📚 Deep Dive: How It Works

Want the technical details? Token analysis, architecture diagrams, and performance benchmarks:

Learn about:

Vector similarity search algorithms

N-to-1 orchestration architecture

Real-world token usage comparisons

Health monitoring and failover systems

🤝 Contributing

Help make NCP even better:

🐛 Bug reports: GitHub Issues

💡 Feature requests: GitHub Discussions

🔄 Pull requests: Contributing Guide

📄 License

Elastic License 2.0 - Full License

TLDR: Free for all use including commercial. Cannot be offered as a hosted service to third parties.