The Android UI Assist MCP Server enables AI agents to analyze and provide feedback on Android app UI in real-time during development. It captures live screenshots from connected Android devices and emulators, providing visual context to AI agents like Claude Desktop, GitHub Copilot, and Gemini CLI.

Key capabilities:

Real-time UI Analysis: Capture live screenshots from running Android devices and emulators with device targeting support

Device Management: List all connected Android devices and emulators with detailed status information

Multi-Platform Support: Works with Expo, React Native, Flutter, and native Android development workflows

AI-Powered Development: Enables AI agents to provide instant visual feedback, code generation, and UI improvement suggestions

Development Integration: Seamlessly works with hot reload features and development servers for iterative refinement

Testing & Quality Assurance: Supports UI testing, visual regression analysis, accessibility testing, and cross-platform consistency checking

Flexible Deployment: Available via NPM, source installation, and Docker with multiple integration options

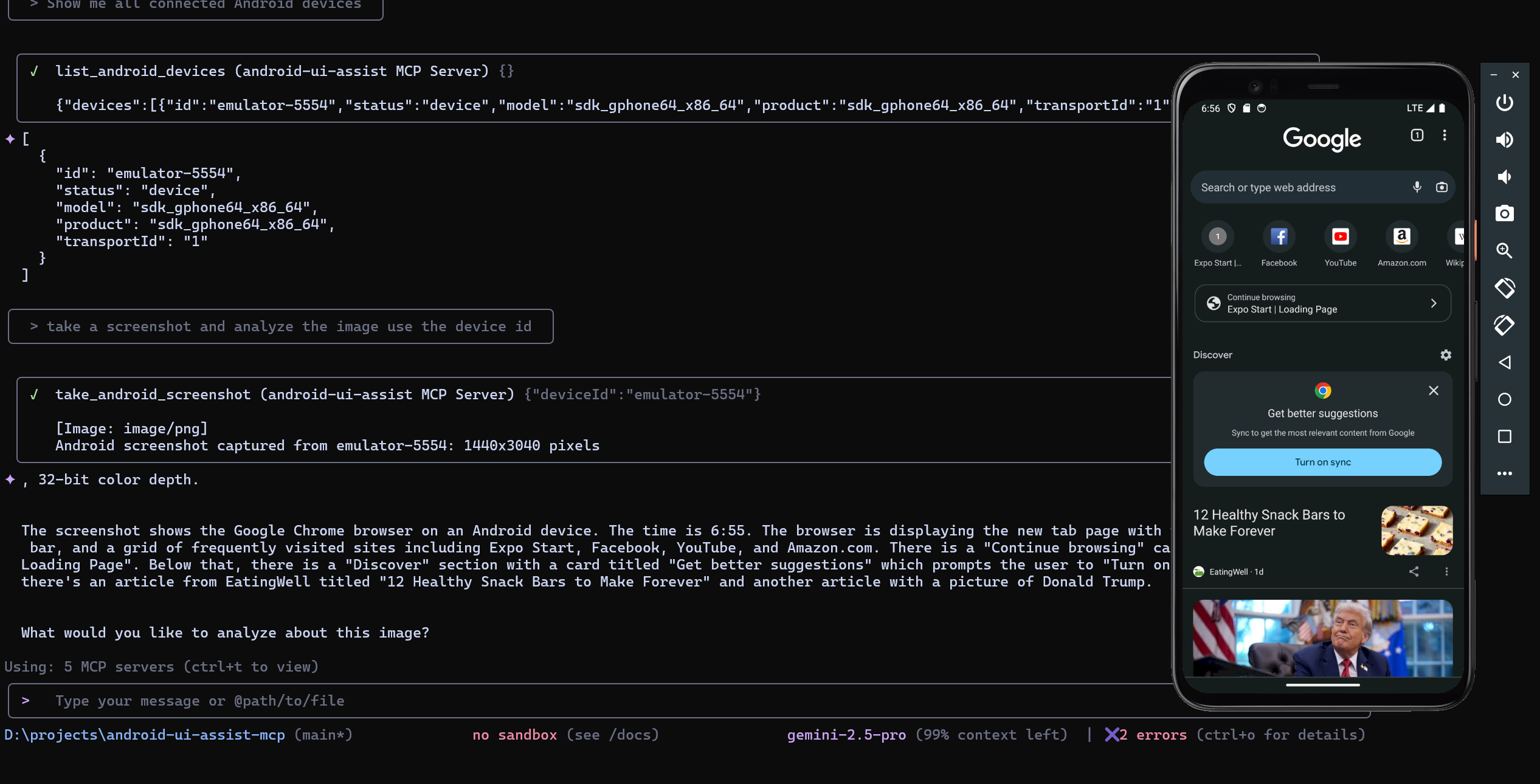

Enables AI agents to capture screenshots and manage connected Android devices and emulators through ADB commands for UI analysis and automation.

Click on "Install Server".

Wait a few minutes for the server to deploy. Once ready, it will show a "Started" state.

In the chat, type @ followed by the MCP server name and your instructions, e.g., "@Android UI Assist MCP Server capture a screenshot of my app's home screen from the emulator"

That's it! The server will respond to your query, and you can continue using it as needed.


Real-Time Android UI Development with AI Agents - MCP Server

Model Context Protocol server that enables AI coding agents to see and analyze your Android app UI in real-time during development. Perfect for iterative UI refinement with Expo, React Native, Flutter, and native Android development workflows. Connect your AI agent to your running app and get instant visual feedback on UI changes.

Keywords: android development ai agent, real-time ui feedback, expo development tools, react native ui assistant, flutter development ai, android emulator screenshot, ai powered ui testing, visual regression testing ai, mobile app development ai, iterative ui development, ai code assistant android

Quick Demo

See the MCP server in action with real-time Android UI analysis:

| MCP Server Status | Live Development Workflow |
|---|---|
| Server ready with 2 tools available | AI agent analyzing Android UI in real-time |

Features

Real-Time Development Workflow

Live screenshot capture during app development with Expo, React Native, Flutter

Instant visual feedback for AI agents on UI changes and iterations

Seamless integration with development servers and hot reload workflows

Support for both physical devices and emulators during active development

AI Agent Integration

MCP protocol support for Claude Desktop, GitHub Copilot, and Gemini CLI

Enable AI agents to see your app UI and provide contextual suggestions

Perfect for iterative UI refinement and design feedback loops

Visual context for AI-powered code generation and UI improvements

Developer Experience

Zero-configuration setup with running development environments

Docker deployment for team collaboration and CI/CD pipelines

Comprehensive error handling with helpful development suggestions

Secure stdio communication with timeout management


AI Agent Configuration

This MCP server works with AI agents that support the Model Context Protocol. Configure your preferred agent to enable real-time Android UI analysis:

Claude Code

Claude Desktop

Add to %APPDATA%\Claude\claude_desktop_config.json (Windows) or ~/Library/Application Support/Claude/claude_desktop_config.json (macOS):
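Claude Desktop registers MCP servers under a top-level mcpServers object, where each entry gives a command and its arguments. The TypeScript sketch below merges one entry into an existing config without dropping other servers or settings. The server key android-ui-assist, the package name android-ui-assist-mcp, and launching through npx are assumptions for illustration, not confirmed names; substitute the values from your installation.

```typescript
// Sketch: merge this server into a parsed claude_desktop_config.json object.
// ASSUMPTION: "android-ui-assist" and "android-ui-assist-mcp" are placeholder
// names, and the server is launched through npx; adjust to your installation.

interface McpServerEntry {
  command: string;
  args: string[];
  env?: Record<string, string>;
}

type ClaudeDesktopConfig = {
  mcpServers?: Record<string, McpServerEntry>;
  [key: string]: unknown;
};

function addMcpServer(
  config: ClaudeDesktopConfig,
  name: string,
  entry: McpServerEntry,
): ClaudeDesktopConfig {
  // Copy existing settings and servers so nothing else in the file is lost.
  return {
    ...config,
    mcpServers: { ...(config.mcpServers ?? {}), [name]: entry },
  };
}

const updated = addMcpServer({}, "android-ui-assist", {
  command: "npx",
  args: ["-y", "android-ui-assist-mcp"], // -y skips npx's install confirmation
});

// Paste the printed JSON into claude_desktop_config.json, then restart Claude Desktop.
console.log(JSON.stringify(updated, null, 2));
```

Merging rather than overwriting matters because the same file usually holds entries for other MCP servers.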

GitHub Copilot (VS Code)

Add to .vscode/settings.json:

Gemini CLI

Installation

Package Manager Installation

Source Installation

Installation Verification

After installation, verify the package is available:

Development Workflow

This MCP server transforms how you develop Android UIs by giving AI agents real-time visual access to your running application. Here's the typical workflow:

Start Your Development Environment: Launch Expo, React Native Metro, Flutter, or Android Studio with your app running

Connect the MCP Server: Configure your AI agent (Claude, Copilot, Gemini) to use this MCP server

Iterative Development: Ask your AI agent to analyze the current UI, suggest improvements, or help implement changes

Real-Time Feedback: The AI agent takes screenshots to see the results of code changes immediately

Refine and Repeat: Continue the conversation with visual context for better UI development

Perfect for:

Expo development with live preview and hot reload

React Native development with Metro bundler

Flutter development with hot reload

Native Android development with Apply Changes (formerly Instant Run)

UI testing and visual regression analysis

Collaborative design reviews with AI assistance

Accessibility testing with visual context

Cross-platform UI consistency checking

Prerequisites

| Component | Version | Installation |
|---|---|---|
| Node.js | 18.0+ | |
| npm | 8.0+ | Included with Node.js |
| ADB | Latest | |

Android Device Setup

Enable Developer Options: Settings > About Phone > Tap "Build Number" 7 times

Enable USB Debugging: Settings > Developer Options > USB Debugging

Verify connection:

adb devices
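adb devices prints a header line followed by one line per device, with the serial and the state separated by a tab; only devices in the device state are ready for screenshots. To illustrate reading that output programmatically, here is a minimal TypeScript parser. parseAdbDevices is a hypothetical helper for this sketch, not part of the server's API, and it handles the plain adb devices format only (adb devices -l pads columns with spaces instead).

```typescript
// Hypothetical helper: parse plain `adb devices` output into serial/state pairs.
// Each device line looks like "<serial>\t<state>", e.g. "emulator-5554\tdevice".

interface AdbDevice {
  serial: string;
  state: string; // "device" (ready), "unauthorized", "offline", ...
}

function parseAdbDevices(output: string): AdbDevice[] {
  const devices: AdbDevice[] = [];
  for (const rawLine of output.split(/\r?\n/)) {
    const line = rawLine.trim();
    // Skip blank lines, the header, and daemon notices such as
    // "* daemon not running; starting now at tcp:5037".
    if (line === "" || line.startsWith("List of devices") || line.startsWith("*")) {
      continue;
    }
    const tab = line.indexOf("\t");
    if (tab === -1) continue; // not a device line
    devices.push({ serial: line.slice(0, tab), state: line.slice(tab + 1).trim() });
  }
  return devices;
}

const sample = "List of devices attached\nemulator-5554\tdevice\nR58M123ABC\tunauthorized\n";
const ready = parseAdbDevices(sample).filter((d) => d.state === "device");
console.log(ready.map((d) => d.serial).join(", ")); // prints "emulator-5554"
```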

Development Environment Setup

Expo Development

Start your Expo development server:

Open your app on a connected device or emulator

Ensure your device appears in the output of adb devices

Your AI agent can now take screenshots during development

React Native Development

Start Metro bundler:

Run on Android:

Enable hot reload for instant feedback with AI analysis

Flutter Development

Start Flutter in debug mode:

Use hot reload (r) and hot restart (R) while getting AI feedback

The AI agent can capture UI states after each change

Native Android Development

Open project in Android Studio

Run the app, using Apply Changes (the successor to Instant Run) for quick iterations

Connect device or start emulator

Enable AI agent integration for real-time UI analysis

Docker Deployment

Docker Compose

Configure AI platform for Docker:

Manual Docker Build

Available Tools

| Tool | Description | Parameters |
|---|---|---|
| take_android_screenshot | Captures device screenshot | |
| list_android_devices | Lists connected devices | None |

Tool Schemas

take_android_screenshot

list_android_devices
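MCP servers describe each tool to clients with a name, a description, and a JSON Schema inputSchema, and clients invoke a tool with a JSON-RPC 2.0 tools/call request. The sketch below shows that general shape for the two tools named above. The actual schemas are not reproduced here, so the optional deviceId parameter on take_android_screenshot is an assumption based on the documented device-targeting support, and buildToolCall is a hypothetical helper.

```typescript
// Hypothetical tool definitions in the shape MCP uses for `tools/list`.
// ASSUMPTION: the parameter name "deviceId" is illustrative, not confirmed.

interface JsonSchemaObject {
  type: "object";
  properties: Record<string, { type: string; description?: string }>;
  required?: string[];
}

interface ToolDefinition {
  name: string;
  description: string;
  inputSchema: JsonSchemaObject;
}

const tools: ToolDefinition[] = [
  {
    name: "take_android_screenshot",
    description: "Captures device screenshot",
    inputSchema: {
      type: "object",
      properties: {
        deviceId: { type: "string", description: "Optional device serial, e.g. emulator-5554" },
      },
    },
  },
  {
    name: "list_android_devices",
    description: "Lists connected devices",
    inputSchema: { type: "object", properties: {} },
  },
];

// Builds a JSON-RPC 2.0 `tools/call` request for one of the tools above.
function buildToolCall(id: number, name: string, args: Record<string, unknown> = {}) {
  if (!tools.some((t) => t.name === name)) {
    throw new Error(`Unknown tool: ${name}`);
  }
  return {
    jsonrpc: "2.0" as const,
    id,
    method: "tools/call" as const,
    params: { name, arguments: args },
  };
}

console.log(JSON.stringify(buildToolCall(1, "take_android_screenshot", { deviceId: "emulator-5554" })));
```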

Usage Examples

Example: AI agent listing devices, capturing screenshots, and providing detailed UI analysis in real-time
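The flow in this example, listing devices and then capturing from one of them, needs a rule for which device a screenshot comes from when several are connected (for instance emulator-5554 alongside a physical phone). One plausible rule, sketched below under the assumption that the screenshot tool accepts an optional device serial, mirrors adb's own behavior: use the requested serial if that device is ready, fall back to the only ready device, and refuse to guess when several are ready, just as adb fails with "more than one device/emulator". selectTargetDevice is hypothetical, not the server's documented logic.

```typescript
// Hypothetical device-selection rule; assumes the screenshot tool accepts an
// optional device serial. Not the server's documented behavior.

interface AdbDevice {
  serial: string;
  state: string; // "device" means connected, authorized, and ready
}

function selectTargetDevice(devices: AdbDevice[], requested?: string): string {
  if (requested !== undefined) {
    const match = devices.find((d) => d.serial === requested);
    if (!match) throw new Error(`Device ${requested} is not connected`);
    if (match.state !== "device") {
      throw new Error(`Device ${requested} is ${match.state}, not ready`);
    }
    return match.serial;
  }
  const ready = devices.filter((d) => d.state === "device");
  if (ready.length === 0) throw new Error("No ready devices; run adb devices to check");
  if (ready.length > 1) {
    // Same stance as adb's "more than one device/emulator" error: never guess.
    const serials = ready.map((d) => d.serial).join(", ");
    throw new Error(`Several devices are ready (${serials}); specify one`);
  }
  return ready[0].serial;
}

const connected: AdbDevice[] = [
  { serial: "emulator-5554", state: "device" },
  { serial: "R58M123ABC", state: "unauthorized" },
];
console.log(selectTargetDevice(connected)); // prints "emulator-5554"
```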

Real-Time UI Development

With your development environment running (Expo, React Native, Flutter, etc.), interact with your AI agent:

Initial Analysis:

"Take a screenshot of my current app UI and analyze the layout"

"Show me the current state of my login screen and suggest improvements"

"Capture the app and check for accessibility issues"

Iterative Development:

"I just changed the button color, take another screenshot and compare"

"Help me adjust the spacing - take a screenshot after each change"

"Take a screenshot and tell me if the new navigation looks good"

Cross-Platform Testing:

"Capture screenshots from both my phone and tablet emulator"

"Show me how the UI looks on device emulator-5554 vs my physical device"

Development Debugging:

"List all connected devices and their status"

"Take a screenshot from the specific emulator running my debug build"

"Capture the current error state and help me fix the UI issue"

Troubleshooting

ADB Issues

ADB not found: Verify ADB is installed and in PATH

No devices: Check USB connection and debugging authorization

Device unauthorized: Disconnect and reconnect USB, then accept the USB debugging authorization prompt on the device

Screenshot failed: Ensure device is unlocked and properly connected

Connection Issues

Verify that adb devices shows your device with "device" status

Restart the ADB server:

adb kill-server && adb start-server

Check USB debugging permissions on the device
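The fixes in this section key off the state column of adb devices. A small TypeScript lookup, sketched below, maps each state to the matching step from this section. hintForState and diagnose are hypothetical helpers; the states they recognize (device, unauthorized, offline, and the Linux-specific "no permissions" message) are ones adb reports, but the hint wording is this sketch's own.

```typescript
// Hypothetical helper: map an `adb devices` state to a troubleshooting step.
function hintForState(state: string): string {
  if (state === "device") return "Ready: screenshots should work";
  if (state === "unauthorized") {
    return "Unlock the device and accept the USB debugging prompt; reconnect USB if none appears";
  }
  if (state === "offline") {
    return "Restart the ADB server: adb kill-server && adb start-server";
  }
  if (state.startsWith("no permissions")) {
    // Typically Linux: the user cannot access the USB device (udev rules).
    return "Check USB debugging permissions for this device";
  }
  return `Unrecognized state "${state}": reconnect the device and rerun adb devices`;
}

// Takes serial -> state pairs and returns one hint per device.
function diagnose(states: Record<string, string>): string[] {
  const serials = Object.keys(states);
  if (serials.length === 0) {
    return ["No devices: check the USB connection and debugging authorization"];
  }
  return serials.map((serial) => `${serial}: ${hintForState(states[serial])}`);
}

for (const line of diagnose({ "emulator-5554": "device", R58M123ABC: "unauthorized" })) {
  console.log(line);
}
```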

Development

Build Commands

Project Structure

Performance

5-second timeout on ADB operations

In-memory screenshot processing

Stdio communication for security

Minimal privilege execution
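The timeout and in-memory points can be illustrated together. A common way to grab a screenshot without writing a file is adb exec-out screencap -p, which streams the PNG to stdout; Node's execFile can enforce the 5-second limit through its timeout option. The TypeScript sketch below assumes that approach; captureScreenshot, buildScreencapArgs, and isPng are hypothetical names, and the server's real implementation may differ. The returned object follows the MCP image content shape (type, base64 data, mimeType).

```typescript
import { execFile } from "node:child_process";

// Sketch only, assuming `adb exec-out screencap -p` plus Node's execFile;
// not the server's actual source. All function names are hypothetical.
const ADB_TIMEOUT_MS = 5_000; // documented 5-second limit on ADB operations
const PNG_SIGNATURE = [0x89, 0x50, 0x4e, 0x47, 0x0d, 0x0a, 0x1a, 0x0a];

interface ImageContent {
  type: "image";
  data: string; // base64-encoded PNG
  mimeType: "image/png";
}

// `exec-out` streams screencap's raw PNG bytes to stdout without line-ending mangling.
function buildScreencapArgs(serial?: string): string[] {
  const target = serial ? ["-s", serial] : [];
  return [...target, "exec-out", "screencap", "-p"];
}

function isPng(data: Uint8Array): boolean {
  return data.length >= PNG_SIGNATURE.length && PNG_SIGNATURE.every((byte, i) => data[i] === byte);
}

function captureScreenshot(serial?: string): Promise<ImageContent> {
  return new Promise((resolve, reject) => {
    execFile(
      "adb",
      buildScreencapArgs(serial),
      // The default maxBuffer (1 MiB) is too small for many screenshots.
      { encoding: "buffer", timeout: ADB_TIMEOUT_MS, maxBuffer: 64 * 1024 * 1024 },
      (error, stdout) => {
        if (error) {
          // On timeout, execFile kills adb and reports the error here.
          reject(new Error(`adb screencap failed: ${error.message}`));
          return;
        }
        if (!isPng(stdout)) {
          reject(new Error("adb output is not a PNG; is the device unlocked and authorized?"));
          return;
        }
        // The image stays in memory: stdout is base64-encoded directly, never written to disk.
        resolve({ type: "image", data: stdout.toString("base64"), mimeType: "image/png" });
      },
    );
  });
}
```

For example, captureScreenshot("emulator-5554").then((img) => console.log(img.data.length)) logs the size of the base64 payload; if adb runs past five seconds, execFile kills the process and the promise rejects. Using execFile rather than exec also avoids spawning a shell, which fits the minimal-privilege goal.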

License

MIT License - see LICENSE file for details.