Enables AI agents built with LangChain to persist and retrieve semantic memories, text, and PDF documents for long-term context retention.

Provides a durable memory layer for stateful agents, allowing them to recall chat histories and relevant context across different interactions or sessions.

Supports private, fully local AI pipelines by providing a semantic memory store for local LLMs running via Ollama.

Click on "Install Server".

Wait a few minutes for the server to deploy. Once ready, it will show a "Started" state.

In the chat, type @ followed by the MCP server name and your instructions, e.g., "@Eternity MCP Search for my notes on the project deadline and requirements."

That's it! The server will respond to your query, and you can continue using it as needed.


🧠 Eternity MCP

Your Eternal Second Brain, Running Locally.

Eternity MCP is a lightweight, privacy-focused memory server designed to provide long-term memory for LLMs and AI agents using the Model Context Protocol (MCP).

It combines structured storage (SQLite) with semantic vector search (ChromaDB), enabling agents to persist and retrieve text, PDF documents, and chat histories across sessions using natural language queries.

Built to run fully locally, Eternity integrates seamlessly with MCP-compatible clients, LangChain, LangGraph, and custom LLM pipelines, giving agents a durable and private memory layer.
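To make the "structured storage plus vector search" idea above concrete, here is a minimal sketch of the pattern, not Eternity's actual code. It keeps the text in an in-memory SQLite table and ranks entries with a toy bag-of-words cosine similarity that stands in for ChromaDB and a real embedding model. The names `TinyMemory`, `embed`, and `cosine` are hypothetical and exist only for illustration.

```python
import math
import sqlite3
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: bag-of-words term counts."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class TinyMemory:
    """Hypothetical illustration: SQLite holds the text, a dict holds vectors."""

    def __init__(self):
        self.db = sqlite3.connect(":memory:")
        self.db.execute(
            "CREATE TABLE memories (id INTEGER PRIMARY KEY, content TEXT)"
        )
        self.vectors = {}  # row id -> vector; stand-in for a vector index

    def add(self, content):
        # Store the raw text in SQLite and its vector under the same row id.
        cur = self.db.execute(
            "INSERT INTO memories (content) VALUES (?)", (content,)
        )
        self.vectors[cur.lastrowid] = embed(content)
        return cur.lastrowid

    def search(self, query, k=3):
        # Rank stored vectors by similarity, then fetch the text from SQLite.
        q = embed(query)
        ranked = sorted(
            self.vectors,
            key=lambda row_id: cosine(q, self.vectors[row_id]),
            reverse=True,
        )[:k]
        return [
            self.db.execute(
                "SELECT content FROM memories WHERE id = ?", (row_id,)
            ).fetchone()[0]
            for row_id in ranked
        ]
```

Note that word overlap is only a crude proxy: a real embedding model matches by meaning, which is what lets a query find relevant memories that share no exact keywords with it.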

🚀 Why Eternity?

Building agents that "remember" is hard. Most solutions rely on expensive cloud vector databases or complex setups. Eternity solves this by being:

🔒 Private & Local: Runs entirely on your machine. No data leaves your network.

⚡ Fast & Lightweight: Built on FastAPI and ChromaDB.

🔌 Agent-Ready: Perfect for LangGraph, LangChain, or direct LLM integration.

📄 Multi-Modal: Ingests raw text and PDF documents automatically.

🔎 Semantic Search: Finds matches by meaning, not just keywords.

📦 Installation

You can install Eternity directly from PyPI (coming soon) or from source:

🛠️ Usage

1. Start the Server

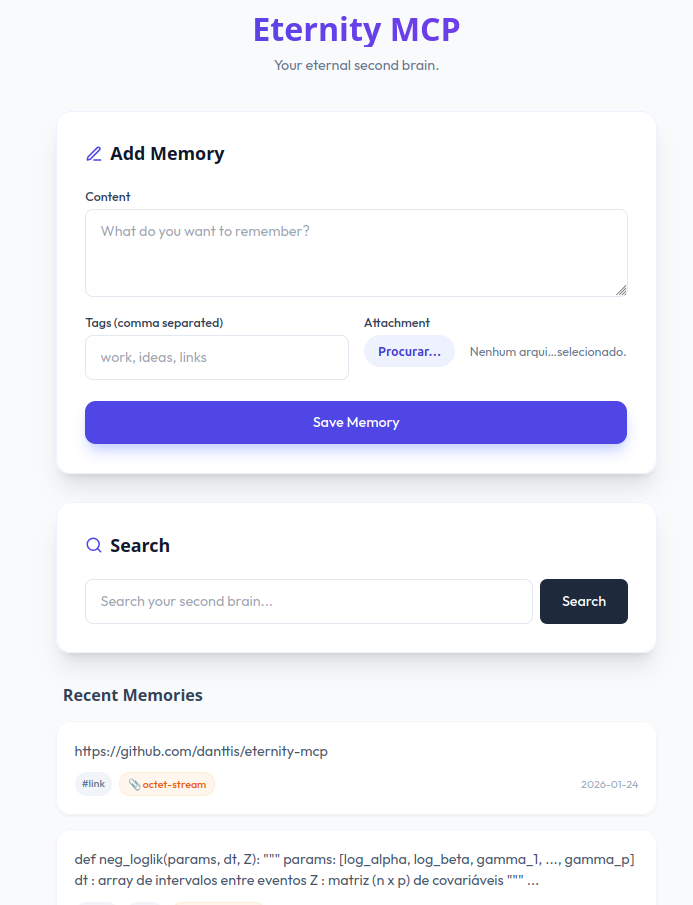

Run the server in a terminal. It will host the API and the Memory UI.

Server runs at

2. Client Usage (Python)

You can interact with Eternity using simple HTTP requests.
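As a rough illustration of what such requests could look like, the sketch below uses only the Python standard library. Everything specific in it is an assumption rather than Eternity's documented API: the base URL and port, the `/add` and `/search` paths, the `content` and `q` parameter names, and the JSON response format are placeholders. Check the API Endpoints section and the address your server prints on startup, then adjust accordingly.

```python
import json
import urllib.parse
import urllib.request

# Assumption: replace with the address your server reports on startup.
BASE_URL = "http://localhost:8000"


def build_add_request(content, base_url=BASE_URL):
    """Build a POST request that stores a piece of text.

    The '/add' path and 'content' field are hypothetical placeholders.
    """
    data = urllib.parse.urlencode({"content": content}).encode("utf-8")
    return urllib.request.Request(f"{base_url}/add", data=data, method="POST")


def build_search_request(query, base_url=BASE_URL):
    """Build a GET request for a semantic search.

    The '/search' path and 'q' parameter are hypothetical placeholders.
    """
    qs = urllib.parse.urlencode({"q": query})
    return urllib.request.Request(f"{base_url}/search?{qs}", method="GET")


def send(request, timeout=10):
    """Send a prepared request and decode the body, assuming it is JSON."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

With the server running, usage would look like `send(build_add_request("Project deadline is Friday"))` followed by `send(build_search_request("project deadline"))`. Building the request separately from sending it makes the URL and payload easy to inspect before anything goes over the wire.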

3. Integration with LangGraph/AI Agents

Eternity shines when connected to an LLM. Here is a simple pattern for an agent with long-term memory:

Recall: Before answering, search Eternity for context.

Generate: Feed the retrieved context to the LLM.

Memorize: Save the useful parts of the interaction back to Eternity.
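The three steps above can be sketched as a single function. This is a minimal outline, not the project's own integration code: `answer_with_memory` and its `recall`, `generate`, and `memorize` arguments are hypothetical names. The callables are passed in so the same loop works with whatever memory client and LLM you use.

```python
from typing import Callable, List


def answer_with_memory(
    question: str,
    recall: Callable[[str], List[str]],
    generate: Callable[[str], str],
    memorize: Callable[[str], None],
) -> str:
    """Run one Recall -> Generate -> Memorize turn."""
    # Recall: look up context related to the question.
    context = recall(question)

    # Generate: give the LLM the retrieved context alongside the question.
    context_block = "\n".join(f"- {item}" for item in context)
    prompt = f"Context:\n{context_block}\n\nQuestion: {question}"
    answer = generate(prompt)

    # Memorize: save the exchange so later turns can recall it.
    memorize(f"Q: {question}\nA: {answer}")
    return answer


if __name__ == "__main__":
    # In-memory stubs stand in for the memory server and the LLM.
    store: List[str] = ["The project deadline is Friday."]
    reply = answer_with_memory(
        "When is the deadline?",
        recall=lambda q: list(store),
        generate=lambda prompt: "Friday.",
        memorize=store.append,
    )
    print(reply)
    print(len(store))
```

In a real agent, `recall` and `memorize` would call the memory server and `generate` would call the model. This sketch saves the whole exchange, so a production version would likely filter for the useful parts first.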

(See

🔌 API Endpoints

| Method | Endpoint | Description |
| --- | --- | --- |
| | | Web UI to view recent memories. |
| | | Add text or file (PDF). Params: |
| | | Semantic search. Params: |

🤝 Contributing

Contributions are welcome! Please feel free to submit a Pull Request.

📜 License

This project is licensed under the MIT License - see the LICENSE file for details.

🌟 Inspiration

This project was inspired by Supermemory. We admire their vision for a second brain and their open-source spirit.

Created by with a little help from AI :)