Local AI with Ollama, WebUI & MCP on Windows

A self-hosted AI stack combining Ollama for running language models, Open WebUI for user-friendly chat interaction, and MCP (Model Context Protocol) for exposing custom tools to the model. It offers full control, privacy, and flexibility without relying on the cloud.

This sample project provides an MCP-based tool server for managing employee leave balance, applications, and history. It is exposed via OpenAPI using mcpo for easy integration with Open WebUI or other OpenAPI-compatible clients.

🚀 Features

✅ Check employee leave balance

📆 Apply for leave on specific dates

📜 View leave history

🙋 Personalized greeting functionality
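The features above could map onto plain Python functions roughly as follows. This is a minimal sketch, not the project's main.py: the in-memory store, the sample balance, and every name used here (LeaveRecord, EMPLOYEES, get_leave_balance, apply_leave, get_leave_history, greet) are assumptions made for illustration. In the real server, functions like these would be registered as MCP tools and exposed over OpenAPI by mcpo.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class LeaveRecord:
    """Hypothetical per-employee record; the real data model may differ."""
    balance: int = 20  # assumed starting allowance
    history: list[str] = field(default_factory=list)


# Assumed in-memory store keyed by employee ID (sample data only).
EMPLOYEES: dict[str, LeaveRecord] = {
    "E001": LeaveRecord(balance=18, history=["2024-12-25", "2025-01-01"]),
}


def get_leave_balance(employee_id: str) -> str:
    """Report the remaining leave days for one employee."""
    record = EMPLOYEES.get(employee_id)
    if record is None:
        return f"Employee {employee_id} not found."
    return f"{employee_id} has {record.balance} leave days remaining."


def apply_leave(employee_id: str, dates: list[str]) -> str:
    """Deduct one day per requested date and append the dates to the history."""
    record = EMPLOYEES.get(employee_id)
    if record is None:
        return f"Employee {employee_id} not found."
    if len(dates) > record.balance:
        return f"Insufficient balance: requested {len(dates)}, available {record.balance}."
    record.balance -= len(dates)
    record.history.extend(dates)
    return f"Leave applied for {len(dates)} day(s). Remaining balance: {record.balance}."


def get_leave_history(employee_id: str) -> str:
    """List every date the employee has taken leave."""
    record = EMPLOYEES.get(employee_id)
    if record is None:
        return f"Employee {employee_id} not found."
    taken = ", ".join(record.history) if record.history else "no leave taken"
    return f"Leave history for {employee_id}: {taken}."


def greet(name: str) -> str:
    """Return a personalized greeting."""
    return f"Hello, {name}! How can I help with leave management today?"
```

Returning short human-readable strings rather than raw numbers keeps the tool output easy for the chat model to relay back to the user.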

📁 Project Structure

leave-manager/

├── main.py # MCP server logic for leave management

├── requirements.txt # Python dependencies for the MCP server

├── Dockerfile # Docker image configuration for the leave manager

├── docker-compose.yml # Docker Compose file to run leave manager and Open WebUI

└── README.md # Project documentation (this file)

📋 Prerequisites

Windows 10 or later (required for Ollama)

Docker Desktop for Windows (required for Open WebUI and MCP)

Install from: Docker Desktop for Windows

🛠️ Workflow

Install Ollama on Windows

Pull the deepseek-r1 model

Clone the repository and navigate to the project directory

Run the docker-compose.yml file to launch services

Install Ollama

➤ Windows

Download the Installer:

Visit Ollama Download and click Download for Windows to get OllamaSetup.exe.

Alternatively, download from Ollama GitHub Releases.

Run the Installer:

Execute OllamaSetup.exe and follow the installation prompts.

After installation, Ollama runs as a background service, accessible at: http://localhost:11434.

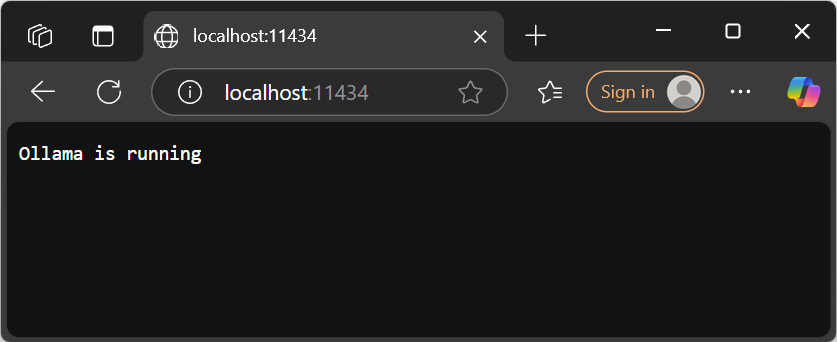

Verify in your browser; you should see:

Ollama is running

Start Ollama Server (if not already running):

ollama serve

Access the server at: http://localhost:11434.
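The browser check can also be scripted. The sketch below assumes the default address http://localhost:11434 and the plain-text banner "Ollama is running" described above; the function names are illustrative only.

```python
import urllib.error
import urllib.request


def is_ollama_banner(body: str) -> bool:
    """Return True if the body matches the banner shown in the browser."""
    return body.strip() == "Ollama is running"


def ollama_is_up(base_url: str = "http://localhost:11434", timeout: float = 2.0) -> bool:
    """Fetch the root URL and compare the reply against the expected banner."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as resp:
            return is_ollama_banner(resp.read().decode("utf-8"))
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or similar: treat the server as down.
        return False


if __name__ == "__main__":
    print("Ollama reachable:", ollama_is_up())
```

If this reports False, start the server with ollama serve and try again.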

Verify Installation

Check the installed version of Ollama:

ollama --version

Expected Output (your version number may differ):

ollama version 0.7.1

Pull the deepseek-r1 Model

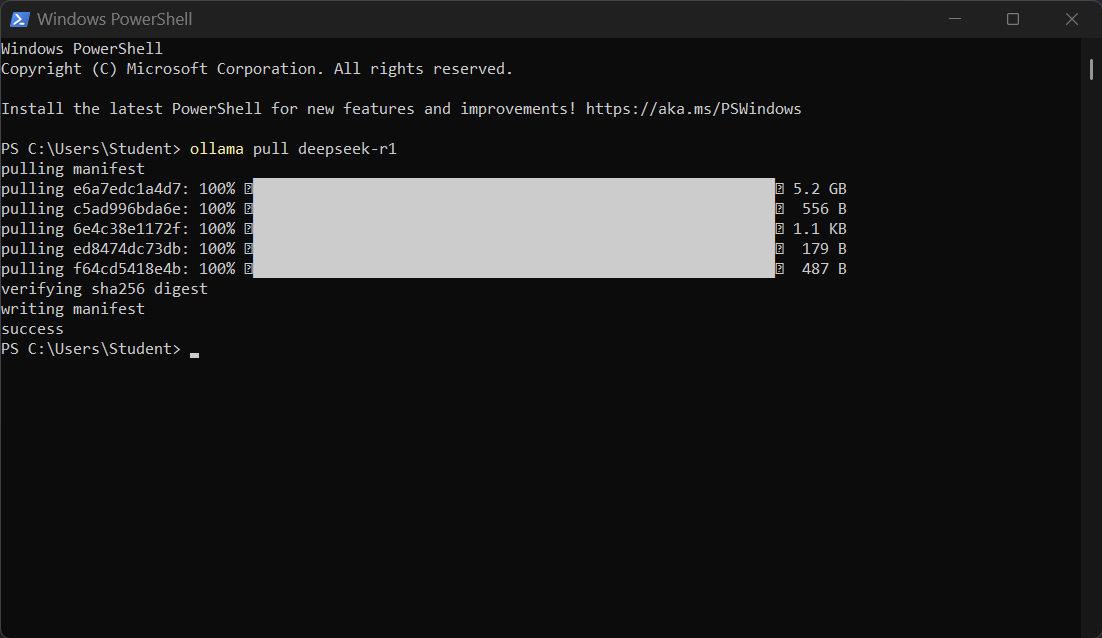

1. Pull the Default Model (7B):

Using PowerShell:

ollama pull deepseek-r1

To Pull Specific Versions (ollama run downloads the model first if it is not already installed, then opens a chat):

ollama run deepseek-r1:1.5b

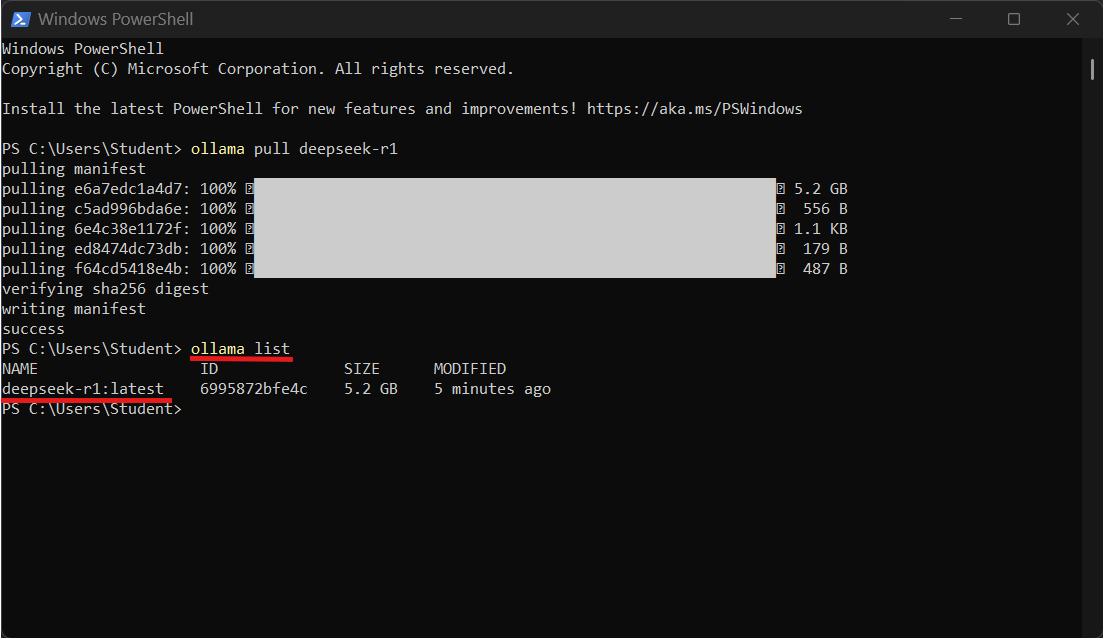

ollama run deepseek-r1:671b

2. List Installed Models:

ollama list

Expected Output:

NAME ID SIZE

deepseek-r1:latest xxxxxxxxxxxx X.X GB

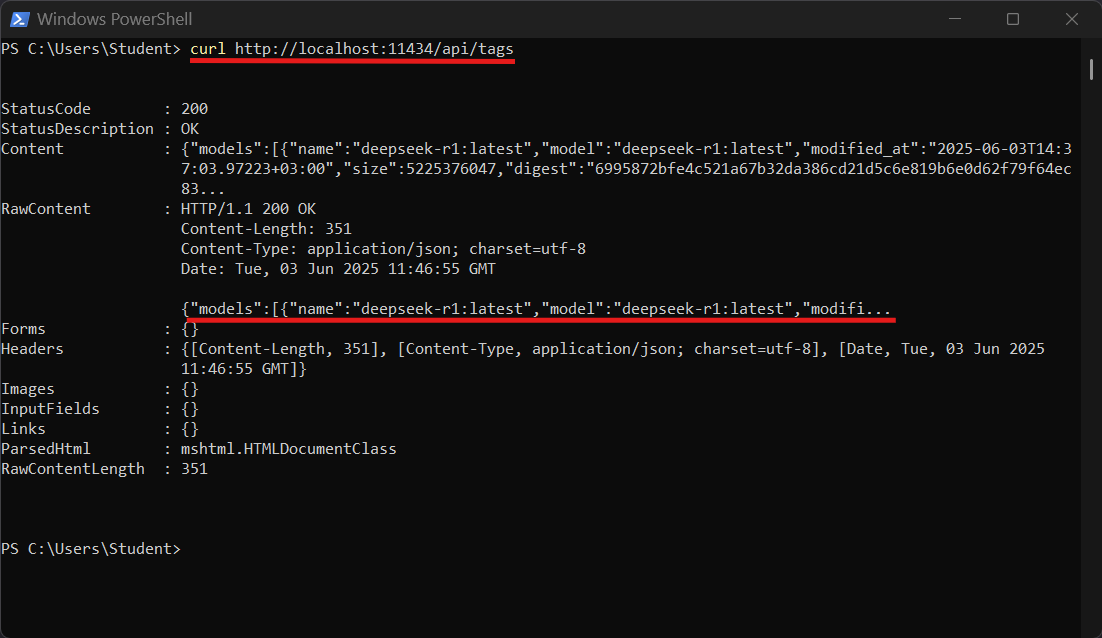

3. Alternative Check via API:

curl http://localhost:11434/api/tags

Expected Output:

A JSON response listing installed models, including deepseek-r1:latest.

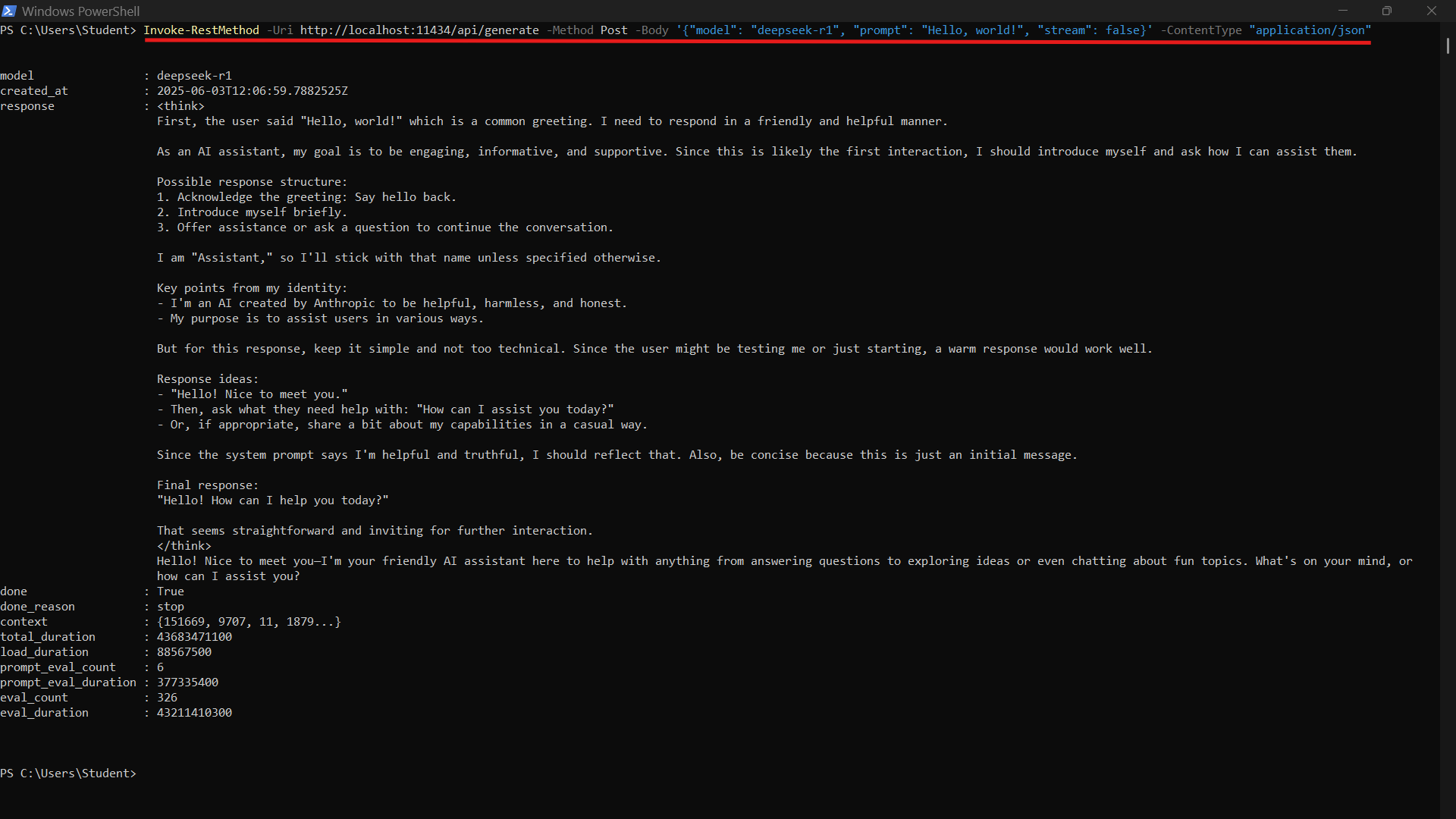
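To check the /api/tags reply programmatically, a small parser is enough. The sketch below assumes the reply is a JSON object with a "models" array whose entries carry a "name" field such as deepseek-r1:latest; SAMPLE is a trimmed, made-up payload, and the function names are illustrative.

```python
import json


def installed_models(tags_json: str) -> list[str]:
    """Extract the model names from an /api/tags JSON reply."""
    data = json.loads(tags_json)
    return [entry["name"] for entry in data.get("models", [])]


def has_model(tags_json: str, model: str = "deepseek-r1") -> bool:
    """True if any installed tag belongs to the model (e.g. deepseek-r1:latest)."""
    return any(
        name == model or name.startswith(model + ":")
        for name in installed_models(tags_json)
    )


# Trimmed sample payload for illustration; a real reply has more fields per model.
SAMPLE = '{"models": [{"name": "deepseek-r1:latest"}]}'

print(has_model(SAMPLE))  # prints True
```

Matching on the prefix before the colon means any pulled size (1.5b, 671b, latest) counts as the model being present.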

4. Test the API via PowerShell:

Invoke-RestMethod -Uri http://localhost:11434/api/generate -Method Post -Body '{"model": "deepseek-r1", "prompt": "Hello, world!", "stream": false}' -ContentType "application/json"

Expected Response: A JSON object containing the model's response to the "Hello, world!" prompt.

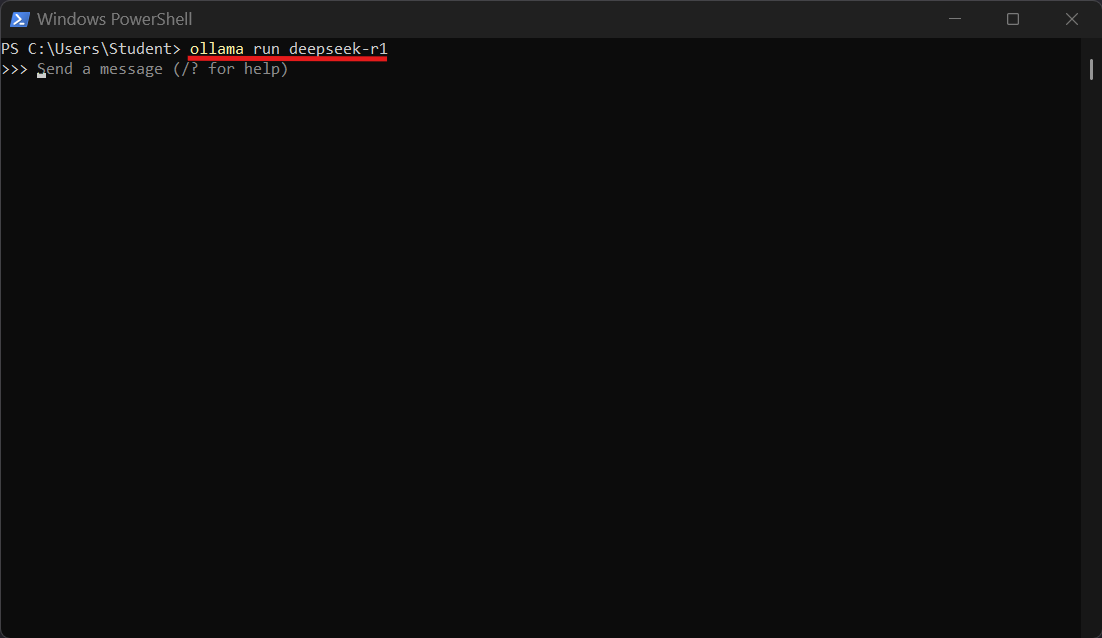

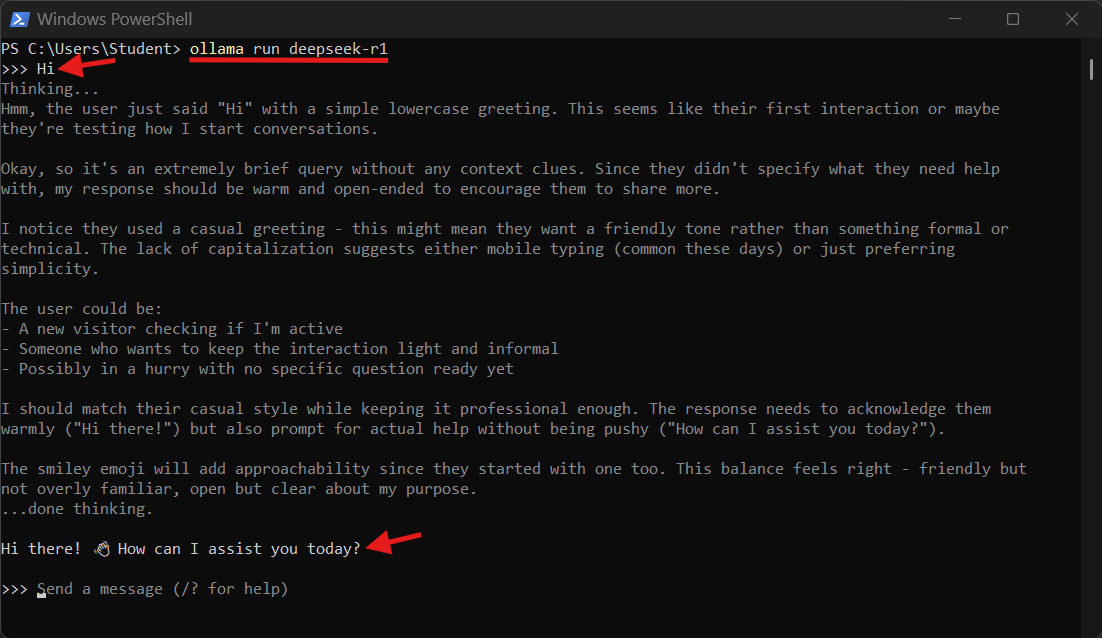

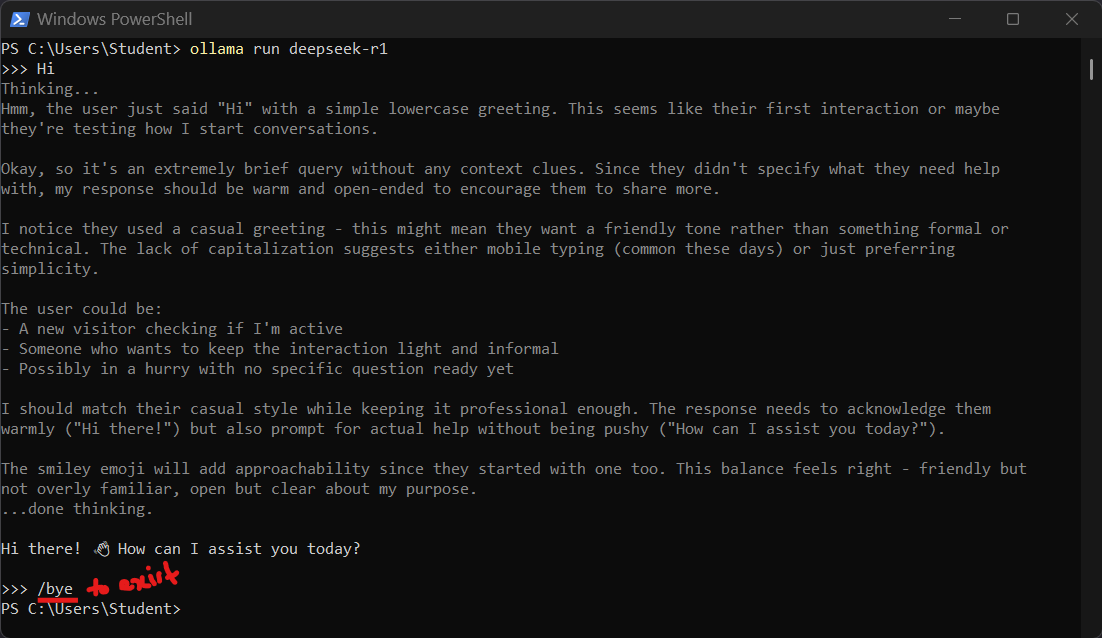
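The same request can be sent from Python using only the standard library. This is a sketch under the assumptions already used above: Ollama listens on localhost:11434, and a non-streaming reply carries the generated text in a "response" field. The helper names are illustrative.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(
    prompt: str, model: str = "deepseek-r1", url: str = OLLAMA_URL
) -> urllib.request.Request:
    """Build the POST request with the same JSON body as the PowerShell call."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_text(response_body: bytes) -> str:
    """Pull the generated text out of a non-streaming reply."""
    return json.loads(response_body)["response"]


def generate(prompt: str, timeout: float = 300.0) -> str:
    """Send the prompt to the local Ollama server and return the model's text."""
    with urllib.request.urlopen(build_generate_request(prompt), timeout=timeout) as resp:
        return extract_text(resp.read())
```

With Ollama running and the model pulled, print(generate("Hello, world!")) prints the model's reply. The generous timeout allows for the model being loaded into memory on the first call.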

5. Run and Chat with the Model via PowerShell:

ollama run deepseek-r1

This opens an interactive chat session with the deepseek-r1 model.

Type /bye and press Enter to exit the chat session.

🐳 Run Open WebUI and MCP Server with Docker Compose

Clone the Repository:

git clone https://github.com/ahmad-act/Local-AI-with-Ollama-Open-WebUI-MCP-on-Windows.git

cd Local-AI-with-Ollama-Open-WebUI-MCP-on-Windows

To launch both the MCP tool and Open WebUI locally (on Docker Desktop):

docker-compose up --build

This will:

Start the Leave Manager (MCP Server) tool on port 8000

Launch Open WebUI at http://localhost:3000

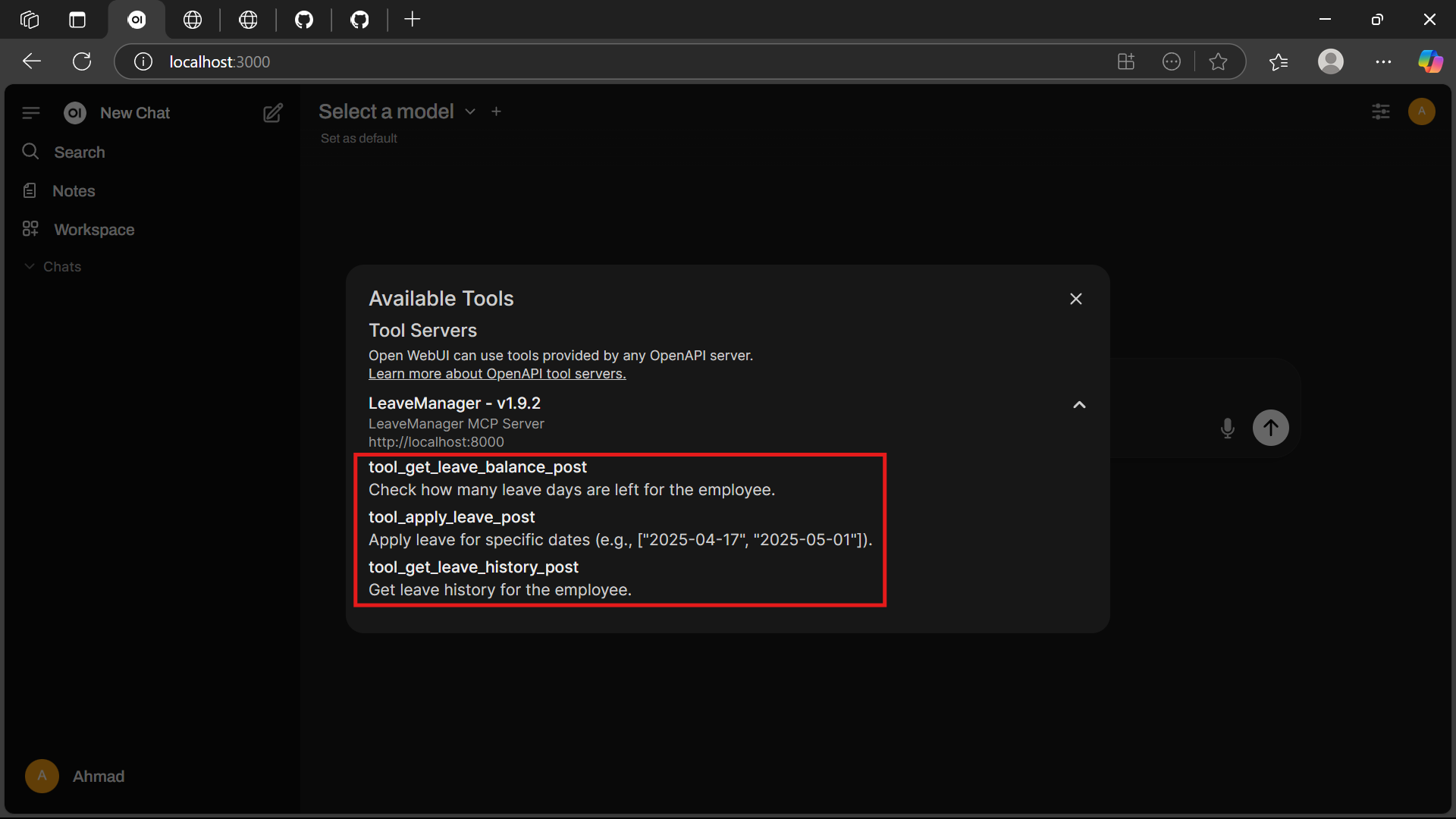

🌐 Add MCP Tools to Open WebUI

The MCP tools are exposed via the OpenAPI specification at: http://localhost:8000/openapi.json.

Open http://localhost:3000 in your browser.

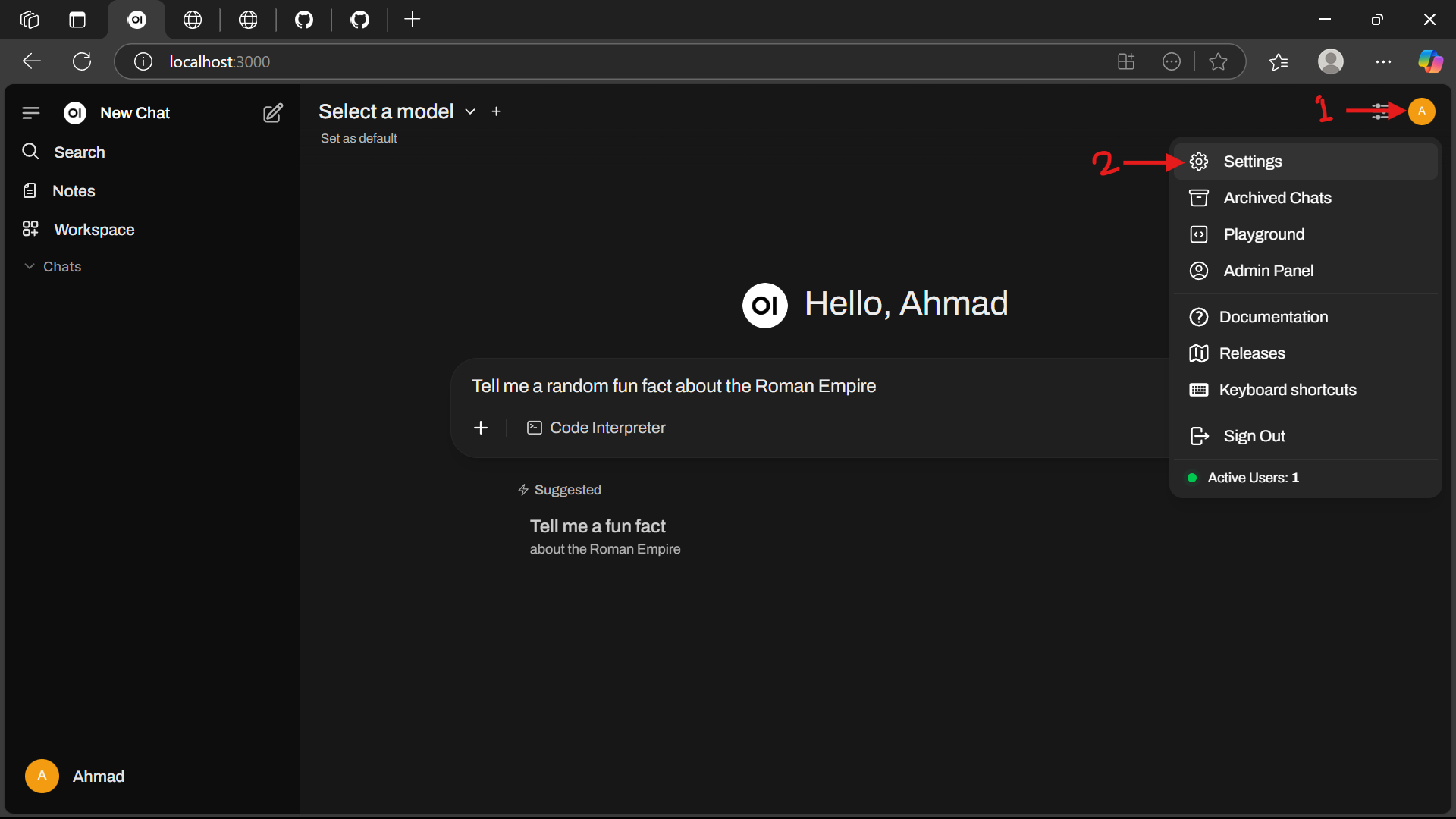

Click the Profile Icon and navigate to Settings.

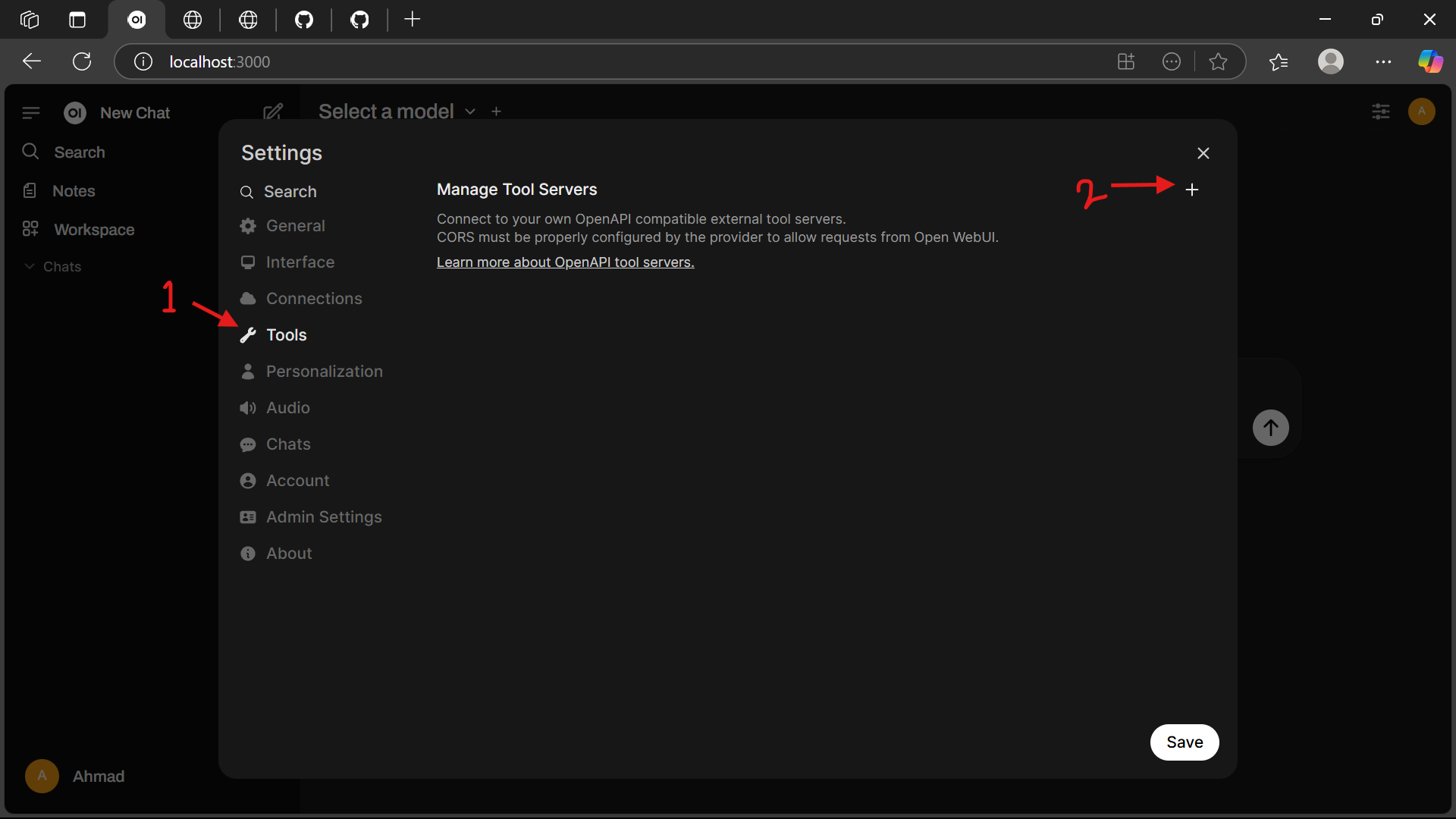

Select the Tools menu and click the Add (+) Button.

Add a new tool by entering the URL: http://localhost:8000/.
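Before adding the URL in Open WebUI, it can help to see which operations the OpenAPI document advertises. The sketch below walks the "paths" object of a spec that has already been loaded into a dict. SAMPLE_SPEC is a made-up stand-in for http://localhost:8000/openapi.json; its paths and summaries are hypothetical, and the real tool names depend on main.py.

```python
HTTP_METHODS = {"get", "post", "put", "patch", "delete", "head", "options"}


def list_operations(spec: dict) -> list[tuple[str, str, str]]:
    """Return sorted (METHOD, path, summary) tuples for every operation in the spec."""
    operations = []
    for path, item in spec.get("paths", {}).items():
        for method, operation in item.items():
            # Path items may also hold non-operation keys such as "parameters".
            if method.lower() in HTTP_METHODS:
                operations.append((method.upper(), path, operation.get("summary", "")))
    return sorted(operations)


# Hypothetical spec fragment for illustration only.
SAMPLE_SPEC = {
    "openapi": "3.1.0",
    "paths": {
        "/get_leave_balance": {"post": {"summary": "Get Leave Balance"}},
        "/greet": {"post": {"summary": "Greet"}},
    },
}

for method, path, summary in list_operations(SAMPLE_SPEC):
    print(method, path, summary)
```

To inspect the live server instead, load the document with json.load(urllib.request.urlopen("http://localhost:8000/openapi.json")) and pass the result to list_operations.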

💬 Example Prompts

Use these prompts in Open WebUI to interact with the Leave Manager tool:

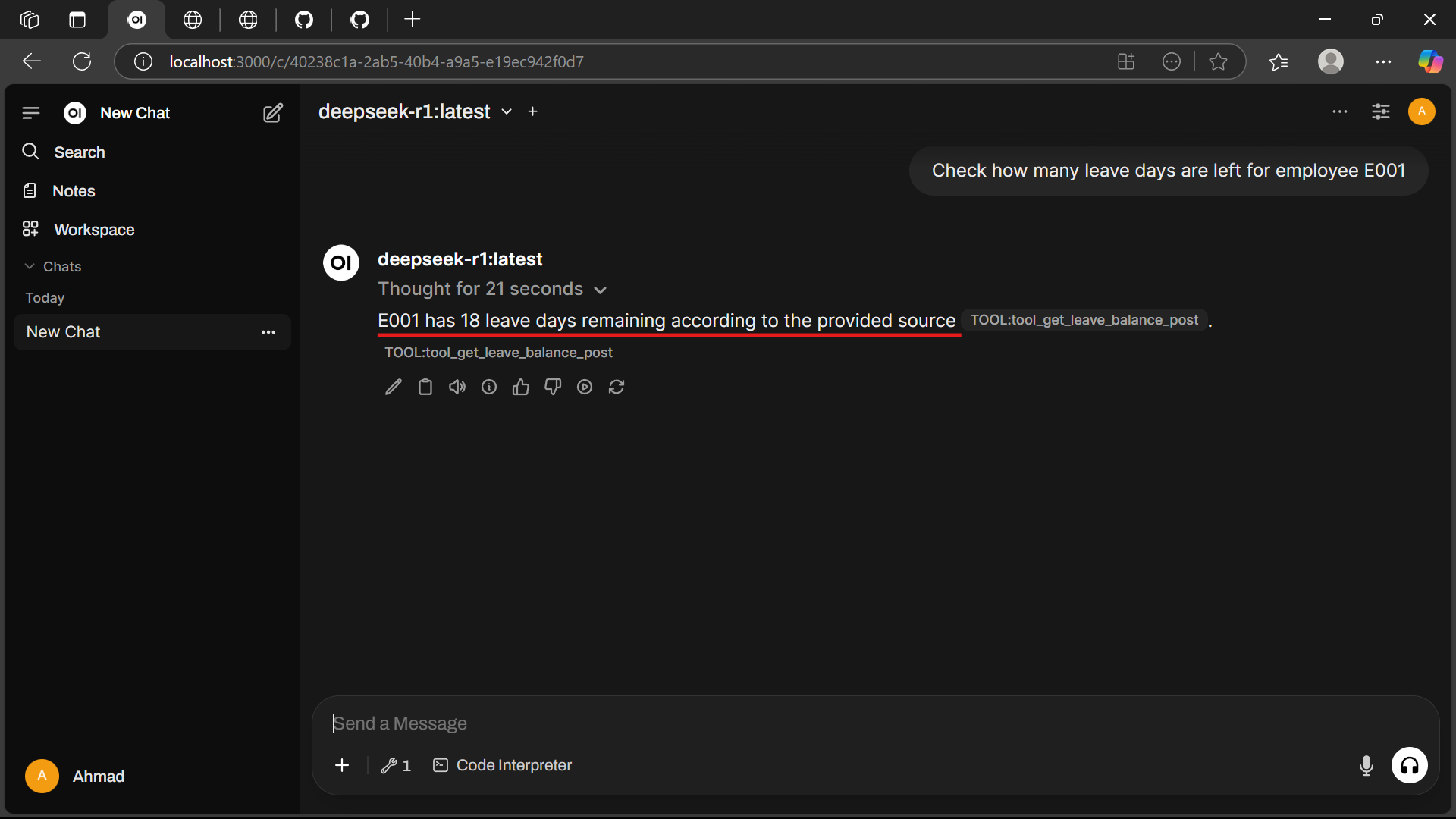

Check Leave Balance:

Check how many leave days are left for employee E001

Apply for Leave:

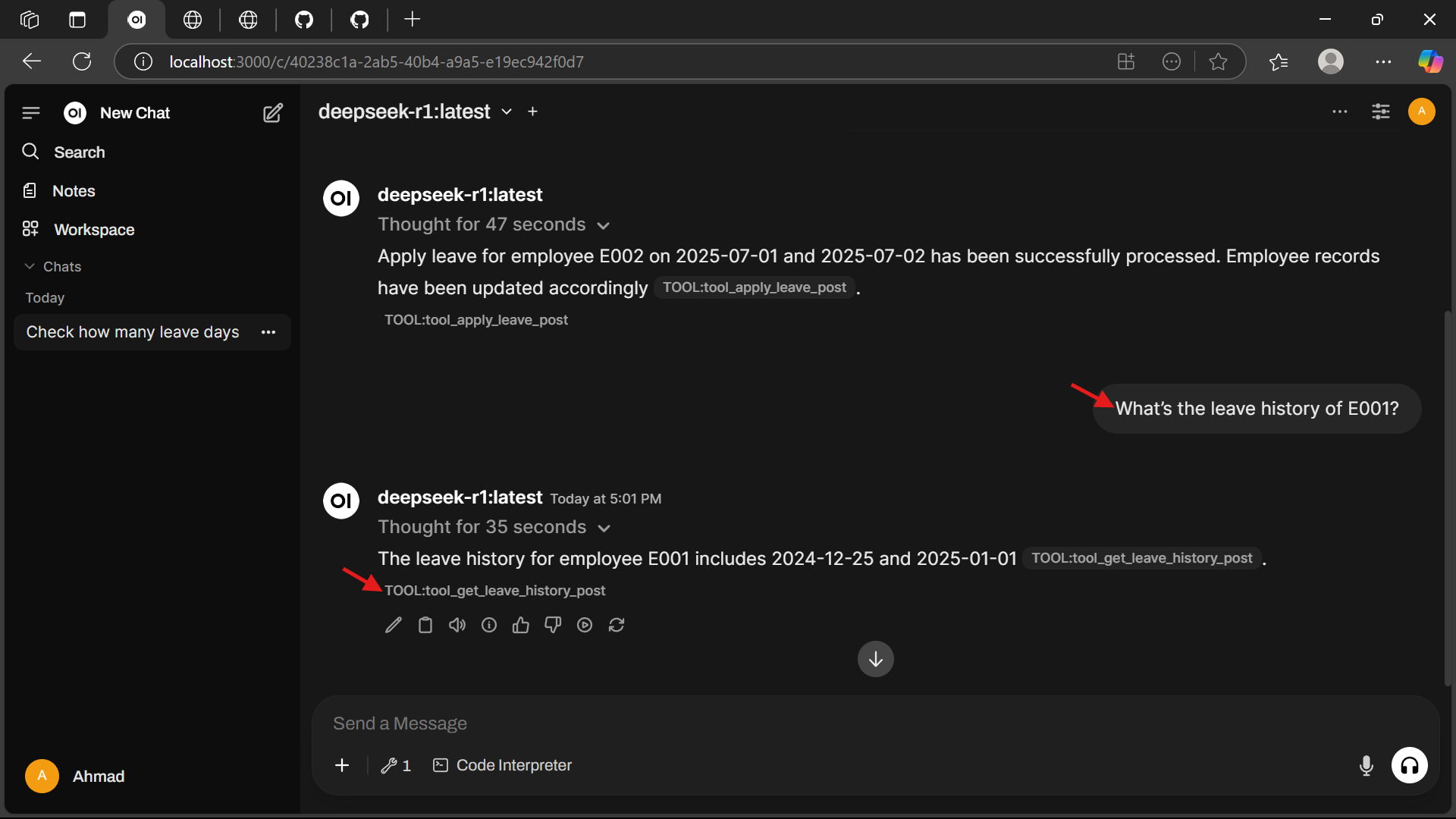

View Leave History:

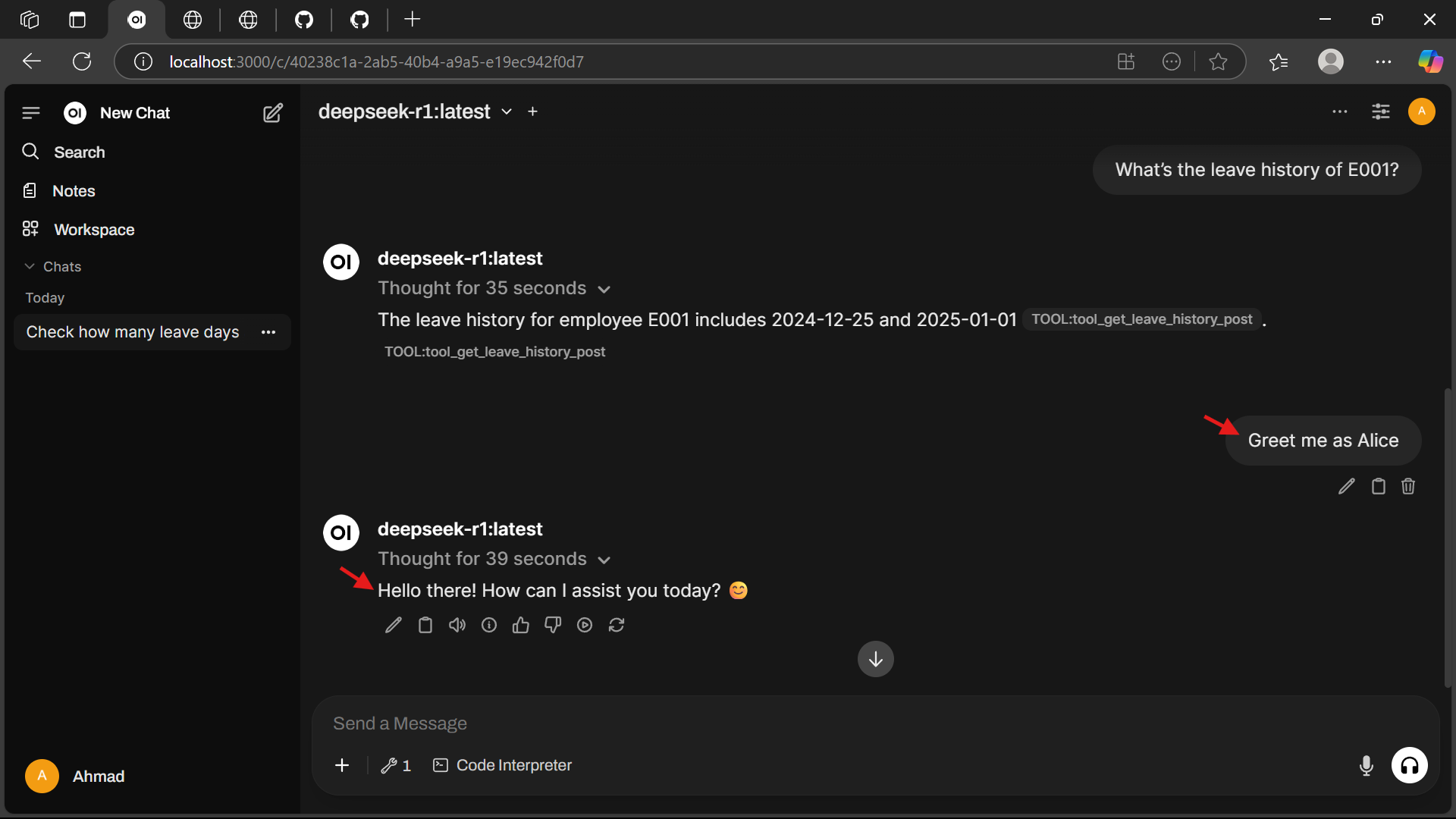

What's the leave history of E001?

Personalized Greeting:

Greet me as Alice

🛠️ Troubleshooting

Ollama not running: Ensure the service is active (ollama serve) and check http://localhost:11434.

Docker issues: Verify Docker Desktop is running and you have sufficient disk space.

Model not found: Confirm the deepseek-r1 model is listed with ollama list.

Port conflicts: Ensure ports 11434, 3000, and 8000 are free.
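A quick way to see which of these ports already has a listener is to attempt a TCP connection to each. This is a minimal sketch; the helper name port_in_use is illustrative. A port reported as in use may simply be the expected service (Ollama, Open WebUI, or the Leave Manager) already running, so run the check before starting the stack to spot real conflicts.

```python
import socket


def port_in_use(port: int, host: str = "127.0.0.1", timeout: float = 0.5) -> bool:
    """True if something accepts TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        # connect_ex returns 0 on success and an error code otherwise.
        return sock.connect_ex((host, port)) == 0


if __name__ == "__main__":
    for port in (11434, 3000, 8000):
        print(port, "in use" if port_in_use(port) else "free")
```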