Provides a sandboxed execution environment for JavaScript to filter large results, perform server-side aggregations, and transform data before it reaches the AI context.

Uses a secure Node.js virtual machine to power a Code Execution Mode that processes tool results and executes complex 'skills' patterns.

Exports performance metrics, including tool call latency, error rates, and cache performance, for real-time monitoring and observability.

Supports secure TypeScript code execution to batch multiple operations into a single tool call, reducing round-trips and token consumption.

MCP Gateway

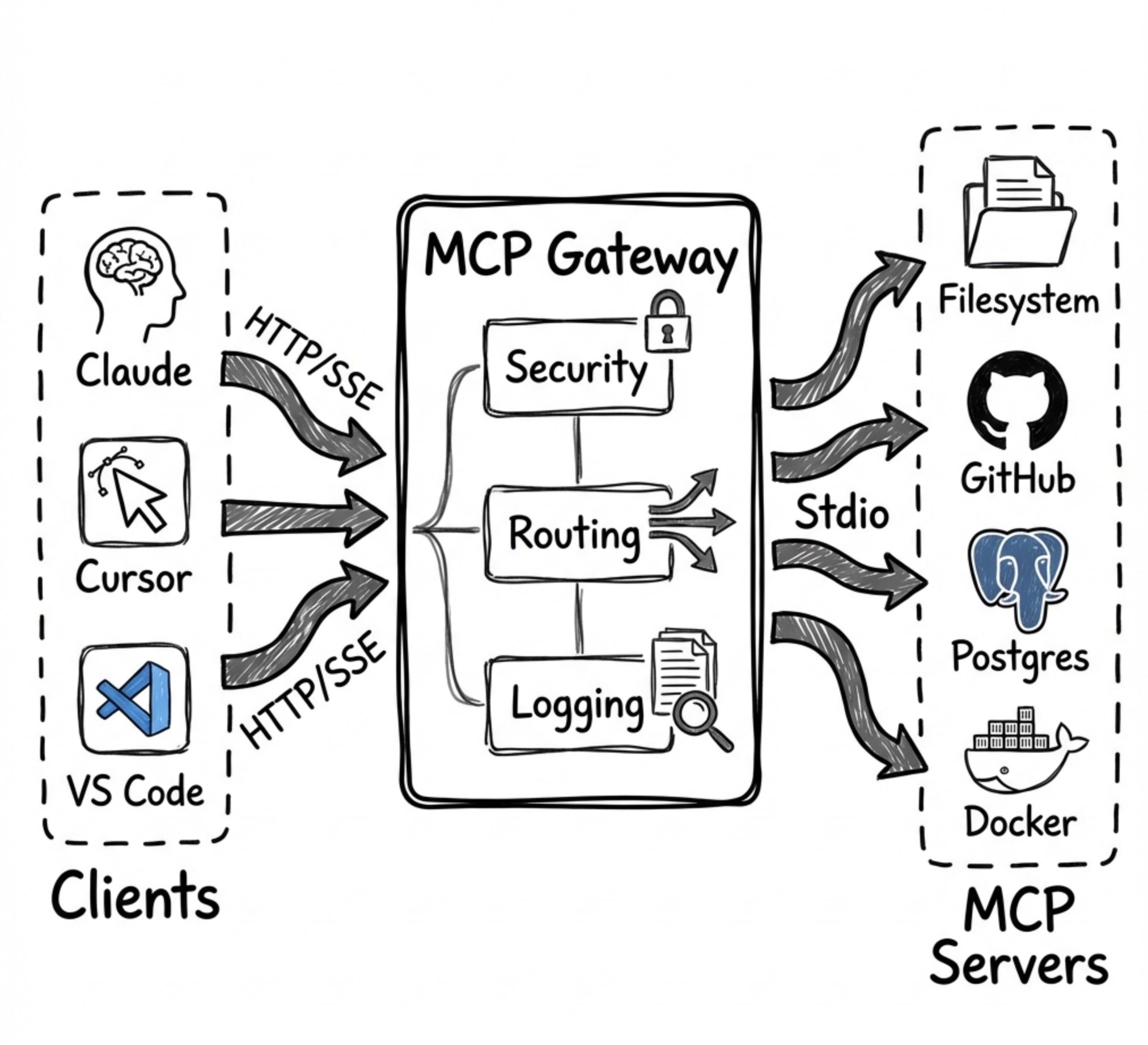

A universal Model Context Protocol (MCP) Gateway that aggregates multiple MCP servers and provides result optimization that native tool search doesn't offer. Works with all major MCP clients:

✅ Claude Desktop / Claude Code

✅ Cursor

✅ OpenAI Codex

✅ VS Code Copilot

How MCP Gateway Complements Anthropic's Tool Search

January 2025: Anthropic released Tool Search Tool - a native server-side feature for discovering tools from large catalogs using defer_loading and regex/BM25 search.

MCP Gateway and Anthropic's Tool Search solve different problems:

| Problem | Anthropic Tool Search | MCP Gateway |
|---|---|---|
| Tool Discovery (finding the right tool from 100s) | ✅ Native | ✅ Progressive disclosure |
| Result Filtering (trimming large results) | ❌ Not available | ✅ |
| Auto-Summarization (extracting insights) | ❌ Not available | ✅ 60-90% token savings |
| Delta Responses (only send changes) | ❌ Not available | ✅ 90%+ savings for polling |
| Aggregations (count, sum, groupBy) | ❌ Not available | ✅ Server-side analytics |
| Code Batching (multiple ops in one call) | ❌ Not available | ✅ 60-80% fewer round-trips |
| Skills (reusable code patterns) | ❌ Not available | ✅ 95%+ token savings |

Bottom line: Anthropic's Tool Search helps you find the right tool. MCP Gateway helps you use tools efficiently by managing large results, batching operations, and providing reusable patterns.

You can use both together - let Anthropic handle tool discovery while routing tool calls through MCP Gateway for result optimization.

Why MCP Gateway?

Problem: AI agents face three critical challenges when working with MCP servers:

1. Tool Overload - Loading 300+ tool definitions consumes 77,000+ context tokens before any work begins

2. Result Bloat - Large query results (10K rows) can consume 50,000+ tokens per call

3. Repetitive Operations - The same workflows must be re-explained to the model every time

Note: Anthropic's Tool Search Tool now addresses #1 natively for direct API users. MCP Gateway remains essential for #2 and #3, and provides tool discovery for MCP clients that don't have native tool search.

Solution: MCP Gateway aggregates all your MCP servers and provides 15 layers of token optimization:

| Layer | What It Does | Token Savings | Unique to Gateway? |
|---|---|---|---|
| Progressive Disclosure | Load tool schemas on-demand | 85% | Shared* |
| Smart Filtering | Auto-limit result sizes | 60-80% | ✅ |
| Aggregations | Server-side analytics | 90%+ | ✅ |
| Code Batching | Multiple ops in one call | 60-80% | ✅ |
| Skills | Zero-shot task execution | 95%+ | ✅ |
| Caching | Skip repeated queries | 100% | ✅ |
| PII Tokenization | Redact sensitive data | Security | ✅ |
| Response Optimization | Strip null/empty values | 20-40% | ✅ |
| Session Context | Avoid resending data in context | Very High | ✅ |
| Schema Deduplication | Reference identical schemas by hash | Up to 90% | ✅ |
| Micro-Schema Mode | Ultra-compact type abbreviations | 60-70% | ✅ |
| Delta Responses | Send only changes for repeated queries | 90%+ | ✅ |
| Context Tracking | Monitor context usage, prevent overflow | Safety | ✅ |
| Auto-Summarization | Extract insights from large results | 60-90% | ✅ |
| Query Planning | Detect optimization opportunities | 30-50% | ✅ |

*Anthropic's Tool Search provides native tool discovery; MCP Gateway provides it for MCP clients without native support.

Result: A typical session drops from ~500,000 tokens to ~25,000 tokens (95% reduction).

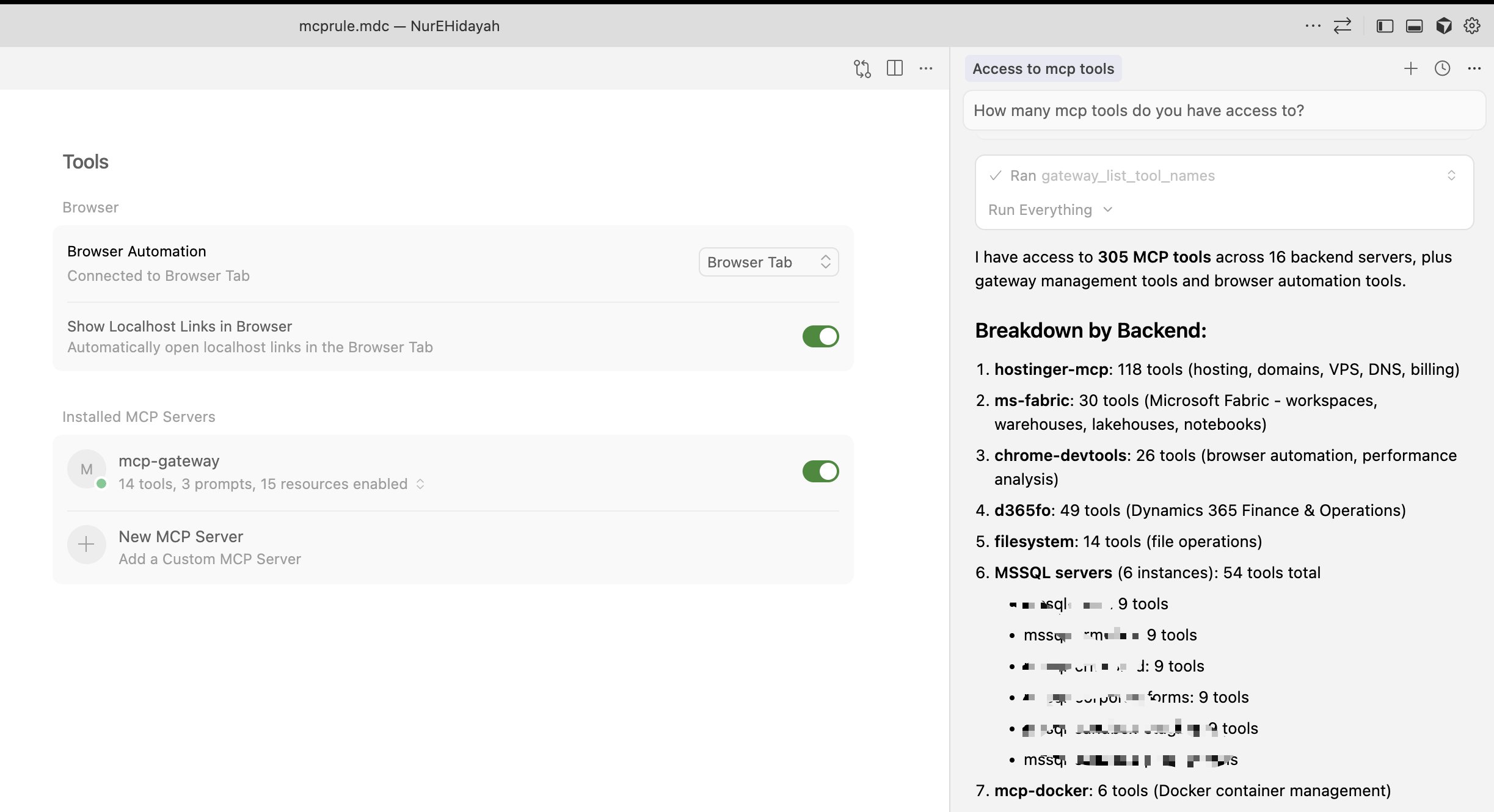

305 Tools Through 19 Gateway Tools

Cursor connected to MCP Gateway - 19 tools provide access to 305 backend tools across 16 servers

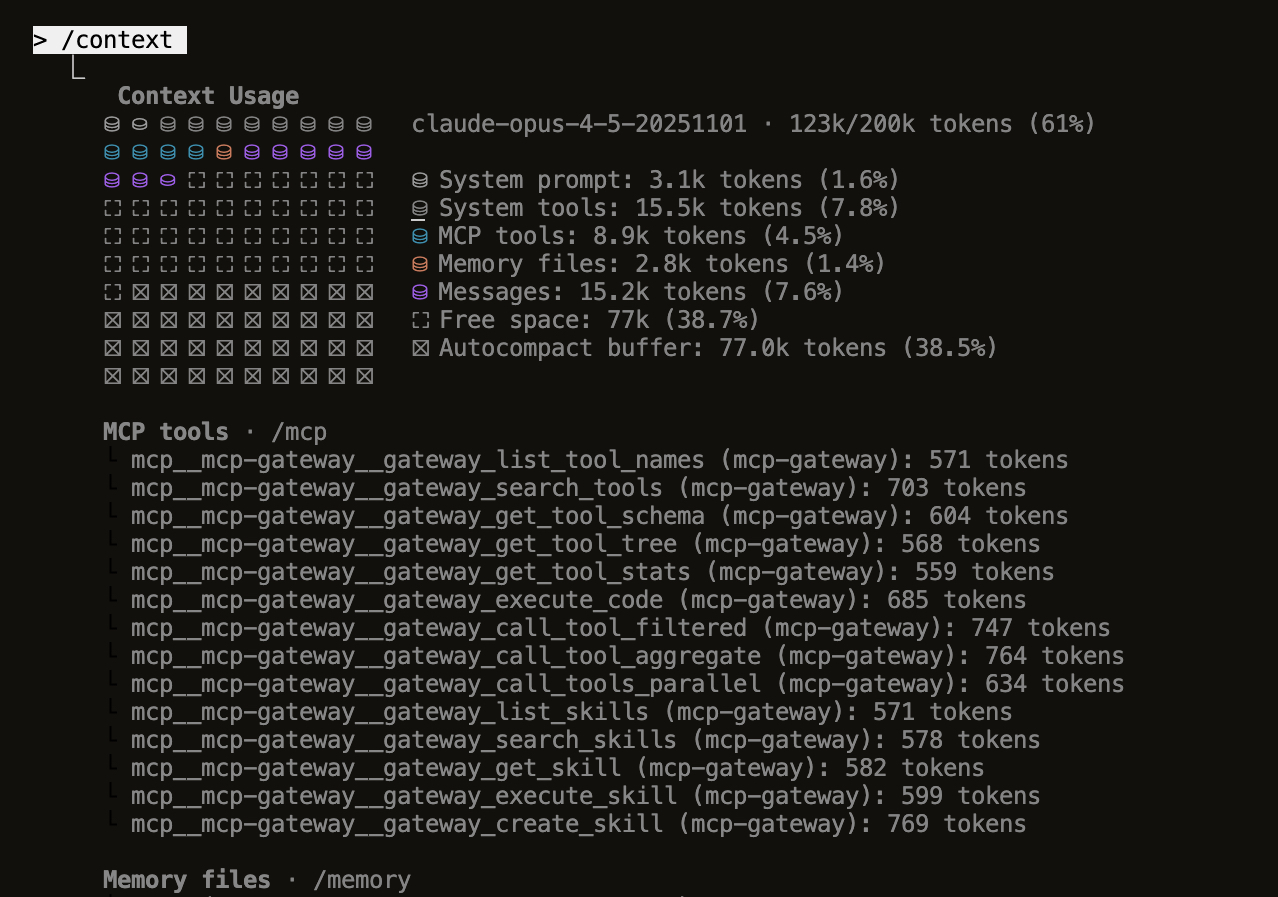

Minimal Context Usage

Claude Code

What's New (v1.0.0)

Gateway MCP Tools - All code execution features now exposed as MCP tools (gateway_*) that any client can discover and use directly

Hot-Reload Server Management - Add, edit, and delete MCP servers from the dashboard without restarting

UI State Persistence - Disabled tools and backends are remembered across server restarts

Enhanced Dashboard - Reconnect failed backends, view real-time status, improved error handling

Connection Testing - Test server connections before adding them to your configuration

Export/Import Config - Backup and share your server configurations easily

Parallel Tool Execution - Execute multiple tool calls simultaneously for better performance

Result Filtering & Aggregation - Reduce context bloat with maxRows, fields, format, and aggregation options

Features

Core Gateway Features

🔀 Multi-Server Aggregation - Route multiple MCP servers through one gateway

🎛️ Web Dashboard - Real-time UI to manage tools, backends, and server lifecycle

➕ Hot-Reload Server Management - Add, edit, delete MCP servers from dashboard without restart

🌐 HTTP Streamable Transport - Primary transport, works with all clients

📡 SSE Transport - Backward compatibility for older clients

🔐 Authentication - API Key and OAuth/JWT support

⚡ Rate Limiting - Protect your backend servers

🐳 Docker Ready - Easy deployment with Docker/Compose

📊 Health Checks - Monitor backend status with detailed diagnostics

🔄 Auto-Restart - Server restarts automatically on crash or via dashboard

💾 UI State Persistence - Remembers disabled tools/backends across restarts

Code Execution Mode (Token-Efficient AI)

Inspired by Anthropic's Code Execution with MCP - achieve up to 98.7% token reduction:

🔍 Progressive Tool Disclosure - Search and lazy-load tools to reduce token usage (85% reduction)

💻 Sandboxed Code Execution - Execute TypeScript/JavaScript in secure Node.js VM

📉 Context-Efficient Results - Filter, aggregate, and transform tool results (60-80% reduction)

🔒 Privacy-Preserving Operations - PII tokenization for sensitive data

📁 Skills System - Save and reuse code patterns for zero-shot execution (eliminates prompt tokens)

🗄️ State Persistence - Workspace for agent state across sessions

🛠️ Gateway MCP Tools - All code execution features exposed as MCP tools for any client

🧹 Response Optimization - Automatically strip null/empty values from responses (20-40% reduction)

🧠 Session Context - Track sent data to avoid resending in multi-turn conversations

🔗 Schema Deduplication - Reference identical schemas by hash (up to 90% reduction)

📐 Micro-Schema Mode - Ultra-compact schemas with abbreviated types (60-70% reduction)

🔄 Delta Responses - Send only changes for repeated queries (90%+ reduction)

📊 Context Tracking - Monitor context window usage and get warnings before overflow

📝 Auto-Summarization - Extract key insights from large results (60-90% reduction)

🔍 Query Planning - Analyze code to detect optimization opportunities (30-50% improvement)

Monitoring & Observability

📈 Prometheus Metrics - Tool call latency, error rates, cache performance

📊 JSON Metrics API - Programmatic access to gateway statistics

💾 Result Caching - LRU cache with TTL for tool results

📝 Audit Logging - Track sensitive operations

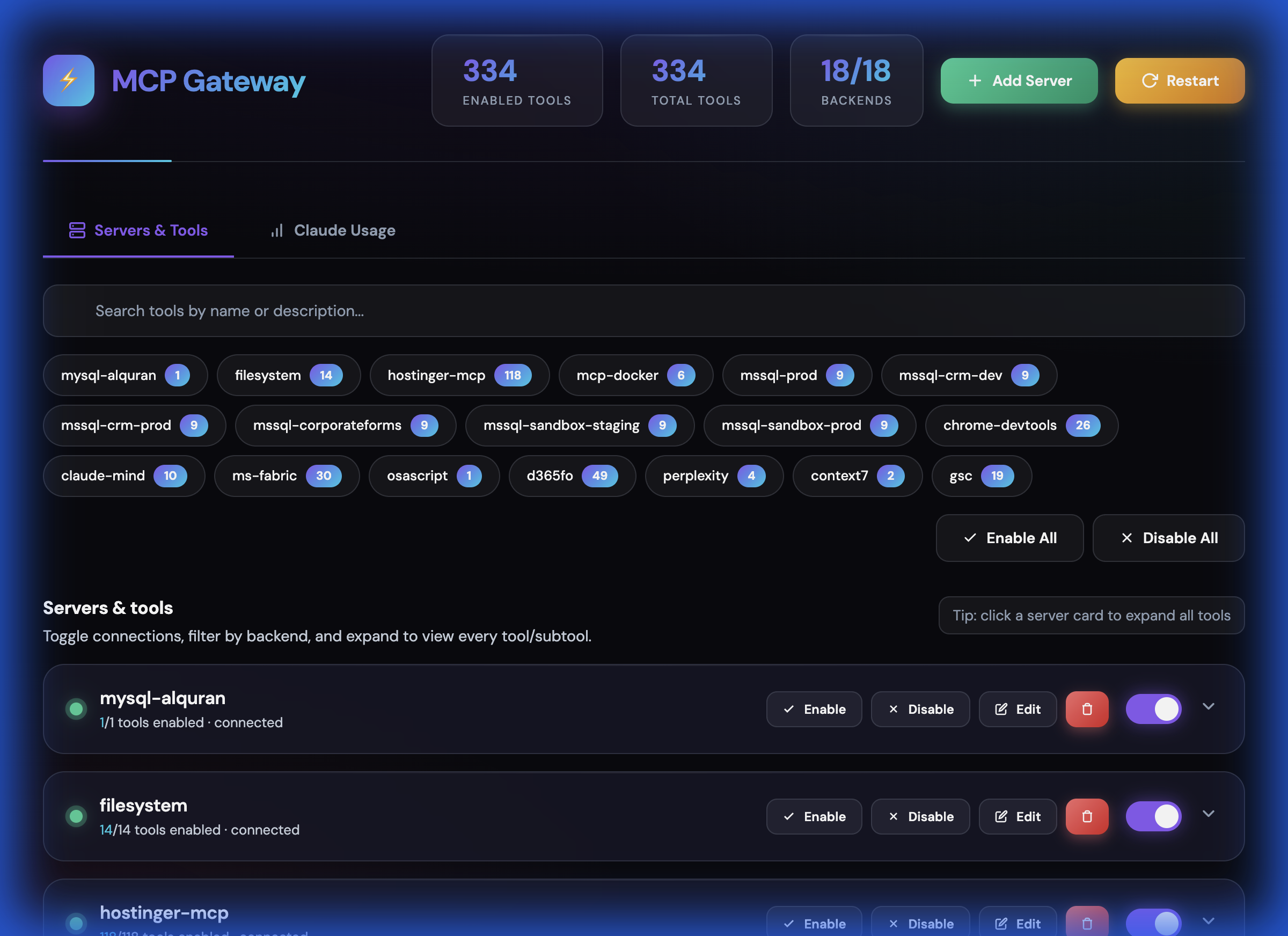

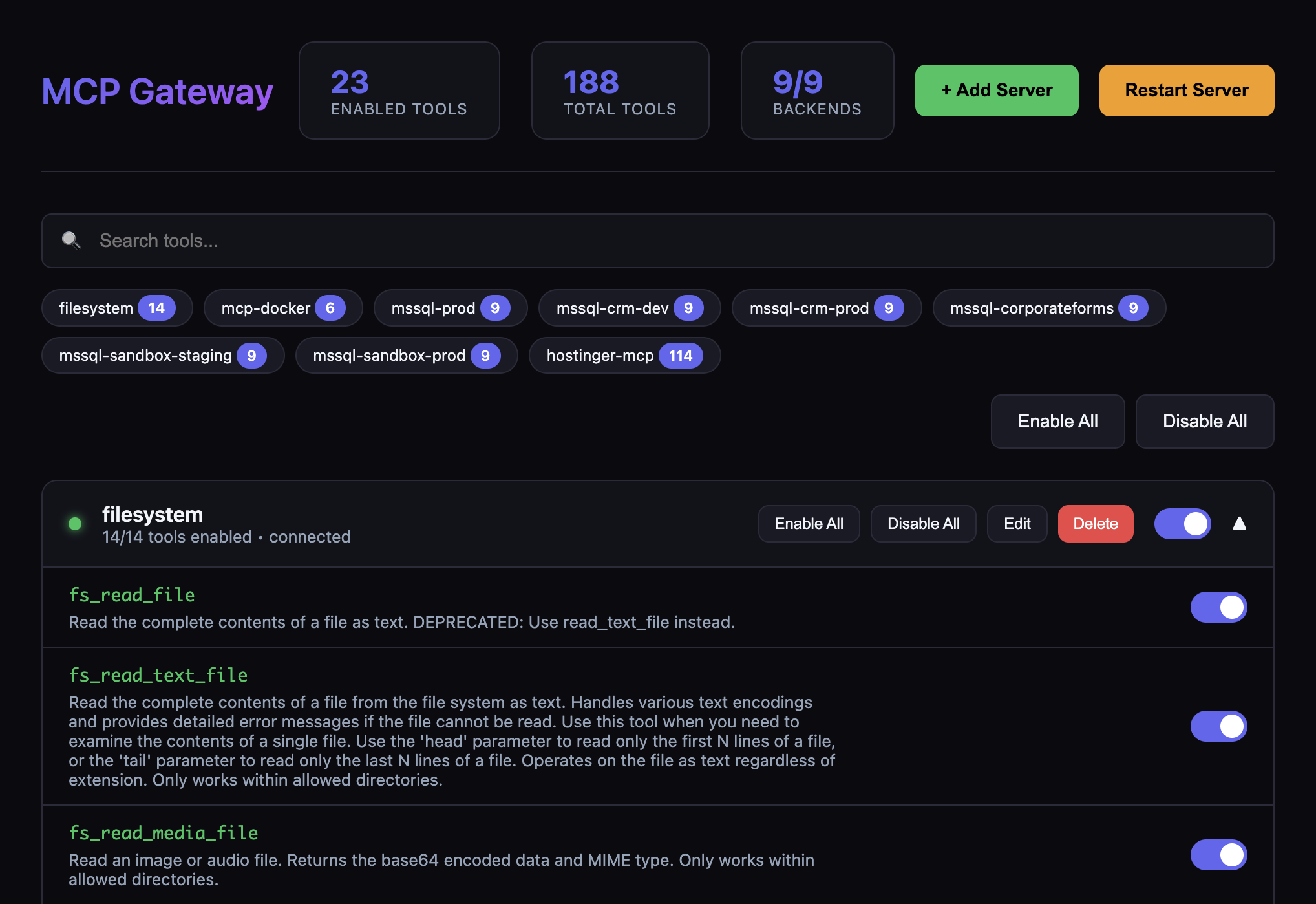

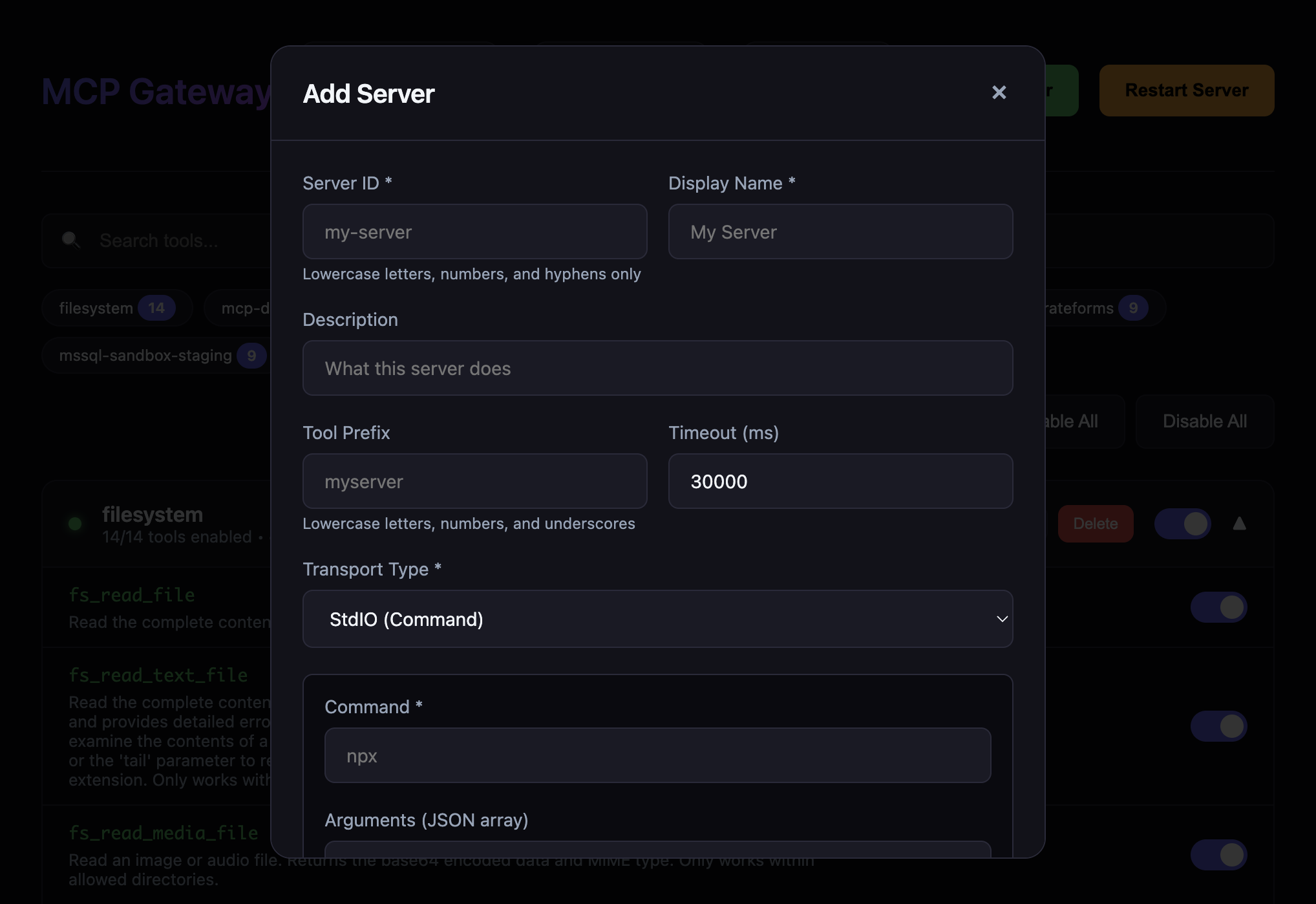

Screenshots

Dashboard Overview

Tools Management

Add Server Dialog

Quick Start

1. Install Dependencies

2. Configure Backend Servers

Copy the example config and edit it:

Edit config/servers.json to add your MCP servers:

3. Start the Gateway

The gateway will start on http://localhost:3010 by default.

Security modes

For local experimentation you can run without auth:

AUTH_MODE=none

However, sensitive endpoints (/dashboard, /dashboard/api/*, /api/code/*, /metrics/json) are blocked by default when AUTH_MODE=none. To allow unauthenticated access (not recommended except for isolated local use), explicitly opt in:

ALLOW_INSECURE=1

For secure usage, prefer:

AUTH_MODE=api-key with API_KEYS=key1,key2, or

AUTH_MODE=oauth with the appropriate OAUTH_* settings shown below.
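The blocking rule for AUTH_MODE=none can be summarized as a small decision function. The following is a minimal sketch of that rule as described above, not the gateway's actual code; the names isSensitivePath and isBlockedWithoutAuth are hypothetical.

```typescript
// Sketch only: the type and function names are illustrative assumptions,
// not the gateway's actual implementation.
type AuthMode = "none" | "api-key" | "oauth";

// True for the sensitive endpoints listed above:
// /dashboard, /dashboard/api/*, /api/code/*, /metrics/json
function isSensitivePath(path: string): boolean {
  return (
    path === "/dashboard" ||
    path.startsWith("/dashboard/api/") ||
    path.startsWith("/api/code/") ||
    path === "/metrics/json"
  );
}

// Decide whether an unauthenticated request is rejected outright.
// With api-key or oauth, the request would go through the auth check
// instead, which is not modeled here.
function isBlockedWithoutAuth(
  path: string,
  authMode: AuthMode,
  allowInsecure: boolean
): boolean {
  if (authMode !== "none") return false;
  if (allowInsecure) return false; // ALLOW_INSECURE=1 is an explicit opt-in
  return isSensitivePath(path);
}

console.log(isBlockedWithoutAuth("/dashboard", "none", false)); // true
console.log(isBlockedWithoutAuth("/dashboard", "none", true)); // false
```

The point of the two-step opt-in is that setting AUTH_MODE=none alone never exposes the dashboard or code execution endpoints by accident.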

Endpoints

Core Endpoints

Endpoint | Transport | Use Case |

| HTTP Streamable | Primary endpoint - works with all clients |

| Server-Sent Events | Backward compatibility |

| JSON | Health checks and status |

| Web UI | Manage tools, backends, and restart server |

| Prometheus | Prometheus-format metrics |

| JSON | JSON-format metrics |

Code Execution API

Endpoint | Method | Description |

| GET | Search tools with filters |

| GET | Get filesystem-like tool tree |

| GET | Get all tool names (minimal tokens) |

| GET | Lazy-load specific tool schema |

| GET | Tool statistics by backend |

| GET | Auto-generated TypeScript SDK |

| POST | Execute code in sandbox |

| POST | Call tool with result filtering |

| POST | Call tool with aggregation |

| POST | Execute multiple tools in parallel |

| GET/POST | List or create skills |

| GET | Search skills |

| GET/DELETE | Get or delete skill |

| POST | Execute a skill |

| GET/POST | Get or update session state |

| GET | Cache statistics |

| POST | Clear cache |

Dashboard API

Endpoint | Method | Description |

| GET | Get all tools with enabled status |

| GET | Get all backends with status |

| POST | Toggle tool enabled/disabled |

| POST | Toggle backend enabled/disabled |

| POST | Reconnect a failed backend |

| POST | Add new backend server |

| PUT | Update backend configuration |

| DELETE | Remove backend server |

| GET | Export server configuration |

| POST | Import server configuration |

| POST | Restart the gateway server |

Dashboard

Access the web dashboard at http://localhost:3010/dashboard to:

View all connected backends and their real-time status

Add new MCP servers with connection testing (STDIO, HTTP, SSE transports)

Edit existing servers (modify command, args, environment variables)

Delete servers with graceful disconnect

Enable/disable individual tools or entire backends

Search and filter tools across all backends

Export/import configuration for backup and sharing

Reconnect failed backends with one click

Restart the entire gateway server

View tool counts and backend health at a glance

The dashboard persists UI state (disabled tools/backends) across server restarts.

Client Configuration

Claude Desktop / Claude Code

Open Claude Desktop → Settings → Connectors

Click Add remote MCP server

Enter your gateway URL:

Complete authentication if required

Note: Claude requires adding remote servers through the UI, not config files.

Claude Desktop via STDIO Proxy

If Claude Desktop doesn't support HTTP/SSE transports directly, you can use the included STDIO proxy script:

The proxy (scripts/claude-stdio-proxy.mjs) reads JSON-RPC messages from stdin, forwards them to the gateway HTTP endpoint, and writes responses to stdout. It automatically manages session IDs.

Cursor

Open Cursor → Settings → Features → MCP

Click Add New MCP Server

Choose Type: HTTP or SSE

Enter your gateway URL:

For HTTP (recommended):

For SSE:

Or add to your Cursor settings JSON:

OpenAI Codex

Option 1: CLI

Option 2: Config File

Add to ~/.codex/config.toml:

Important: Codex requires HTTPS for remote servers and only supports HTTP Streamable (not SSE).

VS Code Copilot

Open Command Palette (Cmd/Ctrl + Shift + P)

Run MCP: Add MCP Server

Choose Remote (URL)

Enter your gateway URL:

Approve the trust prompt

Or add to your VS Code settings.json:

Cross-IDE Configuration (.agents/)

MCP Gateway uses a centralized .agents/ directory as the single source of truth for AI agent configuration across all IDEs:

How It Works

The .agents/AGENTS.md file is symlinked to each IDE's configuration location:

IDE | Symlink |

Cursor |

|

Windsurf |

|

Claude Code |

|

Codex |

|

This means:

One file to maintain - Edit .agents/AGENTS.md and all IDEs get the update

Consistent behavior - Same rules, skills, and protocols across all tools

Version controlled - Track all configuration in git

Skills Auto-Activation

The .agents/AGENTS.md includes trigger keywords that automatically activate relevant skills:

Trigger | Skill Activated |

"review code", "security audit" |

|

"debug", "fix bug", "not working" |

|

"commit", "push", "create PR" |

|

"build UI", "dashboard", "React" |

|

"deploy", "docker", "production" |

|

Claude Code: Skills auto-load via the skill-activation.mjs hook.

Other IDEs: Follow the instructions in AGENTS.md to manually load skills.

Setting Up for Your Fork

If you fork this repository:

The symlinks are already configured in the repo

Edit .agents/AGENTS.md to customize rules for your project

Add/modify skills in .agents/skills/

Backend Server Configuration

The gateway can connect to MCP servers using different transports:

STDIO (Local Process)

HTTP (Remote Server)

Tool Prefixing

Use toolPrefix to namespace tools from different servers:

Server with toolPrefix: "fs" exposes read_file as fs_read_file

Prevents naming collisions between servers

Makes it clear which server handles each tool
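The prefixing rule can be illustrated with a short sketch. The underscore join follows the fs_read_file example above; the function names, the BackendServer shape, and the collision error are assumptions made for illustration.

```typescript
// Sketch only: helper names and the registry shape are hypothetical.
interface BackendServer {
  id: string;
  toolPrefix?: string;
  tools: string[];
}

// "fs" + "read_file" -> "fs_read_file"; no prefix leaves the name unchanged.
function prefixToolName(toolPrefix: string | undefined, toolName: string): string {
  return toolPrefix ? `${toolPrefix}_${toolName}` : toolName;
}

// Map each exposed tool name to the server that handles it,
// failing loudly if two servers would expose the same name.
function buildToolRegistry(servers: BackendServer[]): Map<string, string> {
  const registry = new Map<string, string>();
  for (const server of servers) {
    for (const tool of server.tools) {
      const exposed = prefixToolName(server.toolPrefix, tool);
      const owner = registry.get(exposed);
      if (owner !== undefined) {
        throw new Error(`Tool name collision: ${exposed} (${owner} vs ${server.id})`);
      }
      registry.set(exposed, server.id);
    }
  }
  return registry;
}

console.log(prefixToolName("fs", "read_file")); // fs_read_file
```

Two servers that both expose read_file stay distinct as long as their prefixes differ, and the registry lookup shows which server handles each exposed name.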

Authentication

API Key Authentication

Set environment variables:

Clients send the key in the Authorization header:

OAuth Authentication

Docker Deployment

Build and Run

Docker Compose

Environment Variables

Core Configuration

Variable | Default | Description |

|

| Server port |

|

| Server host |

|

| Log level (debug, info, warn, error) |

|

| Gateway name in MCP responses |

|

| Lite mode - reduces exposed gateway tools for lower token usage (recommended) |

|

| Authentication mode (none, api-key, oauth) |

| - | Comma-separated API keys |

| - | OAuth token issuer |

| - | OAuth audience |

| - | OAuth JWKS endpoint |

|

| Allowed CORS origins ( |

|

| If |

|

| If |

|

| Rate limit window (ms) |

|

| Max requests per window |

Optional Features

MCP Gateway includes optional features that are disabled by default for minimal, public-friendly deployments. Enable them by setting the corresponding environment variable to 1.

Variable | Default | Description |

|

| Enable Skills system - reusable code patterns and skill execution |

|

| Enable Cipher Memory - cross-IDE persistent memory with Qdrant vector store |

|

| Enable Antigravity Usage - IDE quota tracking for Antigravity IDE |

|

| Enable Claude Usage - API token consumption tracking |

When a feature is disabled:

The corresponding dashboard tab is hidden

API endpoints return 404 Feature disabled with instructions to enable

No errors occur from missing dependencies (Qdrant, Cipher service, etc.)
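As a rough illustration of this behavior, a feature gate could look like the sketch below. The flag names and tab names come from this README; the response body shape and the function names are assumptions, not the gateway's actual API.

```typescript
// Sketch only: response shape and helper names are hypothetical.
type Env = Record<string, string | undefined>;
type FeatureFlag =
  | "ENABLE_SKILLS"
  | "ENABLE_CIPHER"
  | "ENABLE_CLAUDE_USAGE"
  | "ENABLE_ANTIGRAVITY";

// Dashboard tab shown for each optional feature.
const FEATURE_TABS: Record<FeatureFlag, string> = {
  ENABLE_SKILLS: "Skills",
  ENABLE_CIPHER: "Memory",
  ENABLE_CLAUDE_USAGE: "Usage",
  ENABLE_ANTIGRAVITY: "Antigravity",
};

interface DisabledResponse {
  status: 404;
  body: { error: string; hint: string };
}

// A feature is on only when its variable is set to "1".
function isFeatureEnabled(env: Env, flag: FeatureFlag): boolean {
  return env[flag] === "1";
}

// Returns null when the request may proceed, or a 404 payload otherwise.
function guardFeature(env: Env, flag: FeatureFlag): DisabledResponse | null {
  if (isFeatureEnabled(env, flag)) return null;
  return {
    status: 404,
    body: { error: "Feature disabled", hint: `Set ${flag}=1 to enable it` },
  };
}

// Tabs for disabled features are simply left out.
function visibleTabs(env: Env): string[] {
  return (Object.keys(FEATURE_TABS) as FeatureFlag[])
    .filter((flag) => isFeatureEnabled(env, flag))
    .map((flag) => FEATURE_TABS[flag]);
}

console.log(visibleTabs({ ENABLE_SKILLS: "1" })); // [ 'Skills' ]
```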

For personal/development use, enable the features you need in your .env:

Feature-Specific Configuration

These variables are only needed when the corresponding feature is enabled:

Variable | Feature | Default | Description |

| Cipher |

| Cipher Memory service URL |

| Cipher | - | Qdrant vector store URL |

| Cipher | - | Qdrant API key |

| Cipher |

| Qdrant collection name |

| Cipher |

| Qdrant request timeout |

Optional Features Guide

This section provides detailed instructions for enabling and using each optional feature.

Skills System (ENABLE_SKILLS=1)

The Skills system allows you to save and reuse code patterns for zero-shot execution. Skills are the most powerful token-saving feature in MCP Gateway, reducing token usage by 95%+ for recurring tasks.

What Skills Do

Save successful code patterns as reusable templates

Execute complex workflows with a single tool call (~20 tokens)

Eliminate prompt engineering for recurring tasks

Hot-reload when skill files change on disk

Enabling Skills

Storage Locations

Skills are stored in two directories:

workspace/skills/ - User-created skills (editable)

external-skills/ - Shared/imported skills (read-only by default)

Each skill is a directory containing:

Creating Skills via MCP Tools

Executing Skills

Skills MCP Tools

Tool | Description |

| List all available skills with metadata |

| Search skills by name, description, or tags |

| Get full skill details including code |

| Execute a skill with input parameters |

| Create a new reusable skill |

Skills REST API

Endpoint | Method | Description |

| GET | List all skills |

| POST | Create a new skill |

| GET | Search skills |

| GET | Get skill details |

| DELETE | Delete a skill |

| POST | Execute a skill |

| GET | Get skill templates |

| POST | Sync external skills to workspace |

Dashboard

When enabled, a Skills tab appears in the dashboard (/dashboard) showing:

All available skills with search/filter

Skill details and code preview

Execute skills directly from UI

Create new skills from templates

Cipher Memory (ENABLE_CIPHER=1)

Cipher Memory provides persistent AI memory across all IDEs. Decisions, learnings, patterns, and insights are stored in a vector database and recalled automatically in future sessions.

What Cipher Does

Cross-IDE memory - Memories persist across Claude, Cursor, Windsurf, VS Code, Codex

Project-scoped context - Filter memories by project path

Semantic search - Find relevant memories using natural language

Auto-consolidation - Session summaries stored automatically

Prerequisites

Cipher requires two external services:

Cipher Memory Service - The memory API (default: http://localhost:8082)

Qdrant Vector Store - For semantic memory storage

Enabling Cipher

Using Cipher via MCP

The Cipher service exposes the cipher_ask_cipher tool via MCP:

Memory Types

Prefix | Use Case | Example |

| Architectural choices | "STORE DECISION: Using Redis for caching" |

| Bug fixes, discoveries | "STORE LEARNING: Fixed race condition in auth" |

| Completed features | "STORE MILESTONE: Completed user auth system" |

| Code patterns | "STORE PATTERN: Repository pattern for data access" |

| Ongoing issues | "STORE BLOCKER: CI failing on ARM builds" |
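The example messages in the table share one shape: the word STORE, a memory type, a colon, and free text. A small helper can build and recognize such messages. This is a sketch inferred from those examples only; the helper names are hypothetical and Cipher may accept other formats.

```typescript
// Sketch only: inferred from the example messages above, not from Cipher's API.
type MemoryKind = "DECISION" | "LEARNING" | "MILESTONE" | "PATTERN" | "BLOCKER";

// Build a message such as "STORE DECISION: Using Redis for caching".
function buildStoreMessage(kind: MemoryKind, text: string): string {
  return `STORE ${kind}: ${text.trim()}`;
}

// Recognize a store message and split it back into kind and text.
function parseStoreMessage(message: string): { kind: MemoryKind; text: string } | null {
  const match = /^STORE (DECISION|LEARNING|MILESTONE|PATTERN|BLOCKER): (.+)$/.exec(message);
  if (!match) return null;
  return { kind: match[1] as MemoryKind, text: match[2] };
}

console.log(buildStoreMessage("DECISION", "Using Redis for caching"));
// STORE DECISION: Using Redis for caching
```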

Dashboard API

Endpoint | Method | Description |

| GET | List memory sessions |

| GET | Get session history |

| POST | Send message to Cipher |

| GET | Search memories |

| GET | Get vector store statistics |

| GET | Get specific memory by ID |

Dashboard

When enabled, a Memory tab appears showing:

Total memories stored in Qdrant

Recent memories with timestamps

Memory categories breakdown (decisions, learnings, etc.)

Search interface for finding memories

Session history viewer

Claude Usage Tracking (ENABLE_CLAUDE_USAGE=1)

Track your Claude API token consumption and costs across all Claude Code sessions.

What It Does

Aggregate usage data from Claude Code JSONL logs

Track costs by model (Opus, Sonnet, Haiku)

Monitor cache efficiency (creation vs read tokens)

View daily/weekly/monthly trends

Live session monitoring

Prerequisites

This feature uses the ccusage CLI tool to parse Claude Code conversation logs from ~/.claude/projects/.

Enabling Claude Usage

No additional configuration required - the service automatically finds Claude Code logs.

Dashboard API

Endpoint | Method | Description |

| GET | Get usage summary (cached 5 min) |

| GET | Get usage for date range |

| GET | Get live session usage |

| POST | Force refresh cached data |

Response Format

Dashboard

When enabled, a Usage tab appears showing:

Total cost and token breakdown

Cost by model pie chart

Cache hit ratio (higher = more efficient)

Daily usage trend graph

Top usage days

Live current session monitoring

Antigravity Usage Tracking (ENABLE_ANTIGRAVITY=1)

Track quota and usage for Antigravity IDE (formerly Windsurf/Codeium) accounts.

What It Does

Real-time quota monitoring for all model tiers

Multi-account support (Antigravity + Techgravity accounts)

Conversation statistics from local data

Brain/task tracking for agentic workflows

Auto-detection of running Language Server processes

How It Works

The service:

Detects running language_server_macos processes

Extracts CSRF tokens and ports from process arguments

Queries the local gRPC-Web endpoint for quota data

Falls back to file-based stats if API unavailable

Prerequisites

Antigravity IDE installed and running

Account directories exist in ~/.gemini/antigravity/ or ~/.gemini/techgravity/

Enabling Antigravity Usage

No additional configuration required.

Dashboard API

Endpoint | Method | Description |

| GET | Check if Antigravity accounts exist |

| GET | Get full usage summary |

| POST | Force refresh cached data |

Response Format

Dashboard

When enabled, an Antigravity tab appears showing:

Running status indicator (green = active)

Per-account quota bars for each model

Remaining percentage with color coding (green/yellow/red)

Time until quota reset

Conversation and task statistics

Multi-account support (Antigravity + Techgravity)

Enabling All Features

For personal/development use, enable everything:

Then restart the gateway:

All four tabs will now appear in the dashboard at http://localhost:3010/dashboard.

Health Check

Response:

Architecture

Code Execution Mode

The Code Execution Mode allows AI agents to write and execute code instead of making individual tool calls, achieving up to 98.7% token reduction for complex workflows.

Why Skills? (Efficiency & Token Usage)

Skills are the most powerful token-saving feature in MCP Gateway. Here's why:

The Token Problem

Without skills, every complex operation requires:

Input tokens: Describe the task in natural language (~200-500 tokens)

Reasoning tokens: Model thinks about how to implement it (~100-300 tokens)

Output tokens: Model generates code to execute (~200-1000 tokens)

Result tokens: Large query results enter context (~500-10,000+ tokens)

Total: 1,000-12,000+ tokens per operation

The Skills Solution

With skills, the same operation requires:

Input tokens: gateway_execute_skill({ name: "daily-report" }) (~20 tokens)

Result tokens: Pre-filtered, summarized output (~50-200 tokens)

Total: 70-220 tokens per operation → 95%+ reduction
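The arithmetic behind these totals can be checked directly. The sketch below sums the per-step ranges quoted above and computes the reduction at each end of the range; the type and function names are illustrative only.

```typescript
// Sketch only: reproduces the token arithmetic from the figures above.
interface TokenRange {
  low: number;
  high: number;
}

const withoutSkills: TokenRange[] = [
  { low: 200, high: 500 }, // input tokens
  { low: 100, high: 300 }, // reasoning tokens
  { low: 200, high: 1000 }, // output tokens
  { low: 500, high: 10000 }, // result tokens
];

const withSkills: TokenRange[] = [
  { low: 20, high: 20 }, // the gateway_execute_skill call
  { low: 50, high: 200 }, // pre-filtered result
];

// Sum the low and high ends of every step.
function totalTokens(parts: TokenRange[]): TokenRange {
  return parts.reduce(
    (acc, p) => ({ low: acc.low + p.low, high: acc.high + p.high }),
    { low: 0, high: 0 }
  );
}

// Percentage of tokens saved when going from `before` to `after`.
function reductionPercent(before: number, after: number): number {
  return (1 - after / before) * 100;
}

const before = totalTokens(withoutSkills); // { low: 1000, high: 11800 }
const after = totalTokens(withSkills); // { low: 70, high: 220 }
console.log(reductionPercent(before.low, after.low).toFixed(1)); // 93.0
console.log(reductionPercent(before.high, after.high).toFixed(1)); // 98.1
```

With these figures the saving is about 93% for the smallest operations and about 98% for the largest, so the 95%+ figure applies to operations toward the larger end of the range.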

Key Benefits

| Benefit | Description | Token Savings |
|---|---|---|
| Zero-Shot Execution | No prompt explaining how to do the task | 500-2000 tokens/call |
| Deterministic Results | Pre-tested code, no LLM hallucinations | Eliminates retries |
| Batched Operations | Multiple tool calls in single skill | 60-80% fewer round-trips |
| Pre-filtered Output | Results processed before returning | 80-95% on large datasets |
| Cached Execution | Repeated skill calls hit cache | 100% on cache hits |

Real-World Example

Without Skills (Traditional approach):

With Skills (Skill-based approach):

Gateway MCP Tools

All code execution features are exposed as MCP tools that any client can use directly. When connected to the gateway, clients automatically get these 19 tools instead of 300+ raw tool definitions:

Tool Discovery (Progressive Disclosure)

Tool | Purpose | Token Impact |

| Get all tool names with pagination | ~50 bytes/tool |

| Search by name, description, category, backend | Filters before loading |

| Lazy-load specific tool schema | Load only when needed |

| Batch load multiple schemas | 40% smaller with |

| Get semantic categories (database, filesystem, etc.) | Navigate 300+ tools easily |

| Get tools organized by backend | Visual hierarchy |

| Get statistics about tools | Counts by backend |

Execution & Filtering

Tool | Purpose | Token Impact |

| Execute TypeScript/JavaScript in sandbox | Batch multiple ops |

| Call any tool with result filtering | 60-80% smaller results |

| Call tool with aggregation | 90%+ smaller for analytics |

| Execute multiple tools in parallel | Fewer round-trips |

Skills (Highest Token Savings)

Tool | Purpose | Token Impact |

| List saved code patterns | Discover available skills |

| Search skills by name/tags | Find the right skill fast |

| Get skill details and code | Inspect before executing |

| Execute a saved skill | ~20 tokens per call |

| Save a new reusable skill | One-time investment |

Optimization & Monitoring

Tool | Purpose | Token Impact |

| View token savings statistics | Monitor efficiency |

| Call tool with delta response - only changes | 90%+ for repeated queries |

| Monitor context window usage and get warnings | Prevent overflow |

| Call tool with auto-summarization of results | 60-90% for large data |

| Analyze code for optimization opportunities | Improve efficiency |

Progressive Tool Disclosure

Instead of loading all tool definitions upfront (which can consume excessive tokens with 300+ tools), use progressive disclosure:

Detail levels for search:

name_only - Just tool names

name_description - Names with descriptions

full_schema - Complete JSON schema
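To make the three detail levels concrete, the sketch below projects a tool definition down to the requested level before returning search hits. The level names are taken from the list above; the ToolDef shape, the substring matching, and the function names are assumptions for illustration.

```typescript
// Sketch only: ToolDef shape and matching logic are hypothetical.
type DetailLevel = "name_only" | "name_description" | "full_schema";

interface ToolDef {
  name: string;
  description: string;
  inputSchema: Record<string, unknown>;
}

// Keep only the fields the requested detail level calls for.
function projectTool(tool: ToolDef, level: DetailLevel): Partial<ToolDef> {
  switch (level) {
    case "name_only":
      return { name: tool.name };
    case "name_description":
      return { name: tool.name, description: tool.description };
    case "full_schema":
      return tool;
  }
}

// Case-insensitive substring search over names and descriptions.
function searchTools(tools: ToolDef[], query: string, level: DetailLevel): Partial<ToolDef>[] {
  const q = query.toLowerCase();
  return tools
    .filter((t) => t.name.toLowerCase().includes(q) || t.description.toLowerCase().includes(q))
    .map((t) => projectTool(t, level));
}
```

A client would typically search at name_only or name_description first and request full_schema only for the one or two tools it actually intends to call.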

Sandboxed Code Execution

Execute TypeScript/JavaScript code in a secure Node.js VM sandbox:

The sandbox:

Auto-generates TypeScript SDK from your MCP tools

Supports async/await, loops, and conditionals

Returns only console.log output (not raw data)

Has configurable timeout protection

Context-Efficient Results

Reduce context bloat from large tool results:

Available aggregations: count, sum, avg, min, max, groupBy, distinct
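The listed operations can be sketched as a single function over an array of rows. This is an illustration of the idea rather than the gateway's implementation: the Aggregation type is hypothetical, and groupBy is assumed here to return a count per group.

```typescript
// Sketch only: option shapes are hypothetical; groupBy is assumed to count rows per group.
type Row = Record<string, unknown>;

type Aggregation =
  | { op: "count" }
  | { op: "sum" | "avg" | "min" | "max"; field: string }
  | { op: "distinct"; field: string }
  | { op: "groupBy"; field: string };

// Collect the numeric values of one field, skipping non-numbers.
function numericValues(rows: Row[], field: string): number[] {
  return rows.map((r) => r[field]).filter((v): v is number => typeof v === "number");
}

function aggregate(rows: Row[], agg: Aggregation): unknown {
  switch (agg.op) {
    case "count":
      return rows.length;
    case "sum":
      return numericValues(rows, agg.field).reduce((a, b) => a + b, 0);
    case "avg": {
      const n = numericValues(rows, agg.field);
      return n.length ? n.reduce((a, b) => a + b, 0) / n.length : null;
    }
    case "min": {
      const n = numericValues(rows, agg.field);
      return n.length ? Math.min(...n) : null;
    }
    case "max": {
      const n = numericValues(rows, agg.field);
      return n.length ? Math.max(...n) : null;
    }
    case "distinct":
      return Array.from(new Set(rows.map((r) => r[agg.field])));
    case "groupBy": {
      const counts: Record<string, number> = {};
      for (const r of rows) {
        const key = String(r[agg.field]);
        counts[key] = (counts[key] ?? 0) + 1;
      }
      return counts;
    }
  }
}
```

Whatever the row count, the model only sees the aggregate, for example a single number or a small object of group counts.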

Privacy-Preserving Operations

Automatically tokenize PII so sensitive data never enters model context:

Output shows tokenized values:

Tokens are automatically untokenized when data flows to another tool.
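The tokenize/untokenize round trip can be sketched as follows. This is a minimal illustration that handles email addresses only; the [EMAIL_n] placeholder format, the regular expression, and the PiiVault class are assumptions, not the gateway's actual token format.

```typescript
// Sketch only: placeholder format and class name are hypothetical.
const EMAIL_PATTERN = /[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}/g;

class PiiVault {
  private tokenToValue = new Map<string, string>();
  private valueToToken = new Map<string, string>();

  // Replace each email with a stable placeholder before text reaches the model.
  tokenize(text: string): string {
    return text.replace(EMAIL_PATTERN, (value) => {
      let token = this.valueToToken.get(value);
      if (token === undefined) {
        token = `[EMAIL_${this.valueToToken.size + 1}]`;
        this.valueToToken.set(value, token);
        this.tokenToValue.set(token, value);
      }
      return token;
    });
  }

  // Restore original values when data flows on to another tool.
  untokenize(text: string): string {
    return text.replace(/\[EMAIL_\d+\]/g, (token) => this.tokenToValue.get(token) ?? token);
  }
}

const vault = new PiiVault();
const masked = vault.tokenize("Contact alice@example.com or bob@example.com");
console.log(masked); // Contact [EMAIL_1] or [EMAIL_2]
console.log(vault.untokenize(masked)); // Contact alice@example.com or bob@example.com
```

Because the same value always maps to the same placeholder, the model can still reason about which records refer to the same person without seeing the address itself.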

Skills System

Save successful code patterns as reusable skills:

Skills are stored in the skills/ directory and can be discovered via filesystem exploration.

Session State & Workspace

Persist state across agent sessions:

State is stored in the workspace/ directory.

Monitoring & Metrics

Prometheus Metrics

Returns metrics including:

mcp_tool_calls_total - Total tool calls by backend and tool

mcp_tool_call_duration_seconds - Tool call latency histogram

mcp_tool_errors_total - Error count by backend

mcp_cache_hits_total / mcp_cache_misses_total - Cache performance

mcp_active_connections - Active client connections

JSON Metrics

Caching

Tool results are cached using an LRU cache with TTL:
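For readers unfamiliar with the technique, an LRU cache with TTL can be sketched in a few lines using a Map, which preserves insertion order. The class below is a generic illustration, not the gateway's cache; the class name, constructor parameters, and key format are assumptions.

```typescript
// Sketch only: a generic LRU + TTL cache, not the gateway's implementation.
class LruTtlCache<V> {
  private entries = new Map<string, { value: V; expiresAt: number }>();
  private maxEntries: number;
  private ttlMs: number;
  private now: () => number;

  constructor(maxEntries: number, ttlMs: number, now: () => number = () => Date.now()) {
    this.maxEntries = maxEntries;
    this.ttlMs = ttlMs;
    this.now = now;
  }

  get(key: string): V | undefined {
    const entry = this.entries.get(key);
    if (entry === undefined) return undefined;
    if (entry.expiresAt <= this.now()) {
      this.entries.delete(key); // expired: treat as a miss
      return undefined;
    }
    // Re-insert so this key becomes the most recently used.
    this.entries.delete(key);
    this.entries.set(key, entry);
    return entry.value;
  }

  set(key: string, value: V): void {
    this.entries.delete(key);
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
    if (this.entries.size > this.maxEntries) {
      // The first key in insertion order is the least recently used.
      const oldest = this.entries.keys().next().value;
      if (oldest !== undefined) this.entries.delete(oldest);
    }
  }
}

// One possible cache key: tool name plus serialized arguments.
function cacheKey(tool: string, args: unknown): string {
  return `${tool}:${JSON.stringify(args)}`;
}
```

The injectable clock in the constructor exists only to make expiry testable; in normal use the default Date.now() clock applies.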

Token Efficiency Architecture

MCP Gateway implements a multi-layered approach to minimize token usage at every stage of AI agent interactions.

Layer 1: Progressive Tool Disclosure (85% Reduction)

Traditional MCP clients load all tool schemas upfront. With 300+ tools, this can consume 77,000+ tokens before any work begins.

How it works:

Layer 2: Smart Result Filtering (60-80% Reduction)

Large tool results can consume thousands of tokens. Smart filtering is enabled by default.
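A rough sketch of what such filtering does to a result set is shown below. The maxRows and fields option names appear earlier in this README; the return shape and the function name are assumptions made for illustration.

```typescript
// Sketch only: the FilteredResult shape is hypothetical.
type Row = Record<string, unknown>;

interface FilterOptions {
  maxRows?: number; // keep at most this many rows
  fields?: string[]; // keep only these columns
}

interface FilteredResult {
  rows: Row[];
  totalRows: number;
  truncated: boolean;
}

function filterResult(rows: Row[], options: FilterOptions = {}): FilteredResult {
  const limit = options.maxRows ?? rows.length;
  const limited = rows.slice(0, limit);
  const fields = options.fields;
  const projected = fields
    ? limited.map((row) => {
        const picked: Row = {};
        for (const f of fields) {
          if (f in row) picked[f] = row[f];
        }
        return picked;
      })
    : limited;
  // Report the original size so the model knows data was trimmed.
  return { rows: projected, totalRows: rows.length, truncated: rows.length > limited.length };
}
```

Returning totalRows and truncated alongside the trimmed rows lets the agent decide whether to ask for more data or switch to an aggregation.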

Layer 3: Server-Side Aggregations

Instead of fetching raw data and processing client-side, compute aggregations in the gateway:

Available operations: count, sum, avg, min, max, groupBy, distinct

Layer 4: Code Execution Batching

Execute multiple operations in a single round-trip. Results are processed server-side; only console.log output returns.

Layer 5: Skills (95%+ Reduction)

Skills eliminate prompt engineering entirely for recurring tasks:

Layer 6: Result Caching

Identical queries hit the LRU cache instead of re-executing:

Layer 7: PII Tokenization

Sensitive data never enters model context while still flowing between tools:

Layer 8: Response Optimization (20-40% Reduction)

Automatically strip default/empty values from all responses:

Strips: null, undefined, empty strings "", empty arrays [], empty objects {}
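The stripping rule can be sketched as a recursive function. The set of values removed matches the list above; how nested containers and array elements are treated is an assumption of this sketch: objects that become empty after stripping are dropped, while array elements are cleaned but never removed, so positions are preserved.

```typescript
// Sketch only: nested-container handling is an assumption, see lead-in.
function isEmptyValue(value: unknown): boolean {
  if (value === null || value === undefined || value === "") return true;
  if (Array.isArray(value)) return value.length === 0;
  if (typeof value === "object") return Object.keys(value as object).length === 0;
  return false; // 0 and false are real values and are kept
}

function stripEmpty(value: unknown): unknown {
  if (Array.isArray(value)) {
    // Clean each element but keep array length and order intact.
    return value.map((item) => stripEmpty(item));
  }
  if (value !== null && typeof value === "object") {
    const source = value as Record<string, unknown>;
    const cleaned: Record<string, unknown> = {};
    for (const key of Object.keys(source)) {
      const child = stripEmpty(source[key]);
      if (!isEmptyValue(child)) cleaned[key] = child;
    }
    return cleaned;
  }
  return value;
}

console.log(JSON.stringify(stripEmpty({ id: 1, name: "", tags: [], note: null })));
// {"id":1}
```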

Layer 9: Session Context Cache (Very High Reduction)

Tracks what schemas and data have been sent in the conversation to avoid resending:

Layer 10: Schema Deduplication (Up to 90% Reduction)

Many tools share identical schemas. Reference by hash instead of duplicating:
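One way to picture hash-based deduplication: serialize each schema with sorted keys, hash the result, store each distinct schema once, and let tools point at it by hash. The sketch below uses a simple FNV-1a hash as a stand-in so the example has no dependencies; the hash function the gateway actually uses is not stated in this document, and all names here are hypothetical.

```typescript
// Sketch only: FNV-1a is a stand-in hash; names and shapes are hypothetical.
interface Tool {
  name: string;
  inputSchema: unknown;
}

interface DedupedTools {
  schemas: Record<string, unknown>; // hash -> schema, stored once
  tools: { name: string; schemaRef: string }[];
}

// Serialize with sorted keys so key order does not change the hash.
function stableStringify(value: unknown): string {
  if (Array.isArray(value)) return "[" + value.map(stableStringify).join(",") + "]";
  if (value !== null && typeof value === "object") {
    const obj = value as Record<string, unknown>;
    const parts = Object.keys(obj)
      .sort()
      .map((k) => JSON.stringify(k) + ":" + stableStringify(obj[k]));
    return "{" + parts.join(",") + "}";
  }
  return JSON.stringify(value);
}

// 32-bit FNV-1a, returned as 8 hex characters.
function fnv1a(text: string): string {
  let hash = 0x811c9dc5;
  for (let i = 0; i < text.length; i++) {
    hash ^= text.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return ("0000000" + hash.toString(16)).slice(-8);
}

function dedupeSchemas(tools: Tool[]): DedupedTools {
  const result: DedupedTools = { schemas: {}, tools: [] };
  for (const tool of tools) {
    const ref = fnv1a(stableStringify(tool.inputSchema));
    if (!(ref in result.schemas)) result.schemas[ref] = tool.inputSchema;
    result.tools.push({ name: tool.name, schemaRef: ref });
  }
  return result;
}
```

A production version would want a collision-resistant hash; the structure of the output, one schema table plus short references, is the part that saves tokens.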

Layer 11: Micro-Schema Mode (60-70% Reduction)

Ultra-compact schema representation using abbreviated types:

Layer 12: Delta Responses (90%+ Reduction)

For repeated queries or polling, send only changes since last call:
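The idea can be sketched as a diff between the previous and current result sets. This illustration assumes every row carries an id field to match on, which is an assumption of the sketch rather than a documented requirement; the Delta shape and function name are likewise hypothetical.

```typescript
// Sketch only: assumes rows are keyed by an "id" field.
interface Row {
  id: string | number;
  [key: string]: unknown;
}

interface Delta {
  added: Row[];
  changed: Row[];
  removedIds: (string | number)[];
}

function computeDelta(previous: Row[], current: Row[]): Delta {
  // Index the previous rows by id, keeping a serialized copy for comparison.
  const previousById = new Map<string | number, string>();
  for (const row of previous) previousById.set(row.id, JSON.stringify(row));

  const delta: Delta = { added: [], changed: [], removedIds: [] };
  const seen = new Set<string | number>();

  for (const row of current) {
    seen.add(row.id);
    const before = previousById.get(row.id);
    if (before === undefined) delta.added.push(row);
    else if (before !== JSON.stringify(row)) delta.changed.push(row);
  }
  for (const row of previous) {
    if (!seen.has(row.id)) delta.removedIds.push(row.id);
  }
  return delta;
}
```

When nothing changed between polls, the delta is three empty arrays, which is where the large savings for polling workloads come from. Note that comparing serialized rows is sensitive to key order, so a real implementation would likely normalize rows first.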

Layer 13: Context Window Tracking (Safety)

Monitor context usage to prevent overflow and get optimization recommendations:

Layer 14: Auto-Summarization (60-90% Reduction)

Automatically extract insights from large results:

Layer 15: Query Planning (30-50% Improvement)

Analyze code before execution to detect optimization opportunities:

Combined Token Savings

| Layer | Feature | Typical Savings |
|---|---|---|
| 1 | Progressive Disclosure | 85% on tool schemas |
| 2 | Smart Filtering | 60-80% on results |
| 3 | Aggregations | 90%+ on analytics |
| 4 | Code Batching | 60-80% fewer round-trips |
| 5 | Skills | 95%+ on recurring tasks |
| 6 | Caching | 100% on repeated queries |
| 7 | PII Tokenization | Prevents data leakage |
| 8 | Response Optimization | 20-40% on all responses |
| 9 | Session Context | Very high on multi-turn |
| 10 | Schema Deduplication | Up to 90% on similar tools |
| 11 | Micro-Schema Mode | 60-70% on schema definitions |
| 12 | Delta Responses | 90%+ on repeated/polling queries |
| 13 | Context Tracking | Prevents context overflow |
| 14 | Auto-Summarization | 60-90% on large datasets |
| 15 | Query Planning | 30-50% through optimization |

Real-world impact: A typical 10-minute agent session with 50 tool calls drops from ~500,000 tokens to ~25,000 tokens.

Tips for AI Agents

When using MCP Gateway with AI agents (Claude, GPT, etc.), follow these best practices for efficient token usage:

1. Start with Tool Discovery

2. Use Code Execution for Complex Workflows

3. Filter Large Results

4. Use Aggregations

5. Save Reusable Patterns as Skills

Invoking Cipher from Any IDE

Cipher exposes the cipher_ask_cipher tool via MCP. To ensure memories persist across IDEs and sessions, always include the .

Tool Schema

Quick Reference

Action | Message Format |

Recall context |

|

Store decision |

|

Store bug fix |

|

Store milestone |

|

Store pattern |

|

Store blocker |

|

Search memory |

|

Session end |

|

IDE Configuration Examples

Add these instructions to your IDE's rules file so the AI automatically uses Cipher.

Claude Code can use a SessionStart hook for automatic recall. For manual configuration:

Why projectPath Matters

The projectPath parameter is critical for:

Cross-IDE Filtering: Memories are scoped to projects, so switching from Cursor to Claude Code maintains context.

Avoiding Pollution: Without projectPath, memories from different projects mix together.

Team Sync: Workspace memory features rely on consistent project paths.

Common Mistake: Using {cwd} or just the project name. These don't resolve correctly. Always use the full absolute path like /path/to/your/project.

macOS Auto-Start (LaunchAgent)

To run the gateway automatically on login:

Copy and customize the example plist file:

Update these paths in the plist file:

/path/to/mcp-gateway → Your actual installation path

/usr/local/bin/node → Your Node.js path (run which node to find it)

Load the LaunchAgent:

Windows Setup

Running the Gateway

Windows Auto-Start (Task Scheduler)

To run the gateway automatically on Windows startup:

Open Task Scheduler (taskschd.msc)

Click Create Task (not Basic Task)

Configure:

General tab: Name it MCP Gateway, check "Run whether user is logged on or not"

Triggers tab: Add trigger → "At startup"

Actions tab: Add action:

Program: node (or full path like C:\Program Files\nodejs\node.exe)

Arguments: dist/index.js

Start in: C:\path\to\mcp-gateway

Settings tab: Check "Allow task to be run on demand"

Alternatively, use the start.example.sh pattern adapted for PowerShell:

Windows Service (NSSM)

For a proper Windows service, use NSSM:

Development

License

MIT