
# Project Structure and Function Definitions

This file contains the project directory structure and function definitions to help LLMs understand the codebase.

## Directory Structure

```
mcp-agent/
├── .vscode/
│   ├── extensions.json
│   ├── settings.json
├── schema/
│   ├── mcp-agent.config.schema.json
├── src/
│   ├── mcp_agent/
│   │   ├── agents/
│   │   │   ├── __init__.py
│   │   │   ├── agent.py
│   │   ├── cli/
│   │   │   ├── commands/
│   │   │   │   ├── config.py
│   │   │   ├── __init__.py
│   │   │   ├── __main__.py
│   │   │   ├── main.py
│   │   │   ├── terminal.py
│   │   ├── core/
│   │   │   ├── context.py
│   │   │   ├── context_dependent.py
│   │   │   ├── decorator_app.py
│   │   │   ├── exceptions.py
│   │   ├── data/
│   │   │   ├── artificial_analysis_llm_benchmarks.json
│   │   ├── eval/
│   │   │   ├── __init__.py
│   │   ├── executor/
│   │   │   ├── temporal/
│   │   │   │   ├── __init__.py
│   │   │   │   ├── workflow_registry.py
│   │   │   │   ├── workflow_signal.py
│   │   │   ├── __init__.py
│   │   │   ├── decorator_registry.py
│   │   │   ├── executor.py
│   │   │   ├── signal_registry.py
│   │   │   ├── task_registry.py
│   │   │   ├── workflow.py
│   │   │   ├── workflow_registry.py
│   │   │   ├── workflow_signal.py
│   │   │   ├── workflow_task.py
│   │   ├── human_input/
│   │   │   ├── __init__.py
│   │   │   ├── handler.py
│   │   │   ├── types.py
│   │   ├── logging/
│   │   │   ├── __init__.py
│   │   │   ├── event_progress.py
│   │   │   ├── events.py
│   │   │   ├── json_serializer.py
│   │   │   ├── listeners.py
│   │   │   ├── logger.py
│   │   │   ├── progress_display.py
│   │   │   ├── rich_progress.py
│   │   │   ├── tracing.py
│   │   │   ├── transport.py
│   │   ├── mcp/
│   │   │   ├── __init__.py
│   │   │   ├── gen_client.py
│   │   │   ├── mcp_agent_client_session.py
│   │   │   ├── mcp_aggregator.py
│   │   │   ├── mcp_connection_manager.py
│   │   │   ├── mcp_server_registry.py
│   │   ├── server/
│   │   │   ├── app_server.py
│   │   │   ├── app_server_types.py
│   │   ├── telemetry/
│   │   │   ├── __init__.py
│   │   │   ├── usage_tracking.py
│   │   ├── utils/
│   │   │   ├── common.py
│   │   │   ├── pydantic_type_serializer.py
│   │   ├── workflows/
│   │   │   ├── embedding/
│   │   │   │   ├── __init__.py
│   │   │   │   ├── embedding_base.py
│   │   │   │   ├── embedding_cohere.py
│   │   │   │   ├── embedding_openai.py
│   │   │   ├── evaluator_optimizer/
│   │   │   │   ├── __init__.py
│   │   │   │   ├── evaluator_optimizer.py
│   │   │   ├── intent_classifier/
│   │   │   │   ├── __init__.py
│   │   │   │   ├── intent_classifier_base.py
│   │   │   │   ├── intent_classifier_embedding.py
│   │   │   │   ├── intent_classifier_embedding_cohere.py
│   │   │   │   ├── intent_classifier_embedding_openai.py
│   │   │   │   ├── intent_classifier_llm.py
│   │   │   │   ├── intent_classifier_llm_anthropic.py
│   │   │   │   ├── intent_classifier_llm_openai.py
│   │   │   ├── llm/
│   │   │   │   ├── __init__.py
│   │   │   │   ├── augmented_llm.py
│   │   │   │   ├── augmented_llm_anthropic.py
│   │   │   │   ├── augmented_llm_azure.py
│   │   │   │   ├── augmented_llm_bedrock.py
│   │   │   │   ├── augmented_llm_google.py
│   │   │   │   ├── augmented_llm_ollama.py
│   │   │   │   ├── augmented_llm_openai.py
│   │   │   │   ├── llm_selector.py
│   │   │   ├── orchestrator/
│   │   │   │   ├── __init__.py
│   │   │   │   ├── orchestrator.py
│   │   │   │   ├── orchestrator_models.py
│   │   │   │   ├── orchestrator_prompts.py
│   │   │   ├── parallel/
│   │   │   │   ├── __init__.py
│   │   │   │   ├── fan_in.py
│   │   │   │   ├── fan_out.py
│   │   │   │   ├── parallel_llm.py
│   │   │   ├── router/
│   │   │   │   ├── __init__.py
│   │   │   │   ├── router_base.py
│   │   │   │   ├── router_embedding.py
│   │   │   │   ├── router_embedding_cohere.py
│   │   │   │   ├── router_embedding_openai.py
│   │   │   │   ├── router_llm.py
│   │   │   │   ├── router_llm_anthropic.py
│   │   │   │   ├── router_llm_openai.py
│   │   │   ├── swarm/
│   │   │   │   ├── __init__.py
│   │   │   │   ├── swarm.py
│   │   │   │   ├── swarm_anthropic.py
│   │   │   │   ├── swarm_openai.py
│   │   │   ├── __init__.py
│   │   ├── __init__.py
│   │   ├── app.py
│   │   ├── config.py
│   │   ├── console.py
│   │   ├── py.typed
├── LLMS.md
├── logs.txt
├── test_output.txt
```

## Project README

<p align="center">
<img src="https://github.com/user-attachments/assets/6f4e40c4-dc88-47b6-b965-5856b69416d2" alt="Logo" width="300" />
</p>

<p align="center">
<em>Build effective agents with Model Context Protocol using simple, composable patterns.</em>
</p>

<p align="center">
<a href="https://github.com/lastmile-ai/mcp-agent/tree/main/examples" target="_blank"><strong>Examples</strong></a>
|
<a href="https://www.anthropic.com/research/building-effective-agents" target="_blank"><strong>Building Effective Agents</strong></a>
|
<a href="https://modelcontextprotocol.io/introduction" target="_blank"><strong>MCP</strong></a>
</p>

<p align="center">
<a href="https://pypi.org/project/mcp-agent/"><img src="https://img.shields.io/pypi/v/mcp-agent?color=%2334D058&label=pypi" /></a>
<a href="https://github.com/lastmile-ai/mcp-agent/issues"><img src="https://img.shields.io/github/issues-raw/lastmile-ai/mcp-agent" /></a>
<a href="https://lmai.link/discord/mcp-agent"><img src="https://shields.io/discord/1089284610329952357" alt="discord" /></a>
<img alt="Pepy Total Downloads" src="https://img.shields.io/pepy/dt/mcp-agent?label=pypi%20%7C%20downloads"/>
<a href="https://github.com/lastmile-ai/mcp-agent/blob/main/LICENSE"><img src="https://img.shields.io/pypi/l/mcp-agent" /></a>
</p>

## Overview

**`mcp-agent`** is a simple, composable framework to build agents using [Model Context Protocol](https://modelcontextprotocol.io/introduction).

**Inspiration**: Anthropic announced 2 foundational updates for AI application developers:

1. [Model Context Protocol](https://www.anthropic.com/news/model-context-protocol) - a standardized interface to let any software be accessible to AI assistants via MCP servers.

2. [Building Effective Agents](https://www.anthropic.com/research/building-effective-agents) - a seminal writeup on simple, composable patterns for building production-ready AI agents.

`mcp-agent` puts these two foundational pieces into an AI application framework:

1. It handles the pesky business of managing the lifecycle of MCP server connections so you don't have to.

2. It implements every pattern described in Building Effective Agents, and does so in a _composable_ way, allowing you to chain these patterns together.

3. **Bonus**: It implements [OpenAI's Swarm](https://github.com/openai/swarm) pattern for multi-agent orchestration, but in a model-agnostic way.

Altogether, this is the simplest and easiest way to build robust agent applications. Much like MCP, this project is in early development.

We welcome all kinds of [contributions](/CONTRIBUTING.md), feedback, and help in growing this into a new standard.

## Get Started

We recommend using [uv](https://docs.astral.sh/uv/) to manage your Python projects:

```bash
uv add "mcp-agent"
```

Alternatively:

```bash
pip install mcp-agent
```

### Quickstart

> [!TIP]
> The [`examples`](/examples) directory has several example applications to get started with.
> To run an example, clone this repo, then:
>
> ```bash
> cd examples/basic/mcp_basic_agent # Or any other example
> cp mcp_agent.secrets.yaml.example mcp_agent.secrets.yaml # Update API keys
> uv run main.py
> ```

Here is a basic "finder" agent that uses the fetch and filesystem servers to look up a file, read a blog and write a tweet. [Example link](./examples/basic/mcp_basic_agent/):

<details open>

<summary>finder_agent.py</summary>

```python
import asyncio
import os

from mcp_agent.app import MCPApp
from mcp_agent.agents.agent import Agent
from mcp_agent.workflows.llm.augmented_llm_openai import OpenAIAugmentedLLM

app = MCPApp(name="hello_world_agent")

async def example_usage():
    async with app.run() as mcp_agent_app:
        logger = mcp_agent_app.logger
        # This agent can read the filesystem or fetch URLs
        finder_agent = Agent(
            name="finder",
            instruction="""You can read local files or fetch URLs.
                Return the requested information when asked.""",
            server_names=["fetch", "filesystem"], # MCP servers this Agent can use
        )

        async with finder_agent:
            # Automatically initializes the MCP servers and adds their tools for LLM use
            tools = await finder_agent.list_tools()
            logger.info("Tools available:", data=tools)

            # Attach an OpenAI LLM to the agent (defaults to GPT-4o)
            llm = await finder_agent.attach_llm(OpenAIAugmentedLLM)

            # This will perform a file lookup and read using the filesystem server
            result = await llm.generate_str(
                message="Show me what's in README.md verbatim"
            )
            logger.info(f"README.md contents: {result}")

            # Uses the fetch server to fetch the content from URL
            result = await llm.generate_str(
                message="Print the first two paragraphs from https://www.anthropic.com/research/building-effective-agents"
            )
            logger.info(f"Blog intro: {result}")

            # Multi-turn interactions by default
            result = await llm.generate_str("Summarize that in a 128-char tweet")
            logger.info(f"Tweet: {result}")

if __name__ == "__main__":
    asyncio.run(example_usage())
```

</details>

<details>

<summary>mcp_agent.config.yaml</summary>

```yaml
execution_engine: asyncio
logger:
  transports: [console] # You can use [file, console] for both
  level: debug
  path: "logs/mcp-agent.jsonl" # Used for file transport
  # For dynamic log filenames:
  # path_settings:
  #   path_pattern: "logs/mcp-agent-{unique_id}.jsonl"
  #   unique_id: "timestamp" # Or "session_id"
  #   timestamp_format: "%Y%m%d_%H%M%S"

mcp:
  servers:
    fetch:
      command: "uvx"
      args: ["mcp-server-fetch"]
    filesystem:
      command: "npx"
      args:
        [
          "-y",
          "@modelcontextprotocol/server-filesystem",
          "<add_your_directories>",
        ]

openai:
  # Secrets (API keys, etc.) are stored in an mcp_agent.secrets.yaml file which can be gitignored
  default_model: gpt-4o
```

</details>

<details>

<summary>Agent output</summary>

<img width="2398" alt="Image" src="https://github.com/user-attachments/assets/eaa60fdf-bcc6-460b-926e-6fa8534e9089" />

</details>

## Table of Contents

- [Why use mcp-agent?](#why-use-mcp-agent)

- [Example Applications](#examples)

- [Claude Desktop](#claude-desktop)

- [Streamlit](#streamlit)

- [Gmail Agent](#gmail-agent)

- [RAG](#simple-rag-chatbot)

- [Marimo](#marimo)

- [Python](#python)

- [Swarm (CLI)](#swarm)

- [Core Concepts](#core-components)

- [Workflows Patterns](#workflows)

- [Augmented LLM](#augmentedllm)

- [Parallel](#parallel)

- [Router](#router)

- [Intent-Classifier](#intentclassifier)

- [Orchestrator-Workers](#orchestrator-workers)

- [Evaluator-Optimizer](#evaluator-optimizer)

- [OpenAI Swarm](#swarm-1)

- [Advanced](#advanced)

- [Composing multiple workflows](#composability)

- [Signaling and Human input](#signaling-and-human-input)

- [App Config](#app-config)

- [MCP Server Management](#mcp-server-management)

- [Contributing](#contributing)

- [Roadmap](#roadmap)

- [FAQs](#faqs)

## Why use `mcp-agent`?

There are too many AI frameworks out there already. But `mcp-agent` is the only one that is purpose-built for a shared protocol - [MCP](https://modelcontextprotocol.io/introduction). It is also the most lightweight, and is closer to an agent pattern library than a framework.

As [more services become MCP-aware](https://github.com/punkpeye/awesome-mcp-servers), you can use mcp-agent to build robust and controllable AI agents that can leverage those services out-of-the-box.

## Examples

Before we go into the core concepts of mcp-agent, let's show what you can build with it.

In short, you can build any kind of AI application with mcp-agent: multi-agent collaborative workflows, human-in-the-loop workflows, RAG pipelines and more.

### Claude Desktop

You can integrate mcp-agent apps into MCP clients like Claude Desktop.

#### mcp-agent server

This app wraps an mcp-agent application inside an MCP server, and exposes that server to Claude Desktop.

The app exposes agents and workflows that Claude Desktop can invoke in service of the user's request.

https://github.com/user-attachments/assets/7807cffd-dba7-4f0c-9c70-9482fd7e0699

This demo shows a multi-agent evaluation task where each agent evaluates aspects of an input poem, and then an aggregator summarizes their findings into a final response.

**Details**: Starting from a user's request over text, the application:

- dynamically defines agents to do the job

- uses the appropriate workflow to orchestrate those agents (in this case the Parallel workflow)

**Link to code**: [examples/basic/mcp_agent_server](./examples/basic/mcp_agent_server)

> [!NOTE]
> Huge thanks to [Jerron Lim (@StreetLamb)](https://github.com/StreetLamb)
> for developing and contributing this example!

### Streamlit

You can deploy mcp-agent apps using Streamlit.

#### Gmail agent

This app performs read and write actions on Gmail using text prompts, e.g. reading, deleting, and sending emails, or marking them as read/unread.

It uses an MCP server for Gmail.

https://github.com/user-attachments/assets/54899cac-de24-4102-bd7e-4b2022c956e3

**Link to code**: [gmail-mcp-server](https://github.com/jasonsum/gmail-mcp-server/blob/add-mcp-agent-streamlit/streamlit_app.py)

> [!NOTE]
> Huge thanks to [Jason Summer (@jasonsum)](https://github.com/jasonsum)
> for developing and contributing this example!

#### Simple RAG Chatbot

This app uses a Qdrant vector database (via an MCP server) to do Q&A over a corpus of text.

https://github.com/user-attachments/assets/f4dcd227-cae9-4a59-aa9e-0eceeb4acaf4

**Link to code**: [examples/usecases/streamlit_mcp_rag_agent](./examples/usecases/streamlit_mcp_rag_agent/)

> [!NOTE]
> Huge thanks to [Jerron Lim (@StreetLamb)](https://github.com/StreetLamb)
> for developing and contributing this example!

### Marimo

[Marimo](https://github.com/marimo-team/marimo) is a reactive Python notebook that replaces Jupyter and Streamlit.

Here's the "file finder" agent from [Quickstart](#quickstart) implemented in Marimo:

<img src="https://github.com/user-attachments/assets/139a95a5-e3ac-4ea7-9c8f-bad6577e8597" width="400"/>

**Link to code**: [examples/usecases/marimo_mcp_basic_agent](./examples/usecases/marimo_mcp_basic_agent/)

> [!NOTE]
> Huge thanks to [Akshay Agrawal (@akshayka)](https://github.com/akshayka)
> for developing and contributing this example!

### Python

You can write mcp-agent apps as Python scripts or Jupyter notebooks.

#### Swarm

This example demonstrates a multi-agent setup for handling different customer service requests in an airline context using the Swarm workflow pattern. The agents can triage requests, handle flight modifications, cancellations, and lost baggage cases.

https://github.com/user-attachments/assets/b314d75d-7945-4de6-965b-7f21eb14a8bd

**Link to code**: [examples/workflows/workflow_swarm](./examples/workflows/workflow_swarm/)

## Core Components

The following are the building blocks of the mcp-agent framework:

- **[MCPApp](./src/mcp_agent/app.py)**: global state and app configuration

- **MCP server management**: [`gen_client`](./src/mcp_agent/mcp/gen_client.py) and [`MCPConnectionManager`](./src/mcp_agent/mcp/mcp_connection_manager.py) to easily connect to MCP servers.

- **[Agent](./src/mcp_agent/agents/agent.py)**: An Agent is an entity that has access to a set of MCP servers and exposes them to an LLM as tool calls. It has a name and purpose (instruction).

- **[AugmentedLLM](./src/mcp_agent/workflows/llm/augmented_llm.py)**: An LLM that is enhanced with tools provided from a collection of MCP servers. Every Workflow pattern described below is an `AugmentedLLM` itself, allowing you to compose and chain them together.

Everything in the framework is a derivative of these core capabilities.

## Workflows

mcp-agent provides implementations for every pattern in Anthropic’s [Building Effective Agents](https://www.anthropic.com/research/building-effective-agents), as well as the OpenAI [Swarm](https://github.com/openai/swarm) pattern.

Each pattern is model-agnostic, and exposed as an `AugmentedLLM`, making everything very composable.

### AugmentedLLM

[AugmentedLLM](./src/mcp_agent/workflows/llm/augmented_llm.py) is an LLM that has access to MCP servers and functions via Agents.

LLM providers implement the AugmentedLLM interface to expose 3 functions:

- `generate`: Generate message(s) given a prompt, possibly over multiple iterations and making tool calls as needed.

- `generate_str`: Calls `generate` and returns result as a string output.

- `generate_structured`: Uses [Instructor](https://github.com/instructor-ai/instructor) to return the generated result as a Pydantic model.

Additionally, `AugmentedLLM` has memory to keep track of long- or short-term history.

<details>

<summary>Example</summary>

```python
from mcp_agent.agents.agent import Agent
from mcp_agent.workflows.llm.augmented_llm_anthropic import AnthropicAugmentedLLM

# Runs inside an `async with app.run() as mcp_agent_app:` block (see Quickstart),
# where `logger = mcp_agent_app.logger`
finder_agent = Agent(
    name="finder",
    instruction="You are an agent with filesystem + fetch access. Return the requested file or URL contents.",
    server_names=["fetch", "filesystem"],
)

async with finder_agent:
    llm = await finder_agent.attach_llm(AnthropicAugmentedLLM)

    result = await llm.generate_str(
        message="Print the first 2 paragraphs of https://www.anthropic.com/research/building-effective-agents",
        # Can override model, tokens and other defaults
    )
    logger.info(f"Result: {result}")

    # Multi-turn conversation
    result = await llm.generate_str(
        message="Summarize those paragraphs in a 128 character tweet",
    )
    logger.info(f"Result: {result}")
```

</details>

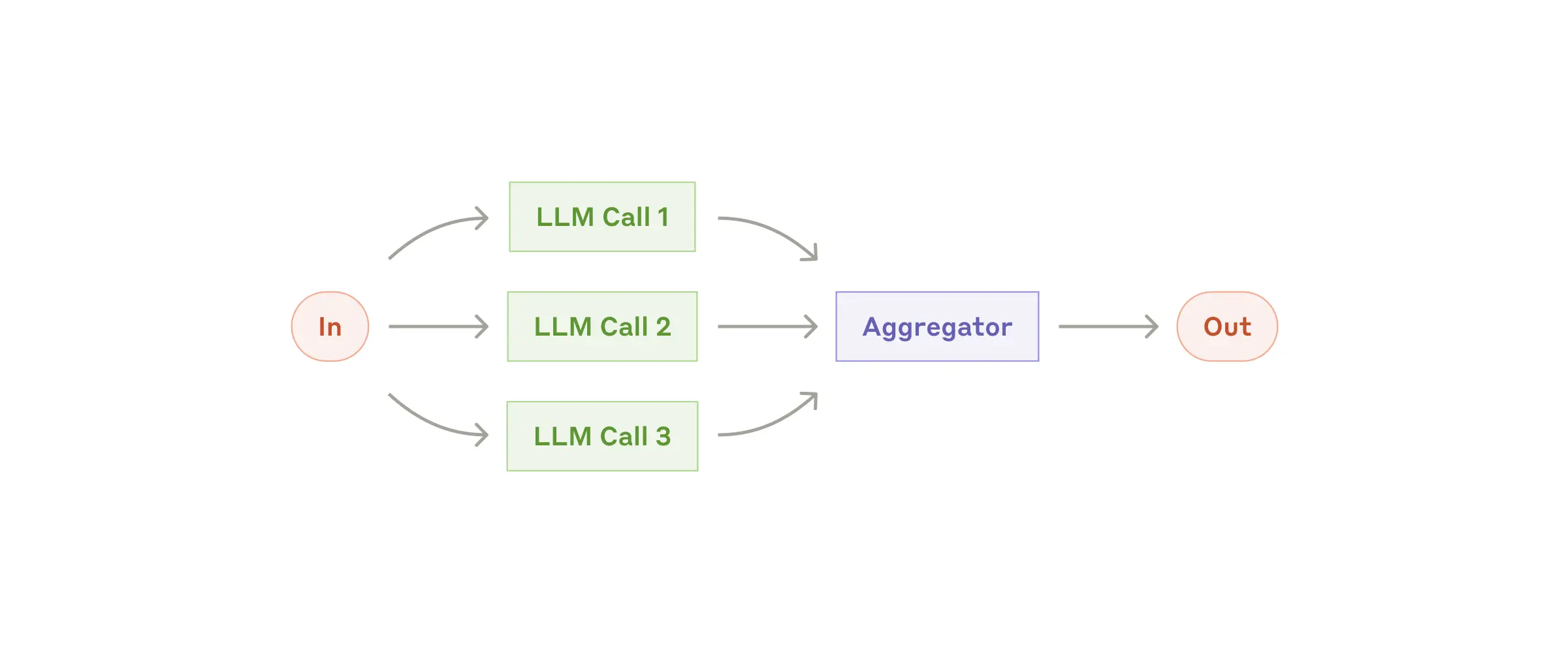
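The three-method contract above can be illustrated without any provider SDK. The sketch below is a hypothetical stand-in (`EchoLLM` is not part of mcp-agent, and it skips `generate_structured`): `generate` records each turn in a `history` list and returns messages, while `generate_str` flattens them into text. A real provider would call its model and MCP tools inside `generate`.

```python
import asyncio
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class EchoLLM:
    """Hypothetical stand-in for an AugmentedLLM provider; makes no model calls."""

    history: List[Dict[str, str]] = field(default_factory=list)  # conversation memory

    async def generate(self, message: str) -> List[Dict[str, str]]:
        # A real provider would loop over model calls and MCP tool calls here.
        self.history.append({"role": "user", "content": message})
        reply = {"role": "assistant", "content": f"echo({len(self.history)}): {message}"}
        self.history.append(reply)
        return [reply]

    async def generate_str(self, message: str) -> str:
        # Same as generate, flattened into a single string.
        responses = await self.generate(message)
        return "\n".join(r["content"] for r in responses)


async def demo() -> List[str]:
    llm = EchoLLM()
    first = await llm.generate_str("Print the first 2 paragraphs")
    second = await llm.generate_str("Summarize those paragraphs")  # history now holds turn 1
    return [first, second]


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

Because the second call sees the earlier turns in `history`, this mirrors the multi-turn behavior shown in the example above.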

### [Parallel](src/mcp_agent/workflows/parallel/parallel_llm.py)

Fan out tasks to multiple sub-agents and fan in the results. Each subtask is an AugmentedLLM, as is the overall Parallel workflow, meaning each subtask can optionally be a more complex workflow itself.

> [!NOTE]
>
> **[Link to full example](examples/workflows/workflow_parallel/main.py)**

<details>

<summary>Example</summary>

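```python
from mcp_agent.agents.agent import Agent
from mcp_agent.workflows.llm.augmented_llm import RequestParams
from mcp_agent.workflows.llm.augmented_llm_openai import OpenAIAugmentedLLM
from mcp_agent.workflows.parallel.parallel_llm import ParallelLLM

proofreader = Agent(name="proofreader", instruction="Review grammar...")
fact_checker = Agent(name="fact_checker", instruction="Check factual consistency...")
style_enforcer = Agent(name="style_enforcer", instruction="Enforce style guidelines...")
grader = Agent(name="grader", instruction="Combine feedback into a structured report.")

parallel = ParallelLLM(
    fan_in_agent=grader,  # aggregates the fan-out results
    fan_out_agents=[proofreader, fact_checker, style_enforcer],  # run on the same input
    llm_factory=OpenAIAugmentedLLM,
)

result = await parallel.generate_str("Student short story submission: ...", RequestParams(model="gpt-4o"))
```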

</details>

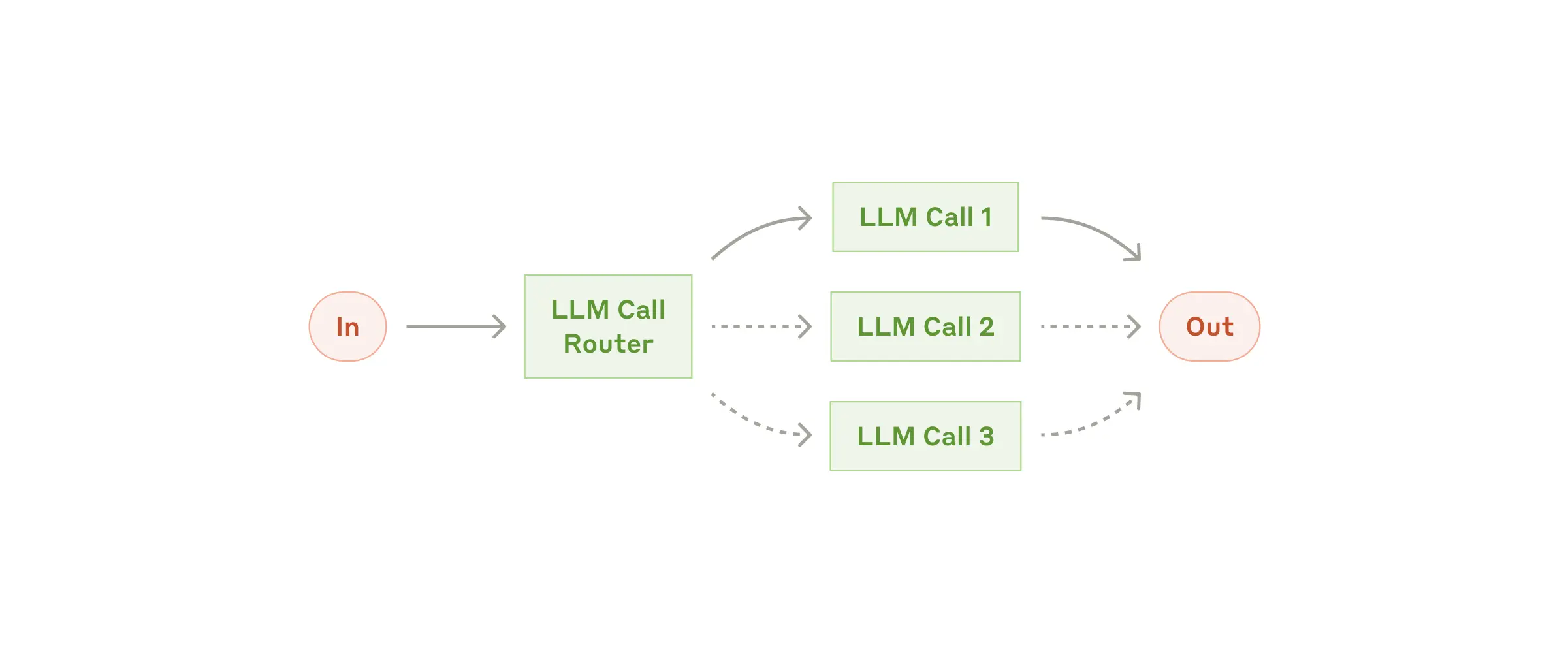
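The fan-out/fan-in idea can be sketched with plain `asyncio`: `asyncio.gather` runs the workers concurrently and returns their results in input order, and a single aggregator combines them. The worker functions here are hypothetical placeholders for the proofreader, fact-checker, and style-enforcer agents; this is not `ParallelLLM`'s actual implementation.

```python
import asyncio
from typing import Awaitable, Callable, Dict

Worker = Callable[[str], Awaitable[str]]


# Hypothetical workers standing in for the fan-out agents.
async def proofread(text: str) -> str:
    return f"grammar: ok ({len(text.split())} words)"


async def fact_check(text: str) -> str:
    return "facts: no issues found"


async def enforce_style(text: str) -> str:
    return "style: follows guide"


async def grade(feedback: Dict[str, str]) -> str:
    # Fan-in: merge every worker's output into one report.
    return "\n".join(f"[{name}] {note}" for name, note in feedback.items())


async def fan_out_fan_in(text: str, workers: Dict[str, Worker]) -> str:
    # Fan-out: run all workers concurrently on the same input.
    outputs = await asyncio.gather(*(worker(text) for worker in workers.values()))
    return await grade(dict(zip(workers.keys(), outputs)))
```

Since the aggregator only sees a mapping of labelled results, any worker could itself be a more complex workflow, which is the point made above.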

### [Router](src/mcp_agent/workflows/router/)

Given an input, route to the `top_k` most relevant categories. A category can be an Agent, an MCP server or a regular function.

mcp-agent provides several router implementations, including:

- [`EmbeddingRouter`](src/mcp_agent/workflows/router/router_embedding.py): uses embedding models for classification

- [`LLMRouter`](src/mcp_agent/workflows/router/router_llm.py): uses LLMs for classification

> [!NOTE]
>
> **[Link to full example](examples/workflows/workflow_router/main.py)**

<details>

<summary>Example</summary>

```python
from mcp_agent.agents.agent import Agent
from mcp_agent.workflows.llm.augmented_llm_openai import OpenAIAugmentedLLM
from mcp_agent.workflows.router.router_llm import LLMRouter

def print_hello_world():
    print("Hello, world!")

finder_agent = Agent(name="finder", server_names=["fetch", "filesystem"])
writer_agent = Agent(name="writer", server_names=["filesystem"])

llm = OpenAIAugmentedLLM()
router = LLMRouter(
    llm=llm,
    agents=[finder_agent, writer_agent],
    functions=[print_hello_world],
)

results = await router.route(  # Also available: route_to_agent, route_to_server
    request="Find and print the contents of README.md verbatim",
    top_k=1,
)
chosen_agent = results[0].result
async with chosen_agent:
    ...
```

</details>
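To show what `top_k` routing means, here is a self-contained sketch in which each category carries a description and a relevance score ranks them. The word-overlap `score` function is a hypothetical stand-in for the LLM or embedding scoring that `LLMRouter` and `EmbeddingRouter` perform, and `Category`/`RouteResult` are illustrative types, not mcp-agent's.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Category:
    name: str
    description: str
    target: object  # an agent, a server name, or a plain function


@dataclass
class RouteResult:
    result: object
    score: float


def score(request: str, description: str) -> float:
    # Hypothetical relevance score: share of description words found in the request.
    request_words = set(request.lower().split())
    description_words = set(description.lower().split())
    if not description_words:
        return 0.0
    return len(request_words & description_words) / len(description_words)


def route(request: str, categories: List[Category], top_k: int = 1) -> List[RouteResult]:
    # Rank every category by relevance and keep only the best top_k.
    ranked = sorted(
        (RouteResult(c.target, score(request, c.description)) for c in categories),
        key=lambda r: r.score,
        reverse=True,
    )
    return ranked[:top_k]


def print_hello_world() -> None:
    print("Hello, world!")


categories = [
    Category("finder", "find and read files or fetch urls", "finder_agent"),
    Category("writer", "write content to files", "writer_agent"),
    Category("hello", "print a hello world greeting", print_hello_world),
]
```

As in the example above, the caller reads `results[0].result` and then uses whatever target was chosen.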

### [IntentClassifier](src/mcp_agent/workflows/intent_classifier/)

A close sibling of Router, the Intent Classifier pattern identifies the `top_k` Intents that most closely match a given input.

Just like a Router, mcp-agent provides both an [embedding](src/mcp_agent/workflows/intent_classifier/intent_classifier_embedding.py) and [LLM-based](src/mcp_agent/workflows/intent_classifier/intent_classifier_llm.py) intent classifier.

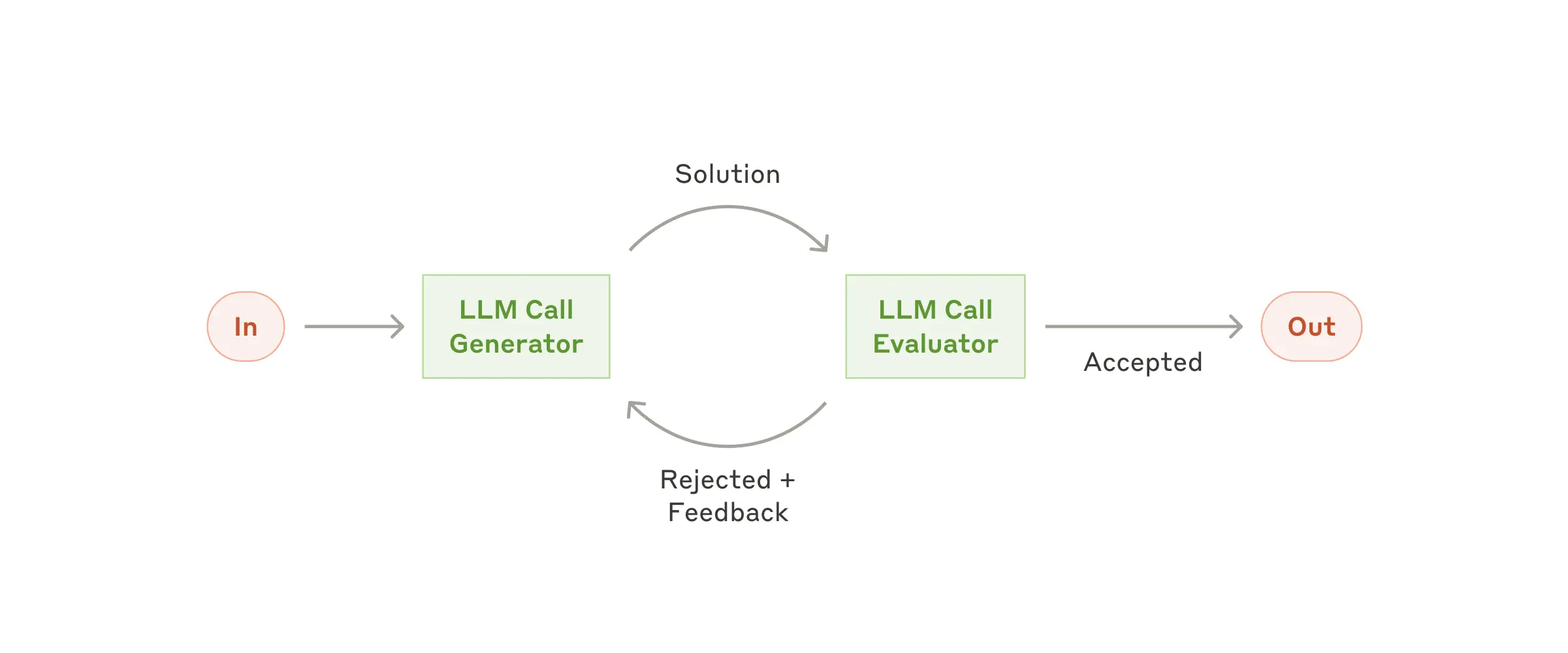
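An embedding-based classifier typically compares the input's embedding with embeddings of example phrases for each intent. The sketch below assumes that approach, using cosine similarity over hand-written toy vectors; in practice the vectors would come from an embedding model such as the OpenAI or Cohere ones under `workflows/embedding/`. The intent names and vectors are hypothetical.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical toy "embeddings"; a real classifier would get these from an embedding model.
INTENT_EXAMPLES: Dict[str, List[List[float]]] = {
    "greeting": [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1]],
    "help": [[0.1, 0.9, 0.1], [0.2, 0.8, 0.0]],
    "farewell": [[0.0, 0.1, 0.9]],
}


def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def classify(query: List[float], top_k: int = 1) -> List[Tuple[str, float]]:
    # Score each intent by its closest example, then keep the top_k intents.
    scores = {
        intent: max(cosine(query, example) for example in examples)
        for intent, examples in INTENT_EXAMPLES.items()
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)[:top_k]
```

An LLM-based classifier would replace `cosine` with a model judgment but keep the same "rank, then take `top_k`" shape.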

### [Evaluator-Optimizer](src/mcp_agent/workflows/evaluator_optimizer/evaluator_optimizer.py)

One LLM (the “optimizer”) refines a response while another (the “evaluator”) critiques it, repeating until the response meets the required quality rating.

> [!NOTE]
>
> **[Link to full example](examples/workflows/workflow_evaluator_optimizer/main.py)**

<details>

<summary>Example</summary>

```python
from mcp_agent.agents.agent import Agent
from mcp_agent.workflows.evaluator_optimizer.evaluator_optimizer import (
    EvaluatorOptimizerLLM,
    QualityRating,
)
from mcp_agent.workflows.llm.augmented_llm_openai import OpenAIAugmentedLLM

optimizer = Agent(name="cover_letter_writer", server_names=["fetch"], instruction="Generate a cover letter ...")
evaluator = Agent(name="critiquer", instruction="Evaluate clarity, specificity, relevance...")

eo_llm = EvaluatorOptimizerLLM(
    optimizer=optimizer,
    evaluator=evaluator,
    llm_factory=OpenAIAugmentedLLM,
    min_rating=QualityRating.EXCELLENT,  # Keep iterating until the minimum quality bar is reached
)

result = await eo_llm.generate_str("Write a job cover letter for an AI framework developer role at LastMile AI.")
print("Final refined cover letter:", result)
```

</details>

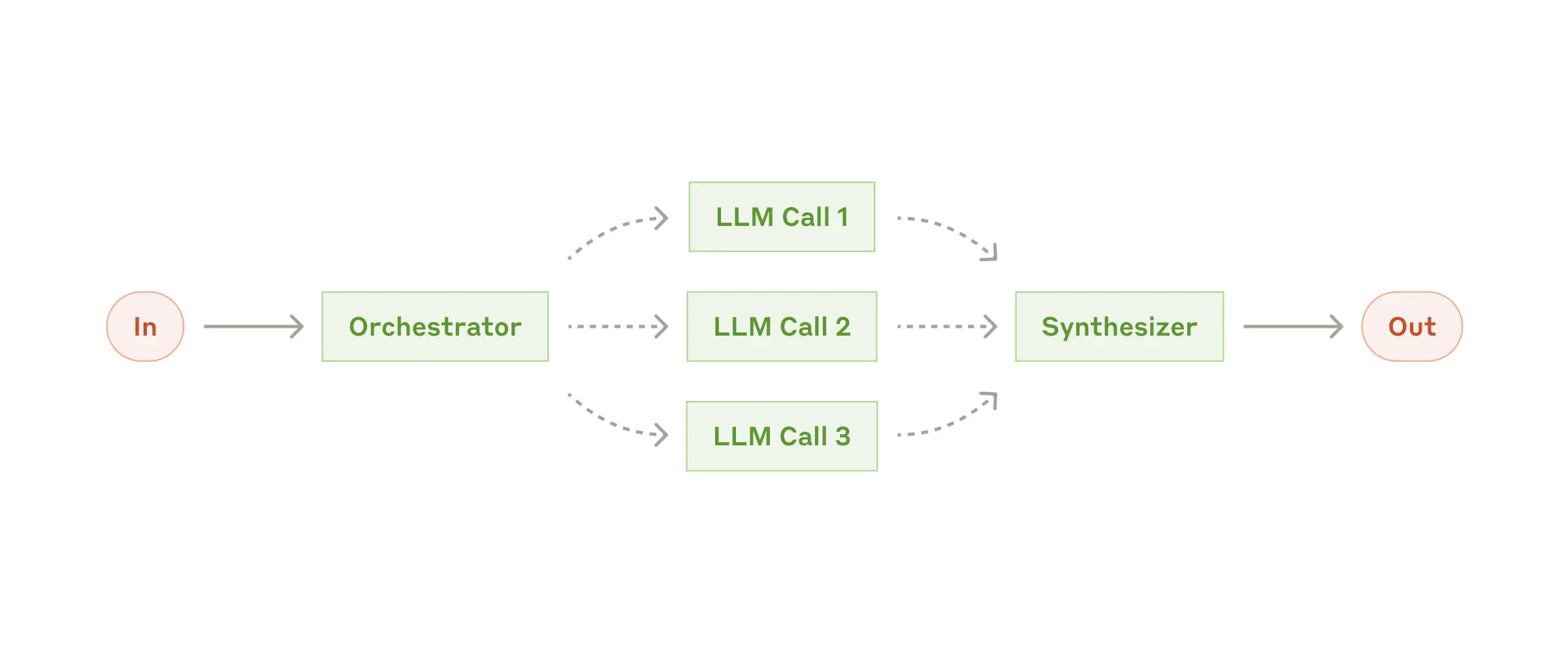
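The evaluator-optimizer loop can be sketched with plain functions. In this hypothetical version, `QualityRating` mirrors the rating names used above (their numeric values are assumed), and toy optimizer/evaluator functions replace the two LLMs so the control flow runs on its own. The `max_iterations` cap is an assumption of this sketch that guarantees the loop terminates.

```python
from enum import IntEnum
from typing import Callable, Optional, Tuple


class QualityRating(IntEnum):
    # Assumed ordering from worst to best; only the ordering matters here.
    POOR = 0
    FAIR = 1
    GOOD = 2
    EXCELLENT = 3


Optimizer = Callable[[str, Optional[str]], str]         # (draft_or_task, feedback) -> new draft
Evaluator = Callable[[str], Tuple[QualityRating, str]]  # draft -> (rating, feedback)


def evaluate_optimize(
    optimize: Optimizer,
    evaluate: Evaluator,
    task: str,
    min_rating: QualityRating = QualityRating.EXCELLENT,
    max_iterations: int = 4,
) -> Tuple[str, QualityRating, int]:
    if max_iterations < 1:
        raise ValueError("max_iterations must be at least 1")
    draft = optimize(task, None)  # first draft, no feedback yet
    for iteration in range(1, max_iterations + 1):
        rating, feedback = evaluate(draft)  # evaluator grades the current draft
        if rating >= min_rating or iteration == max_iterations:
            return draft, rating, iteration  # good enough, or out of budget
        draft = optimize(draft, feedback)  # optimizer refines using the critique
    raise AssertionError("unreachable")


# Hypothetical toy optimizer/evaluator so the loop can run without any LLM.
def toy_optimizer(text: str, feedback: Optional[str]) -> str:
    return text if feedback is None else f"{text} [revised]"


def toy_evaluator(draft: str) -> Tuple[QualityRating, str]:
    revisions = draft.count("[revised]")
    return QualityRating(min(revisions + 1, QualityRating.EXCELLENT)), "be more specific"
```

Here each refinement raises the toy rating by one step, so the default `min_rating=EXCELLENT` is reached on the third evaluation.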

### [Orchestrator-workers](src/mcp_agent/workflows/orchestrator/orchestrator.py)

A higher-level LLM generates a plan, assigns its steps to sub-agents, and synthesizes the results.

The Orchestrator workflow automatically parallelizes steps that can be done in parallel, and blocks on dependencies.

> [!NOTE]
>
> **[Link to full example](examples/workflows/workflow_orchestrator_worker/main.py)**

<details>

<summary>Example</summary>

```python
from mcp_agent.agents.agent import Agent
from mcp_agent.workflows.llm.augmented_llm import RequestParams
from mcp_agent.workflows.llm.augmented_llm_anthropic import AnthropicAugmentedLLM
from mcp_agent.workflows.orchestrator.orchestrator import Orchestrator

finder_agent = Agent(name="finder", server_names=["fetch", "filesystem"])
writer_agent = Agent(name="writer", server_names=["filesystem"])
proofreader = Agent(name="proofreader", instruction="...")
fact_checker = Agent(name="fact_checker", instruction="...")
style_enforcer = Agent(name="style_enforcer", instruction="Use APA style guide from ...", server_names=["fetch"])

orchestrator = Orchestrator(
    llm_factory=AnthropicAugmentedLLM,
    available_agents=[finder_agent, writer_agent, proofreader, fact_checker, style_enforcer],
)

task = "Load short_story.md, evaluate it, produce a graded_report.md with multiple feedback aspects."
result = await orchestrator.generate_str(task, RequestParams(model="gpt-4o"))
print(result)
```

</details>
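Running independent steps in parallel while blocking on dependencies can be illustrated with a dependency-aware scheduler: each wave runs, concurrently via `asyncio.gather`, every step whose prerequisites have finished. The plan format (step name mapped to a set of prerequisite names) and `run_step` are hypothetical; mcp-agent's own plan models live in `orchestrator_models.py`.

```python
import asyncio
from typing import Dict, List, Set, Tuple


async def run_step(step: str) -> str:
    await asyncio.sleep(0)  # stand-in for a sub-agent doing real work
    return f"{step}: done"


async def execute_plan(plan: Dict[str, Set[str]]) -> Tuple[Dict[str, str], List[List[str]]]:
    """Run every step whose dependencies are finished, in parallel waves."""
    results: Dict[str, str] = {}
    waves: List[List[str]] = []
    remaining = dict(plan)
    while remaining:
        # A step is ready once all of its dependencies have results.
        ready = sorted(s for s, deps in remaining.items() if deps.issubset(results))
        if not ready:
            raise ValueError("plan has a cycle or an unknown dependency")
        outputs = await asyncio.gather(*(run_step(s) for s in ready))  # one parallel wave
        results.update(zip(ready, outputs))
        waves.append(ready)
        for s in ready:
            del remaining[s]
    return results, waves


# Hypothetical plan: step name -> names of the steps it depends on.
plan = {
    "load_story": set(),
    "proofread": {"load_story"},
    "fact_check": {"load_story"},
    "check_style": {"load_story"},
    "write_report": {"proofread", "fact_check", "check_style"},
}
```

With this plan, the three review steps share a wave because they all depend only on `load_story`, and the report waits for all of them.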

### [Swarm](src/mcp_agent/workflows/swarm/swarm.py)

OpenAI has an experimental multi-agent pattern called [Swarm](https://github.com/openai/swarm), for which mcp-agent provides a model-agnostic reference implementation.

<img src="https://github.com/openai/swarm/blob/main/assets/swarm_diagram.png?raw=true" width=500 />

The mcp-agent Swarm pattern works seamlessly with MCP servers, and is exposed as an `AugmentedLLM`, allowing for composability with other patterns above.

> [!NOTE]
>
> **[Link to full example](examples/workflows/workflow_swarm/main.py)**

<details>

<summary>Example</summary>

```python
from mcp_agent.workflows.swarm.swarm import SwarmAgent
from mcp_agent.workflows.swarm.swarm_anthropic import AnthropicSwarm

triage_agent = SwarmAgent(...)
flight_mod_agent = SwarmAgent(...)
lost_baggage_agent = SwarmAgent(...)

# The triage agent decides whether to route to flight_mod_agent or lost_baggage_agent
swarm = AnthropicSwarm(agent=triage_agent, context_variables={...})

test_input = "My bag was not delivered!"
result = await swarm.generate_str(test_input)
print("Result:", result)
```

</details>
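The core Swarm mechanic is the handoff: an agent's function returns either a final answer or another agent, which then takes over. The sketch below assumes that contract and replaces the LLM's tool choice with a keyword check; `SketchAgent`, `run_swarm`, and the transfer functions are hypothetical names, not mcp-agent's `SwarmAgent` API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple, Union

# A swarm "function" receives the shared context and returns either a final
# answer (str) or another agent to hand the conversation to.
AgentFunction = Callable[[Dict[str, str]], Union["SketchAgent", str]]


@dataclass
class SketchAgent:
    name: str
    # Hypothetical: picks which function to call for a message. In mcp-agent's
    # Swarm, the LLM makes this choice via a tool call.
    choose: Callable[[str], AgentFunction]


def run_swarm(
    agent: SketchAgent, message: str, context_variables: Dict[str, str], max_handoffs: int = 5
) -> Tuple[str, List[str]]:
    trail = [agent.name]
    for _ in range(max_handoffs + 1):
        outcome = agent.choose(message)(context_variables)
        if isinstance(outcome, SketchAgent):  # handoff to another agent
            agent = outcome
            trail.append(agent.name)
            continue
        return outcome, trail  # a plain string ends the run
    raise RuntimeError("too many handoffs")


def initiate_baggage_search(ctx: Dict[str, str]) -> str:
    return f"Baggage search started for {ctx.get('customer', 'unknown customer')}"


def change_flight(ctx: Dict[str, str]) -> str:
    return "Flight change handled"


lost_baggage_agent = SketchAgent("lost_baggage", lambda msg: initiate_baggage_search)
flight_mod_agent = SketchAgent("flight_mod", lambda msg: change_flight)


def transfer_to_lost_baggage(ctx: Dict[str, str]) -> SketchAgent:
    return lost_baggage_agent


def transfer_to_flight_mod(ctx: Dict[str, str]) -> SketchAgent:
    return flight_mod_agent


# Triage only hands off; a keyword check stands in for the LLM's decision.
triage_agent = SketchAgent(
    "triage",
    lambda msg: transfer_to_lost_baggage if "bag" in msg.lower() else transfer_to_flight_mod,
)
```

The shared `context_variables` travel with every handoff, which is why the baggage agent can still greet the customer by name.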

## Advanced

### Composability

An example of composability is using an [Evaluator-Optimizer](#evaluator-optimizer) workflow as the planner LLM inside the [Orchestrator](#orchestrator-workers) workflow. Generating a high-quality plan to execute is important for robust behavior, and an evaluator-optimizer can help ensure that.

Doing so is seamless in mcp-agent, because each workflow is implemented as an `AugmentedLLM`.

<details>

<summary>Example</summary>

```python
optimizer = Agent(name="plan_optimizer", server_names=[...], instruction="Generate a plan given an objective ...")
evaluator = Agent(name="plan_evaluator", instruction="Evaluate logic, ordering and precision of plan......")

planner_llm = EvaluatorOptimizerLLM(
    optimizer=optimizer,
    evaluator=evaluator,
    llm_factory=OpenAIAugmentedLLM,
    min_rating=QualityRating.EXCELLENT,
)

orchestrator = Orchestrator(
    llm_factory=AnthropicAugmentedLLM,
    available_agents=[finder_agent, writer_agent, proofreader, fact_checker, style_enforcer],
    planner=planner_llm,  # It's that simple
)

...
```

</details>
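Composition works because callers depend only on the shared interface. This sketch models that with a hypothetical `TextLLM` protocol exposing just `generate_str`: a two-stage `CheckedPlanner` (loosely analogous to the evaluator-optimizer planner above) can be passed anywhere a single LLM is accepted. None of these classes are part of mcp-agent.

```python
import asyncio
from typing import List, Protocol


class TextLLM(Protocol):
    async def generate_str(self, message: str) -> str: ...


class FixedLLM:
    """Hypothetical leaf LLM that always returns a canned reply."""

    def __init__(self, reply: str) -> None:
        self.reply = reply

    async def generate_str(self, message: str) -> str:
        return self.reply


class CheckedPlanner:
    """A two-stage workflow that still satisfies TextLLM, so it composes."""

    def __init__(self, drafter: TextLLM, checker: TextLLM) -> None:
        self.drafter = drafter
        self.checker = checker

    async def generate_str(self, message: str) -> str:
        plan = await self.drafter.generate_str(message)
        verdict = await self.checker.generate_str(plan)
        return plan if verdict == "approve" else f"{plan} (needs review)"


async def orchestrate(objective: str, planner: TextLLM) -> List[str]:
    # Only generate_str is used, so a plain LLM or a whole workflow both work.
    plan = await planner.generate_str(objective)
    return [step.strip() for step in plan.split(";") if step.strip()]
```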

### Signaling and Human Input

**Signaling**: The framework can pause and resume tasks. The agent or LLM might “signal” that it needs user input, so the workflow waits. A developer may also signal during a workflow to seek approval or review before continuing.

**Human Input**: If an Agent has a `human_input_callback`, the LLM can call a `__human_input__` tool to request user input mid-workflow.

<details>

<summary>Example</summary>

The [Swarm example](examples/workflows/workflow_swarm/main.py) shows this in action.

```python
from mcp_agent.human_input.handler import console_input_callback
from mcp_agent.workflows.swarm.swarm import SwarmAgent

# FLY_AIR_AGENT_PROMPT and the functions below are defined in the full Swarm example
lost_baggage = SwarmAgent(
    name="Lost baggage traversal",
    instruction=lambda context_variables: f"""
        {
            FLY_AIR_AGENT_PROMPT.format(
                customer_context=context_variables.get("customer_context", "None"),
                flight_context=context_variables.get("flight_context", "None"),
            )
        }\n Lost baggage policy: policies/lost_baggage_policy.md""",
    functions=[
        escalate_to_agent,
        initiate_baggage_search,
        transfer_to_triage,
        case_resolved,
    ],
    server_names=["fetch", "filesystem"],
    human_input_callback=console_input_callback,  # Request input from the console
)
```

</details>
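Pausing for a signal and requesting human input can both be modeled with plain `asyncio`: an `asyncio.Event` stands in for the external signal, and an async callback stands in for `console_input_callback`. This is an illustrative sketch under those assumptions; it does not use mcp-agent's signal registry or human-input types.

```python
import asyncio
from typing import Awaitable, Callable, List, Tuple

# A human-input callback takes a prompt and eventually returns the user's answer.
HumanInputCallback = Callable[[str], Awaitable[str]]


async def rebooking_workflow(
    approval: asyncio.Event, ask_human: HumanInputCallback, log: List[str]
) -> str:
    log.append("drafted plan")
    await approval.wait()  # paused here until someone sends the signal
    log.append("approved")
    answer = await ask_human("Which flight should I rebook you on?")  # mid-workflow input
    log.append(f"human said {answer}")
    return f"rebooked on {answer}"


async def demo() -> Tuple[str, List[str]]:
    approval = asyncio.Event()
    log: List[str] = []

    async def fake_console_input(prompt: str) -> str:
        # Hypothetical stand-in for a console callback; always answers the same.
        return "FA123"

    task = asyncio.create_task(rebooking_workflow(approval, fake_console_input, log))
    await asyncio.sleep(0)  # let the workflow run until it blocks on the signal
    log.append("signal sent")
    approval.set()  # resume the paused workflow
    return await task, log
```

The log order (`drafted plan`, `signal sent`, `approved`, ...) shows the workflow genuinely blocked until the signal arrived.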

### App Config

Create an [`mcp_agent.config.yaml`](/schema/mcp-agent.config.schema.json) and a gitignored [`mcp_agent.secrets.yaml`](./examples/basic/mcp_basic_agent/mcp_agent.secrets.yaml.example) to define MCP app configuration. This controls logging, execution, LLM provider APIs, and MCP server configuration.
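Keeping secrets out of version control works if the two files are combined at load time. The helper below is a hypothetical sketch that assumes both YAML files have already been parsed into dictionaries and that values from the secrets file override or extend those from the config file; the `api_key` field name is a placeholder.

```python
from copy import deepcopy
from typing import Any, Dict


def deep_merge(base: Dict[str, Any], override: Dict[str, Any]) -> Dict[str, Any]:
    """Return a new dict where nested keys from `override` win over `base`."""
    merged = deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)  # merge nested sections
        else:
            merged[key] = deepcopy(value)  # scalars and new keys simply overwrite
    return merged


# Parsed contents of mcp_agent.config.yaml (committed) ...
config = {
    "execution_engine": "asyncio",
    "openai": {"default_model": "gpt-4o"},
}
# ... and of mcp_agent.secrets.yaml (gitignored); the key name is a placeholder.
secrets = {"openai": {"api_key": "sk-placeholder"}}

settings = deep_merge(config, secrets)
```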

### MCP server management

mcp-agent makes it trivial to connect to MCP servers. Create an [`mcp_agent.config.yaml`](/schema/mcp-agent.config.schema.json) to define server configuration under the `mcp` section:

```yaml
mcp:
  servers:
    fetch:
      command: "uvx"
      args: ["mcp-server-fetch"]
      description: "Fetch content at URLs from the world wide web"
```

#### [`gen_client`](src/mcp_agent/mcp/gen_client.py)

Manage the lifecycle of an MCP server within an async context manager:

```python
from mcp_agent.mcp.gen_client import gen_client

async with gen_client("fetch") as fetch_client:
    # Fetch server is initialized and ready to use
    result = await fetch_client.list_tools()

# Fetch server is automatically disconnected/shutdown
```

The gen_client function makes it easy to spin up connections to MCP servers.
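The lifecycle that `gen_client` manages follows the standard async-context-manager pattern: set up on entry, tear down in a `finally` block on exit. The sketch below shows that pattern with `contextlib.asynccontextmanager`; `FakeSession` and `sketch_gen_client` are hypothetical and do not start a real MCP server.

```python
import asyncio
from contextlib import asynccontextmanager
from typing import AsyncIterator, List, Tuple


class FakeSession:
    """Hypothetical stand-in for an initialized MCP client session."""

    def __init__(self, server_name: str) -> None:
        self.server_name = server_name

    async def list_tools(self) -> List[str]:
        return [f"{self.server_name}-tool"]


@asynccontextmanager
async def sketch_gen_client(server_name: str, events: List[str]) -> AsyncIterator[FakeSession]:
    events.append(f"start {server_name}")  # e.g. spawn the server and initialize the session
    try:
        yield FakeSession(server_name)
    finally:
        events.append(f"stop {server_name}")  # runs on normal exit and on exceptions


async def demo() -> Tuple[List[str], List[str]]:
    events: List[str] = []
    async with sketch_gen_client("fetch", events) as client:
        tools = await client.list_tools()
    return tools, events
```

Putting the teardown in `finally` is what guarantees the server is shut down even when the body raises.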

#### Persistent server connections

In many cases, you want an MCP server to stay online for persistent use (e.g. in a multi-step tool use workflow).

For persistent connections, use:

- [`connect`](src/mcp_agent/mcp/gen_client.py) and [`disconnect`](src/mcp_agent/mcp/gen_client.py)

```python
from mcp_agent.mcp.gen_client import connect, disconnect

fetch_client = None
try:
    fetch_client = await connect("fetch")
    result = await fetch_client.list_tools()
finally:
    await disconnect("fetch")
```

- [`MCPConnectionManager`](src/mcp_agent/mcp/mcp_connection_manager.py)

For even more fine-grained control over server connections, you can use the MCPConnectionManager.

<details>

<summary>Example</summary>

```python
from mcp_agent.core.context import get_current_context
from mcp_agent.mcp.mcp_connection_manager import MCPConnectionManager

context = get_current_context()
connection_manager = MCPConnectionManager(context.server_registry)

async with connection_manager:
    fetch_client = await connection_manager.get_server("fetch")  # Initializes fetch server
    result = await fetch_client.list_tools()
    fetch_client2 = await connection_manager.get_server("fetch")  # Reuses same server connection

# All servers managed by connection manager are automatically disconnected/shut down
```

</details>
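The connection-manager behavior described above (start a server on first use, reuse the connection afterwards, shut everything down when the context exits) can be sketched as a small cache keyed by server name. `SketchConnectionManager` and `SketchConnection` are hypothetical stand-ins, not `MCPConnectionManager`'s implementation.

```python
import asyncio
from typing import Dict


class SketchConnection:
    """Hypothetical stand-in for a live MCP server connection."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.is_open = True

    async def close(self) -> None:
        self.is_open = False


class SketchConnectionManager:
    """Keeps one connection per server name and closes them all on exit."""

    def __init__(self) -> None:
        self._connections: Dict[str, SketchConnection] = {}
        self.starts = 0  # how many times a server was actually started

    async def __aenter__(self) -> "SketchConnectionManager":
        return self

    async def __aexit__(self, *exc_info: object) -> None:
        await self.disconnect_all()

    async def get_server(self, name: str) -> SketchConnection:
        conn = self._connections.get(name)
        if conn is None or not conn.is_open:  # start lazily on first use
            conn = SketchConnection(name)
            self._connections[name] = conn
            self.starts += 1
        return conn  # later calls reuse the same connection

    async def disconnect(self, name: str) -> None:
        conn = self._connections.pop(name, None)
        if conn is not None:
            await conn.close()

    async def disconnect_all(self) -> None:
        for name in list(self._connections):
            await self.disconnect(name)


async def demo():
    async with SketchConnectionManager() as manager:
        first = await manager.get_server("fetch")
        second = await manager.get_server("fetch")
    return first, second, manager.starts
```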

#### MCP Server Aggregator

[`MCPAggregator`](src/mcp_agent/mcp/mcp_aggregator.py) acts as a "server-of-servers".

It provides a single MCP server interface for interacting with multiple MCP servers.

This allows you to expose tools from multiple servers to LLM applications.

<details>

<summary>Example</summary>

```python
from mcp_agent.mcp.mcp_aggregator import MCPAggregator

aggregator = await MCPAggregator.create(server_names=["fetch", "filesystem"])

async with aggregator:
    # combined list of tools exposed by 'fetch' and 'filesystem' servers
    tools = await aggregator.list_tools()

    # namespacing -- invokes the 'fetch' server to call the 'fetch' tool
    fetch_result = await aggregator.call_tool(name="fetch-fetch", arguments={"url": "https://www.anthropic.com/research/building-effective-agents"})

    # no namespacing -- first server in the aggregator exposing that tool wins
    read_file_result = await aggregator.call_tool(name="read_file", arguments={})
```

</details>
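The aggregator's name resolution can be sketched as a lookup table: every tool is registered under a namespaced `server-tool` name, and its bare name points to the first server that exposes it. The `-` separator is taken from the `fetch-fetch` example above and should be treated as an assumption; `build_tool_index` and the `backup_fs` server are hypothetical.

```python
from typing import Dict, List, Tuple

SEP = "-"  # assumed separator, matching the "fetch-fetch" name used above


def build_tool_index(servers: Dict[str, List[str]]) -> Dict[str, Tuple[str, str]]:
    """Map namespaced and bare tool names to the (server, tool) pair that handles them."""
    index: Dict[str, Tuple[str, str]] = {}
    for server, tools in servers.items():  # dicts preserve insertion order
        for tool in tools:
            # Namespaced name always identifies exactly one server.
            index[f"{server}{SEP}{tool}"] = (server, tool)
            # Bare name: keep the first server that exposes it.
            index.setdefault(tool, (server, tool))
    return index


index = build_tool_index(
    {
        "fetch": ["fetch"],
        "filesystem": ["read_file", "write_file"],
        "backup_fs": ["read_file"],  # hypothetical second server with a clashing tool
    }
)
```

The namespaced form is the safe choice whenever two servers expose a tool with the same name.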

## Contributing

We welcome any and all kinds of contributions. Please see the [CONTRIBUTING guidelines](./CONTRIBUTING.md) to get started.

### Special Mentions

There have already been incredible community contributors who are driving this project forward:

- [Shaun Smith (@evalstate)](https://github.com/evalstate) -- who has been leading the charge on countless complex improvements, both to `mcp-agent` and generally to the MCP ecosystem.

- [Jerron Lim (@StreetLamb)](https://github.com/StreetLamb) -- who has contributed countless hours, excellent examples, and great ideas to the project.

- [Jason Summer (@jasonsum)](https://github.com/jasonsum) -- for identifying several issues and adapting his Gmail MCP server to work with mcp-agent.

## Roadmap

We will be adding a detailed roadmap (ideally driven by your feedback). The current set of priorities includes:

- **Durable Execution** -- allow workflows to pause/resume and serialize state so they can be replayed or be paused indefinitely. We are working on integrating [Temporal](./src/mcp_agent/executor/temporal/) for this purpose.

- **Memory** -- adding support for long-term memory

- **Streaming** -- Support streaming listeners for iterative progress

- **Additional MCP capabilities** -- Expand beyond tool calls to support:
  - Resources
  - Prompts
  - Notifications

## FAQs

### What are the core benefits of using mcp-agent?

mcp-agent provides a streamlined approach to building AI agents using capabilities exposed by **MCP** (Model Context Protocol) servers.

MCP is quite low-level, and this framework handles the mechanics of connecting to servers, working with LLMs, handling external signals (like human input) and supporting persistent state via durable execution. That lets you, the developer, focus on the core business logic of your AI application.

Core benefits:

- 🤝 **Interoperability**: ensures that any tool exposed by any number of MCP servers can seamlessly plug into your agents.

- ⛓️ **Composability & Customizability**: Implements well-defined workflows, but in a composable way that enables compound workflows, and allows full customization across model provider, logging, orchestrator, etc.

- 💻 **Programmatic control flow**: Keeps things simple as developers just write code instead of thinking in graphs, nodes and edges. For branching logic, you write `if` statements. For cycles, use `while` loops.

- 🖐️ **Human Input & Signals**: Supports pausing workflows for external signals, such as human input, which are exposed as tool calls an Agent can make.

### Do you need an MCP client to use mcp-agent?

No, you can use mcp-agent anywhere, since it handles MCPClient creation for you. This allows you to leverage MCP servers outside of MCP hosts like Claude Desktop.

Here are all the ways you can set up your mcp-agent application:

#### MCP-Agent Server

You can expose mcp-agent applications as MCP servers themselves (see [example](./examples/basic/mcp_agent_server)), allowing MCP clients to interface with sophisticated AI workflows using the standard tools API of MCP servers. This is effectively a server-of-servers.

#### MCP Client or Host

You can embed mcp-agent in an MCP client directly to manage the orchestration across multiple MCP servers.

#### Standalone

You can use mcp-agent applications in a standalone fashion (i.e. they aren't part of an MCP client). The [`examples`](/examples/) are all standalone applications.

### Tell me a fun fact

I debated naming this project _silsila_ (سلسلہ), which means chain of events in Urdu. mcp-agent is more matter-of-fact, but there's still an easter egg in the project paying homage to silsila.

## Code Examples

The MCP-Agent framework provides multiple ways to create and run AI agents with MCP server connections:

### Basic Agent Example

```python
import asyncio

from mcp_agent.app import MCPApp
from mcp_agent.agents.agent import Agent
from mcp_agent.workflows.llm.augmented_llm_openai import OpenAIAugmentedLLM

app = MCPApp(name="my_agent")


async def main():
    async with app.run() as agent_app:
        # Create an agent with filesystem and fetch capabilities
        agent = Agent(
            name="finder",
            instruction="You help find and analyze files and web content",
            server_names=["fetch", "filesystem"],
        )

        async with agent:
            # Attach an LLM to the agent
            llm = await agent.attach_llm(OpenAIAugmentedLLM)

            # Generate responses using MCP tools
            result = await llm.generate_str(
                message="Find and read the config file"
            )
            print(result)


if __name__ == "__main__":
    asyncio.run(main())
```

### Decorator Style Agent

```python
import asyncio

from mcp_agent.core.decorator_app import MCPAgentDecorator

agent_app = MCPAgentDecorator("my-app")


@agent_app.agent(
    name="basic_agent",
    instruction="A simple agent that helps with basic tasks",
    servers=["filesystem"],
)
async def main():
    async with agent_app.run() as agent:
        # Send a message to the agent registered above, addressed by name
        result = await agent.send("basic_agent", "What files are here?")
        print(result)


if __name__ == "__main__":
    asyncio.run(main())
```

### Router-Based Workflow

```python
import asyncio

from mcp_agent.app import MCPApp
from mcp_agent.agents.agent import Agent
from mcp_agent.workflows.router.router_llm_openai import OpenAILLMRouter

app = MCPApp(name="router_example")


async def main():
    # `await` is only valid inside a coroutine, so routing runs inside main()
    async with app.run():
        # Create specialized agents
        finder_agent = Agent(
            name="finder",
            instruction="Find and read files",
            server_names=["filesystem"],
        )
        writer_agent = Agent(
            name="writer",
            instruction="Write content to files",
            server_names=["filesystem"],
        )

        # Router automatically selects the best agent
        router = OpenAILLMRouter(agents=[finder_agent, writer_agent])

        # Route a request to the most appropriate agent
        results = await router.route_to_agent(
            request="Read the config file", top_k=1
        )
        selected_agent = results[0].result


if __name__ == "__main__":
    asyncio.run(main())
```

### Configuration

MCP-Agent uses YAML configuration files (`mcp_agent.config.yaml`):

```yaml
execution_engine: "asyncio"

mcp:
  servers:
    filesystem:
      command: "npx"
      args: ["-y", "@modelcontextprotocol/server-filesystem"]
    fetch:
      command: "uvx"
      args: ["mcp-server-fetch"]

openai:
  default_model: "gpt-4o-mini"
```

## Function and Class Definitions

*Note: Test files, example files, and script files are excluded from this section.*

### src/mcp_agent/agents/agent.py

**Class: `Agent`**

- **Inherits from**: BaseModel

- **Description**: An Agent is an entity that has access to a set of MCP servers and can interact with them.

Each agent should have a purpose defined by its instruction.

- **Attributes**:

- `name` (str): Agent name.

- `instruction` (str | Callable[[Dict], str]) = 'You are a helpful agent.': Instruction for the agent. This can be a string or a callable that takes a dictionary and returns a string. The callable can be used to generate dynamic instructions based on the context.

- `server_names` (List[str]) = Field(default_factory=list): List of MCP server names that the agent can access.

- `functions` (List[Callable]) = Field(default_factory=list): List of local functions that the agent can call.

- `context` (Optional[Context]) = None: The application context that the agent is running in.

- `connection_persistence` (bool) = True: Whether to persist connections to the MCP servers.

- `human_input_callback` (Optional[Callable]) = None: Callback function for requesting human input. Must match the HumanInputCallback protocol.

- `llm` (Optional[Any]) = None: The LLM instance that is attached to the agent. This is set by the attach_llm method.

- `initialized` (bool) = False: Whether the agent has been initialized. This is set to True after agent.initialize() is completed.

- `model_config` = ConfigDict(arbitrary_types_allowed=True, extra='allow')

- `_function_tool_map` (Dict[str, FastTool]) = PrivateAttr(default_factory=dict)

- `_namespaced_tool_map` (Dict[str, NamespacedTool]) = PrivateAttr(default_factory=dict)

- `_server_to_tool_map` (Dict[str, List[NamespacedTool]]) = PrivateAttr(default_factory=dict)

- `_namespaced_prompt_map` (Dict[str, NamespacedPrompt]) = PrivateAttr(default_factory=dict)

- `_server_to_prompt_map` (Dict[str, List[NamespacedPrompt]]) = PrivateAttr(default_factory=dict)

- `_agent_tasks` ('AgentTasks') = PrivateAttr(default=None)

- `_init_lock` (asyncio.Lock) = PrivateAttr(default_factory=asyncio.Lock)
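Since `instruction` may be either a plain string or a callable that takes a dictionary and returns a string, resolving it comes down to one `callable()` check. A minimal sketch; `resolve_instruction` and the `user` key in the context dict are hypothetical, not the library's internal code.

```python
from typing import Callable, Dict, Union

Instruction = Union[str, Callable[[Dict], str]]


def resolve_instruction(instruction: Instruction, context: Dict) -> str:
    """Return a static instruction as-is, or build a dynamic one from context."""
    if callable(instruction):
        return instruction(context)
    return instruction


def dynamic(ctx: Dict) -> str:
    # Hypothetical context key, used only for this illustration
    return f"You help {ctx.get('user', 'the user')} find files."


print(resolve_instruction("You are a helpful agent.", {}))
print(resolve_instruction(dynamic, {"user": "Ada"}))
```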

**Class: `InitAggregatorRequest`**

- **Inherits from**: BaseModel

- **Description**: Request to load/initialize an agent's servers.

- **Attributes**:

- `agent_name` (str)

- `server_names` (List[str])

- `connection_persistence` (bool) = True

- `force` (bool) = False

**Class: `InitAggregatorResponse`**

- **Inherits from**: BaseModel

- **Description**: Response for the load server request.

- **Attributes**:

- `initialized` (bool)

- `namespaced_tool_map` (Dict[str, NamespacedTool]) = Field(default_factory=dict)

- `server_to_tool_map` (Dict[str, List[NamespacedTool]]) = Field(default_factory=dict)

- `namespaced_prompt_map` (Dict[str, NamespacedPrompt]) = Field(default_factory=dict)

- `server_to_prompt_map` (Dict[str, List[NamespacedPrompt]]) = Field(default_factory=dict)

**Class: `ListToolsRequest`**

- **Inherits from**: BaseModel

- **Description**: Request to list tools for an agent.

- **Attributes**:

- `agent_name` (str)

- `server_name` (Optional[str]) = None

**Class: `CallToolRequest`**

- **Inherits from**: BaseModel

- **Description**: Request to call a tool for an agent.

- **Attributes**:

- `agent_name` (str)

- `server_name` (Optional[str]) = None

- `name` (str)

- `arguments` (Optional[dict[str, str]]) = None

**Class: `ListPromptsRequest`**

- **Inherits from**: BaseModel

- **Description**: Request to list prompts for an agent.

- **Attributes**:

- `agent_name` (str)

- `server_name` (Optional[str]) = None

**Class: `GetPromptRequest`**

- **Inherits from**: BaseModel

- **Description**: Request to get a prompt from an agent.

- **Attributes**:

- `agent_name` (str)

- `server_name` (Optional[str]) = None

- `name` (str)

- `arguments` (Optional[dict[str, str]]) = None

**Class: `GetCapabilitiesRequest`**

- **Inherits from**: BaseModel

- **Description**: Request to get the capabilities of a specific server.

- **Attributes**:

- `agent_name` (str)

- `server_name` (Optional[str]) = None

**Class: `GetServerSessionRequest`**

- **Inherits from**: BaseModel

- **Description**: Request to get the session data of a specific server.

- **Attributes**:

- `agent_name` (str)

- `server_name` (str)

**Class: `GetServerSessionResponse`**

- **Inherits from**: BaseModel

- **Description**: Response to the get server session request.

- **Attributes**:

- `session_id` (str | None) = None

- `session_data` (dict[str, Any]) = Field(default_factory=dict)

- `error` (Optional[str]) = None

**Class: `AgentTasks`**

- **Description**: Agent tasks for executing agent-related activities.

- **Attributes**:

- `server_aggregators_for_agent` (Dict[str, MCPAggregator]) = {}

- `server_aggregators_for_agent_lock` (asyncio.Lock) = asyncio.Lock()

- `agent_refcounts` (dict[str, int]) = {}
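The attributes above suggest that aggregators are shared per agent name and reference-counted under a lock. The sketch below shows that pattern under two assumptions: initializing increments the count, and shutting down closes the aggregator only when the count reaches zero. `FakeAggregator` and `AggregatorPool` are hypothetical stand-ins, not mcp-agent classes.

```python
import asyncio
from typing import Dict


class FakeAggregator:
    """Hypothetical stand-in for an MCPAggregator."""

    def __init__(self, name: str):
        self.name = name
        self.closed = False

    async def close(self) -> None:
        self.closed = True


class AggregatorPool:
    def __init__(self) -> None:
        self.aggregators: Dict[str, FakeAggregator] = {}
        self.refcounts: Dict[str, int] = {}
        self.lock = asyncio.Lock()

    async def acquire(self, agent_name: str) -> FakeAggregator:
        async with self.lock:
            # Create the aggregator on first use, then share it
            if agent_name not in self.aggregators:
                self.aggregators[agent_name] = FakeAggregator(agent_name)
            self.refcounts[agent_name] = self.refcounts.get(agent_name, 0) + 1
            return self.aggregators[agent_name]

    async def release(self, agent_name: str) -> bool:
        async with self.lock:
            if agent_name not in self.refcounts:
                return False
            self.refcounts[agent_name] -= 1
            # Close and forget the aggregator once no agent uses it
            if self.refcounts[agent_name] == 0:
                aggregator = self.aggregators.pop(agent_name)
                del self.refcounts[agent_name]
                await aggregator.close()
            return True
```

Holding the lock across both the lookup and the count update keeps two concurrent initializations of the same agent from creating two aggregators.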

**Function:** `Agent.model_post_init(self, __context) -> None`

**Function:** `Agent.attach_llm(self, llm_factory: Callable[..., LLM] | None = None, llm: LLM | None = None) -> LLM`

- **Description**: Create an LLM instance for the agent, either by calling `llm_factory` or by reusing a provided `llm` instance, and return it.

- **Parameters**

- `self`

- `llm_factory` (Callable[..., LLM] | None, optional): A callable that constructs an AugmentedLLM or its subclass. The factory should accept keyword arguments matching the AugmentedLLM constructor parameters.

- `llm` (LLM | None, optional): An instance of AugmentedLLM or its subclass. If provided, this will be used instead of creating a new instance.

- **Returns**

- `LLM`: An instance of AugmentedLLM or one of its subclasses.
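The parameter descriptions imply a simple precedence: an explicit `llm` instance wins, otherwise the factory builds one. A synchronous sketch of that logic (the real method is awaited, as in `await agent.attach_llm(...)`). It assumes the factory is called with an `agent=` keyword, which the listing does not confirm; `StubAgent` and `StubLLM` are hypothetical.

```python
from typing import Callable, Optional


class StubLLM:
    """Hypothetical stand-in for an AugmentedLLM subclass."""

    def __init__(self, agent=None):
        self.agent = agent


class StubAgent:
    def __init__(self) -> None:
        self.llm: Optional[StubLLM] = None

    def attach_llm(
        self,
        llm_factory: Optional[Callable[..., StubLLM]] = None,
        llm: Optional[StubLLM] = None,
    ) -> StubLLM:
        if llm is not None:
            # A ready-made instance takes precedence over the factory
            self.llm = llm
        elif llm_factory is not None:
            # Assumed keyword: the factory receives the owning agent
            self.llm = llm_factory(agent=self)
        else:
            raise ValueError("Provide either llm_factory or llm")
        return self.llm
```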

**Function:** `Agent.initialize(self, force: bool = False)`

- **Description**: Initialize the agent.

- **Parameters**

- `self`

- `force` (bool, optional): Default is False

**Function:** `Agent.shutdown(self)`

- **Description**: Shutdown the agent and close all MCP server connections. NOTE: This method is called automatically when the agent is used as an async context manager.

- **Parameters**

- `self`

**Function:** `Agent.close(self)`

- **Description**: Close the agent and release all resources. Synonymous with shutdown.

- **Parameters**

- `self`

**Function:** `Agent.__aenter__(self)`

**Function:** `Agent.__aexit__(self, exc_type, exc_val, exc_tb)`

**Function:** `Agent.get_capabilities(self, server_name: str | None) -> ServerCapabilities | Dict[str, ServerCapabilities]`

- **Description**: Get the capabilities of a specific server.

- **Parameters**

- `self`

- `server_name` (str | None)

- **Returns**

- `ServerCapabilities | Dict[str, ServerCapabilities]`: Return value

**Function:** `Agent.get_server_session(self, server_name: str)`

- **Description**: Get the session data of a specific server.

- **Parameters**

- `self`

- `server_name` (str)

**Function:** `Agent.list_tools(self, server_name: str | None = None) -> ListToolsResult`

**Function:** `Agent.list_prompts(self, server_name: str | None = None) -> ListPromptsResult`

**Function:** `Agent.get_prompt(self, name: str, arguments: dict[str, str] | None = None) -> GetPromptResult`

**Function:** `Agent.request_human_input(self, request: HumanInputRequest) -> str`

- **Description**: Request input from a human user. Pauses the workflow until input is received.

- **Parameters**

- `self`

- `request` (HumanInputRequest): The human input request

- **Returns**

- `str`: The input provided by the human

- **Raises**: TimeoutError: If the timeout is exceeded ValueError: If human_input_callback is not set or doesn't have the right signature
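The documented behavior (wait for the human, raise `ValueError` without a callback and `TimeoutError` past the timeout) maps naturally onto `asyncio.wait_for`. A minimal sketch; `InputRequest` and its `timeout_seconds` field are hypothetical stand-ins for `HumanInputRequest`, and the callback type only approximates `HumanInputCallback`.

```python
import asyncio
from dataclasses import dataclass
from typing import Awaitable, Callable, Optional


@dataclass
class InputRequest:
    # Hypothetical stand-in for HumanInputRequest
    prompt: str
    timeout_seconds: Optional[float] = None


Callback = Callable[[InputRequest], Awaitable[str]]


async def request_human_input(callback: Optional[Callback], request: InputRequest) -> str:
    if callback is None:
        raise ValueError("human_input_callback is not set")
    # Wait for the human; a timeout of None means wait indefinitely
    try:
        return await asyncio.wait_for(callback(request), timeout=request.timeout_seconds)
    except asyncio.TimeoutError:
        # Re-raise as the builtin TimeoutError (distinct from asyncio's before 3.11)
        raise TimeoutError(f"No input received for: {request.prompt!r}")
```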

**Function:** `Agent.call_callback_and_signal()`

**Function:** `Agent.call_tool(self, name: str, arguments: dict | None = None, server_name: str | None = None) -> CallToolResult`

**Function:** `Agent._call_human_input_tool(self, arguments: dict | None = None) -> CallToolResult`

**Function:** `AgentTasks.__init__(self, context: 'Context')`

**Function:** `AgentTasks.initialize_aggregator_task(self, request: InitAggregatorRequest) -> InitAggregatorResponse`

- **Description**: Load/initialize an agent's servers.

- **Parameters**

- `self`

- `request` (InitAggregatorRequest)

- **Returns**

- `InitAggregatorResponse`: Return value

**Function:** `AgentTasks.shutdown_aggregator_task(self, agent_name: str) -> bool`

- **Description**: Shutdown the agent's servers.

- **Parameters**

- `self`

- `agent_name` (str)

- **Returns**

- `bool`: Return value

**Function:** `AgentTasks.list_tools_task(self, request: ListToolsRequest) -> ListToolsResult`

- **Description**: List tools for an agent.

- **Parameters**

- `self`

- `request` (ListToolsRequest)

- **Returns**

- `ListToolsResult`: Return value

**Function:** `AgentTasks.call_tool_task(self, request: CallToolRequest) -> CallToolResult`

- **Description**: Call a tool for an agent.

- **Parameters**

- `self`

- `request` (CallToolRequest)

- **Returns**

- `CallToolResult`: Return value

**Function:** `AgentTasks.list_prompts_task(self, request: ListPromptsRequest) -> ListPromptsResult`

- **Description**: List prompts for an agent.

- **Parameters**

- `self`

- `request` (ListPromptsRequest)

- **Returns**

- `ListPromptsResult`: Return value

**Function:** `AgentTasks.get_prompt_task(self, request: GetPromptRequest) -> GetPromptResult`

- **Description**: Get a prompt for an agent.

- **Parameters**

- `self`

- `request` (GetPromptRequest)

- **Returns**

- `GetPromptResult`: Return value

**Function:** `AgentTasks.get_capabilities_task(self, request: GetCapabilitiesRequest) -> Dict[str, ServerCapabilities]`

- **Description**: Get the capabilities of a specific server.

- **Parameters**

- `self`

- `request` (GetCapabilitiesRequest)

- **Returns**

- `Dict[str, ServerCapabilities]`: Return value

**Function:** `AgentTasks.get_server_session(self, request: GetServerSessionRequest) -> GetServerSessionResponse`

- **Description**: Get the session for a specific server.

- **Parameters**

- `self`

- `request` (GetServerSessionRequest)

- **Returns**

- `GetServerSessionResponse`: Return value

### src/mcp_agent/app.py

**Class: `MCPApp`**

- **Description**: Main application class that manages global state and can host workflows.

Example usage:

```python
app = MCPApp()

@app.workflow
class MyWorkflow(Workflow[str]):
    @app.task
    async def my_task(self):
        pass

    async def run(self):
        await self.my_task()

async with app.run() as running_app:
    workflow = MyWorkflow()
    result = await workflow.execute()
```

**Function:** `MCPApp.__init__(self, name: str = 'mcp_application', description: str | None = None, settings: Optional[Settings] | str = None, human_input_callback: Optional[HumanInputCallback] = None, signal_notification: Optional[SignalWaitCallback] = None, upstream_session: Optional['ServerSession'] = None, model_selector: ModelSelector = None)`

- **Description**: Initialize the application with a name and optional settings. Each argument is described below.

- **Parameters**

- `self`

- `name` (str, optional): Name of the application

- `description` (str | None, optional): Description of the application. If you expose the MCPApp as an MCP server, provide a detailed description, since it will be used as the server's description.

- `settings` (Optional[Settings] | str, optional): Application configuration - If unspecified, the settings are loaded from mcp_agent.config.yaml. If this is a string, it is treated as the path to the config file to load.

- `human_input_callback` (Optional[HumanInputCallback], optional): Callback for handling human input

- `signal_notification` (Optional[SignalWaitCallback], optional): Callback for getting notified on workflow signals/events.

- `upstream_session` (Optional['ServerSession'], optional): Upstream session if the MCPApp is running as a server to an MCP client.

- `model_selector` (ModelSelector, optional): A ModelSelector to help with model selection. Default is None.

**Function:** `MCPApp.context(self) -> Context`

**Function:** `MCPApp.config(self)`

**Function:** `MCPApp.server_registry(self)`

**Function:** `MCPApp.executor(self)`

**Function:** `MCPApp.engine(self)`

**Function:** `MCPApp.upstream_session(self)`

**Function:** `MCPApp.upstream_session(self, value)`

**Function:** `MCPApp.workflows(self)`

**Function:** `MCPApp.tasks(self)`

**Function:** `MCPApp.session_id(self)`

**Function:** `MCPApp.logger(self)`

**Function:** `MCPApp.initialize(self)`

- **Description**: Initialize the application.

- **Parameters**

- `self`

**Function:** `MCPApp.cleanup(self)`

- **Description**: Cleanup application resources.

- **Parameters**

- `self`

**Function:** `MCPApp.run(self)`

- **Description**: Run the application. Use it as an async context manager; the app is initialized inside the block, e.g. `async with app.run() as running_app: ...`.

- **Parameters**

- `self`

**Function:** `MCPApp.workflow(self, cls: Type) -> Type`

- **Description**: Decorator for a workflow class. By default it is a no-op, but different executors can use it to customize workflow registration.

- **Parameters**

- `self`

- `cls` (Type)

- **Returns**

- `Type`: Return value

- **Example**: If Temporal is available & we use a TemporalExecutor, this decorator will wrap with temporal_workflow.defn.

**Function:** `MCPApp.workflow_signal(self, fn: Callable[..., R] | None = None) -> Callable[..., R]`

- **Description**: Decorator for a workflow's signal handler. Different executors can use this to customize workflow signal handling. `fn` is the function to decorate (optional, for direct application); an optional custom `name` can be given for the signal, and if omitted the function name is used.

- **Parameters**

- `self`

- `fn` (Callable[..., R] | None, optional): The function to decorate (optional, for use with direct application)

- **Returns**

- `Callable[..., R]`: Return value

- **Example**: If Temporal is in use, this gets converted to @workflow.signal.

**Function:** `MCPApp.decorator(func)`

**Function:** `MCPApp.wrapper()`

**Function:** `MCPApp.workflow_run(self, fn: Callable[..., R]) -> Callable[..., R]`

- **Description**: Decorator for a workflow's main `run` method. Different executors can use this to customize workflow execution.

- **Parameters**

- `self`

- `fn` (Callable[..., R])

- **Returns**

- `Callable[..., R]`: Return value

- **Example**: If Temporal is in use, this gets converted to @workflow.run.

**Function:** `MCPApp.wrapper()`

**Function:** `MCPApp.workflow_task(self, name: str | None = None, schedule_to_close_timeout: timedelta | None = None, retry_policy: Dict[str, Any] | None = None) -> Callable[[Callable[..., R]], Callable[..., R]]`

- **Description**: Decorator that marks a function as a workflow task and automatically registers it in the global activity registry. Additional keyword arguments (`**kwargs`) are passed as metadata to the activity registration, and the decorated function preserves its async and typing information.

- **Parameters**

- `self`

- `name` (str | None, optional): Optional custom name for the activity

- `schedule_to_close_timeout` (timedelta | None, optional): Maximum time the task can take to complete

- `retry_policy` (Dict[str, Any] | None, optional): Retry policy configuration

- **Returns**

- `Callable[[Callable[..., R]], Callable[..., R]]`: Decorated function that preserves async and typing information

- **Raises**: TypeError: If the decorated function is not async ValueError: If the retry policy or timeout is invalid
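A rough sketch of a registering decorator with the documented `TypeError` for non-async functions and `ValueError` for an invalid timeout. The module-level `TASK_REGISTRY`, the attributes attached to the wrapper, and the "timeout must be positive" rule are hypothetical; the real decorator registers with the app's global activity registry and may validate differently.

```python
import asyncio
import functools
import inspect
from datetime import timedelta
from typing import Any, Callable, Dict, Optional

# Hypothetical registry: task name -> decorated function
TASK_REGISTRY: Dict[str, Callable[..., Any]] = {}


def workflow_task(
    name: Optional[str] = None,
    schedule_to_close_timeout: Optional[timedelta] = None,
    retry_policy: Optional[Dict[str, Any]] = None,
):
    # Assumed validation rule for this sketch
    if schedule_to_close_timeout is not None and schedule_to_close_timeout <= timedelta(0):
        raise ValueError("schedule_to_close_timeout must be positive")

    def decorator(fn: Callable[..., Any]) -> Callable[..., Any]:
        if not inspect.iscoroutinefunction(fn):
            raise TypeError(f"{fn.__name__} must be async")

        @functools.wraps(fn)  # keeps name, docstring, and annotations
        async def wrapper(*args, **kwargs):
            return await fn(*args, **kwargs)

        task_name = name or fn.__qualname__
        # Hypothetical marker, in the spirit of MCPApp.is_workflow_task
        wrapper.is_workflow_task = True
        wrapper.task_metadata = {
            "name": task_name,
            "schedule_to_close_timeout": schedule_to_close_timeout,
            "retry_policy": retry_policy or {},
        }
        TASK_REGISTRY[task_name] = wrapper
        return wrapper

    return decorator


@workflow_task(name="fetch_page", schedule_to_close_timeout=timedelta(seconds=30))
async def fetch_page(url: str) -> str:
    return f"<html for {url}>"


print(asyncio.run(fetch_page("https://example.com")))
```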

**Function:** `MCPApp.decorator(target: Callable[..., R]) -> Callable[..., R]`

**Function:** `MCPApp._bound_adapter()`

**Function:** `MCPApp.is_workflow_task(self, func: Callable[..., Any]) -> bool`

- **Description**: Check if a function is marked as a workflow task. This gets set for functions that are decorated with @workflow_task.

- **Parameters**

- `self`

- `func` (Callable[..., Any])

- **Returns**

- `bool`: Return value

**Function:** `MCPApp._register_global_workflow_tasks(self)`

- **Description**: Register all statically defined workflow tasks with this app instance.

- **Parameters**

- `self`

**Function:** `MCPApp._bound_adapter()`

### src/mcp_agent/cli/commands/config.py

**Function:** `show()`

- **Description**: Show the configuration.

### src/mcp_agent/cli/main.py

**Function:** `main(verbose: bool = typer.Option(False, '--verbose', '-v', help='Enable verbose mode'), quiet: bool = typer.Option(False, '--quiet', '-q', help='Disable output'), color: bool = typer.Option(True, '--color/--no-color', help='Enable/disable color output'))`

- **Description**: Main entry point for the MCP Agent CLI.

- **Parameters**

- `verbose` (bool, optional): Default is typer.Option(False, '--verbose', '-v', help='Enable verbose mode')

- `quiet` (bool, optional): Default is typer.Option(False, '--quiet', '-q', help='Disable output')

- `color` (bool, optional): Default is typer.Option(True, '--color/--no-color', help='Enable/disable color output')

### src/mcp_agent/cli/terminal.py

**Class: `Application`**

**Function:** `Application.__init__(self, verbosity: int = 0, enable_color: bool = True)`

**Function:** `Application.log(self, message: str, level: str = 'info')`

**Function:** `Application.status(self, message: str)`

### src/mcp_agent/config.py

**Module Description**: Reading settings from environment variables and providing a settings object for the application configuration.

**Class: `MCPServerAuthSettings`**

- **Inherits from**: BaseModel

- **Description**: Represents authentication configuration for a server.

- **Attributes**:

- `api_key` (str | None) = None

- `model_config` = ConfigDict(extra='allow', arbitrary_types_allowed=True)

**Class: `MCPRootSettings`**

- **Inherits from**: BaseModel

- **Description**: Represents a root directory configuration for an MCP server.

- **Attributes**:

- `uri` (str): The URI identifying the root. Must start with file://

- `name` (Optional[str]) = None: Optional name for the root.

- `server_uri_alias` (Optional[str]) = None: Optional URI alias for presentation to the server

- `model_config` = ConfigDict(extra='allow', arbitrary_types_allowed=True)

**Class: `MCPServerSettings`**

- **Inherits from**: BaseModel

- **Description**: Represents the configuration for an individual server.

- **Attributes**:

- `name` (str | None) = None: The name of the server.

- `description` (str | None) = None: The description of the server.

- `transport` (Literal['stdio', 'sse', 'streamable_http', 'websocket']) = 'stdio': The transport mechanism.

- `command` (str | None) = None: The command to execute the server (e.g. npx) in stdio mode.

- `args` (List[str]) = Field(default_factory=list): The arguments for the server command in stdio mode.

- `url` (str | None) = None: The URL for the server for SSE, Streamable HTTP or websocket transport.

- `headers` (Dict[str, str] | None) = None: HTTP headers for SSE or Streamable HTTP requests.

- `http_timeout_seconds` (int | None) = None: HTTP request timeout in seconds for SSE or Streamable HTTP requests. Note: this differs from `read_timeout_seconds`, which determines how long (in seconds) the client waits for a new event before disconnecting.

- `read_timeout_seconds` (int | None) = None: Timeout in seconds the client will wait for a new event before disconnecting from an SSE or Streamable HTTP server connection.

- `terminate_on_close` (bool) = True: For Streamable HTTP transport, whether to terminate the session on connection close.

- `auth` (MCPServerAuthSettings | None) = None: The authentication configuration for the server.

- `roots` (List[MCPRootSettings] | None) = None: Root directories this server has access to.

- `env` (Dict[str, str] | None) = None: Environment variables to pass to the server process.

- `model_config` = ConfigDict(extra='allow', arbitrary_types_allowed=True)
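The field descriptions tie `command`/`args` to stdio mode and `url` to the SSE, Streamable HTTP and websocket transports. A hypothetical consistency check over a plain dict makes that relationship explicit; `check_server_settings` is not part of mcp-agent, which defines these settings as pydantic models.

```python
from typing import Any, Dict, List

REMOTE_TRANSPORTS = {"sse", "streamable_http", "websocket"}


def check_server_settings(settings: Dict[str, Any]) -> List[str]:
    """Return a list of problems; an empty list means the entry looks consistent."""
    problems: List[str] = []
    transport = settings.get("transport", "stdio")  # documented default
    if transport == "stdio":
        if not settings.get("command"):
            problems.append("stdio transport needs a 'command'")
    elif transport in REMOTE_TRANSPORTS:
        if not settings.get("url"):
            problems.append(f"{transport} transport needs a 'url'")
    else:
        problems.append(f"unknown transport {transport!r}")
    return problems


print(check_server_settings({"command": "uvx", "args": ["mcp-server-fetch"]}))
print(check_server_settings({"transport": "sse"}))
```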

**Class: `MCPSettings`**

- **Inherits from**: BaseModel

- **Description**: Configuration for all MCP servers.

- **Attributes**:

- `servers` (Dict[str, MCPServerSettings]) = Field(default_factory=dict)

- `model_config` = ConfigDict(extra='allow', arbitrary_types_allowed=True)

**Class: `AnthropicSettings`**

- **Inherits from**: BaseModel

- **Description**: Settings for using Anthropic models in the MCP Agent application.

- **Attributes**:

- `api_key` (str | None) = None

- `model_config` = ConfigDict(extra='allow', arbitrary_types_allowed=True)

**Class: `BedrockSettings`**

- **Inherits from**: BaseModel

- **Description**: Settings for using Bedrock models in the MCP Agent application.

- **Attributes**:

- `aws_access_key_id` (str | None) = None

- `aws_secret_access_key` (str | None) = None

- `aws_session_token` (str | None) = None

- `aws_region` (str | None) = None

- `profile` (str | None) = None

- `model_config` = ConfigDict(extra='allow', arbitrary_types_allowed=True)

**Class: `CohereSettings`**

- **Inherits from**: BaseModel

- **Description**: Settings for using Cohere models in the MCP Agent application.

- **Attributes**:

- `api_key` (str | None) = None

- `model_config` = ConfigDict(extra='allow', arbitrary_types_allowed=True)

**Class: `OpenAISettings`**

- **Inherits from**: BaseModel

- **Description**: Settings for using OpenAI models in the MCP Agent application.

- **Attributes**:

- `api_key` (str | None) = None

- `reasoning_effort` (Literal['low', 'medium', 'high']) = 'medium'

- `base_url` (str | None) = None

- `model_config` = ConfigDict(extra='allow', arbitrary_types_allowed=True)

**Class: `AzureSettings`**

- **Inherits from**: BaseModel

- **Description**: Settings for using Azure models in the MCP Agent application.

- **Attributes**:

- `api_key` (str | None) = None

- `endpoint` (str)

- `credential_scopes` (List[str] | None) = Field(default=['https://cognitiveservices.azure.com/.default'])

- `model_config` = ConfigDict(extra='allow', arbitrary_types_allowed=True)

**Class: `GoogleSettings`**

- **Inherits from**: BaseModel

- **Description**: Settings for using Google models in the MCP Agent application.

- **Attributes**:

- `api_key` (str | None) = None: Or use the GOOGLE_API_KEY environment variable

- `vertexai` (bool) = False

- `project` (str | None) = None

- `location` (str | None) = None

- `model_config` = ConfigDict(extra='allow', arbitrary_types_allowed=True)

**Class: `TemporalSettings`**

- **Inherits from**: BaseModel

- **Description**: Temporal settings for the MCP Agent application.

- **Attributes**:

- `host` (str)

- `namespace` (str) = 'default'

- `task_queue` (str)

- `max_concurrent_activities` (int | None) = None

- `api_key` (str | None) = None

- `timeout_seconds` (int | None) = 60

**Class: `UsageTelemetrySettings`**

- **Inherits from**: BaseModel

- **Description**: Settings for usage telemetry in the MCP Agent application.

Anonymized usage metrics are sent to a telemetry server to help improve the product.

- **Attributes**:

- `enabled` (bool) = True: Enable usage telemetry in the MCP Agent application.

- `enable_detailed_telemetry` (bool) = False: If enabled, detailed telemetry data, including prompts and agents, will be sent to the telemetry server.

**Class: `OpenTelemetrySettings`**

- **Inherits from**: BaseModel

- **Description**: OTEL settings for the MCP Agent application.

- **Attributes**:

- `enabled` (bool) = True

- `service_name` (str) = 'mcp-agent'

- `service_instance_id` (str | None) = None

- `service_version` (str | None) = None

- `otlp_endpoint` (str | None) = None: OTLP endpoint for OpenTelemetry tracing

- `console_debug` (bool) = False: Log spans to console

- `sample_rate` (float) = 1.0: Sample rate for tracing (1.0 = sample everything)

**Class: `LogPathSettings`**

- **Inherits from**: BaseModel

- **Description**: Settings for configuring log file paths with dynamic elements like timestamps or session IDs.

- **Attributes**:

- `path_pattern` (str) = 'logs/mcp-agent-{unique_id}.jsonl': Path pattern for log files with a {unique_id} placeholder. The placeholder will be replaced according to the unique_id setting. Example: "logs/mcp-agent-{unique_id}.jsonl"

- `unique_id` (Literal['timestamp', 'session_id']) = 'timestamp': Type of unique identifier to use in the log filename: `timestamp` uses the current time formatted according to `timestamp_format`; `session_id` generates a UUID for the session.

- `timestamp_format` (str) = '%Y%m%d_%H%M%S': Format string for timestamps when unique_id is set to "timestamp". Uses Python's datetime.strftime format.
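How `path_pattern`, `unique_id` and `timestamp_format` combine can be sketched as follows. `build_log_path` is a hypothetical helper, and the exact substitution mcp-agent performs may differ; `now` is injectable so the timestamp case is deterministic.

```python
import uuid
from datetime import datetime
from typing import Optional


def build_log_path(
    path_pattern: str = "logs/mcp-agent-{unique_id}.jsonl",
    unique_id: str = "timestamp",
    timestamp_format: str = "%Y%m%d_%H%M%S",
    now: Optional[datetime] = None,
) -> str:
    if unique_id == "timestamp":
        value = (now or datetime.now()).strftime(timestamp_format)
    elif unique_id == "session_id":
        value = str(uuid.uuid4())  # a fresh UUID for the session
    else:
        raise ValueError(f"unsupported unique_id: {unique_id!r}")
    # str.replace avoids str.format errors if the pattern holds other braces
    return path_pattern.replace("{unique_id}", value)


print(build_log_path(now=datetime(2025, 1, 2, 3, 4, 5)))
```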

**Class: `LoggerSettings`**

- **Inherits from**: BaseModel

- **Description**: Logger settings for the MCP Agent application.

- **Attributes**:

- `type` (Literal['none', 'console', 'file', 'http']) = 'console'

- `transports` (List[Literal['none', 'console', 'file', 'http']]) = []: List of transports to use (can enable multiple simultaneously)

- `level` (Literal['debug', 'info', 'warning', 'error']) = 'info': Minimum logging level

- `progress_display` (bool) = False: Enable or disable the progress display

- `path` (str) = 'mcp-agent.jsonl': Path to log file, if logger 'type' is 'file'.

- `path_settings` (LogPathSettings | None) = None: Save log files with more advanced path semantics, like having timestamps or session id in the log name.

- `batch_size` (int) = 100: Number of events to accumulate before processing

- `flush_interval` (float) = 2.0: How often to flush events in seconds

- `max_queue_size` (int) = 2048: Maximum queue size for event processing

- `http_endpoint` (str | None) = None: HTTP endpoint for event transport

- `http_headers` (dict[str, str] | None) = None: HTTP headers for event transport

- `http_timeout` (float) = 5.0: HTTP timeout seconds for event transport
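`batch_size` and `flush_interval` describe a familiar batching policy: flush when enough events accumulate or when enough time has passed since the last flush, whichever comes first. A simplified, synchronous sketch of that policy; `EventBatcher` is hypothetical, does not model `max_queue_size`, and does not reflect mcp-agent's actual async event processing. The clock is injectable so the behavior is deterministic.

```python
import time
from typing import Any, Callable, List


class EventBatcher:
    def __init__(
        self,
        sink: Callable[[List[Any]], None],
        batch_size: int = 100,
        flush_interval: float = 2.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.sink = sink
        self.batch_size = batch_size
        self.flush_interval = flush_interval
        self.clock = clock
        self.buffer: List[Any] = []
        self.last_flush = clock()

    def add(self, event: Any) -> None:
        self.buffer.append(event)
        size_due = len(self.buffer) >= self.batch_size
        time_due = self.clock() - self.last_flush >= self.flush_interval
        if size_due or time_due:
            self.flush()

    def flush(self) -> None:
        if self.buffer:
            self.sink(self.buffer)
            self.buffer = []  # start a new list; the sink keeps the old one
        self.last_flush = self.clock()
```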

**Class: `Settings`**

- **Inherits from**: BaseSettings

- **Description**: Settings class for the MCP Agent application.

- **Attributes**:

- `model_config` = SettingsConfigDict(env_nested_delimiter='__', env_file='.env', env_file_encoding='utf-8', extra='allow', nested_model_default_partial_update=True)

- `mcp` (MCPSettings | None) = MCPSettings(): MCP config, such as MCP servers

- `execution_engine` (Literal['asyncio', 'temporal']) = 'asyncio': Execution engine for the MCP Agent application

- `temporal` (TemporalSettings | None) = None: Settings for Temporal workflow orchestration

- `anthropic` (AnthropicSettings | None) = None: Settings for using Anthropic models in the MCP Agent application

- `bedrock` (BedrockSettings | None) = None: Settings for using Bedrock models in the MCP Agent application

- `cohere` (CohereSettings | None) = None: Settings for using Cohere models in the MCP Agent application

- `openai` (OpenAISettings | None) = None: Settings for using OpenAI models in the MCP Agent application

- `azure` (AzureSettings | None) = None: Settings for using Azure models in the MCP Agent application

- `google` (GoogleSettings | None) = None: Settings for using Google models in the MCP Agent application

- `otel` (OpenTelemetrySettings | None) = OpenTelemetrySettings(): OpenTelemetry logging settings for the MCP Agent application

- `logger` (LoggerSettings | None) = LoggerSettings(): Logger settings for the MCP Agent application

- `usage_telemetry` (UsageTelemetrySettings | None) = UsageTelemetrySettings(): Usage tracking settings for the MCP Agent application
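Because `model_config` sets `env_nested_delimiter='__'`, nested settings can be supplied through environment variables such as `OPENAI__API_KEY`. The sketch below only illustrates that name-to-path mapping; pydantic-settings does the real parsing (including type coercion and case handling), and `env_to_nested` is hypothetical. Top-level variables without the delimiter are ignored here for simplicity.

```python
from typing import Any, Dict, Mapping


def env_to_nested(env: Mapping[str, str], delimiter: str = "__") -> Dict[str, Any]:
    """Turn e.g. OPENAI__API_KEY=sk-... into {"openai": {"api_key": "sk-..."}}."""
    result: Dict[str, Any] = {}
    for key, value in env.items():
        if delimiter not in key:
            continue  # top-level variables are ignored in this sketch
        *parents, leaf = key.lower().split(delimiter)
        node = result
        for part in parents:
            node = node.setdefault(part, {})
        node[leaf] = value
    return result


print(env_to_nested({"OPENAI__API_KEY": "sk-test", "LOGGER__LEVEL": "debug", "HOME": "/root"}))
```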

**Function:** `MCPRootSettings.validate_uri(cls, v: str) -> str`

- **Description**: Validate that the URI starts with file:// (required by the MCP specification, version 2024-11-05).

- **Parameters**

- `cls`

- `v` (str)

- **Returns**

- `str`: Return value
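A standalone version of this check, written as a plain function rather than a pydantic validator so it runs without dependencies; `validate_root_uri` is hypothetical. `pathlib` can build a well-formed `file://` URI from an absolute path, which avoids hand-assembling root URIs.

```python
from pathlib import PurePosixPath


def validate_root_uri(v: str) -> str:
    """Accept only file:// URIs, in the spirit of MCPRootSettings.validate_uri."""
    if not v.startswith("file://"):
        raise ValueError("Root URI must start with file://")
    return v


# as_uri() requires an absolute path and produces a file:// URI
root = validate_root_uri(PurePosixPath("/home/user/project").as_uri())
print(root)
```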

**Function:** `Settings.find_config(cls) -> Path | None`

- **Description**: Find the config file in the current directory or parent directories.

- **Parameters**

- `cls`

- **Returns**

- `Path | None`: Return value

**Function:** `Settings.find_secrets(cls) -> Path | None`

- **Description**: Find the secrets file in the current directory or parent directories.

- **Parameters**

- `cls`

- **Returns**

- `Path | None`: Return value

**Function:** `Settings._find_config(cls, filenames: List[str]) -> Path | None`

- **Description**: Search the current directory and each of its parent directories for a file matching any of the given filenames.

- **Parameters**

- `cls`

- `filenames` (List[str])

- **Returns**

- `Path | None`: Return value
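The upward search described by `find_config`, `find_secrets`, and `_find_config` can be sketched with `pathlib`. The `start` parameter is added here for testability, since the listed methods take only `cls`. The filename in the usage line is an assumption and may not be the name the library actually looks for.

```python
from pathlib import Path
from typing import List, Optional


def find_config_file(filenames: List[str], start: Optional[Path] = None) -> Optional[Path]:
    """Return the first existing match for any of `filenames`, searching `start` then each parent."""
    current = (start or Path.cwd()).resolve()
    # Path.parents yields every ancestor up to the filesystem root.
    for directory in [current, *current.parents]:
        for name in filenames:
            candidate = directory / name
            if candidate.exists():
                return candidate
    return None


print(find_config_file(["mcp_agent.config.yaml"]))  # hypothetical filename
```

Because each directory is checked for every name before moving up, the nearest directory wins. Within a single directory, earlier names in `filenames` take priority.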

**Function:** `get_settings(config_path: str | None = None) -> Settings`

- **Description**: Get settings instance, automatically loading from config file if available.

- **Parameters**

- `config_path` (str | None, optional): Default is None

- **Returns**

- `Settings`: Return value

**Function:** `deep_merge(base: dict, update: dict) -> dict`

- **Description**: Recursively merge two dictionaries, preserving nested structures.

- **Parameters**

- `base` (dict)

- `update` (dict)

- **Returns**

- `dict`: Return value
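This minimal sketch of the merge `deep_merge` describes makes two assumptions: values from `update` win on conflicts, and nested dictionaries are merged key by key. It returns a new dictionary, whereas the library's function may modify `base` in place instead.

```python
from typing import Any


def deep_merge(base: dict, update: dict) -> dict:
    """Return a new dict with `update` merged into `base`; nested dicts merge recursively."""
    merged: dict[str, Any] = dict(base)  # shallow copy so the caller's `base` is not mutated
    for key, value in update.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            # Both sides are mappings: merge them rather than replacing wholesale.
            merged[key] = deep_merge(merged[key], value)
        else:
            # New keys and non-dict values from `update` win outright.
            merged[key] = value
    return merged


# Example: overlaying a secrets mapping on a config mapping (keys are hypothetical).
config = {"openai": {"default_model": "gpt-4o"}, "execution_engine": "asyncio"}
secrets = {"openai": {"api_key": "sk-test"}}
print(deep_merge(config, secrets))
# → {'openai': {'default_model': 'gpt-4o', 'api_key': 'sk-test'}, 'execution_engine': 'asyncio'}
```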

### src/mcp_agent/core/context.py

**Module Description**: A central context object to store global state that is shared across the application.

**Class: `Context`**

- **Inherits from**: BaseModel

- **Description**: Context object that is passed through the application and holds global state shared across it.

- **Attributes**:

- `config` (Optional[Settings]) = None

- `executor` (Optional[Executor]) = None

- `human_input_handler` (Optional[HumanInputCallback]) = None

- `signal_notification` (Optional[SignalWaitCallback]) = None

- `upstream_session` (Optional[ServerSession]) = None

- `model_selector` (Optional[ModelSelector]) = None

- `session_id` (str | None) = None

- `app` (Optional['MCPApp']) = None

- `server_registry` (Optional[ServerRegistry]) = None

- `task_registry` (Optional[ActivityRegistry]) = None

- `signal_registry` (Optional[SignalRegistry]) = None

- `decorator_registry` (Optional[DecoratorRegistry]) = None

- `workflow_registry` (Optional['WorkflowRegistry']) = None

- `tracer` (Optional[trace.Tracer]) = None

- `model_config` = ConfigDict(extra='allow', arbitrary_types_allowed=True)

**Function:** `configure_otel(config: 'Settings')`

- **Description**: Configure OpenTelemetry based on the application config.

- **Parameters**

- `config` ('Settings')

**Function:** `configure_logger(config: 'Settings', session_id: str | None = None)`

- **Description**: Configure logging and tracing based on the application config.

- **Parameters**

- `config` ('Settings')

- `session_id` (str | None, optional): Default is None

**Function:** `configure_usage_telemetry(_config: 'Settings')`

- **Description**: Configure usage telemetry based on the application config. Usage tracking is not yet implemented (marked as a TODO in the source).

- **Parameters**

- `_config` ('Settings')

**Function:** `configure_executor(config: 'Settings')`

- **Description**: Configure the executor based on the application config.

- **Parameters**

- `config` ('Settings')

**Function:** `configure_workflow_registry(config: 'Settings', executor: Executor)`

- **Description**: Configure the workflow registry based on the application config.

- **Parameters**

- `config` ('Settings')

- `executor` (Executor)

**Function:** `initialize_context(config: Optional['Settings'] = None, task_registry: Optional[ActivityRegistry] = None, decorator_registry: Optional[DecoratorRegistry] = None, signal_registry: Optional[SignalRegistry] = None, store_globally: bool = False)`

- **Description**: Initialize the global application context.

- **Parameters**

- `config` (Optional['Settings'], optional): Default is None

- `task_registry` (Optional[ActivityRegistry], optional): Default is None

- `decorator_registry` (Optional[DecoratorRegistry], optional): Default is None

- `signal_registry` (Optional[SignalRegistry], optional): Default is None

- `store_globally` (bool, optional): Default is False

**Function:** `cleanup_context()`

- **Description**: Cleanup the global application context.

**Function:** `get_current_context() -> Context`

- **Description**: Synchronous initializer and getter for the global application context. For async code, use `aget_current_context` instead.

- **Returns**

- `Context`: Return value

**Function:** `run_async()`

**Function:** `get_current_config()`

- **Description**: Get the current application config.
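The functions above follow a lazily initialized module-level context. The sketch below shows that pattern under simplifying assumptions. `Context` is a small dataclass standing in for the Pydantic model, and `config` is a plain dict. In the listing, `get_current_context` contains a nested `run_async` helper, which suggests the real initializer is asynchronous. This sketch keeps everything synchronous.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Context:
    """Stand-in for the Pydantic Context model; holds shared application state."""
    config: Optional[dict] = None
    session_id: Optional[str] = None


_global_context: Optional[Context] = None


def initialize_context(config: Optional[dict] = None, store_globally: bool = False) -> Context:
    global _global_context
    context = Context(config=config or {})
    if store_globally:
        # Only publish the context module-wide when explicitly asked to.
        _global_context = context
    return context


def get_current_context() -> Context:
    """Return the global context, creating and storing one on first access."""
    if _global_context is None:
        return initialize_context(store_globally=True)
    return _global_context


def cleanup_context() -> None:
    global _global_context
    _global_context = None
```

With `store_globally=False`, callers get an isolated context (useful in tests), while `get_current_context` always hands back the shared instance.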

### src/mcp_agent/core/context_dependent.py

**Class: `ContextDependent`**

- **Description**: Mixin class for components that need context access.

Provides both global fallback and instance-specific context support.

**Function:** `ContextDependent.__init__(self, context: Optional['Context'] = None)`

**Function:** `ContextDependent.context(self) -> 'Context'`

- **Description**: Get context, with graceful fallback to global context if needed. Raises clear error if no context is available.

- **Parameters**

- `self`

- **Returns**

- `'Context'`: Return value

**Function:** `ContextDependent.use_context(self, context: 'Context')`

- **Description**: Temporarily use a different context.

- **Parameters**

- `self`

- `context` ('Context')

### src/mcp_agent/core/decorator_app.py

**Module Description**: Decorator-based interface for MCP Agent applications. Provides a simplified way to create and manage agents using decorators.

**Class: `MCPAgentDecorator`**

- **Description**: A decorator-based interface for MCP Agent applications.

Provides a simplified way to create and manage agents using decorators.

**Class: `AgentAppWrapper`**

- **Description**: Wrapper class providing a simplified interface to the agent application.

**Function:** `MCPAgentDecorator.__init__(self, name: str, config_path: Optional[str] = None)`

- **Description**: Initialize the decorator interface.

- **Parameters**

- `self`

- `name` (str): Name of the application

- `config_path` (Optional[str], optional): Optional path to config file

**Function:** `MCPAgentDecorator._load_config(self)`

- **Description**: Load the configuration, parsing YAML without dotenv processing.

- **Parameters**

- `self`

**Function:** `MCPAgentDecorator.agent(self, name: str, instruction: str, servers: List[str]) -> Callable`

- **Description**: Decorator to create and register an agent.

- **Parameters**

- `self`

- `name` (str): Name of the agent

- `instruction` (str): Base instruction for the agent

- `servers` (List[str]): List of server names the agent should connect to

- **Returns**

- `Callable`: Return value

**Function:** `MCPAgentDecorator.decorator(func: Callable) -> Callable`

**Function:** `MCPAgentDecorator.wrapper()`

**Function:** `MCPAgentDecorator.run(self)`

- **Description**: Context manager for running the application. Handles setup and teardown of the app and agents.

- **Parameters**

- `self`

**Function:** `AgentAppWrapper.__init__(self, app: MCPApp, agents: Dict[str, Agent])`

**Function:** `AgentAppWrapper.send(self, agent_name: str, message: str) -> Any`

- **Description**: Send a message to a specific agent and return its response.

- **Parameters**

- `self`

- `agent_name` (str): Name of the agent to send message to

- `message` (str): Message to send

- **Returns**

- `Any`: Agent's response
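The registration half of `MCPAgentDecorator.agent` follows the usual decorator-factory shape. In this hypothetical sketch, `AgentRegistry` stands in for the real class. It only records each agent's configuration, leaving agent creation to a later setup step, which is what `run()` is described as handling. The listing shows a nested `wrapper()`, so the real decorator wraps `func`, whereas this sketch returns it unchanged.

```python
from typing import Callable, Dict, List


class AgentRegistry:
    """Hypothetical stand-in for the registration part of MCPAgentDecorator."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.agents: Dict[str, dict] = {}

    def agent(self, name: str, instruction: str, servers: List[str]) -> Callable:
        def decorator(func: Callable) -> Callable:
            # Record the configuration; actual agents would be created at run time.
            self.agents[name] = {"instruction": instruction, "servers": list(servers)}
            return func  # returned unchanged in this sketch
        return decorator


app = AgentRegistry("demo")


@app.agent(name="finder", instruction="Find files and summarize them.", servers=["filesystem"])
def main() -> str:
    return "ran"


print(app.agents)
```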

### src/mcp_agent/core/exceptions.py

**Module Description**: Custom exceptions for the mcp-agent library. Enables user-friendly error handling for common issues.

**Class: `MCPAgentError`**

- **Inherits from**: Exception

- **Description**: Base exception class for mcp-agent errors.

**Class: `ServerConfigError`**

- **Inherits from**: MCPAgentError

- **Description**: Raised when there are issues with MCP server configuration

Example: Server name referenced in agent.servers[] but not defined in config

**Class: `AgentConfigError`**

- **Inherits from**: MCPAgentError

- **Description**: Raised when there are issues with Agent or Workflow configuration

Example: Parallel fan-in references unknown agent

**Class: `ProviderKeyError`**

- **Inherits from**: MCPAgentError

- **Description**: Raised when there are issues with LLM provider API keys

Example: OpenAI/Anthropic key not configured but model requires it

**Class: `ServerInitializationError`**

- **Inherits from**: MCPAgentError

- **Description**: Raised when a server fails to initialize properly.
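Because every exception above derives from `MCPAgentError`, callers can catch the base class to handle any library error. They can also catch a subclass such as `ServerConfigError` to handle a specific case. The sketch below redefines two of the classes locally so it runs standalone. `resolve_servers` is a hypothetical helper that reproduces the `ServerConfigError` example scenario: an agent references a server that is not defined in the config.

```python
from typing import Dict, List


class MCPAgentError(Exception):
    """Local mirror of the documented base class."""


class ServerConfigError(MCPAgentError):
    """Local mirror of the documented subclass."""


def resolve_servers(requested: List[str], configured: Dict[str, dict]) -> List[dict]:
    """Hypothetical helper: look up each server name an agent references."""
    missing = [name for name in requested if name not in configured]
    if missing:
        raise ServerConfigError(f"Servers not defined in config: {', '.join(missing)}")
    return [configured[name] for name in requested]


try:
    resolve_servers(["fetch", "filesystem"], {"fetch": {"command": "uvx"}})
except MCPAgentError as exc:  # the base class also catches ServerConfigError
    print(f"{type(exc).__name__}: {exc}")
# prints "ServerConfigError: Servers not defined in config: filesystem"
```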