Click on "Install Server".

Wait a few minutes for the server to deploy. Once ready, it will show a "Started" state.

In the chat, type

@followed by the MCP server name and your instructions, e.g., "@Azure SQL MCP ServerShow me the total sales by category this month as a bar chart"

That's it! The server will respond to your query, and you can continue using it as needed.

Here is a step-by-step guide with screenshots.

Azure SQL MCP Server — Complete Guide

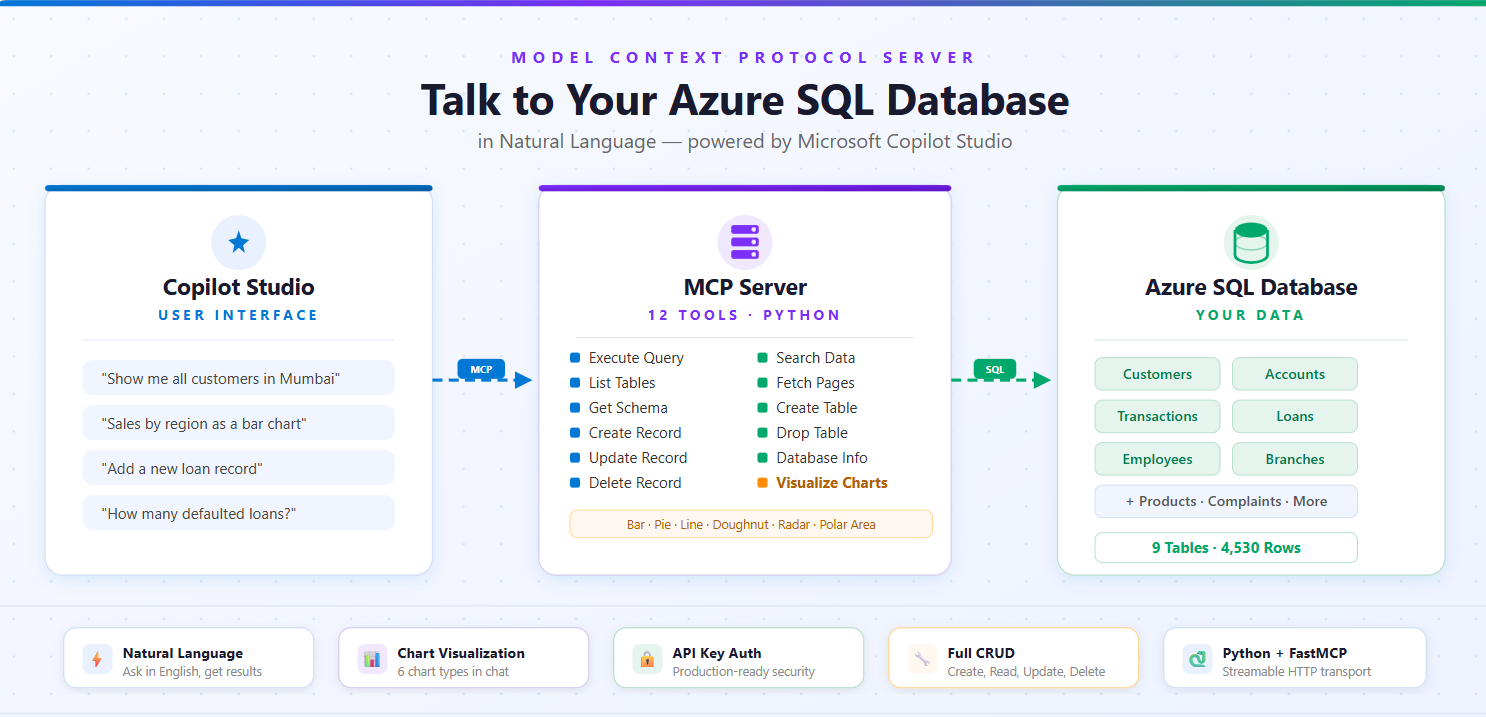

A Model Context Protocol (MCP) server that connects Microsoft Copilot Studio to your Azure SQL Database. Supports 12 tools for querying, CRUD operations, schema inspection, search, and chart visualization.

Table of Contents

Architecture

1. Prerequisites

Python 3.8+

ODBC Driver 18 for SQL Server — Download here

Azure SQL Database with server hostname, database name, username, and password

Cloudflare Tunnel (for local testing) —

winget install Cloudflare.cloudflared

Install ODBC Driver

Windows: Download and install from the link above.

macOS:

Linux (Ubuntu/Debian):

2. Installation

3. Configuration

Create a .env file in the project root:

Never commit Add it to

.gitignore.

4. Code Fixes

The MCP SDK requires specific configuration. Apply these 3 fixes to azure_sql_mcp.py:

Fix 1: Lifespan function signature

Fix 2: Host and port on the constructor

Fix 3: HTTP transport

5. Running the Server

You should see:

MCP endpoint: http://localhost:8000/mcp

6. Exposing to the Internet

Copilot Studio needs a public HTTPS URL. Use Cloudflare Tunnel (free, no signup).

Why not ngrok? ngrok's free tier shows a browser warning page (

ERR_NGROK_6024) that blocks API clients like Copilot Studio.

Steps

Install (one-time):

winget install Cloudflare.cloudflaredClose and reopen your terminal after install.

In a new terminal (keep the server running in the first one):

cloudflared tunnel --url http://localhost:8000Copy the URL from the output:

https://electronic-annie-jose-spoken.trycloudflare.comYour MCP server URL for Copilot Studio:

https://electronic-annie-jose-spoken.trycloudflare.com/mcp

URLs change on restart. For a permanent URL, deploy to Azure App Service (see Production Deployment).

7. Copilot Studio Setup

Step 1: Add the MCP Server

Go to Copilot Studio

Open your agent → Tools → Add a tool → New tool → Model Context Protocol

Fill in:

Field | Value |

Server name |

|

Server description |

|

Server URL |

|

Authentication | None (local testing) or API key (production) |

Click Create → Next → Create new connection → Add and configure

Step 2: Configure the Agent (Overview tab)

Both fields are required — the agent won't work without them.

Description:

Instructions (click Edit):

Step 3: Publish and Test

Click Publish, wait a minute, then test with prompts like:

"Show me all tables in the database"

"What's the schema of the customers table?"

"Get the first 10 rows from orders"

"How many records are in each table?"

"Show me sales by region as a bar chart"

8. All 12 Tools Reference

# | Tool | What it does | Read-Only |

1 |

| Run any SQL query | No |

2 |

| List all tables with row counts | Yes |

3 |

| Get column details for a table | Yes |

4 |

| Fetch paginated table data | Yes |

5 |

| Database metadata & stats | Yes |

6 |

| INSERT a new row | No |

7 |

| UPDATE existing rows (WHERE required) | No |

8 |

| DELETE rows (WHERE required) | No |

9 |

| Search text across columns | Yes |

10 |

| Create a new table | No |

11 |

| Drop a table | No |

12 |

| Generate charts (Adaptive Card) | Yes |

All tools support both markdown and json response formats.

9. CRUD Operations

CREATE — azure_sql_create_record

Natural language: "Add a new customer named John Doe with email

READ — azure_sql_execute_query

Natural language: "Show me all customers from Seattle"

UPDATE — azure_sql_update_record

Safety: WHERE clause is required — prevents accidental mass updates.

Natural language: "Update customer 123's email to

DELETE — azure_sql_delete_record

Safety: WHERE clause is required — prevents accidental mass deletion.

Natural language: "Delete customer with ID 999"

SEARCH — azure_sql_search

If columns is omitted, all text columns are searched automatically.

Natural language: "Search for 'john' in customers table"

CREATE TABLE — azure_sql_create_table

Column properties: name, type, primary_key, identity, nullable, default.

Natural language: "Create a customers table with id, name, email, and phone columns"

DROP TABLE — azure_sql_drop_table

Uses DROP TABLE IF EXISTS — won't error if table doesn't exist.

Natural language: "Drop the old_backup_table"

10. Chart Visualization

Tool: azure_sql_visualize_data

Generates charts via QuickChart API and returns Adaptive Cards that render directly in Copilot Studio.

Parameters

Parameter | Required | Default | Description |

| Yes | — | SQL query to get chart data |

| No |

| bar, pie, line, doughnut, radar, polarArea |

| Yes | — | Chart title |

| Yes | — | Column for labels (X-axis / slices) |

| Yes | — | Column for values (Y-axis / data) |

| No | 800 | 400–1200 pixels |

| No | 500 | 300–800 pixels |

Chart Types

Type | Best For |

| Comparing categories (sales by region) |

| Proportions (market share) |

| Trends over time (monthly revenue) |

| Modern proportions (budget breakdown) |

| Multi-dimensional data (performance metrics) |

| Cyclical data (seasonal patterns) |

Example: Bar Chart

Example: Pie Chart

Example: Line Chart (Trends)

What Copilot Displays

Every chart returns an Adaptive Card with the chart image, plus automatic statistics: Total, Average, Highest (with label), Lowest (with label), and Data Points count.

Best Practices for Charts

Bar charts: 3–15 categories, sorted by value DESC

Pie charts: 3–8 slices, sorted by value DESC

Line charts: 5–50 points, sorted by date/time ASC

Use clear column aliases:

SUM(sales) as total_salesnotSUM(s)Test your query with

azure_sql_execute_queryfirst, then visualize

11. Example Queries & Use Cases

Data Exploration

Data Analysis

Data Quality

Parameterized Queries (SQL injection safe)

Aggregation

Joins

Time-Based Queries

Complete CRUD Workflow

Create table →

azure_sql_create_tableInsert data →

azure_sql_create_recordSearch →

azure_sql_searchUpdate →

azure_sql_update_recordVisualize →

azure_sql_visualize_dataClean up →

azure_sql_delete_recordorazure_sql_drop_table

12. Production Deployment

Azure App Service

Create deployment files:

runtime.txt:python-3.11Procfile:web: python azure_sql_mcp.pyDeploy:

az login az group create --name mcp-servers --location eastus az appservice plan create --name mcp-plan --resource-group mcp-servers --sku B1 --is-linux az webapp create --name azure-sql-mcp --resource-group mcp-servers --plan mcp-plan --runtime "PYTHON:3.11" az webapp config appsettings set --name azure-sql-mcp --resource-group mcp-servers --settings \ AZURE_SQL_SERVER="your-server.database.windows.net" \ AZURE_SQL_DATABASE="your-database" \ AZURE_SQL_USERNAME="your-username" \ AZURE_SQL_PASSWORD="your-password" \ AZURE_SQL_DRIVER="ODBC Driver 18 for SQL Server" \ PORT="8000" az webapp up --name azure-sql-mcp --resource-group mcp-serversPermanent URL:

https://azure-sql-mcp.azurewebsites.net/mcp

Docker

13. API Key Authentication

For production, add API key middleware to protect your server.

Step 1: Add middleware to azure_sql_mcp.py

Add this above the Pydantic Models section:

Step 2: Update the entry point

Step 3: Add to .env

Generate a strong key: python -c "import secrets; print(secrets.token_urlsafe(32))"

Step 4: Configure in Copilot Studio

Field | Value |

Authentication type | API key |

Type | Header |

Header name |

|

Enter the same key value when creating the connection.

14. Troubleshooting

Problem | Fix |

| Add |

| Set |

Server starts but no HTTP output | Set transport to |

ngrok warning page blocks Copilot Studio | Use Cloudflare Tunnel instead |

| Close and reopen your terminal |

Copilot says "Server URL is not valid" | URL must be HTTPS and end with |

Copilot | Check both terminals running (server + tunnel), URL ends with |

"This feature isn't available until your agent has finished setting up" | Fill in agent Description and Instructions on Overview tab, then Publish |

Copilot "Connector request failed: Not Found" | URL needs |

Copilot says "Authentication failed" | Verify API key matches between Copilot Studio and |

Tools not appearing in Copilot Studio | Check server logs for errors, verify server is running |

Connection to Azure SQL fails | Check |

ODBC driver not found | Install ODBC Driver 18 |

Query timeout | Optimize query, add indexes, use |

Permission denied | Grant necessary permissions to database user |

Chart shows "Column Not Found" | Match |

Chart shows "No Data Found" | Check your WHERE clause and date ranges |

Check installed ODBC drivers

15. Security Checklist

.envfile is in.gitignore(never commit credentials)Server URL uses HTTPS

API key authentication enabled for production

Parameterized queries used for user input

Azure SQL firewall restricts access to known IPs

Database user has least-privilege permissions

Azure SQL auditing enabled

API key and passwords rotated regularly

No multiple SQL statements allowed (built-in)

WHERE clause required for UPDATE/DELETE (built-in)

Project Structure

Adding Custom Tools

Changelog

v2.0.0 — Chart Visualization

Added

azure_sql_visualize_datatool (6 chart types)Adaptive Card output for Copilot Studio

Auto-statistics (total, average, min, max)

QuickChart API integration (no API key required)

v1.0.0 — Initial Release

5 core tools: execute_query, list_tables, get_table_schema, get_table_data, get_database_info

Pydantic validation, parameterized queries, dual output formats

v2.1.0 — Full CRUD + Deployment

Added 7 new tools: create_record, update_record, delete_record, search, create_table, drop_table, visualize_data

Cloudflare Tunnel support (replaces ngrok)

API key authentication middleware

Copilot Studio agent configuration (description + instructions)