The MCP Hub server provides a comprehensive multi-agent AI system for research-driven code generation and execution with robust monitoring capabilities.

Core Capabilities:

Complete Research & Code Workflow: Process complex user requests through question enhancement, web research, intelligent Python code generation, and secure execution in isolated Modal sandboxes

Individual Agent Access: Use specialized agents independently for specific tasks like query enhancement, web searching, text processing, or code generation

Secure Code Execution: Run generated code in pre-warmed sandbox environments with automatic package installation and clear error handling

Research Integration: Perform targeted web searches with automatic summarization and APA-style citation formatting

Text Processing: Summarize content, perform reasoning, or extract keywords using large language models

System Monitoring: Access comprehensive health status, performance analytics, cache statistics, and sandbox pool management

Multi-LLM Support: Compatible with Nebius, OpenAI, Anthropic, and HuggingFace providers

Advanced Features: Intelligent caching to reduce redundant API calls, fault tolerance, and resource utilization tracking

Uses .env file for managing API keys and environment configuration

Built on Gradio's MCP server capabilities for creating a multi-agent architecture with interconnected agent services and a web interface

Uses Nebius (OpenAI-compatible) models for text processing, summarization, and question enhancement

Requires Python 3.12+ as the core runtime environment for the MCP server implementation

Click on "Install Server".

Wait a few minutes for the server to deploy. Once ready, it will show a "Started" state.

In the chat, type @ followed by the MCP server name and your instructions, e.g., "@MCP Hub research how to implement feature scaling in Python pandas"

That's it! The server will respond to your query, and you can continue using it as needed.


title: ShallowCodeResearch
emoji: 📉
colorFrom: red
colorTo: pink
sdk: gradio
sdk_version: 5.33.1
app_file: app.py
pinned: false
short_description: Coding research assistant that generates code and tests it
tags:
- mcp
- multi-agent
- research
- code-generation
- ai-assistant
- gradio
- python
- web-search
- llm
- modal
- mcp-server-track
python_version: '3.12'

Shallow Research Code Assistant - Multi-Agent AI Code Assistant

Technologies Used

This is part of the MCP track for the Hackathon (with a smidge of Agents)

Gradio for the UI and MCP logic

Modal AI for spinning up sandboxes for code execution

Nebius, OpenAI, Anthropic and Hugging Face can be used for LLM calls

Nebius is set as the default inference provider, chosen for the token speed available on the platform

❤️ A very big thank you to the sponsors for the generous credits for this hackathon and Hugging Face and Gradio for putting this event together 🔥

Special thanks to Yuvi for putting up with us in the Discord asking for credits 😂


🚀 Multi-agent system for AI-powered search and code generation

What is the Shallow Research MCP Hub for Code Assistance?

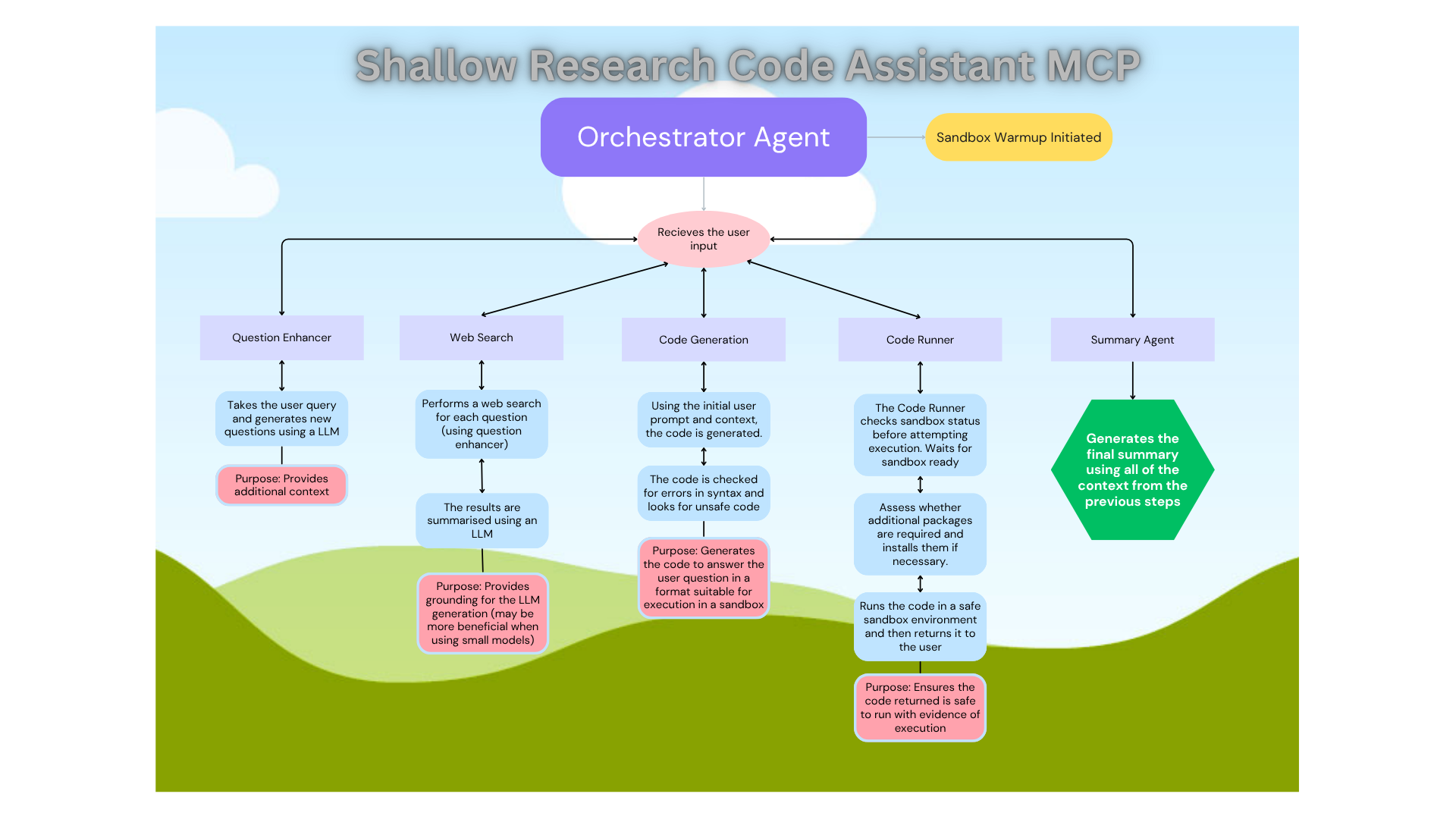

Shallow Research Code Assistant is a multi-agent research and code assistant built using Gradio's Model Context Protocol (MCP) server functionality. It orchestrates specialized AI agents to provide research capabilities and generate executable Python code. This "shallow" research tool (it's definitely not deep research) augments the initial user query to broaden its scope before performing web searches for grounding.

The coding agent then generates the code to answer the user's question and checks it for errors. To ensure the code is valid, it is executed in a remote sandbox on Modal infrastructure. These sandboxes are spawned when needed with a small footprint (only pandas, numpy, requests and scikit-learn are installed).

However, if additional packages are required, they are installed prior to execution (expect some delay here, depending on the request).

Once the code has run, the whole process is summarised and returned to the user.

📹 Demo Video

Click the badge above to watch the complete demonstration of the MCP Demo Shallow Research Code Assistant in action

Key information

I've found that when using VS Code for the MCP interaction, it's useful to type the main agent function name to ensure the right tool is picked.

For example "agent research request: How do you write a python script to perform scaling of features in a dataframe"

This is the JSON script required to set up the MCP in VS Code

This is the JSON script required to set up the MCP via Cline in VS Code

✨ Key Features

🧠 Multi-Agent Architecture: Specialized agents working in orchestrated workflows

🔍 Intelligent Research: Web search with automatic summarization and citation formatting

💻 Code Generation: Context-aware Python code creation with secure execution

🔗 MCP Server: Built-in MCP server for seamless agent communication

🎯 Multiple LLM Support: Compatible with Nebius, OpenAI, Anthropic, and HuggingFace (Currently set to Nebius Inference)

🛡️ Secure Execution: Modal sandbox environment for safe code execution

📊 Performance Monitoring: Advanced metrics collection and health monitoring

🏛️ MCP Workflow Architecture

The diagram above illustrates the complete Multi-Agent workflow architecture, showing how different agents communicate through the MCP (Model Context Protocol) server to deliver comprehensive research and code generation capabilities.

🚀 Quick Start

Configure your environment by setting up API keys in the Settings tab

Choose your LLM provider (Nebius is set by default in the Space)

Input your research query in the Orchestrator Flow tab

Watch the magic happen as agents collaborate to research and generate code

🏗️ Architecture

Core Agents

Question Enhancer: Breaks down complex queries into focused sub-questions

Web Search Agent: Performs targeted searches using Tavily API

LLM Processor: Handles text processing, summarization, and analysis

Citation Formatter: Manages academic citation formatting (APA style)

Code Generator: Creates contextually aware Python code

Code Runner: Executes code in secure Modal sandboxes

Orchestrator: Coordinates the complete workflow
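
To make the hand-offs between the agents above concrete, here is a rough sketch of how an orchestrator might chain them. It assumes each agent can be treated as a plain callable; `run_workflow`, `WorkflowResult` and the lambda stubs are hypothetical stand-ins, not the project's real functions, and the citation formatting step is left out for brevity.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class WorkflowResult:
    sub_questions: list[str]
    sources: list[str]
    code: str
    output: str
    summary: str


def run_workflow(
    request: str,
    enhance: Callable[[str], list[str]],         # Question Enhancer
    search: Callable[[str], list[str]],          # Web Search Agent
    generate: Callable[[str, list[str]], str],   # Code Generator
    execute: Callable[[str], str],               # Code Runner
    summarize: Callable[[str, str], str],        # LLM Processor
) -> WorkflowResult:
    """Run the agents in order, passing each stage's output to the next."""
    sub_questions = enhance(request)
    sources = [hit for question in sub_questions for hit in search(question)]
    code = generate(request, sources)
    output = execute(code)
    summary = summarize(request, output)
    return WorkflowResult(sub_questions, sources, code, output, summary)


# Stub agents so the sketch runs without any API keys or sandboxes.
result = run_workflow(
    "scale features in a dataframe",
    enhance=lambda q: [f"{q}: approach", f"{q}: pitfalls"],
    search=lambda q: [f"source for '{q}'"],
    generate=lambda q, sources: f"# code for {q} using {len(sources)} sources",
    execute=lambda code: "ok",
    summarize=lambda q, output: f"{q} -> {output}",
)
print(result.summary)
```

Passing the agents in as callables keeps the orchestrator testable on its own, since each real agent can be swapped for a stub.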

Workflow Example

🛠️ Setup Requirements

Required API Keys

LLM Provider (choose one):

Nebius API (recommended)

OpenAI API

Anthropic API

HuggingFace Inference API

Tavily API (for web search)

Modal Account (for code execution)
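
The requirement above (one LLM provider key, plus Tavily and Modal credentials) could be checked at startup with something like the sketch below. The environment variable names are assumptions chosen for illustration; the names the app actually reads may differ, so check the Settings tab or the project's `.env` file.

```python
import os
from collections.abc import Mapping

# Hypothetical variable names, for illustration only.
LLM_KEYS = (
    "NEBIUS_API_KEY",
    "OPENAI_API_KEY",
    "ANTHROPIC_API_KEY",
    "HUGGINGFACE_API_KEY",
)
ALWAYS_REQUIRED = ("TAVILY_API_KEY", "MODAL_TOKEN_ID", "MODAL_TOKEN_SECRET")


def missing_config(env: Mapping[str, str] = os.environ) -> list[str]:
    """List each missing required key, plus a note if no LLM provider key is set."""
    problems = [name for name in ALWAYS_REQUIRED if not env.get(name)]
    if not any(env.get(name) for name in LLM_KEYS):
        problems.append("one of: " + ", ".join(LLM_KEYS))
    return problems


if __name__ == "__main__":
    for problem in missing_config():
        print(f"Missing configuration: {problem}")
```

An empty list means the minimum configuration is present; any one of the four provider keys satisfies the LLM requirement.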

Environment Configuration

Set these environment variables or configure in the app:

🎯 Use Cases

Code Generation

Prototype Development: Rapidly create functional code based on requirements

IDE Integration: Add this to your IDE for grounded LLM support

Learning & Education

Code Examples: Generate educational code samples with explanations

Concept Exploration: Research and understand complex programming concepts

Best Practices: Learn current industry standards and methodologies

🔧 Advanced Features

Performance Monitoring

Real-time metrics collection

Response time tracking

Success rate monitoring

Resource usage analytics
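
As an illustration of response time tracking and success rate monitoring, the sketch below records a duration and an outcome per operation and summarizes them on request. The `Metrics` class is a hypothetical example, not the collector the project ships.

```python
import time
from collections import defaultdict
from typing import Any, Callable


class Metrics:
    """Collects per-operation call durations and failure counts in memory."""

    def __init__(self) -> None:
        self._durations: dict[str, list[float]] = defaultdict(list)
        self._failures: dict[str, int] = defaultdict(int)

    def record(self, operation: str, seconds: float, ok: bool) -> None:
        self._durations[operation].append(seconds)
        if not ok:
            self._failures[operation] += 1

    def timed(self, operation: str, func: Callable[..., Any], *args: Any, **kwargs: Any) -> Any:
        """Call func, record how long it took and whether it raised."""
        start = time.perf_counter()
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.record(operation, time.perf_counter() - start, ok=False)
            raise
        self.record(operation, time.perf_counter() - start, ok=True)
        return result

    def summary(self, operation: str) -> dict[str, float]:
        durations = self._durations.get(operation, [])
        calls = len(durations)
        if calls == 0:
            return {"calls": 0, "success_rate": 0.0, "avg_seconds": 0.0}
        failures = self._failures.get(operation, 0)
        return {
            "calls": calls,
            "success_rate": (calls - failures) / calls,
            "avg_seconds": sum(durations) / calls,
        }
```

Wrapping an agent call as `metrics.timed("web_search", search, query)` would then feed both the response time and success rate figures.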

Intelligent Caching

Reduces redundant API calls

Improves response times

Configurable TTL settings
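
The caching behaviour described above can be pictured as a small time-to-live (TTL) cache: a result is reused until it is older than the configured TTL, then recomputed. This is a simplified sketch with hypothetical names (`TTLCache`, `get_or_compute`), not the project's cache; the clock is injectable only to make the behaviour easy to test.

```python
import time
from typing import Any, Callable, Hashable


class TTLCache:
    """Caches values per key and recomputes them once they exceed the TTL."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl_seconds = ttl_seconds
        self._clock = clock
        self._entries: dict[Hashable, tuple[float, Any]] = {}
        self.hits = 0
        self.misses = 0

    def get_or_compute(self, key: Hashable, compute: Callable[[], Any]) -> Any:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[0] < self.ttl_seconds:
            self.hits += 1
            return entry[1]  # still fresh: skip the API call
        self.misses += 1
        value = compute()  # missing or expired: call through and store
        self._entries[key] = (now, value)
        return value
```

Keying the cache on the normalized query text would let repeated searches within the TTL window return without another API call, and the `hits` and `misses` counters give the kind of numbers a cache status tool could report.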

Fault Tolerance

Circuit breaker protection

Rate limiting management

Graceful error handling

Automatic retry mechanisms
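
Circuit breaker protection, the first item above, generally works like this: after a run of consecutive failures the breaker "opens" and rejects calls immediately, then allows a single trial call once a cool-down has passed. The class below is a generic sketch of that pattern under assumed thresholds; `CircuitBreaker` and `CircuitOpenError` are hypothetical names and do not describe the project's own implementation.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(
        self,
        max_failures: int = 3,
        reset_after: float = 30.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.max_failures = max_failures
        self.reset_after = reset_after
        self._clock = clock
        self._failures = 0
        self._opened_at: float | None = None

    def call(self, func: Callable[[], T]) -> T:
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_after:
                raise CircuitOpenError("circuit open; call skipped")
            # Cool-down elapsed: allow one trial call (half-open state).
            # A single failure now reopens the circuit.
            self._opened_at = None
            self._failures = self.max_failures - 1
        try:
            result = func()
        except Exception:
            self._failures += 1
            if self._failures >= self.max_failures:
                self._opened_at = self._clock()
            raise
        self._failures = 0  # any success closes the circuit fully
        return result
```

Failing fast while the circuit is open avoids piling more requests onto a provider that is already erroring or rate limiting.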

Sandbox Pool Management

Pre-warmed execution environments

Optimized performance

Resource pooling

Automatic scaling
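
A pre-warmed pool can be sketched as a queue of ready-made environments: requests take one from the queue when available and fall back to a slower cold start when it is empty. In the example below, `WarmPool` is a hypothetical class and `factory` stands in for whatever actually creates a Modal sandbox; no Modal API calls are shown.

```python
import queue
from typing import Callable, Generic, TypeVar

S = TypeVar("S")


class WarmPool(Generic[S]):
    """Keeps a number of ready-made sandboxes so requests skip start-up time."""

    def __init__(self, factory: Callable[[], S], size: int) -> None:
        self._factory = factory
        self._ready = queue.Queue()
        for _ in range(size):
            self._ready.put(factory())  # warm up front

    def acquire(self) -> S:
        try:
            return self._ready.get_nowait()
        except queue.Empty:
            return self._factory()  # pool exhausted: cold start

    def replenish(self) -> None:
        """Create one more warm sandbox, e.g. from a background task."""
        self._ready.put(self._factory())

    def available(self) -> int:
        return self._ready.qsize()
```

Calling `replenish()` after each `acquire()` keeps the pool topped up, so the cold-start path is only hit during bursts.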

📱 Interface Tabs

Orchestrator Flow: Complete end-to-end workflow

Individual Agents: Access each agent separately for specific tasks

Advanced Features: System monitoring and performance analytics

🤝 MCP Integration

This application demonstrates advanced MCP (Model Context Protocol) implementation:

Server Architecture: Full MCP server with schema generation

Function Registry: Proper MCP function definitions with typing

Multi-Agent Communication: Structured data flow between agents

Error Handling: Robust error management across agent interactions

📊 Performance

Response Times: Optimized for sub-second agent responses

Scalability: Handles concurrent requests efficiently

Reliability: Built-in fault tolerance and monitoring

Resource Management: Intelligent caching and pooling

🔍 Technical Details

Python: 3.12+ required

Framework: Gradio with MCP server capabilities

Execution: Modal for secure sandboxed code execution

Search: Tavily API for real-time web research

Monitoring: Comprehensive performance and health tracking

Ready to experience the future of AI-assisted research and development?

Start by configuring your API keys and dive into the world of multi-agent AI collaboration! 🚀

📝 License

This project is licensed under the MIT License.

You are free to use, modify, and distribute this software with proper attribution. See the LICENSE file for details.

Tools

- ShallowCodeResearch_agent_citation_formatter

- ShallowCodeResearch_agent_code_generator

- ShallowCodeResearch_agent_llm_processor

- ShallowCodeResearch_agent_question_enhancer

- ShallowCodeResearch_agent_research_request

- ShallowCodeResearch_agent_web_search

- ShallowCodeResearch_code_runner_wrapper

- ShallowCodeResearch_get_cache_status