Using LLMs to reduce sign-up & onboarding friction

Written by Frank Fiegel on .

Something that I think a lot about is what's going to be the role of LLMs in user experience (UX). My thesis is that as LLMs become more capable and faster, we will see them being used for use cases such as auto populating fields, determining user's preferences, etc. In this post, I'll talk about a few experiments I am running for these use cases as part of my work on Glama.

Deriving user's display name

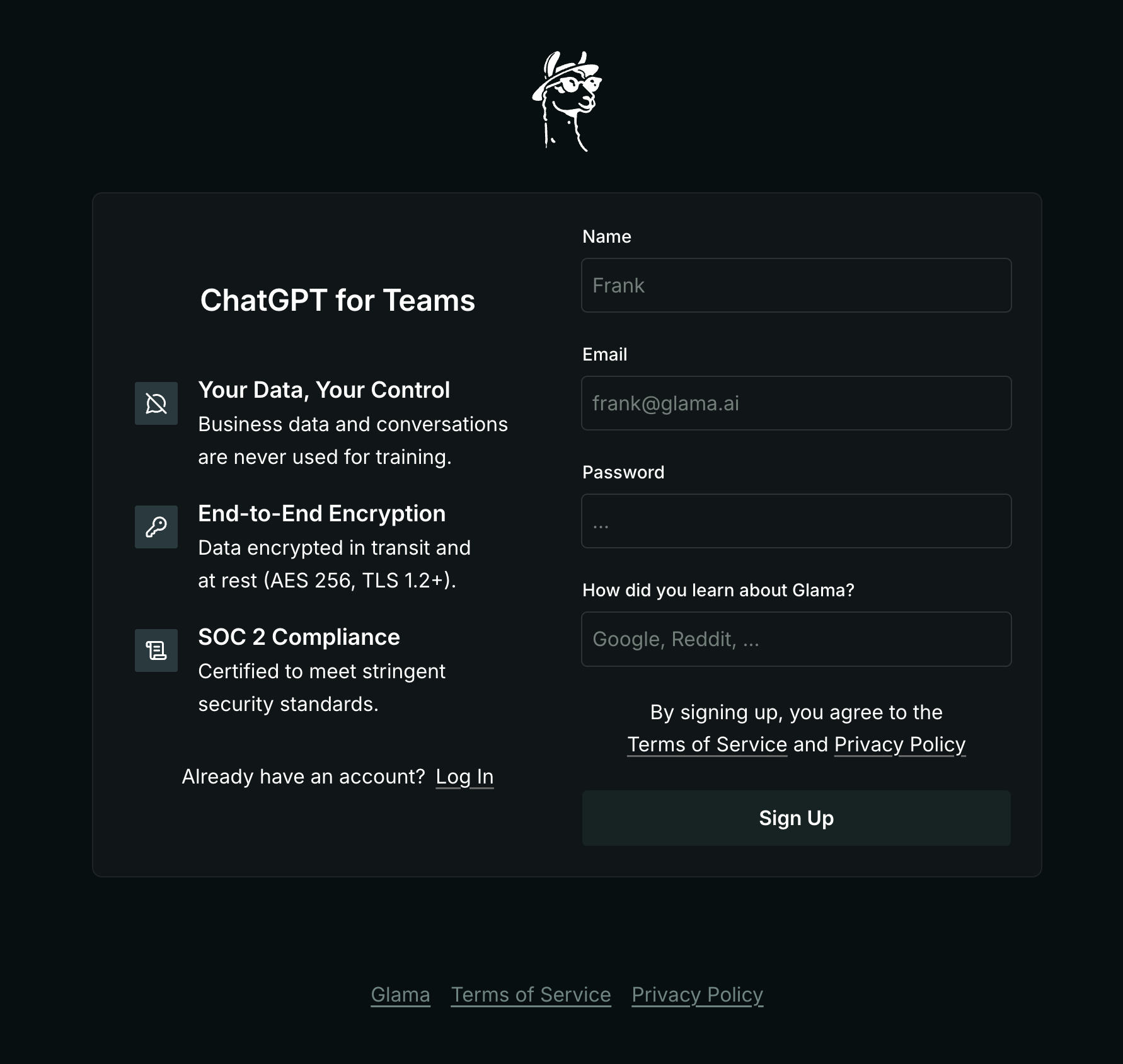

When users sign up for Glama, they are asked to provide their name, email, password, and how they discovered Glama. This is a fairly standard UX flow, with the email being used to send a confirmation message.

However, the main challenge lies in the inconsistency of user-entered names. For example, one person might enter "John Doe" while another opts for "John D". To ensure proper addressing in emails, we need to infer an appropriate display name from these varied inputs. To accomplish this, I use the following prompt:

const { name: displayName } = await quickPrompt({

model: 'openai@gpt-4o-mini',

name: 'generateDisplayName',

prompt: dedent`

Based on the user's name and email, suggest the name that I should be using to address them in emails, e.g., "John Doe" -> "John" or "john.doe@gmail.com" -> "John".

User's name: ${name}

User's email: ${emailAddress}

Reply with JSON:

{

"name": "John"

}

`,

zodSchema: z.object({

name: z.string(),

}),

});

By providing both the name and email, the model can derive a suitable display name even if the user omits their name. For example, if the user's email is "john.doe@gmail.com", the model can infer that the user's name is "John".

Using LLMs to derive a display name is a very simple use case, but without the use of LLMs, I would have to enforce stricter constraints on the name field to ensure consistency, which doesn't make for a great user experience.

Normalizing referral sources

I mentioned that we ask users to tell us how they've discovered Glama. We use this information to understand how users are finding us, and which sources convert the most to paying clients. For this information to be useful, we need to normalize the sources so that we can compare them.

Traditionally, there have been two ways to achieve this:

- Using a select input with a list of options

- Using pattern matching to normalize the sources

I am not a fan of select inputs for this use case for a few reasons:

- It assumes that we already know all the possible sources. Meaning that we might not be capturing accurate information.

- It misses the opportunity to capture additional context (e.g. "your Reddit post about XYZ")

- It forces the user to search for a specific option. Because of this, the selected value tends to be a random one, which makes the collected data less useful.

Pattern matching is a decent solution, but it still requires manual review of cases where we cannot match the source against a known list of sources.

Meanwhile, LLMs are perfect for this use case.

const { source: normalizedSource } = await quickPrompt({

model: 'openai@gpt-4o-mini',

name: 'normalizeSource',

prompt: dedent`

User has provided us with information about how they've discovered our website, e.g., "I found you on Reddit".

Based on the user's input, and the provided list of known sources, normalize user's input to a value that is either a known source or a new source.

Known sources: ${knownSources.join(', ')}

User's input: ${source}

Reply with JSON:

{

"source": "Google"

}

`,

zodSchema: z.object({

source: z.string(),

}),

});

This prompt either returns a known source or a new source. For example, if the user's input is "I found you on Reddit", the model will return "Reddit". Every time a new source is encountered, I add it to the list of known sources.

In the database, I capture the original input and the normalized input. The normalized input is what I used for data analyses. Meanwhile, I occasionally still review the original input to search for additional clues.

Other use cases for LLMs in user onboarding

A few other use cases I am exploring include:

- Qualifying leads based on their input (e.g. if their name input was gibberish, e.g., 'just testing', then we will assign a low intent score)

- Enriching leads using their input combined with company data (e.g. we use a third-party to get the company's employee data, and we use LLMs to match the user's input with the employee data)

These are not necessarily for the purpose of improving the UX, but it avoids me troubling users with additional questions.

Conclusion

The key takeaway is that as LLMs become snappier and more capable, we will see them being used to reduce friction and optimize the user experience. I am excited to see how these use cases will evolve over time. If you have encountered other fun use cases, I would love to hear about them (frank@glama.ai).